Barry Lennox

Confined Space Underwater Positioning Using Collaborative Robots

Oct 31, 2025

Abstract:Positioning of underwater robots in confined and cluttered spaces remains a key challenge for field operations. Existing systems are mostly designed for large, open-water environments and struggle in industrial settings due to poor coverage, reliance on external infrastructure, and the need for feature-rich surroundings. Multipath effects from continuous sound reflections further degrade signal quality, reducing accuracy and reliability. Accurate and easily deployable positioning is essential for repeatable autonomous missions; however, this requirement has created a technological bottleneck limiting underwater robotic deployment. This paper presents the Collaborative Aquatic Positioning (CAP) system, which integrates collaborative robotics and sensor fusion to overcome these limitations. Inspired by the "mother-ship" concept, the surface vehicle acts as a mobile leader to assist in positioning a submerged robot, enabling localization even in GPS-denied and highly constrained environments. The system is validated in a large test tank through repeatable autonomous missions using CAP's position estimates for real-time trajectory control. Experimental results demonstrate a mean Euclidean distance (MED) error of 70 mm, achieved in real time without requiring fixed infrastructure, extensive calibration, or environmental features. CAP leverages advances in mobile robot sensing and leader-follower control to deliver a step change in accurate, practical, and infrastructure-free underwater localization.
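The mean Euclidean distance (MED) error quoted in the abstract can be illustrated with a minimal sketch. It assumes the metric is the average straight-line distance between paired position estimates and reference positions; the function name and the sample coordinates are hypothetical and are not taken from the paper.

```python
import math


def mean_euclidean_distance(estimates, ground_truth):
    """Average straight-line distance between paired estimated and reference positions."""
    if len(estimates) != len(ground_truth) or not estimates:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(math.dist(e, g) for e, g in zip(estimates, ground_truth))
    return total / len(estimates)


# Hypothetical 3D positions in metres (illustrative only, not experimental data).
est = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
ref = [(0.03, 0.04, 1.0), (1.0, 0.0, 1.1)]
med_m = mean_euclidean_distance(est, ref)
print(f"MED error: {med_m * 1000:.0f} mm")
```

Under this definition, every sample contributes equally, so a few large outliers raise the MED more slowly than they would raise a root-mean-square error.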

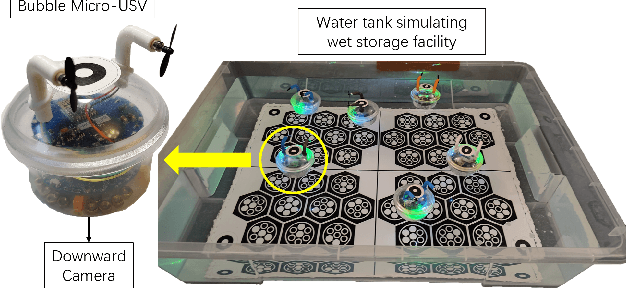

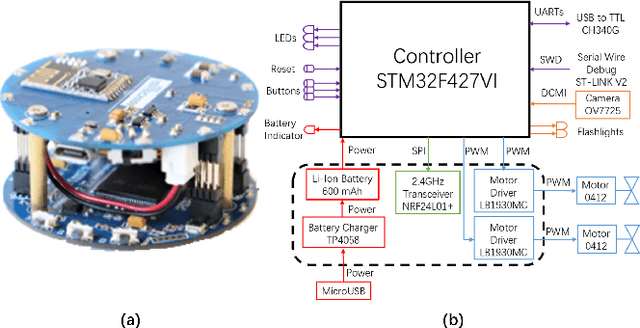

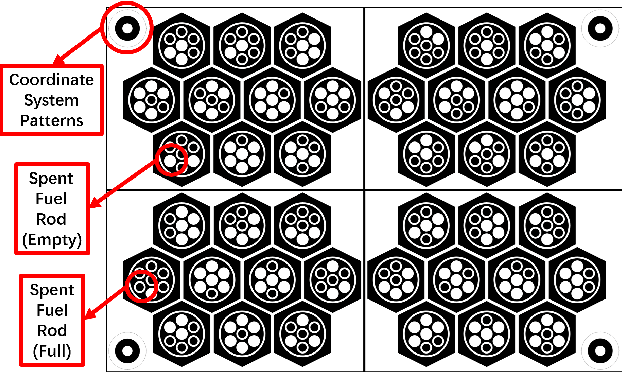

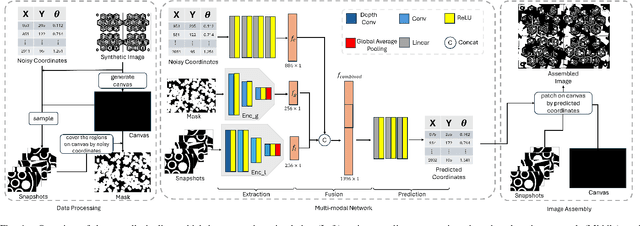

Deep Learning-Enhanced Visual Monitoring in Hazardous Underwater Environments with a Swarm of Micro-Robots

Mar 04, 2025

Abstract:Long-term monitoring and exploration of extreme environments, such as underwater storage facilities, is costly, labor-intensive, and hazardous. Automating this process with low-cost, collaborative robots can greatly improve efficiency. These robots capture images from different positions, which must be processed simultaneously to create a spatio-temporal model of the facility. In this paper, we propose a novel approach that integrates data simulation, a multi-modal deep learning network for coordinate prediction, and image reassembly to address the challenges posed by environmental disturbances causing drift and rotation in the robots' positions and orientations. Our approach enhances the precision of alignment in noisy environments by integrating visual information from snapshots, global positional context from masks, and noisy coordinates. We validate our method through extensive experiments using synthetic data that simulate real-world robotic operations in underwater settings. The results demonstrate very high coordinate prediction accuracy and plausible image assembly, indicating the real-world applicability of our approach. The assembled images provide clear and coherent views of the underwater environment for effective monitoring and inspection, showcasing the potential for broader use in extreme settings, further contributing to improved safety, efficiency, and cost reduction in hazardous field monitoring. Code is available on https://github.com/ChrisChen1023/Micro-Robot-Swarm.

Collaborative Aquatic Positioning System Utilising Multi-beam Sonar and Depth Sensors

Mar 18, 2024

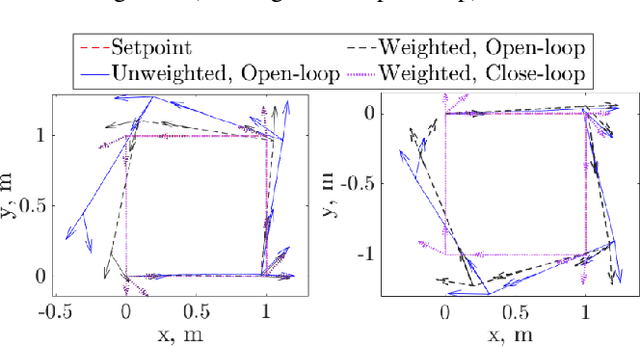

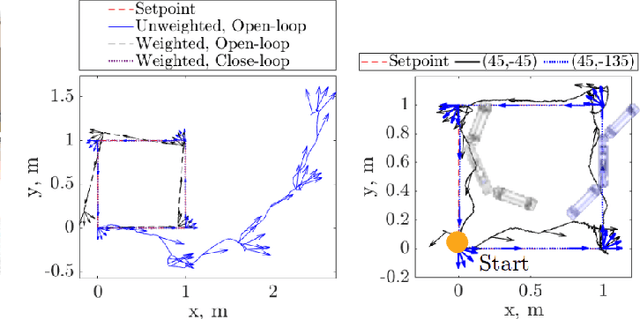

Abstract:Accurate positioning of remotely operated underwater vehicles (ROVs) in confined environments is crucial for inspection and mapping tasks and is also a prerequisite for autonomous operations. Presently, there are no positioning systems available that are suited for real-world use in confined underwater environments, are unconstrained by environmental lighting and water turbidity levels, and have sufficient accuracy for long-term, reliable and repeatable navigation. This shortage presents a significant barrier to enhancing the capabilities of ROVs in such scenarios. This paper introduces an innovative positioning system for ROVs operating in confined, cluttered underwater settings, achieved through the collaboration of an omnidirectional surface vehicle and an ROV. A formulation is proposed and evaluated in simulation against ground truth. The experimental results from the simulation form a proof of principle of the proposed system and also demonstrate its deployability. Unlike many previous approaches, the system does not rely on fixed infrastructure or tracking of features in the environment and can cover large enclosed areas without additional equipment.

Virtual Elastic Tether: a New Approach for Multi-agent Navigation in Confined Aquatic Environments

Mar 15, 2024

Abstract:Underwater navigation is a challenging area in the field of mobile robotics due to inherent constraints in self-localisation and communication in underwater environments. Some of these challenges can be mitigated by using collaborative multi-agent teams. However, when applied underwater, the robustness of traditional multi-agent collaborative control approaches is highly limited due to the unavailability of reliable measurements. In this paper, the concept of a Virtual Elastic Tether (VET) is introduced in the context of incomplete state measurements, which represents an innovative approach to underwater navigation in confined spaces. The concept of VET is formulated and validated using the Cooperative Aquatic Vehicle Exploration System (CAVES), which is a sim-to-real multi-agent aquatic robotic platform. Within this framework, a vision-based Autonomous Underwater Vehicle-Autonomous Surface Vehicle leader-follower formulation is developed. Experiments were conducted both in simulation and on a physical platform, benchmarked against a traditional Image-Based Visual Servoing approach. Results indicate that the formation of the baseline approach fails under discrete disturbances when induced distances between the robots exceed 0.6 m in simulation and 0.3 m in the real world. In contrast, the VET-enhanced system recovers to pre-perturbation distances within 5 seconds. Furthermore, results illustrate the successful navigation of VET-enhanced CAVES in a confined water pond where the baseline approach fails to perform adequately.

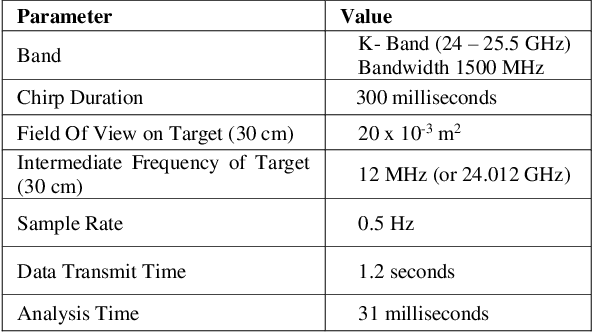

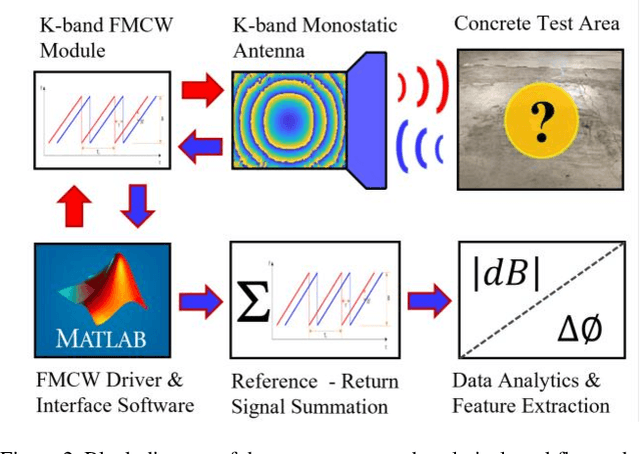

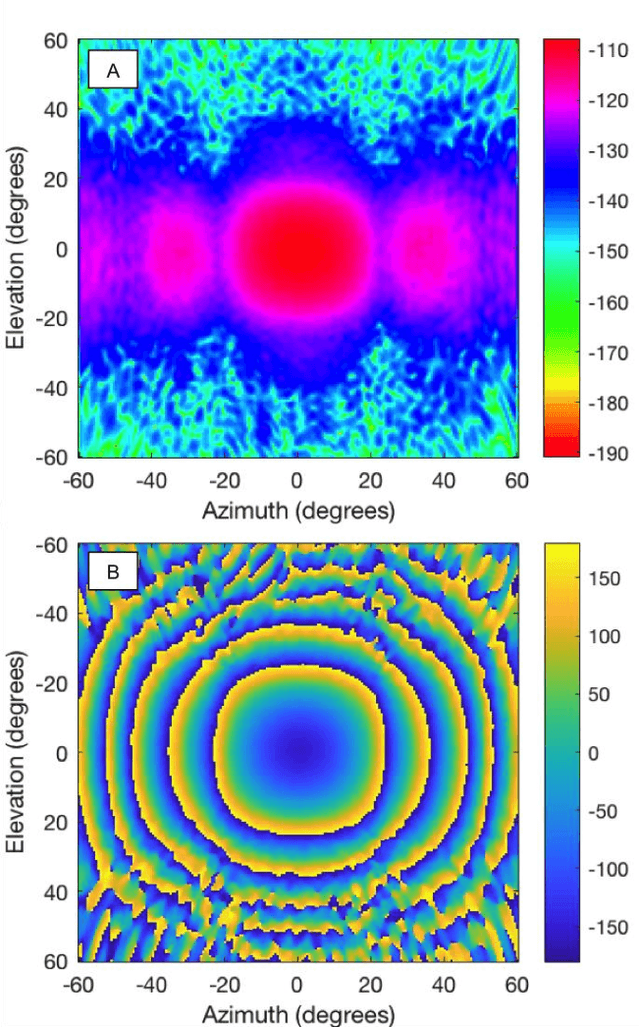

Millimeter-Wave Sensing for Avoidance of High-Risk Ground Conditions for Mobile Robots

Mar 30, 2022

Abstract:Mobile robot autonomy has made significant advances in recent years, with navigation algorithms well developed and used commercially in certain well-defined environments, such as warehouses. The common link in usage scenarios is that the environments in which the robots are utilized have a high degree of certainty. Operating environments are often designed to be robot friendly, for example augmented reality markers are strategically placed and the ground is typically smooth, level, and clear of debris. For robots to be useful in a wider range of environments, especially environments that are not sanitized for their use, robots must be able to handle uncertainty. This requires a robot to incorporate new sensors and sources of information, and to be able to use this information to make decisions regarding navigation and the overall mission. When using autonomous mobile robots in unstructured and poorly defined environments, such as a natural disaster site or in a rural environment, ground condition is of critical importance and is a common cause of failure. Examples include loss of traction due to high levels of ground water, hidden cavities, or material boundary failures. To evaluate a non-contact sensing method to mitigate these risks, Frequency Modulated Continuous Wave (FMCW) radar is integrated with an Unmanned Ground Vehicle (UGV), representing a novel application of FMCW to detect new measurands for Robotic Autonomous Systems (RAS) navigation, informing on terrain integrity and adding to the state-of-the-art in sensing for optimized autonomous path planning. In this paper, the FMCW is first evaluated in a desktop setting to determine its performance in anticipated ground conditions. The FMCW is then fixed to a UGV and the sensor system is tested and validated in a representative environment containing regions with significant levels of ground water saturation.

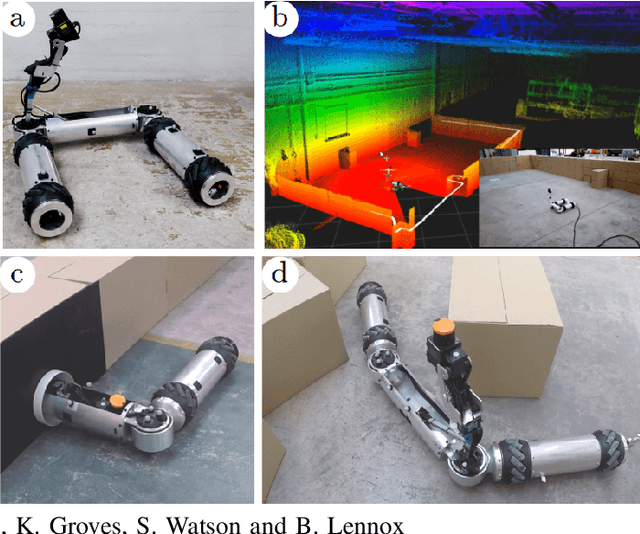

MIRRAX: A Reconfigurable Robot for Limited Access Environments

Mar 01, 2022

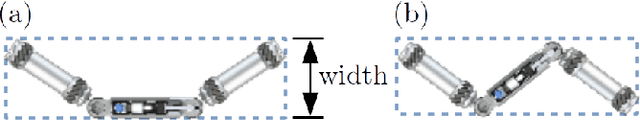

Abstract:The development of mobile robot platforms for inspection has gained traction in recent years with the rapid advancement in hardware and software. However, conventional mobile robots are unable to address the challenge of operating in extreme environments where the robot is required to traverse narrow gaps in highly cluttered areas with restricted access. This paper presents MIRRAX, a robot that has been designed to meet these challenges with the capability of re-configuring itself to both access restricted environments through narrow ports and navigate through tightly spaced obstacles. Controllers for the robot are detailed, along with an analysis of the controllability of the robot given the use of Mecanum wheels in a variable configuration. Characterisation of the robot's performance identified suitable configurations for operating in narrow environments. The minimum lateral footprint width achievable for a stable configuration ($<2^\circ$ roll) was 0.19 m. Experimental validation of the robot's controllability shows good agreement with the theoretical analysis. A further series of experiments shows the feasibility of the robot in addressing the challenges above: the capability to reconfigure itself for restricted entry through ports as small as 150 mm in diameter, and to navigate through cluttered environments. The paper also presents results from a deployment in a Magnox facility at the Sellafield nuclear site in the UK for remote inspection and mapping, the first robot ever to do so.

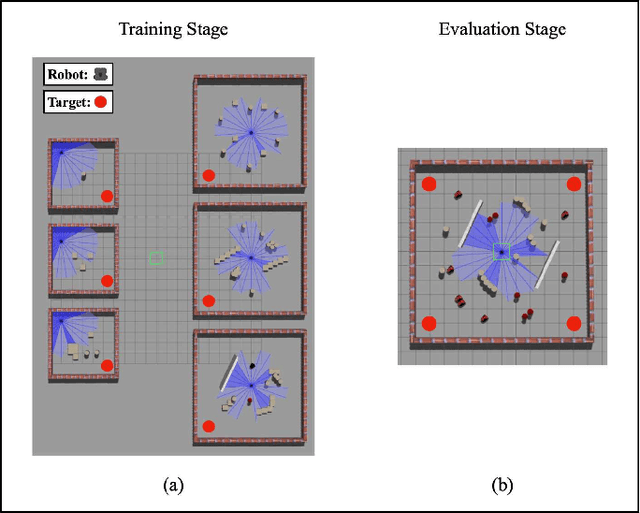

Federated Reinforcement Learning for Collective Navigation of Robotic Swarms

Feb 02, 2022

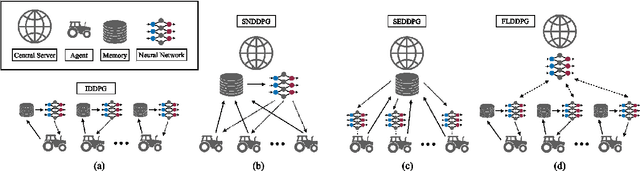

Abstract:The recent advancement of Deep Reinforcement Learning (DRL) has contributed to robotics by allowing automatic controller design. Automatic controller design is a crucial approach for designing swarm robotic systems, which require more complex controllers than a single-robot system to produce a desired collective behaviour. Although the DRL-based controller design method has shown its effectiveness, the reliance on a central training server is a critical problem in real-world environments where robot-server communication is unstable or limited. We propose a novel Federated Learning (FL) based DRL training strategy for use in swarm robotic applications. As FL reduces the number of robot-server communications by sharing only neural network model weights, not local data samples, the proposed strategy reduces the reliance on the central server during controller training with DRL. The experimental results from the collective learning scenario showed that the proposed FL-based strategy reduced the number of communications by a factor of at least 1600 and increased the success rate of navigation with the trained controller by 2.8 times compared to the baseline strategies that share a central server. The results suggest that our proposed strategy can efficiently train swarm robotic systems in real-world environments with limited robot-server communication, e.g. agri-robotics, underwater and damaged nuclear facilities.
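The idea of sharing only model weights, rather than local data samples, can be sketched as follows. This is an illustrative assumption, not the paper's method: the abstract does not state the aggregation rule, so plain unweighted averaging is used here, and `federated_average` and the sample weight vectors are hypothetical.

```python
def federated_average(local_weights):
    """Element-wise mean of the flat weight vectors reported by each robot."""
    if not local_weights:
        raise ValueError("at least one weight vector is required")
    size = len(local_weights[0])
    if any(len(w) != size for w in local_weights):
        raise ValueError("all weight vectors must have the same length")
    count = len(local_weights)
    return [sum(w[i] for w in local_weights) / count for i in range(size)]


# Hypothetical flattened model weights from three robots (illustrative values).
robot_weights = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
global_weights = federated_average(robot_weights)
print(global_weights)
```

Each robot would train locally between aggregation rounds and upload only its weight vector, which is why the number of robot-server exchanges can be far lower than when every experience sample is sent to a central trainer.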

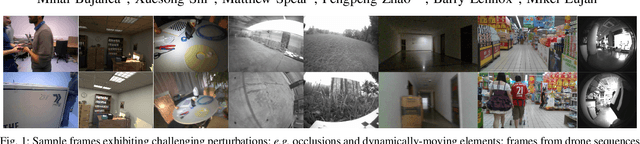

Robust SLAM Systems: Are We There Yet?

Sep 27, 2021

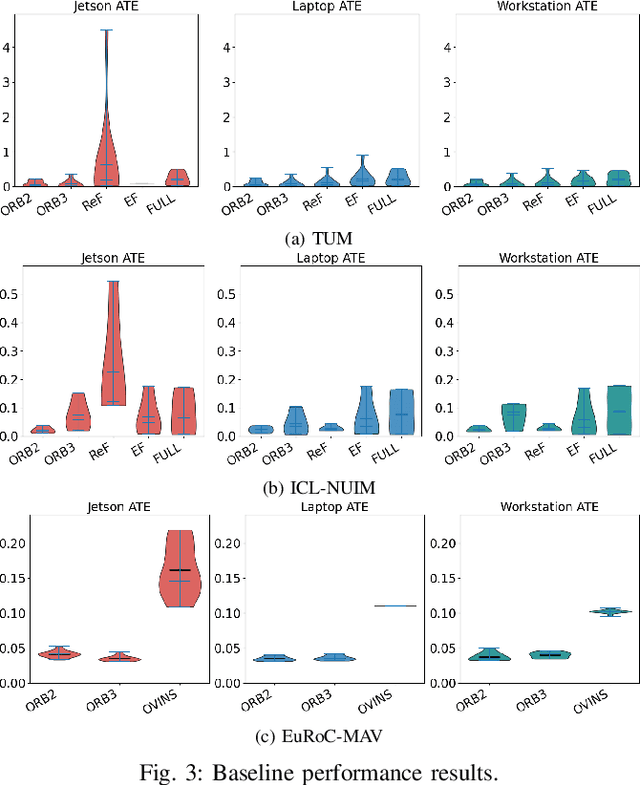

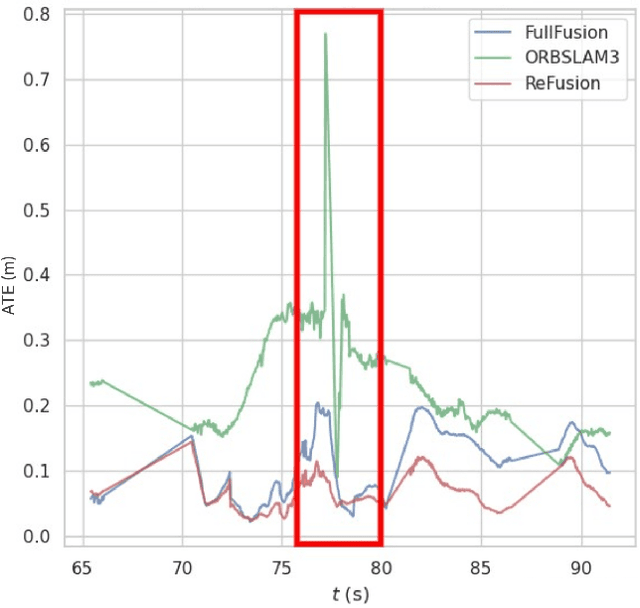

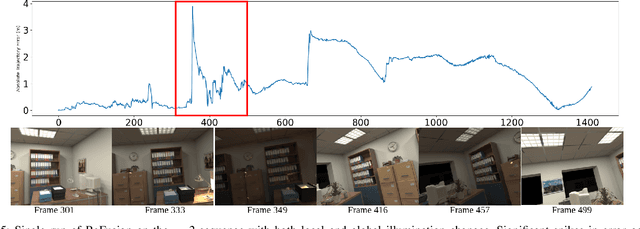

Abstract:Progress in the last decade has brought about significant improvements in the accuracy and speed of SLAM systems, broadening their mapping capabilities. Despite these advancements, long-term operation remains a major challenge, primarily due to the wide spectrum of perturbations robotic systems may encounter. Increasing the robustness of SLAM algorithms is an ongoing effort; however, it usually addresses a specific perturbation. Generalisation of robustness across a large variety of challenging scenarios is neither well studied nor well understood. This paper presents a systematic evaluation of the robustness of open-source state-of-the-art SLAM algorithms with respect to challenging conditions such as fast motion, non-uniform illumination, and dynamic scenes. The experiments are performed with perturbations present both independently of each other and in combination, in long-term deployment settings in unconstrained environments (lifelong operation).

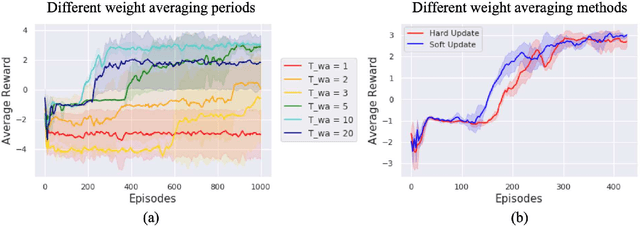

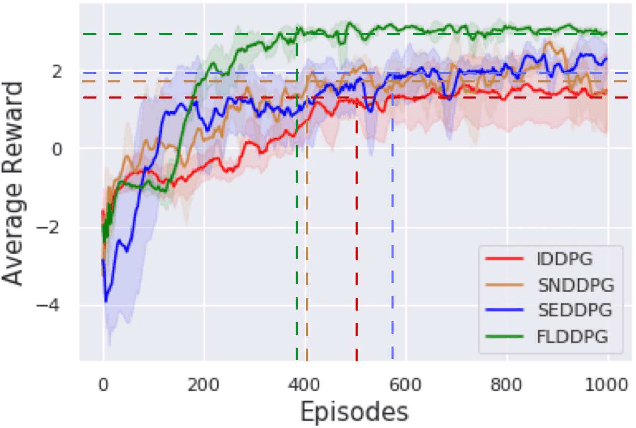

Accelerated Sim-to-Real Deep Reinforcement Learning: Learning Collision Avoidance from Human Player

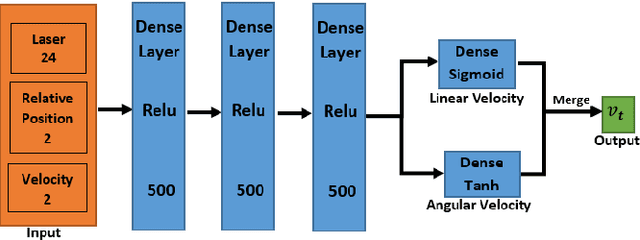

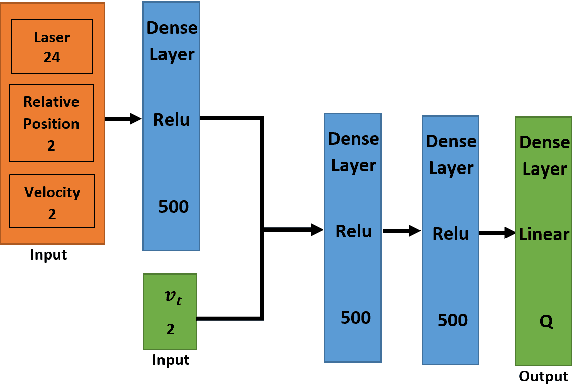

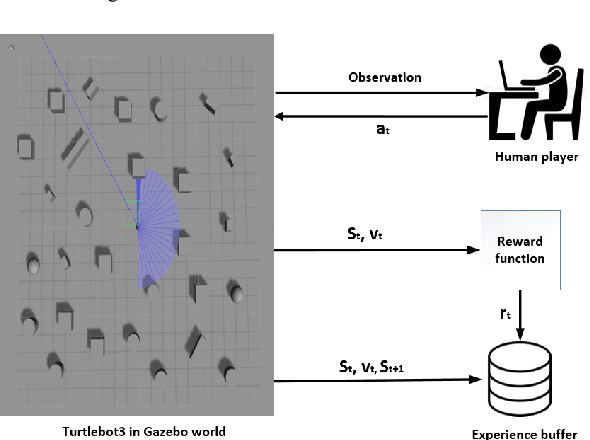

Feb 23, 2021

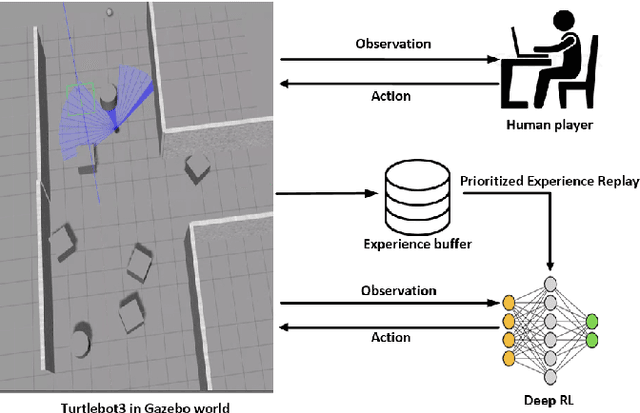

Abstract:This paper presents a sensor-level mapless collision avoidance algorithm for mobile robots that maps raw sensor data to linear and angular velocities, allowing navigation in an unknown environment without a map. An efficient training strategy is proposed to allow a robot to learn from both human experience data and self-exploratory data. A game-format simulation framework is designed to allow the human player to tele-operate the mobile robot to a goal, and human action is also scored using the reward function. Both human player data and self-playing data are sampled using a prioritized experience replay algorithm. The proposed algorithm and training strategy have been evaluated in two different experimental configurations: Environment 1, a simulated cluttered environment, and Environment 2, a simulated corridor environment, to investigate the performance. It was demonstrated that the proposed method achieved the same level of reward using only 16% of the training steps required by the standard Deep Deterministic Policy Gradient (DDPG) method in Environment 1 and 20% of that in Environment 2. In the evaluation of 20 random missions, the proposed method achieved no collision in less than 2 h and 2.5 h of training time in the two Gazebo environments respectively. The method also generated smoother trajectories than DDPG. The proposed method has also been implemented on a real robot in a real-world environment for performance evaluation. We can confirm that the model trained in simulation can be applied directly to the real-world scenario without further fine-tuning, further demonstrating its greater robustness compared with DDPG. The video and code are available: https://youtu.be/BmwxevgsdGc https://github.com/hanlinniu/turtlebot3_ddpg_collision_avoidance
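Sampling human and self-play transitions by priority can be sketched as below. This is a minimal illustration that assumes the common proportional form of prioritized experience replay, where a transition is drawn with probability proportional to its priority raised to an exponent `alpha`. The abstract does not say which variant the authors use, and the buffer contents, priorities, and function names are hypothetical.

```python
import random


def sampling_probabilities(priorities, alpha=0.6):
    """Probability of drawing each transition: priority**alpha, normalised to sum to 1."""
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


def sample_batch(buffer, priorities, batch_size, alpha=0.6, rng=random):
    """Draw a batch (with replacement) from a shared buffer according to priority."""
    probs = sampling_probabilities(priorities, alpha)
    return rng.choices(buffer, weights=probs, k=batch_size)


# Hypothetical shared buffer mixing human-player and self-play transitions.
buffer = ["human_0", "human_1", "self_0", "self_1"]
priorities = [2.0, 1.0, 0.5, 0.5]
batch = sample_batch(buffer, priorities, batch_size=8, rng=random.Random(0))
print(batch)
```

With `alpha=0` the sampling is uniform, and with `alpha=1` it is directly proportional to priority, so the exponent controls how strongly high-priority transitions dominate training batches.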

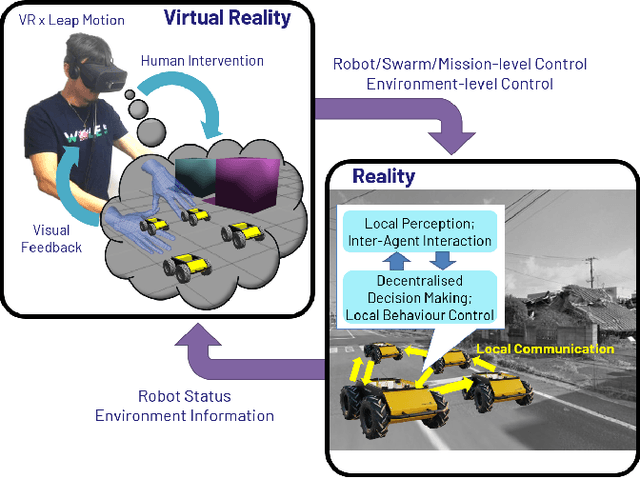

Omnipotent Virtual Giant for Remote Human-Swarm Interaction

Apr 01, 2019

Abstract:This paper proposes an intuitive human-swarm interaction framework inspired by our childhood memory in which we interacted with living ants by changing their positions and environments as if we were omnipotent relative to the ants. In virtual reality, analogously, we can be a super-powered virtual giant who can supervise a swarm of mobile robots in a vast and remote environment by flying over or resizing the world and coordinate them by picking and placing a robot or creating virtual walls. This work implements this idea by using Virtual Reality along with Leap Motion, which is then validated by proof-of-concept experiments using real and virtual mobile robots in mixed reality. We conduct a usability analysis to quantify the effectiveness of the overall system as well as the individual interfaces proposed in this work. The results revealed that the proposed method is intuitive and feasible for interaction with swarm robots, but may require appropriate training for the new end-user interface device.
