Farshad Arvin

Deep Learning-Enhanced Visual Monitoring in Hazardous Underwater Environments with a Swarm of Micro-Robots

Mar 04, 2025

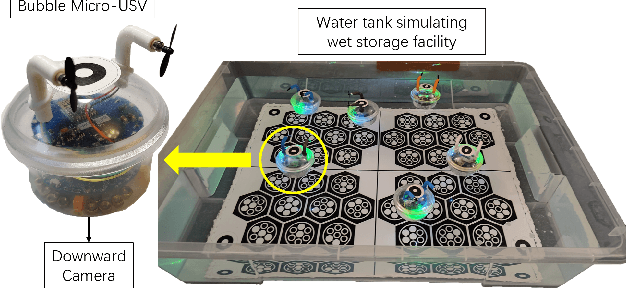

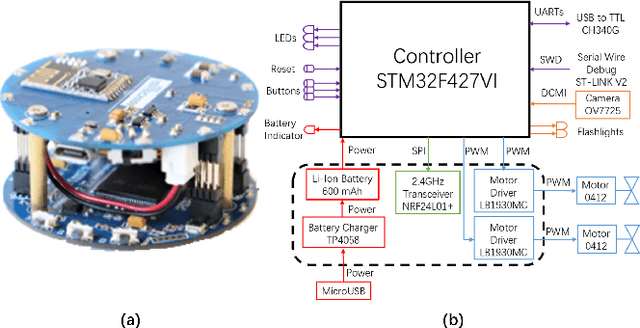

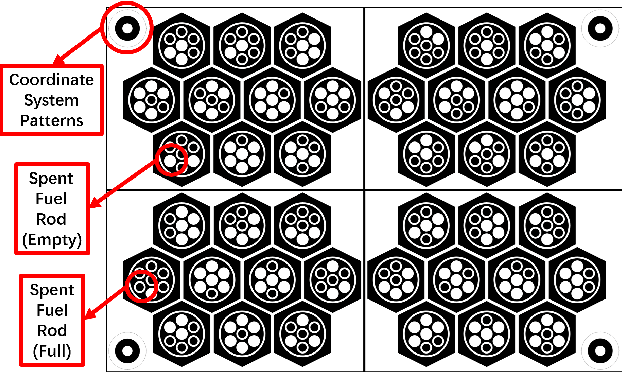

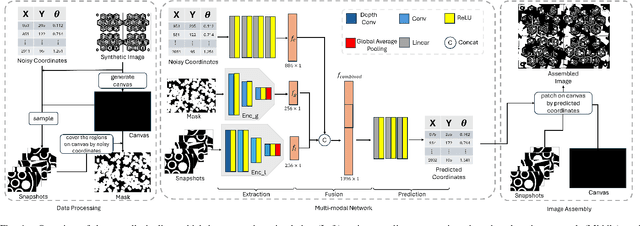

Abstract: Long-term monitoring and exploration of extreme environments, such as underwater storage facilities, are costly, labor-intensive, and hazardous. Automating this process with low-cost, collaborative robots can greatly improve efficiency. These robots capture images from different positions, which must be processed simultaneously to create a spatio-temporal model of the facility. In this paper, we propose a novel approach that integrates data simulation, a multi-modal deep learning network for coordinate prediction, and image reassembly to address the drift and rotation that environmental disturbances cause in the robots' positions and orientations. Our approach enhances the precision of alignment in noisy environments by integrating visual information from snapshots, global positional context from masks, and noisy coordinates. We validate our method through extensive experiments using synthetic data that simulate real-world robotic operations in underwater settings. The results demonstrate very high coordinate prediction accuracy and plausible image assembly, indicating the real-world applicability of our approach. The assembled images provide clear and coherent views of the underwater environment for effective monitoring and inspection. This showcases the potential for broader use in extreme settings and contributes to improved safety, efficiency, and cost reduction in hazardous field monitoring. Code is available at https://github.com/ChrisChen1023/Micro-Robot-Swarm.

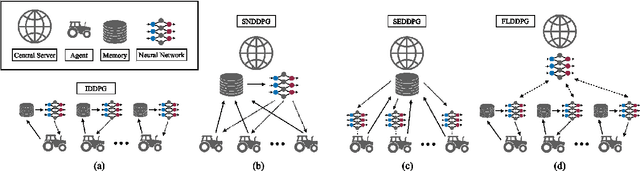

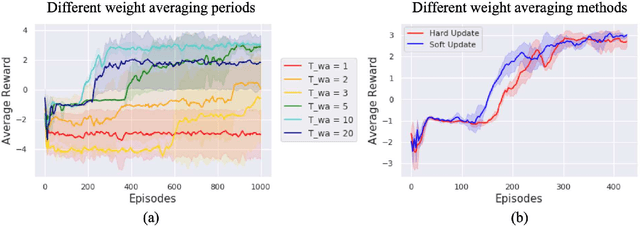

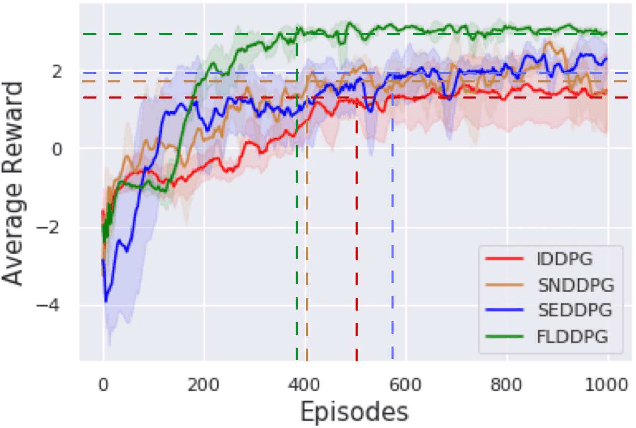

Federated Reinforcement Learning for Collective Navigation of Robotic Swarms

Feb 02, 2022

Abstract: Recent advances in Deep Reinforcement Learning (DRL) have contributed to robotics by enabling automatic controller design. Automatic controller design is a crucial approach for designing swarm robotic systems, which require a more complex controller than a single-robot system to achieve a desired collective behaviour. Although the DRL-based controller design method has shown its effectiveness, its reliance on a central training server is a critical problem in real-world environments where robot-server communication is unstable or limited. We propose a novel Federated Learning (FL) based DRL training strategy for use in swarm robotic applications. Because FL reduces the number of robot-server communications by sharing only neural network model weights, not local data samples, the proposed strategy reduces the reliance on the central server during controller training with DRL. The experimental results from the collective learning scenario showed that the proposed FL-based strategy reduced the number of communications by a factor of at least 1600 and increased the success rate of navigation with the trained controller by a factor of 2.8 compared to the baseline strategies that share a central server. The results suggest that our proposed strategy can efficiently train swarm robotic systems in real-world environments with limited robot-server communication, e.g. agri-robotics, underwater and damaged nuclear facilities.
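The weight-sharing idea in this abstract can be illustrated with a minimal sketch. The abstract does not state which aggregation rule the authors use, so the plain element-wise average below (in the style of federated averaging) is an assumption, and the names `federated_average`, `robots`, `layer1`, and `bias` are hypothetical. Only model weights cross the robot-server link; local experience stays on each robot.

```python
from typing import Dict, List

# Hypothetical representation: one flat list of floats per named layer.
Weights = Dict[str, List[float]]


def federated_average(local_weights: List[Weights]) -> Weights:
    """Return the element-wise mean of the weights reported by each robot.

    Assumption: every robot reports the same layer names and shapes.
    """
    if not local_weights:
        raise ValueError("need at least one set of local weights")
    n = len(local_weights)
    averaged: Weights = {}
    for name in local_weights[0]:
        # Group the i-th value of this layer across all robots.
        columns = zip(*(w[name] for w in local_weights))
        averaged[name] = [sum(col) / n for col in columns]
    return averaged


# Two robots that trained locally and now share only their weights.
robots = [
    {"layer1": [1.0, 2.0], "bias": [0.0]},
    {"layer1": [3.0, 4.0], "bias": [1.0]},
]
global_weights = federated_average(robots)
print(global_weights)
```

In a scheme like this, each robot would run many local training steps between aggregation rounds, which is where the reduction in communication comes from.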

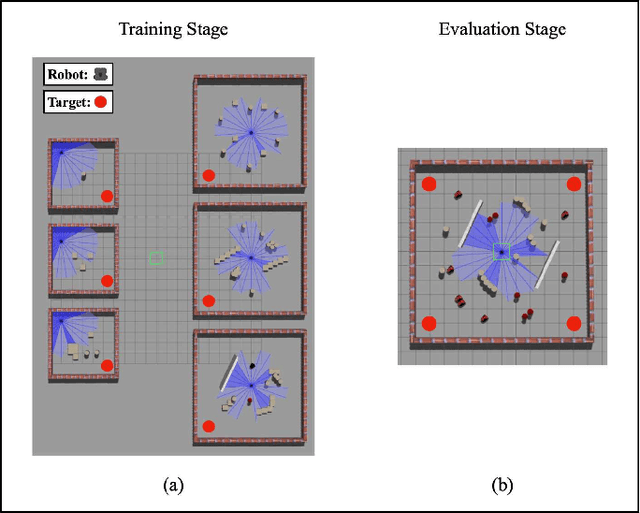

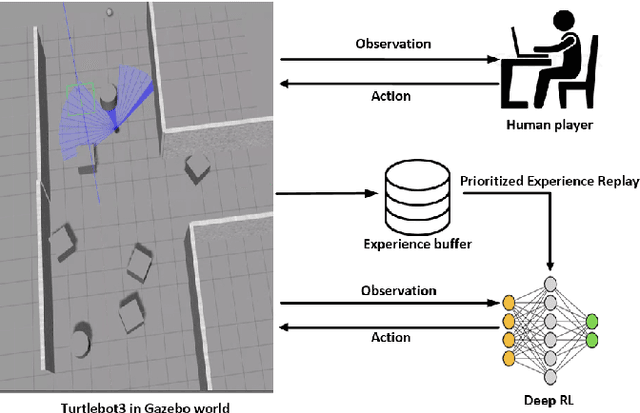

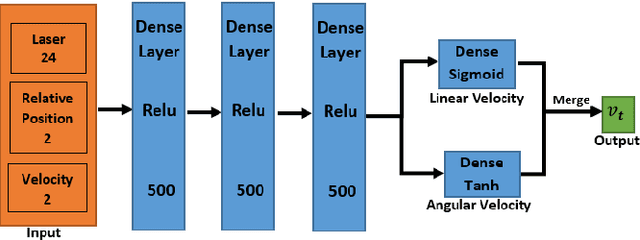

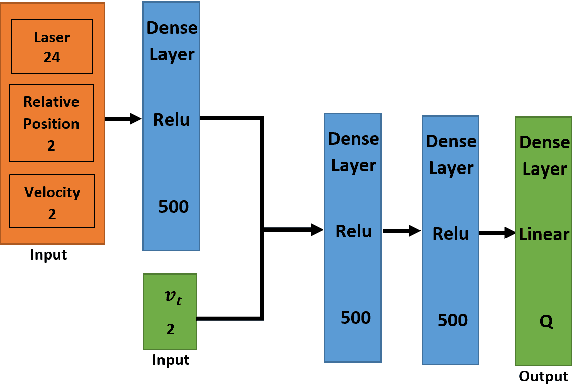

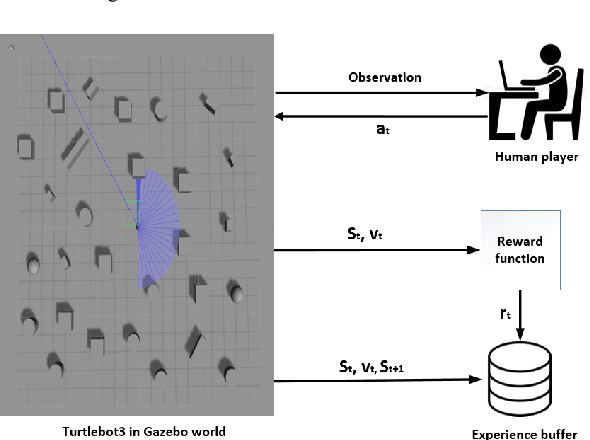

Accelerated Sim-to-Real Deep Reinforcement Learning: Learning Collision Avoidance from Human Player

Feb 23, 2021

Abstract: This paper presents a sensor-level mapless collision avoidance algorithm for mobile robots that maps raw sensor data to linear and angular velocities and navigates an unknown environment without a map. An efficient training strategy is proposed to allow a robot to learn from both human experience data and self-exploratory data. A game-format simulation framework is designed to allow a human player to tele-operate the mobile robot to a goal, and the human's actions are also scored using the reward function. Both human player data and self-playing data are sampled using the prioritized experience replay algorithm. The proposed algorithm and training strategy have been evaluated in two different experimental configurations: Environment 1, a simulated cluttered environment, and Environment 2, a simulated corridor environment. The proposed method achieved the same level of reward using only 16% of the training steps required by the standard Deep Deterministic Policy Gradient (DDPG) method in Environment 1 and 20% of those required in Environment 2. In the evaluation of 20 random missions, the proposed method achieved zero collisions after less than 2 h and 2.5 h of training in the two Gazebo environments, respectively. The method also generated smoother trajectories than DDPG. The proposed method has also been implemented on a real robot in a real-world environment for performance evaluation. The model trained in simulation can be applied directly to the real-world scenario without further fine-tuning, further demonstrating higher robustness than DDPG. The video and code are available at https://youtu.be/BmwxevgsdGc and https://github.com/hanlinniu/turtlebot3_ddpg_collision_avoidance
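The sampling step mentioned in this abstract can be sketched as follows. The abstract names prioritized experience replay but not the variant or its hyperparameters, so the proportional form (sampling probability proportional to priority raised to a power `alpha`) and the value `alpha = 0.6` are assumptions. The function names, the buffer layout, and the `"human"` / `"self"` tags are hypothetical and are not taken from the linked repository.

```python
import random
from typing import List, Optional, Tuple

# Hypothetical transition record: (source tag, transition id).
Transition = Tuple[str, int]


def sampling_probabilities(priorities: List[float], alpha: float = 0.6) -> List[float]:
    """Proportional prioritization: P(i) = p_i**alpha / sum_k p_k**alpha."""
    if not priorities or any(p <= 0 for p in priorities):
        raise ValueError("priorities must be a non-empty list of positive values")
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


def sample_batch(
    buffer: List[Transition],
    priorities: List[float],
    batch_size: int,
    alpha: float = 0.6,
    rng: Optional[random.Random] = None,
) -> List[Transition]:
    """Draw a batch with replacement, favouring high-priority transitions."""
    rng = rng or random.Random()
    probs = sampling_probabilities(priorities, alpha)
    indices = rng.choices(range(len(buffer)), weights=probs, k=batch_size)
    return [buffer[i] for i in indices]


# Human-player and self-exploration transitions share one buffer.
buffer = [("human", 0), ("human", 1), ("self", 2), ("self", 3)]
priorities = [2.0, 1.0, 0.5, 0.5]  # e.g. magnitude of the TD error
batch = sample_batch(buffer, priorities, batch_size=3, rng=random.Random(0))
print(batch)
```

Setting `alpha` to 0 recovers uniform sampling, while larger values concentrate the batch on the highest-priority transitions regardless of whether they came from the human player or from self-exploration.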

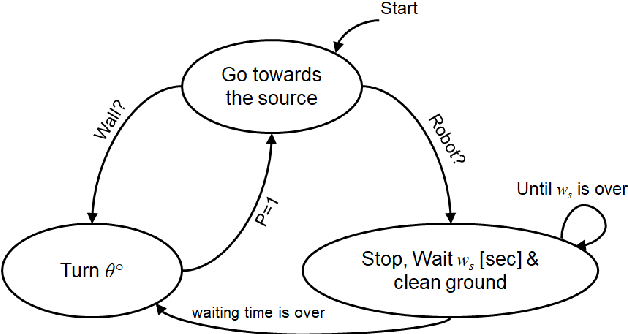

Cooperative Pollution Source Localization and Cleanup with a Bio-inspired Swarm Robot Aggregation

Jul 22, 2019

Abstract: Using robots for exploration of extreme and hazardous environments has the potential to significantly improve human safety. For example, robotic solutions can be deployed to find the source of a chemical leakage and clean the contaminated area. This paper demonstrates a proof-of-concept bio-inspired exploration method using a swarm robotic system, which is based on a combination of two bio-inspired behaviours: aggregation and pheromone tracking. The main idea of the work is to follow pheromone trails to find the source of a chemical leakage and then carry out a decontamination task by aggregating at the critical zone. Using experiments conducted with a simulated model of a Mona robot, we evaluate the effects of population size and robot speed on the swarm's performance in a decontamination task. The results indicate the feasibility of deploying robotic swarms in an exploration and cleaning task in an extreme environment.

Self-organized Collective Motion with a Simulated Real Robot Swarm

Apr 05, 2019

Abstract: Collective motion is one of the most fascinating phenomena observed in nature. In the last decade, it has attracted considerable attention in the physics, control, and robotics fields. In particular, many studies in swarm robotics have addressed collective motion, also called flocking. In most of these studies, robots use the orientation and proximity of their neighbors to achieve collective motion. In such an approach, one of the biggest problems is measuring orientation information using on-board sensors. In most of the studies, this information is either simulated or obtained through communication. In this paper, to the best of our knowledge, we implemented a fully autonomous coordinated motion without alignment using very simple Mona robots. We used an approach based on the Active Elastic Sheet (AES) method. We modified the method and added the capability for the swarm to move toward a desired direction and rotate about an arbitrary point. The parameters of the modified method are optimized using the TCACS optimization algorithm. We tested our approach in different settings using Matlab and Webots.

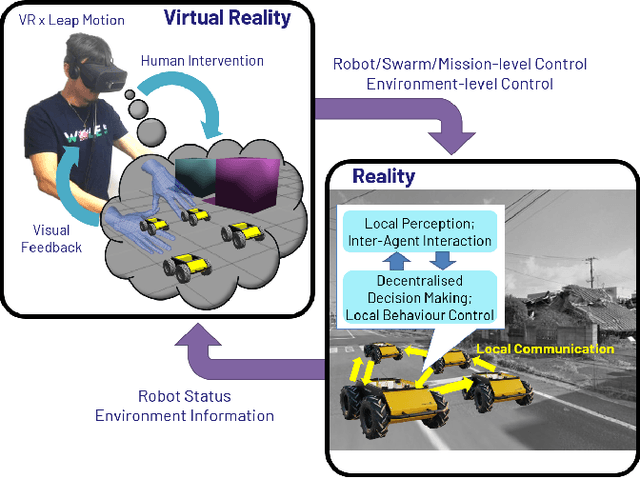

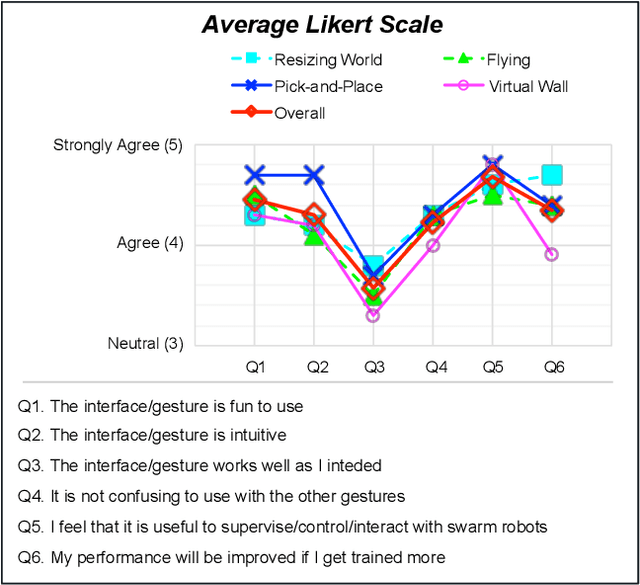

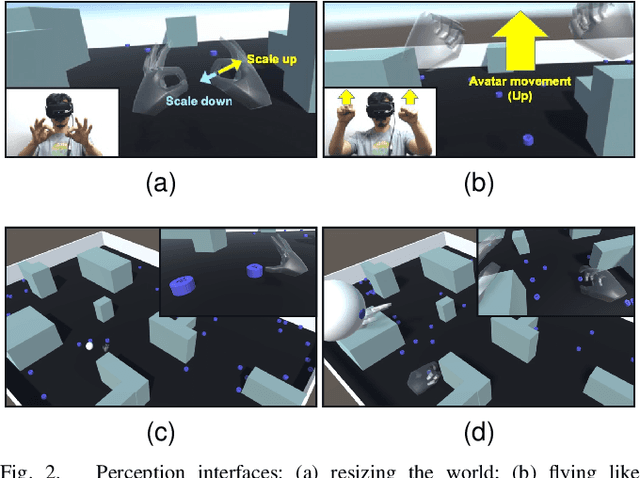

Omnipotent Virtual Giant for Remote Human-Swarm Interaction

Apr 01, 2019

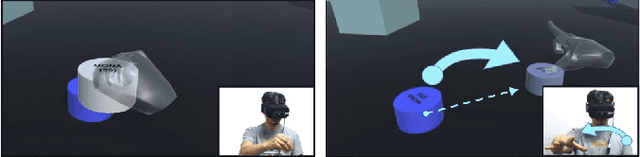

Abstract: This paper proposes an intuitive human-swarm interaction framework inspired by childhood memories of interacting with living ants by changing their positions and environments, as if we were omnipotent relative to the ants. In virtual reality, analogously, we can be a super-powered virtual giant who supervises a swarm of mobile robots in a vast and remote environment by flying over or resizing the world, and who coordinates them by picking and placing a robot or creating virtual walls. This work implements the idea using Virtual Reality along with Leap Motion, and validates it through proof-of-concept experiments using real and virtual mobile robots in mixed reality. We conduct a usability analysis to quantify the effectiveness of the overall system as well as the individual interfaces proposed in this work. The results revealed that the proposed method is intuitive and feasible for interaction with swarm robots, but may require appropriate training for the new end-user interface device.
