Chih-Hong Cheng

Validating Generalist Robots with Situation Calculus and STL Falsification

Jan 06, 2026

Abstract:Generalist robots are becoming a reality, capable of interpreting natural language instructions and executing diverse operations. However, their validation remains challenging because each task induces its own operational context and correctness specification, exceeding the assumptions of traditional validation methods. We propose a two-layer validation framework that combines abstract reasoning with concrete system falsification. At the abstract layer, situation calculus models the world and derives weakest preconditions, enabling constraint-aware combinatorial testing to systematically generate diverse, semantically valid world-task configurations with controllable coverage strength. At the concrete layer, these configurations are instantiated for simulation-based falsification with STL monitoring. Experiments on tabletop manipulation tasks show that our framework effectively uncovers failure cases in the NVIDIA GR00T controller, demonstrating its promise for validating general-purpose robot autonomy.
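To make the concrete layer easier to picture, here is a minimal sketch of STL-style quantitative monitoring used for falsification. It is not the paper's implementation: the helper names (`always`, `eventually`, `falsify`), the discrete-time traces, and the `simulate` callback are all hypothetical, and only the standard robustness semantics (minimum for "always", maximum for "eventually") is assumed.

```python
def always(signal, margin):
    """Robustness of 'always phi': the worst-case margin over the trace."""
    return min(margin(s) for s in signal)


def eventually(signal, margin):
    """Robustness of 'eventually phi': the best-case margin over the trace."""
    return max(margin(s) for s in signal)


def falsify(configs, simulate, robustness):
    """Return the first configuration whose simulated trace violates the spec.

    `simulate` (hypothetical) maps a configuration to a trace, and
    `robustness` maps a trace to a number; a negative value means the
    specification is violated on that trace.
    """
    for cfg in configs:
        if robustness(simulate(cfg)) < 0:
            return cfg
    return None
```

A toy usage, again under assumed names: with `simulate = lambda start: [start - 0.1 * t for t in range(5)]` and the specification "eventually the distance to the goal is at most 0.05", the call `falsify([0.4, 1.0], simulate, lambda tr: eventually(tr, lambda d: 0.05 - d))` returns the starting distance that never reaches the goal.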

Randomized Smoothing Meets Vision-Language Models

Sep 19, 2025

Abstract:Randomized smoothing (RS) is one of the prominent techniques to ensure the correctness of machine learning models, where point-wise robustness certificates can be derived analytically. While RS is well understood for classification, its application to generative models is unclear, since their outputs are sequences rather than labels. We resolve this by connecting generative outputs to an oracle classification task and showing that RS can still be enabled: the final response can be classified as a discrete action (e.g., service-robot commands in VLAs), as harmful vs. harmless (content moderation or toxicity detection in VLMs), or even grouped by an oracle into clusters of semantically equivalent answers. Provided that the error rate for the oracle classifier comparison is bounded, we develop the theory that associates the number of samples with the corresponding robustness radius. We further derive improved scaling laws analytically relating the certified radius and accuracy to the number of samples, showing that the earlier finding, that 2 to 3 orders of magnitude fewer samples suffice with minimal loss, remains valid even under weaker assumptions. Together, these advances make robustness certification both well-defined and computationally feasible for state-of-the-art VLMs, as validated against recent jailbreak-style adversarial attacks.

Cumulative Consensus Score: Label-Free and Model-Agnostic Evaluation of Object Detectors in Deployment

Sep 16, 2025

Abstract:Evaluating object detection models in deployment is challenging because ground-truth annotations are rarely available. We introduce the Cumulative Consensus Score (CCS), a label-free metric that enables continuous monitoring and comparison of detectors in real-world settings. CCS applies test-time data augmentation to each image, collects predicted bounding boxes across augmented views, and computes overlaps using Intersection over Union. Maximum overlaps are normalized and averaged across augmentation pairs, yielding a measure of spatial consistency that serves as a proxy for reliability without annotations. In controlled experiments on Open Images and KITTI, CCS achieved over 90% congruence with F1-score, Probabilistic Detection Quality, and Optimal Correction Cost. The method is model-agnostic, working across single-stage and two-stage detectors, and operates at the case level to highlight under-performing scenarios. Altogether, CCS provides a robust foundation for DevOps-style monitoring of object detectors.
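The consistency computation described in this abstract can be sketched roughly as follows. This is an illustrative reading, not the authors' code: it assumes boxes from every augmented view have already been mapped back to the original image coordinates as `(x1, y1, x2, y2)` tuples, and the normalization by the larger box count is an assumption, since the abstract does not specify it. The names `pair_consistency` and `consensus_score` are hypothetical.

```python
from itertools import combinations


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pair_consistency(boxes_a, boxes_b):
    """Sum of best IoU matches from view A into view B, normalized to [0, 1].

    Assumption: normalize by the larger box count so that missing or extra
    detections in either view lower the score.
    """
    if not boxes_a and not boxes_b:
        return 1.0  # both views agree that nothing is present
    if not boxes_a or not boxes_b:
        return 0.0  # one view detects objects, the other detects none
    total = sum(max(iou(a, b) for b in boxes_b) for a in boxes_a)
    return total / max(len(boxes_a), len(boxes_b))


def consensus_score(views):
    """Average pairwise consistency over all pairs of augmented views."""
    pairs = list(combinations(views, 2))
    if not pairs:
        raise ValueError("need at least two augmented views")
    return sum(pair_consistency(a, b) for a, b in pairs) / len(pairs)
```

Because the score needs only predictions, it can be computed per image in deployment and tracked over time; low-scoring images are candidates for closer inspection.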

On the Need for a Statistical Foundation in Scenario-Based Testing of Autonomous Vehicles

May 04, 2025

Abstract:Scenario-based testing has emerged as a common method for assuring the safety of autonomous vehicles (AVs), offering a more efficient alternative to mile-based testing by focusing on high-risk scenarios. However, fundamental questions persist regarding its stopping rules, residual risk estimation, debug effectiveness, and the impact of simulation fidelity on safety claims. This paper argues that a rigorous statistical foundation is essential to address these challenges and enable safety assurance. By drawing parallels between AV testing and traditional software testing methodologies, we identify shared research gaps and reusable solutions. We propose proof-of-concept models to quantify the probability of failure per scenario (pfs) and evaluate testing effectiveness under varying conditions. Our analysis reveals that neither scenario-based nor mile-based testing universally outperforms the other. Furthermore, we introduce Risk Estimation Fidelity (REF), a novel metric to certify the alignment of synthetic and real-world testing outcomes, ensuring simulation-based safety claims are statistically defensible.

Mitigating Hallucinations in YOLO-based Object Detection Models: A Revisit to Out-of-Distribution Detection

Mar 10, 2025

Abstract:Object detection systems must reliably perceive objects of interest without being overly confident to ensure safe decision-making in dynamic environments. Filtering techniques based on out-of-distribution (OoD) detection are commonly added as an extra safeguard to filter hallucinations caused by overconfidence in novel objects. Nevertheless, evaluating YOLO-family detectors and their filters under existing OoD benchmarks often leads to unsatisfactory performance. This paper studies the underlying reasons for performance bottlenecks and proposes a methodology to improve performance fundamentally. Our first contribution is a calibration of all existing evaluation results: Although images in existing OoD benchmark datasets are claimed not to have objects within in-distribution (ID) classes (i.e., categories defined in the training dataset), around 13% of objects detected by the object detector are actually ID objects. Dually, the ID dataset containing OoD objects can also negatively impact the decision boundary of filters. These ultimately lead to a significantly imprecise performance estimation. Our second contribution is to consider the task of hallucination reduction as a joint pipeline of detectors and filters. By developing a methodology to carefully synthesize an OoD dataset that semantically resembles the objects to be detected, and using the crafted OoD dataset in the fine-tuning of YOLO detectors to suppress the objectness score, we achieve an 88% reduction in overall hallucination error with a combined fine-tuned detection and filtering system on the self-driving benchmark BDD-100K. Our code and dataset are available at: https://gricad-gitlab.univ-grenoble-alpes.fr/dnn-safety/m-hood.

Online Collision Risk Estimation via Monocular Depth-Aware Object Detectors and Fuzzy Inference

Nov 09, 2024

Abstract:This paper presents a monitoring framework that infers the level of autonomous vehicle (AV) collision risk based on its object detector's performance using only monocular camera images. Essentially, the framework takes two sets of predictions produced by different algorithms and associates their inconsistencies with the collision risk via fuzzy inference. The first set of predictions is obtained through retrieving safety-critical 2.5D objects from a depth map, and the second set comes from the AV's 3D object detector. We experimentally validate that, based on Intersection-over-Union (IoU) and a depth discrepancy measure, the inconsistencies between the two sets of predictions strongly correlate to the safety-related error of the 3D object detector against ground truths. This correlation allows us to construct a fuzzy inference system and map the inconsistency measures to an existing collision risk indicator. In particular, we apply various knowledge- and data-driven techniques and find that using particle swarm optimization to learn general fuzzy rules gives the best mapping result. Lastly, we validate our monitor's capability to produce relevant risk estimates with the large-scale nuScenes dataset and show it can safeguard an AV in closed-loop simulations.

Trustworthy Text-to-Image Diffusion Models: A Timely and Focused Survey

Sep 26, 2024

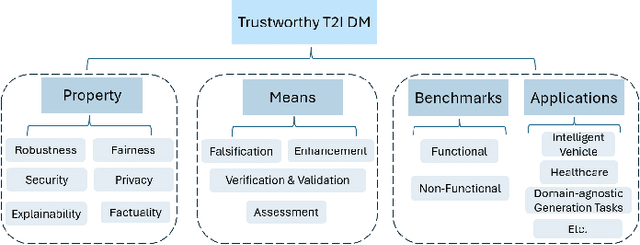

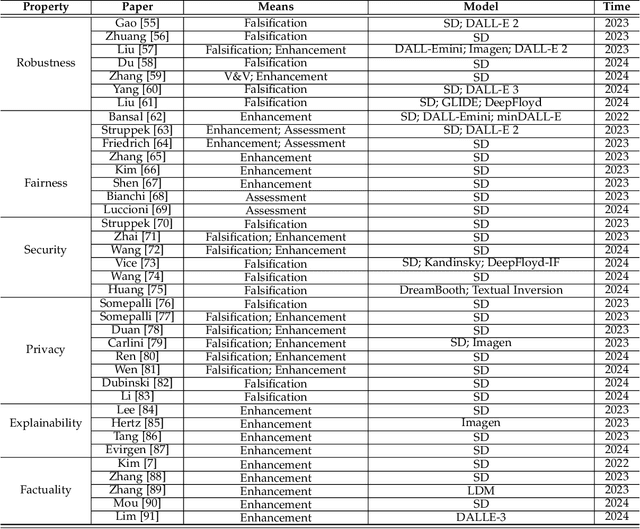

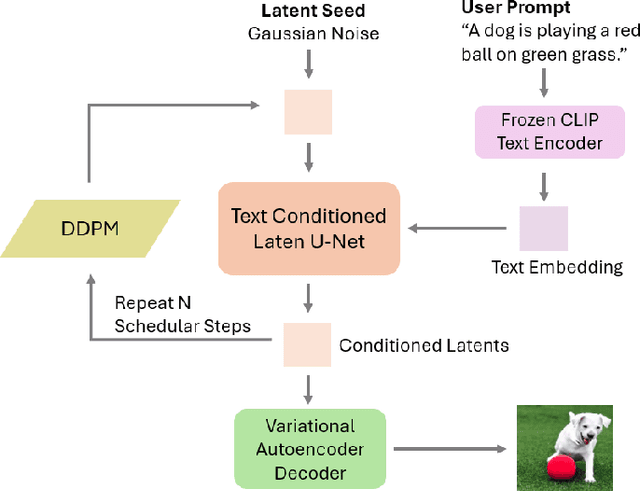

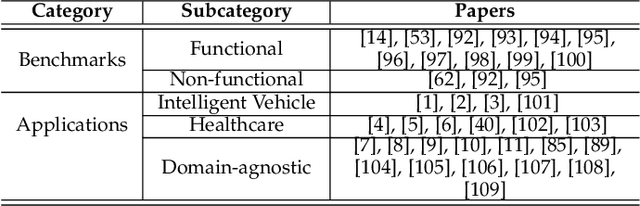

Abstract:Text-to-Image (T2I) Diffusion Models (DMs) have garnered widespread attention for their impressive advancements in image generation. However, their growing popularity has raised ethical and social concerns related to key non-functional properties of trustworthiness, such as robustness, fairness, security, privacy, factuality, and explainability, similar to those in traditional deep learning (DL) tasks. Conventional approaches for studying trustworthiness in DL tasks often fall short due to the unique characteristics of T2I DMs, e.g., the multi-modal nature. Given the challenge, recent efforts have been made to develop new methods for investigating trustworthiness in T2I DMs via various means, including falsification, enhancement, verification & validation and assessment. However, there is a notable lack of in-depth analysis concerning those non-functional properties and means. In this survey, we provide a timely and focused review of the literature on trustworthy T2I DMs, covering a concise-structured taxonomy from the perspectives of property, means, benchmarks and applications. Our review begins with an introduction to essential preliminaries of T2I DMs, and then we summarise key definitions/metrics specific to T2I tasks and analyse the means proposed in recent literature based on these definitions/metrics. Additionally, we review benchmarks and domain applications of T2I DMs. Finally, we highlight the gaps in current research, discuss the limitations of existing methods, and propose future research directions to advance the development of trustworthy T2I DMs. Furthermore, we track the latest developments in this field and keep our GitHub repository up to date at: https://github.com/wellzline/Trustworthy_T2I_DMs

Formal Specification, Assessment, and Enforcement of Fairness for Generative AIs

Apr 26, 2024

Abstract:The risk of reinforcing or even exacerbating societal biases and inequalities will increase significantly as generative AI increasingly produces useful artifacts, from text to images and beyond, for the real world. We address these issues by formally characterizing the notion of fairness for generative AI as a basis for monitoring and enforcing fairness. We define two levels of fairness using the notion of infinite sequences of abstractions of AI-generated artifacts such as text or images. The first is the fairness demonstrated on the generated sequences, which is evaluated only on the outputs while agnostic to the prompts and models used. The second is the inherent fairness of the generative AI model, which requires that fairness be manifested when input prompts are neutral, that is, they do not explicitly instruct the generative AI to produce a particular type of output. We also study relative intersectional fairness, together with lazy fairness enforcement, to counteract the combinatorial explosion that arises when multiple categories are considered. Finally, fairness monitoring and enforcement are tested against some current generative AI models.

Estimating the Robustness Radius for Randomized Smoothing with 100× Sample Efficiency

Apr 26, 2024

Abstract:Randomized smoothing (RS) has successfully been used to improve the robustness of predictions for deep neural networks (DNNs) by adding random noise to create multiple variations of an input, followed by deciding the consensus. To understand if an RS-enabled DNN is effective in the sampled input domains, it is mandatory to sample data points within the operational design domain, acquire the point-wise certificate regarding robustness radius, and compare it with pre-defined acceptance criteria. Consequently, ensuring that a point-wise robustness certificate for any given data point is obtained relatively cost-effectively is crucial. This work demonstrates that reducing the number of samples by one or two orders of magnitude can still enable the computation of a slightly smaller robustness radius (commonly ~20% radius reduction) with the same confidence. We provide the mathematical foundation for explaining the phenomenon while experimentally showing promising results on the standard CIFAR-10 and ImageNet datasets.
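As a rough illustration of why the sample count affects the certified radius, the sketch below uses the widely used Gaussian-smoothing certificate, radius = sigma * Phi^{-1}(p_lower), where p_lower is a lower confidence bound on the top-class probability. The abstract does not state which certificate or confidence bound the paper uses, so both are assumptions here; in particular, a simple one-sided Hoeffding bound is swapped in to keep the example free of third-party dependencies. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def hoeffding_lower_bound(k, n, alpha):
    """One-sided Hoeffding lower bound on a success probability.

    k successes out of n noisy samples; the bound holds with
    probability at least 1 - alpha. (Assumed bound, chosen for simplicity.)
    """
    return k / n - math.sqrt(math.log(1.0 / alpha) / (2.0 * n))


def certified_radius(k, n, sigma, alpha=0.001):
    """L2 radius sigma * Phi^{-1}(p_lower), or None to abstain.

    Abstains when the lower bound does not exceed 0.5, i.e. when the
    samples do not establish a majority class at the requested confidence.
    """
    p_lower = hoeffding_lower_bound(k, n, alpha)
    if p_lower <= 0.5:
        return None
    return sigma * NormalDist().inv_cdf(p_lower)
```

Calling `certified_radius(99000, 100000, 0.5)` and `certified_radius(990, 1000, 0.5)` uses the same empirical agreement rate with 100 times fewer samples in the second call; the second radius is smaller because the confidence bound is looser, but it remains positive. The exact size of the reduction depends on the bound chosen and is not meant to reproduce the paper's figures.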

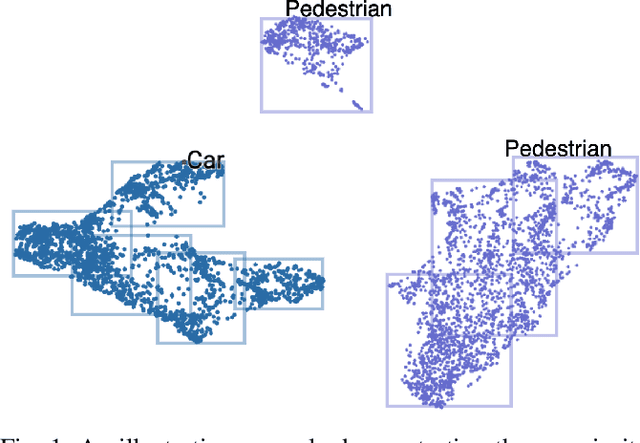

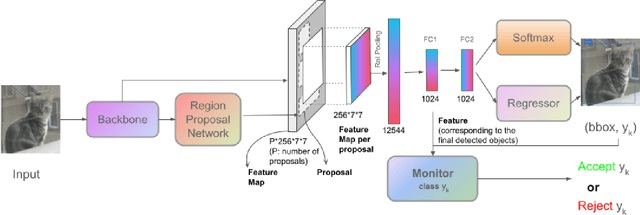

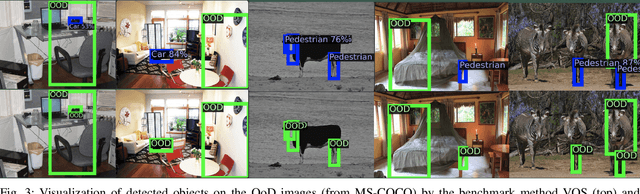

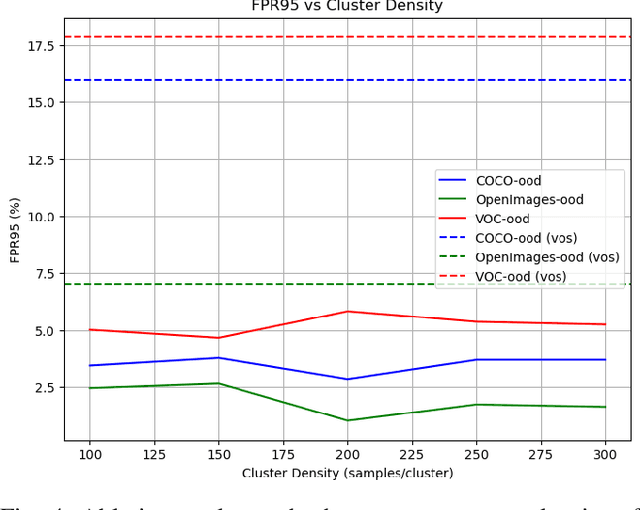

BAM: Box Abstraction Monitors for Real-time OoD Detection in Object Detection

Mar 27, 2024

Abstract:Out-of-distribution (OoD) detection techniques for deep neural networks (DNNs) have become crucial thanks to their filtering of abnormal inputs, especially when DNNs are used in safety-critical applications and interact with an open and dynamic environment. Nevertheless, integrating OoD detection into state-of-the-art (SOTA) object detection DNNs poses significant challenges, partly due to the complexity introduced by the SOTA OoD construction methods, which require the modification of DNN architecture and the introduction of complex loss functions. This paper proposes a simple, yet surprisingly effective, method that requires neither retraining nor architectural change in the object detection DNN, called Box Abstraction-based Monitors (BAM). The novelty of BAM stems from using a finite union of convex box abstractions to capture the learned features of objects for in-distribution (ID) data, and an important observation that features from OoD data are more likely to fall outside of these boxes. The union of convex regions within the feature space allows the formation of non-convex and interpretable decision boundaries, overcoming the limitations of VOS-like detectors without sacrificing real-time performance. Experiments integrating BAM into Faster R-CNN-based object detection DNNs demonstrate a considerably improved performance against SOTA OoD detection techniques.
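The box-abstraction idea can be sketched in a few lines. This is a simplified illustration, not the BAM implementation: it assumes the ID feature vectors have already been grouped into clusters by some earlier step (the abstract does not say how), builds one axis-aligned box per cluster, and flags a feature as OoD when it lies outside every box. The names `build_box`, `in_box`, and `is_ood`, and the optional `margin` parameter, are hypothetical.

```python
def build_box(features, margin=0.0):
    """Axis-aligned bounding box (lower, upper) around one cluster of
    ID feature vectors, optionally enlarged by `margin` per dimension."""
    dims = range(len(features[0]))
    lower = [min(f[d] for f in features) - margin for d in dims]
    upper = [max(f[d] for f in features) + margin for d in dims]
    return lower, upper


def in_box(x, box):
    """True if feature vector x lies inside the box in every dimension."""
    lower, upper = box
    return all(lo <= v <= hi for lo, v, hi in zip(lower, x, upper))


def is_ood(x, boxes):
    """Flag x as OoD when it falls outside the union of all boxes."""
    return not any(in_box(x, box) for box in boxes)
```

With two well-separated clusters, a point lying between them is flagged even though it sits inside the convex hull of all ID features, which is the non-convex decision boundary the abstract refers to. Each check is a handful of comparisons per box, consistent with the real-time requirement.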
