Harald Ruess

Fairness Analysis with Shapley-Owen Effects

Sep 28, 2024

Abstract: We argue that relative importance, together with its equitable attribution in terms of Shapley-Owen effects, is an appropriate way to measure fairness and, if we accept a small number of reasonable imperatives for equitable attribution, the only one. On the other hand, the computation of Shapley-Owen effects can be very demanding. Our main technical result is a spectral decomposition of the Shapley-Owen effects, which splits the computation of these indices into a model-specific and a model-independent part. The model-independent part is precomputed once and for all, and the model-specific computation of Shapley-Owen effects is expressed analytically in terms of the coefficients of the model's polynomial chaos expansion (PCE), which can then be reused to compute different Shapley-Owen effects. We also propose an algorithm for computing precise and sparse truncations of the PCE of the model and the spectral decomposition of the Shapley-Owen effects, together with upper bounds on the accumulated approximation errors. The approximations of both the PCE and the Shapley-Owen effects converge to their true values.
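To make the link between PCE coefficients and attribution concrete, here is a minimal sketch, not the paper's algorithm. It assumes independent inputs and an orthonormal PCE basis, so that the variance explained by a set of inputs is the sum of squared coefficients of the non-constant terms supported on that set. It computes Shapley effects of single inputs only (not the more general Shapley-Owen effects of input groups), and the coefficient table `coeffs` is hypothetical.

```python
from itertools import combinations
from math import factorial


def explained_variance(coeffs, subset):
    """Variance explained by the inputs in `subset`.

    Assumes an orthonormal PCE with independent inputs: sum c^2 over
    non-constant terms whose active inputs all lie in `subset`.
    """
    allowed = set(subset)
    return sum(
        c * c
        for alpha, c in coeffs.items()
        if any(alpha) and {i for i, d in enumerate(alpha) if d > 0} <= allowed
    )


def shapley_effects(coeffs, n_inputs):
    """Exact Shapley effects by enumerating all coalitions (exponential cost)."""
    total = explained_variance(coeffs, range(n_inputs))
    effects = []
    for i in range(n_inputs):
        others = [j for j in range(n_inputs) if j != i]
        phi = 0.0
        for k in range(len(others) + 1):
            # Standard Shapley weight for coalitions of size k.
            w = factorial(k) * factorial(n_inputs - k - 1) / factorial(n_inputs)
            for S in combinations(others, k):
                gain = explained_variance(coeffs, S + (i,)) - explained_variance(coeffs, S)
                phi += w * gain
        effects.append(phi / total)
    return effects


# Hypothetical PCE: multi-index of polynomial degrees -> coefficient.
coeffs = {(0, 0): 1.0, (1, 0): 2.0, (0, 1): 1.0, (1, 1): 2.0}
print(shapley_effects(coeffs, 2))  # roughly 2/3 for input 0 and 1/3 for input 1
```

In this toy case the interaction term's variance is split evenly between the two inputs, and the effects sum to one. The enumeration is exponential in the number of inputs, which illustrates why the abstract calls the computation demanding.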

Formal Specification, Assessment, and Enforcement of Fairness for Generative AIs

Apr 26, 2024

Abstract: The reinforcement or even exacerbation of societal biases and inequalities will increase significantly as generative AI increasingly produces useful artifacts, from text to images and beyond, for the real world. We address these issues by formally characterizing the notion of fairness for generative AI as a basis for monitoring and enforcing fairness. We define two levels of fairness using the notion of infinite sequences of abstractions of AI-generated artifacts such as text or images. The first is the fairness demonstrated on the generated sequences, which is evaluated only on the outputs and is agnostic to the prompts and models used. The second is the inherent fairness of the generative AI model, which requires that fairness be manifested when input prompts are neutral, that is, when they do not explicitly instruct the generative AI to produce a particular type of output. We also study relative intersectional fairness, together with lazy fairness enforcement, to counteract the combinatorial explosion of fairness when considering multiple categories. Finally, fairness monitoring and enforcement are tested against some current generative AI models.

Safety Performance of Neural Networks in the Presence of Covariate Shift

Jul 24, 2023

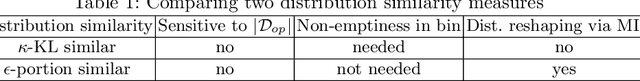

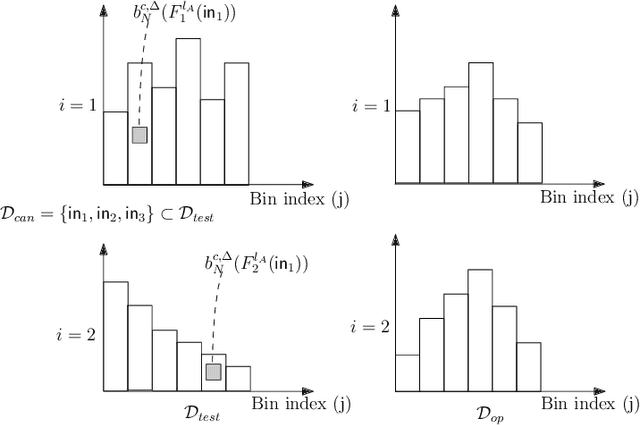

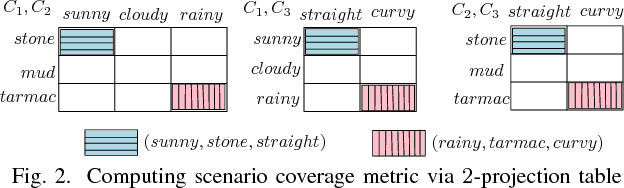

Abstract: Covariate shift may impact the operational safety performance of neural networks. A re-evaluation of the safety performance, however, requires collecting new operational data and creating corresponding ground truth labels, which is often not possible during operation. We therefore propose to reshape the initial test set, as used for the safety performance evaluation prior to deployment, based on an approximation of the operational data. This approximation is obtained by observing and learning the distribution of activation patterns of neurons in the network during operation. The reshaped test set reflects the distribution of neuron activation values as observed during operation, and may therefore be used for re-evaluating safety performance in the presence of covariate shift. First, we derive conservative bounds on the values of neurons by applying finite binning and static dataflow analysis. Second, we formulate a mixed integer linear programming (MILP) constraint for constructing the minimum set of data points to be removed from the test set, such that the difference between the discretized test and operational distributions is bounded. We discuss potential benefits and limitations of this constraint-based approach based on our initial experience with an implemented research prototype.
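The test-set reshaping step can be illustrated with a small sketch. It is only an illustration under strong assumptions: a single neuron with a handful of bins, and exhaustive search standing in for the MILP solver mentioned in the abstract. The function name `min_removals` and the example numbers are hypothetical.

```python
from itertools import product


def min_removals(test_counts, op_dist, eps):
    """Fewest test points to drop per bin so that every bin frequency of the
    reshaped test set is within `eps` of the operational distribution.

    Points in the same bin are interchangeable, so only the number removed
    per bin matters. Exhaustive search replaces the MILP of the abstract.
    """
    best = None
    for removed in product(*(range(n + 1) for n in test_counts)):
        kept = [n - r for n, r in zip(test_counts, removed)]
        total = sum(kept)
        if total == 0:
            continue  # an empty test set is not a useful answer
        if all(abs(k / total - p) <= eps for k, p in zip(kept, op_dist)):
            if best is None or sum(removed) < sum(best):
                best = removed
    return best


# Hypothetical data: 10 test points over 3 activation bins, and the bin
# frequencies observed during operation.
print(min_removals([6, 3, 1], [0.5, 0.25, 0.25], 0.04))  # (4, 2, 0)
```

Here the third bin is under-represented in the test set, so the only way to match the operational frequencies is to thin out the first two bins until four points remain.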

Towards Rigorous Design of OoD Detectors

Jun 14, 2023

Abstract: Out-of-distribution (OoD) detection techniques are instrumental for safety-related neural networks. We argue, however, that current performance-oriented OoD detection techniques, which are geared towards matching metrics such as expected calibration error, are not sufficient for establishing safety claims. What is missing is a rigorous design approach for developing, verifying, and validating OoD detectors. These design principles need to be aligned with the intended functionality and the operational domain. Here, we formulate some of the key technical challenges, together with a possible way forward, for developing a rigorous and safety-related design methodology for OoD detectors.

Evidential Transactions with Cyberlogic

Mar 20, 2023

Abstract: Cyberlogic is an enabling logical foundation for building and analyzing digital transactions that involve the exchange of digital forms of evidence. It is based on an extension of (first-order) intuitionistic predicate logic with an attestation and a knowledge modality. The key ideas underlying Cyberlogic are extremely simple: (1) public keys correspond to authorizations, (2) transactions are specified as distributed logic programs, and (3) verifiable evidence is collected by means of distributed proof search. Verifiable evidence, in particular, is constructed from extra-logical elements such as signed documents and cryptographic signatures. Despite this conceptual simplicity of Cyberlogic, central features of authorization policies including trust, delegation, and revocation of authority are definable. An expressive temporal-epistemic logic for specifying distributed authorization policies and protocols is therefore definable in Cyberlogic using a trusted time source. We describe the distributed execution of Cyberlogic programs based on the hereditary Harrop fragment in terms of distributed proof search, and we illustrate some fundamental issues in the distributed construction of certificates. The main principles of encoding and executing cryptographic protocols in Cyberlogic are demonstrated. Finally, a functional encryption scheme is proposed for checking certificates of evidential transactions when policies are kept private.

Systems Challenges for Trustworthy Embodied Systems

Jan 10, 2022

Abstract: A new generation of increasingly autonomous and self-learning systems, which we call embodied systems, is about to be developed. When deploying these systems into a real-life context we face various engineering challenges, as it is crucial to coordinate the behavior of embodied systems in a beneficial manner, ensure their compatibility with our human-centered social values, and design verifiably safe and reliable human-machine interaction. We argue that traditional systems engineering is coming to a climacteric with the move from embedded to embodied systems, and with the need to assure the trustworthiness of dynamic federations of situationally aware, intent-driven, explorative, ever-evolving, largely non-predictable, and increasingly autonomous embodied systems in uncertain, complex, and unpredictable real-world contexts. We also identify a number of urgent systems challenges for trustworthy embodied systems, including robust and human-centric AI, cognitive architectures, uncertainty quantification, trustworthy self-integration, and continual analysis and assurance.

Towards Dependability Metrics for Neural Networks

Jun 08, 2018

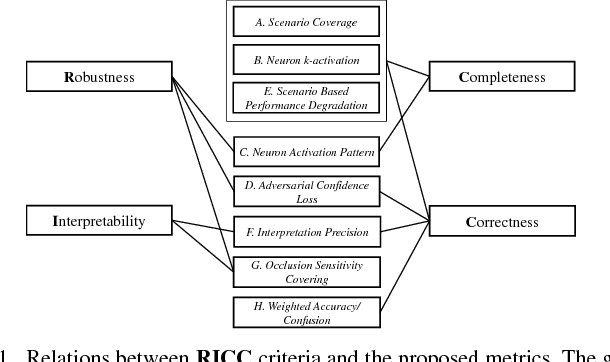

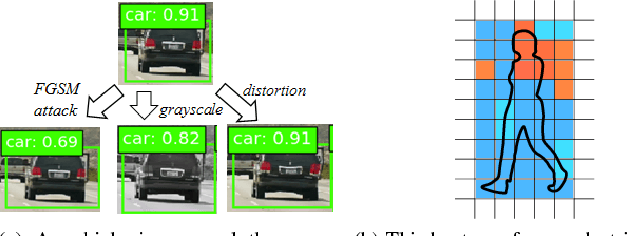

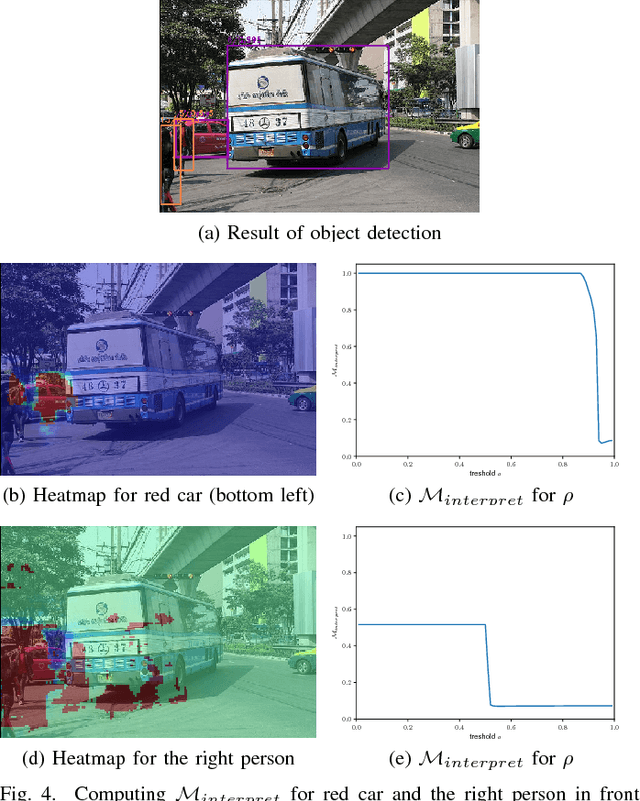

Abstract: Artificial neural networks (NN) are instrumental in realizing highly automated driving functionality. An overarching challenge is to identify best safety engineering practices for NN and other learning-enabled components. In particular, there is an urgent need for an adequate set of metrics for measuring all-important NN dependability attributes. We address this challenge by proposing a number of NN-specific and efficiently computable metrics for measuring NN dependability attributes including robustness, interpretability, completeness, and correctness.

Verification of Binarized Neural Networks via Inter-Neuron Factoring

Jan 19, 2018

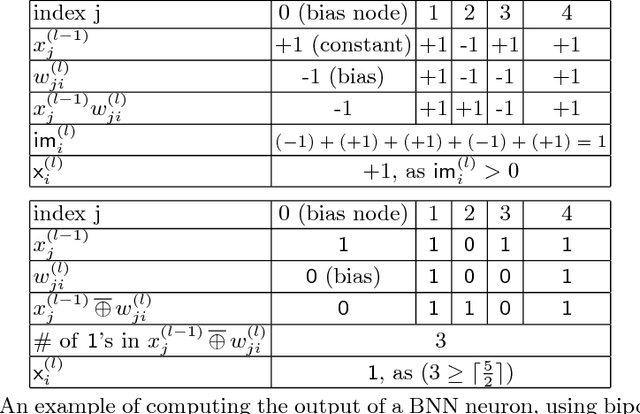

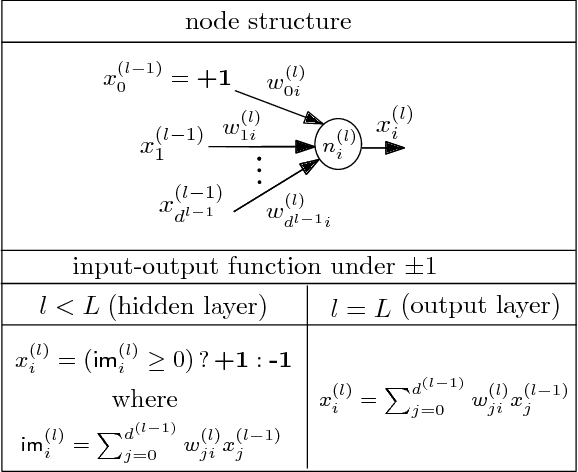

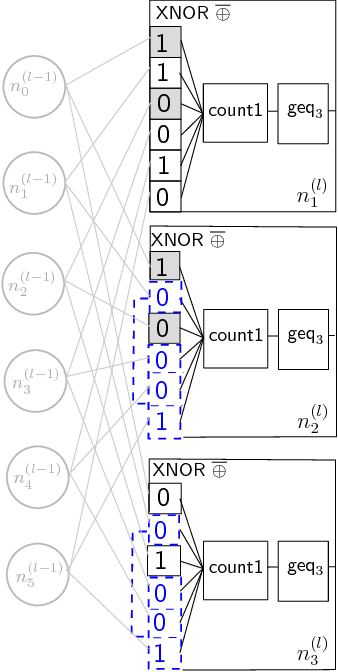

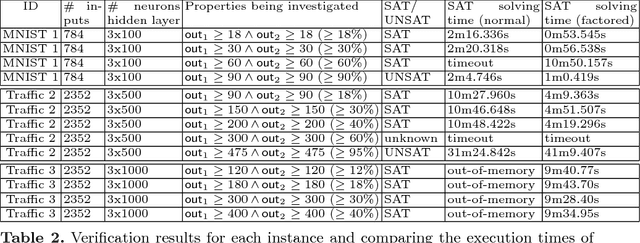

Abstract: We study the problem of formal verification of Binarized Neural Networks (BNN), which have recently been proposed as an energy-efficient alternative to traditional learning networks. The verification of BNNs, using the reduction to hardware verification, can be made even more scalable by factoring computations among neurons within the same layer. Having proved the NP-hardness of finding an optimal factoring as well as the hardness of PTAS approximability, we design polynomial-time search heuristics to generate factoring solutions. The overall framework allows applying verification techniques to moderately sized BNNs for embedded devices with thousands of neurons and inputs.
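The idea of factoring computations among neurons in the same layer can be sketched for two neurons. This is an illustrative simplification, not the paper's encoding or heuristic: it assumes weights and inputs in {-1, +1}, no bias, and a sign activation, and the function names are hypothetical. Where the two weight vectors agree, the partial sum is computed once; where they differ, one neuron's contribution is the negation of the other's.

```python
from itertools import product


def sign(value):
    """Binarized activation: +1 for non-negative sums, -1 otherwise."""
    return 1 if value >= 0 else -1


def neuron(weights, inputs):
    """Plain binarized neuron: sign of the +/-1 weighted sum."""
    return sign(sum(w * x for w, x in zip(weights, inputs)))


def factored_pair(w_a, w_b, inputs):
    """Evaluate two neurons of one layer while sharing partial sums."""
    same = [i for i in range(len(inputs)) if w_a[i] == w_b[i]]
    diff = [i for i in range(len(inputs)) if w_a[i] != w_b[i]]
    common = sum(w_a[i] * inputs[i] for i in same)    # shared by both neurons
    opposed = sum(w_a[i] * inputs[i] for i in diff)   # w_b is -w_a on these inputs
    return sign(common + opposed), sign(common - opposed)


# Hypothetical weight vectors for two neurons in the same layer.
w_a = [1, -1, 1, 1]
w_b = [1, -1, -1, 1]

# The factored evaluation agrees with the direct one on every binary input.
agree = all(
    factored_pair(w_a, w_b, x) == (neuron(w_a, x), neuron(w_b, x))
    for x in product([-1, 1], repeat=4)
)
print(agree)  # True
```

Choosing which groups of neurons and inputs to factor so that the most work is shared is the optimization problem that the abstract reports as NP-hard.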

Neural Networks for Safety-Critical Applications - Challenges, Experiments and Perspectives

Sep 04, 2017

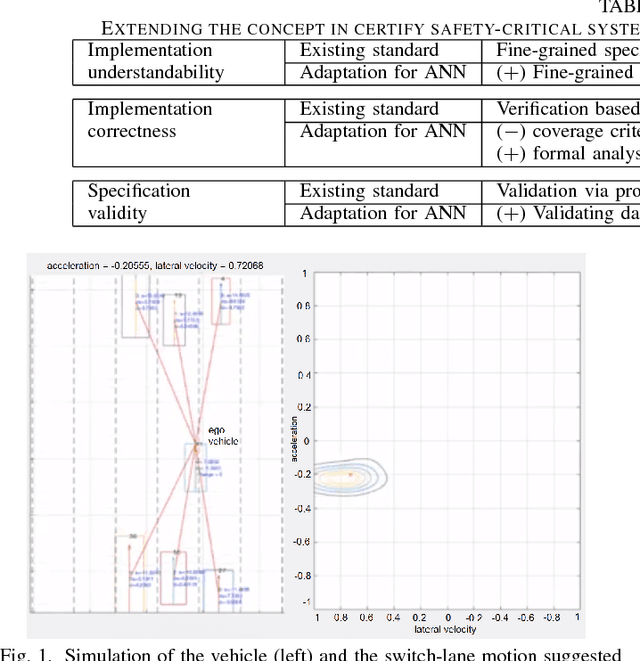

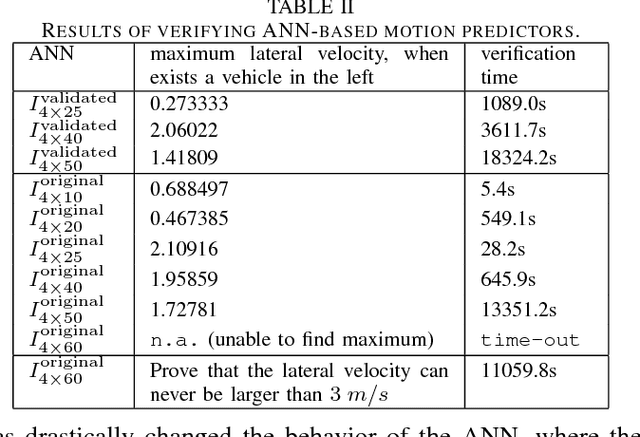

Abstract: We propose a methodology for designing dependable Artificial Neural Networks (ANN) by extending the concepts of understandability, correctness, and validity that are crucial ingredients in existing certification standards. We apply the concept in a concrete case study on designing an ANN-based highway motion predictor, with the goal of guaranteeing safety properties such as the impossibility of the ego vehicle suggesting a move to the right lane when another vehicle is on its right.

Maximum Resilience of Artificial Neural Networks

Jul 05, 2017

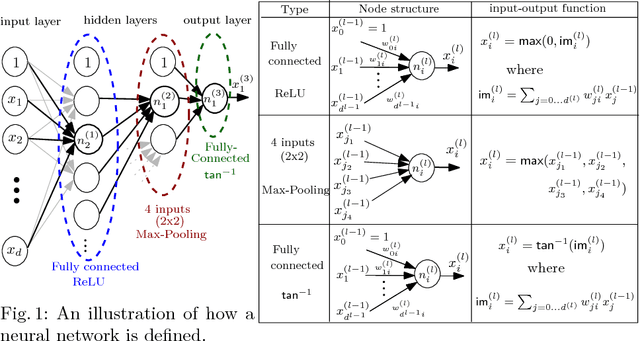

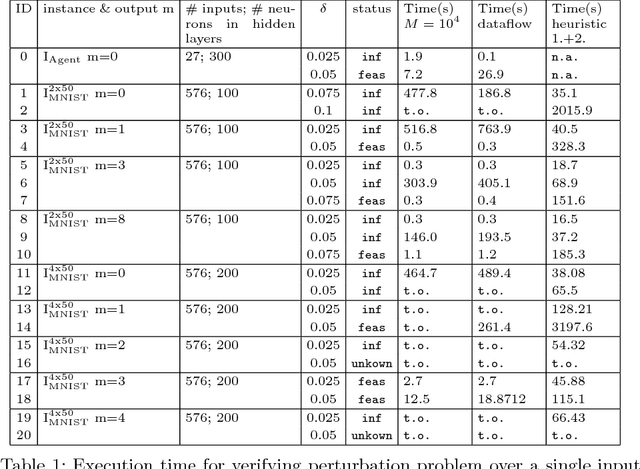

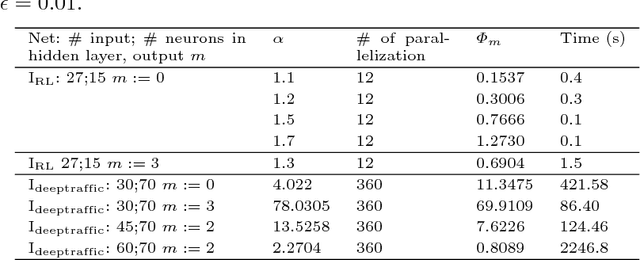

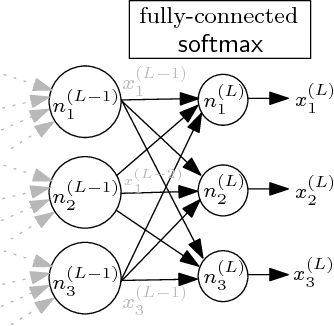

Abstract: The deployment of Artificial Neural Networks (ANNs) in safety-critical applications poses a number of new verification and certification challenges. In particular, for ANN-enabled self-driving vehicles it is important to establish properties about the resilience of ANNs to noisy or even maliciously manipulated sensory input. We address these challenges by defining resilience properties of ANN-based classifiers as the maximal amount of input or sensor perturbation that is still tolerated. This problem of computing maximal perturbation bounds for ANNs is then reduced to solving mixed integer optimization problems (MIP). A number of MIP encoding heuristics are developed for drastically reducing MIP-solver runtimes, and in our experiments parallelizing the MIP solvers yields an almost linear speed-up in the number of computing cores (up to a certain limit). We demonstrate the effectiveness and scalability of our approach by computing maximal resilience bounds for a number of ANN benchmark sets ranging from typical image recognition scenarios to the autonomous maneuvering of robots.
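The notion of a maximal tolerated perturbation can be made concrete in the simplest possible setting. The sketch below is not the MIP encoding from the abstract: it assumes a purely linear classifier (no hidden layers) and measures perturbations in the L-infinity norm, in which case the bound has a closed form and no solver is needed. The function name and the example weights are hypothetical.

```python
def max_linf_resilience(weights, biases, x):
    """Predicted class and perturbation bound for a linear classifier.

    For scores s_k = w_k . x + b_k with predicted class c, every perturbation
    with L-infinity norm strictly below
        min over j != c of (s_c - s_j) / ||w_c - w_j||_1
    leaves the predicted class unchanged.
    """
    scores = [
        sum(w * xi for w, xi in zip(row, x)) + b
        for row, b in zip(weights, biases)
    ]
    c = max(range(len(scores)), key=scores.__getitem__)
    bound = float("inf")
    for j, row in enumerate(weights):
        if j == c:
            continue
        l1 = sum(abs(wc - wj) for wc, wj in zip(weights[c], row))
        if l1 > 0:  # identical weight rows can never change the ranking
            bound = min(bound, (scores[c] - scores[j]) / l1)
    return c, bound


# Hypothetical two-class classifier on a two-dimensional input.
print(max_linf_resilience([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [3.0, 1.0]))  # (0, 1.0)
```

In the example, shifting the input by (-1, +1) produces a tie between the two classes, so 1.0 is exactly the point at which the prediction can first change. For networks with piecewise-linear hidden layers no such closed form exists, which is where the mixed integer formulation becomes necessary.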
