Chung-Hao Huang

Towards Safety Verification of Direct Perception Neural Networks

Apr 09, 2019

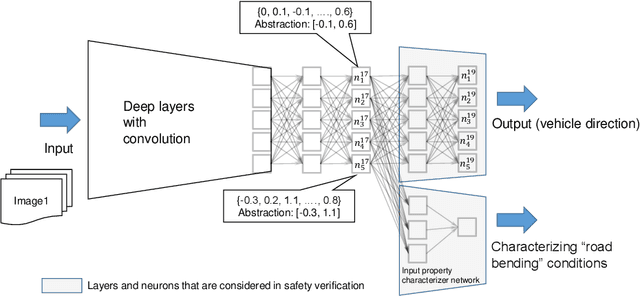

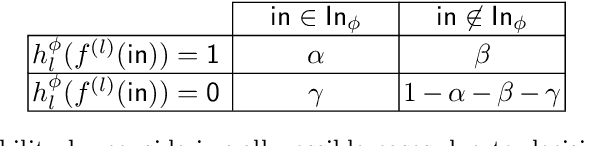

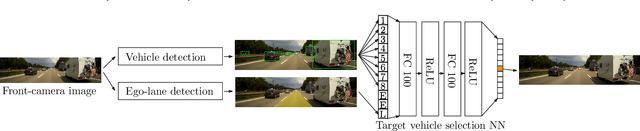

Abstract: We study the problem of safety verification of direct perception neural networks, which take camera images as inputs and produce high-level features for autonomous vehicles to make control decisions. Formal verification of direct perception neural networks is extremely challenging, as it is difficult to formulate the specification that requires characterizing input conditions, while the number of neurons in such a network can reach millions. We approach the specification problem by learning an input property characterizer which carefully extends a direct perception neural network at close-to-output layers, and address the scalability problem by only analyzing networks starting from shared neurons without losing soundness. The presented workflow is used to understand a direct perception neural network (developed by Audi) which computes the next waypoint and orientation for autonomous vehicles to follow.

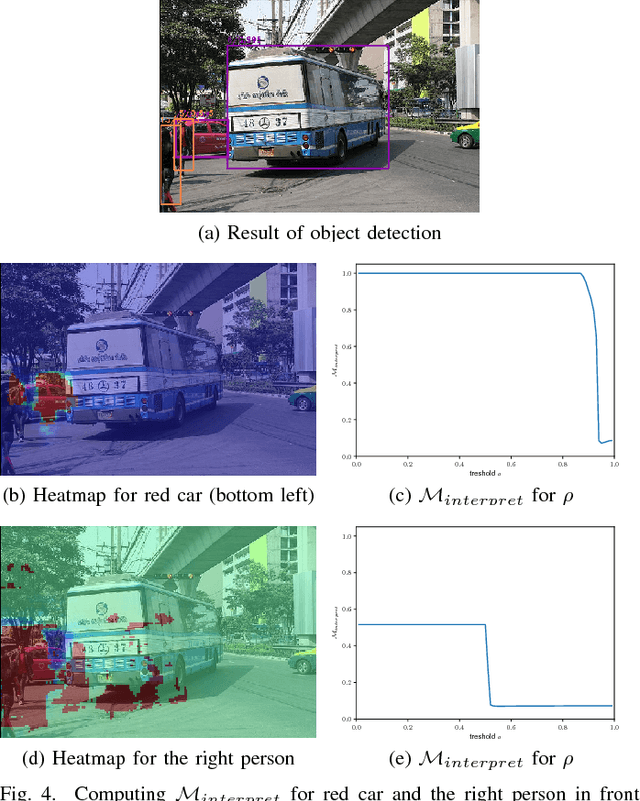

nn-dependability-kit: Engineering Neural Networks for Safety-Critical Systems

Nov 16, 2018

Abstract: nn-dependability-kit is an open-source toolbox to support safety engineering of neural networks. The key functionality of nn-dependability-kit includes (a) novel dependability metrics for indicating sufficient elimination of uncertainties in the product life cycle, (b) a formal reasoning engine for ensuring that the generalization does not lead to undesired behaviors, and (c) runtime monitoring for reasoning whether a decision of a neural network at operation time is supported by prior similarities in the training data.
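
The runtime monitoring idea in (c) can be illustrated with a minimal sketch. This is not the toolbox's API: the `PatternMonitor` class, its methods, the use of binarized activation patterns, and the Hamming-distance tolerance are all assumptions made here for illustration. The sketch records which on/off patterns of a monitored layer occurred in training for each predicted class, then flags an operation-time decision as unsupported when its pattern is not close to any recorded one.

```python
def activation_pattern(activations):
    """Binarize a layer's activations: 1 if the neuron is active (> 0), else 0."""
    return tuple(1 if a > 0 else 0 for a in activations)


def hamming(p, q):
    """Number of positions where two equal-length patterns differ."""
    return sum(x != y for x, y in zip(p, q))


class PatternMonitor:
    """Hypothetical monitor: stores activation patterns seen in training, per class."""

    def __init__(self, max_distance=0):
        # Assumed tolerance: how many neurons may differ from a recorded pattern.
        self.max_distance = max_distance
        self.seen = {}  # predicted class -> set of recorded patterns

    def record(self, predicted_class, activations):
        """Store the pattern produced by one training sample."""
        pattern = activation_pattern(activations)
        self.seen.setdefault(predicted_class, set()).add(pattern)

    def is_supported(self, predicted_class, activations):
        """True if some recorded pattern for this class is within the tolerance."""
        pattern = activation_pattern(activations)
        return any(
            hamming(pattern, p) <= self.max_distance
            for p in self.seen.get(predicted_class, ())
        )


# Made-up activations of a 4-neuron monitored layer.
monitor = PatternMonitor(max_distance=1)
monitor.record("stop_sign", [0.7, 0.0, 1.2, 0.0])

supported = monitor.is_supported("stop_sign", [0.5, 0.1, 0.9, 0.0])
unsupported = monitor.is_supported("stop_sign", [0.0, 0.3, 0.0, 0.8])
```

A linear scan over stored patterns is used only to keep the sketch short; a set of patterns for a realistic layer would need a more compact representation.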

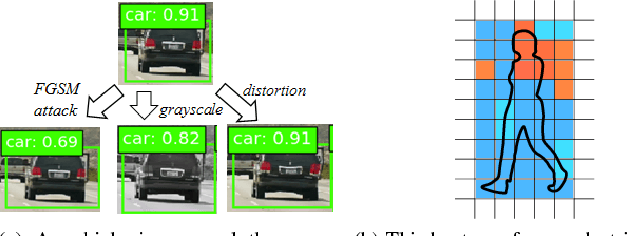

Towards Dependability Metrics for Neural Networks

Jun 08, 2018

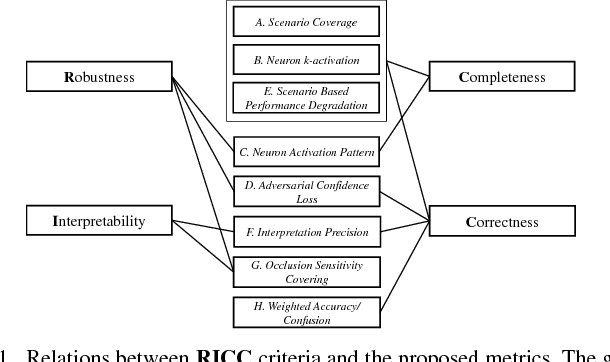

Abstract: Artificial neural networks (NN) are instrumental in realizing highly-automated driving functionality. An overarching challenge is to identify best safety engineering practices for NN and other learning-enabled components. In particular, there is an urgent need for an adequate set of metrics for measuring all-important NN dependability attributes. We address this challenge by proposing a number of NN-specific and efficiently computable metrics for measuring NN dependability attributes including robustness, interpretability, completeness, and correctness.

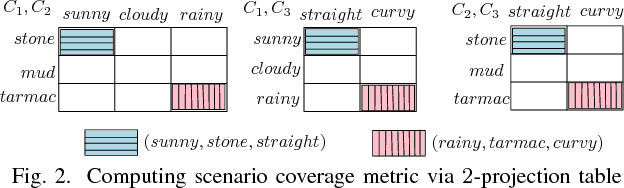

Quantitative Projection Coverage for Testing ML-enabled Autonomous Systems

May 11, 2018

Abstract: Systematically testing models learned from neural networks remains a crucial unsolved barrier to successfully justifying safety for autonomous vehicles engineered using a data-driven approach. We propose quantitative k-projection coverage as a metric to mediate combinatorial explosion while guiding the data sampling process. By assuming that domain experts propose largely independent environment conditions and by associating elements in each condition with weights, the product of these conditions forms scenarios, and one may interpret weights associated with each equivalence class as relative importance. Achieving full k-projection coverage requires that the data set, when projected onto the hyperplane formed by arbitrarily selected k conditions, covers each class with a number of data points no less than the associated weight. For the general case where scenario composition is constrained by rules, precisely computing k-projection coverage remains in NP. In terms of finding minimum test cases to achieve full coverage, we present theoretical complexity for important sub-cases and an encoding to 0-1 integer programming. We have implemented a research prototype that generates test cases for a visual object detection unit in automated driving, demonstrating the technological feasibility of our proposed coverage criterion.
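
The coverage criterion described in this abstract can be made concrete with a small sketch. Several details are assumptions made here, not taken from the abstract: the function name `k_projection_coverage`, the rule that the required count for a combination of elements is the product of their weights, and the choice to report coverage as the fraction of required data points that are present (capped per class). Scenario-composition rules are ignored, so every combination is treated as feasible.

```python
from itertools import combinations, product
from math import prod


def k_projection_coverage(data, conditions, k):
    """Fraction of required data points present across all k-projections.

    data:       list of dicts mapping condition name -> chosen element
    conditions: dict mapping condition name -> {element: weight}
    """
    covered = 0
    required = 0
    # Project the data set onto every choice of k conditions.
    for names in combinations(sorted(conditions), k):
        # Count data points falling into each equivalence class of this projection.
        counts = {}
        for point in data:
            key = tuple(point[name] for name in names)
            counts[key] = counts.get(key, 0) + 1
        # Compare each class against its required count.
        for combo in product(*(conditions[name] for name in names)):
            # Assumption: class weight = product of its elements' weights.
            need = prod(conditions[name][elem] for name, elem in zip(names, combo))
            required += need
            covered += min(counts.get(combo, 0), need)
    return covered / required


# Made-up environment conditions and a two-image data set.
conditions = {
    "weather": {"sunny": 1, "rainy": 2},
    "road": {"highway": 1, "urban": 1},
}
data = [
    {"weather": "sunny", "road": "highway"},
    {"weather": "rainy", "road": "urban"},
]

coverage_k1 = k_projection_coverage(data, conditions, 1)  # 4 of 5 required points
coverage_k2 = k_projection_coverage(data, conditions, 2)  # 2 of 6 required points
```

With k = 1 only the second required "rainy" sample is missing, while k = 2 exposes the uncovered combinations (sunny, urban) and (rainy, highway), which shows how raising k tightens the criterion without enumerating full scenarios.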

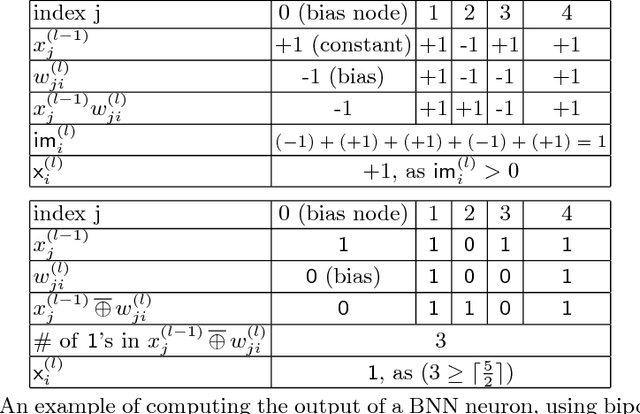

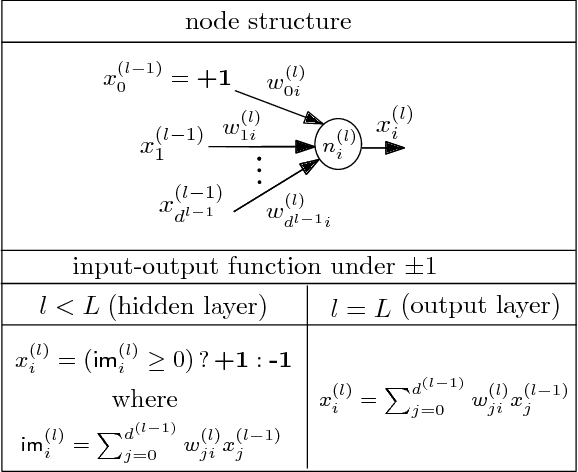

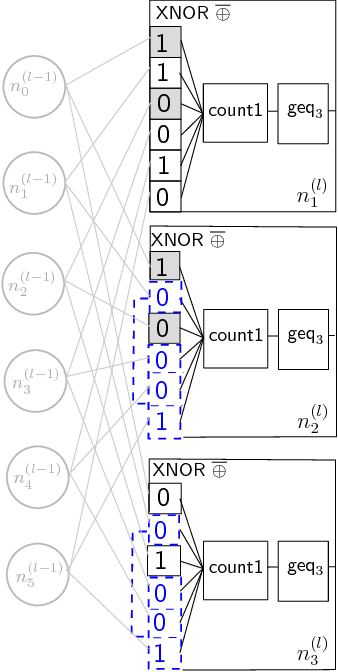

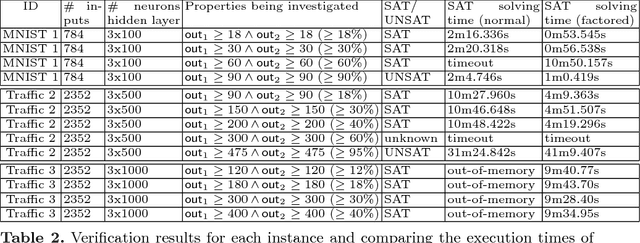

Verification of Binarized Neural Networks via Inter-Neuron Factoring

Jan 19, 2018

Abstract: We study the problem of formal verification of Binarized Neural Networks (BNN), which have recently been proposed as an energy-efficient alternative to traditional learning networks. The verification of BNNs, using the reduction to hardware verification, can be even more scalable by factoring computations among neurons within the same layer. By proving the NP-hardness of finding optimal factoring as well as the hardness of PTAS approximability, we design polynomial-time search heuristics to generate factoring solutions. The overall framework allows applying verification techniques to moderately-sized BNNs for embedded devices with thousands of neurons and inputs.