Thomas Brunner

Evaluating the Adversarial Robustness of Adaptive Test-time Defenses

Feb 28, 2022

Abstract: Adaptive defenses that use test-time optimization promise to improve robustness to adversarial examples. We categorize such adaptive test-time defenses and explain their potential benefits and drawbacks. In the process, we evaluate some of the latest proposed adaptive defenses (most of them published at peer-reviewed conferences). Unfortunately, none significantly improve upon static models when evaluated appropriately. Some even weaken the underlying static model while simultaneously increasing inference cost. While these results are disappointing, we still believe that adaptive test-time defenses are a promising avenue of research and, as such, we provide recommendations on evaluating such defenses. We go beyond the checklist provided by Carlini et al. (2019) by providing concrete steps that are specific to this type of defense.

Copy and Paste: A Simple But Effective Initialization Method for Black-Box Adversarial Attacks

Jun 14, 2019

Abstract: Many optimization methods for generating black-box adversarial examples have been proposed, but the aspect of initializing said optimizers has not been considered in much detail. We show that the choice of starting points is indeed crucial, and that the performance of state-of-the-art attacks depends on it. First, we discuss desirable properties of starting points for attacking image classifiers, and how they can be chosen to increase query efficiency. Notably, we find that simply copying small patches from other images is a valid strategy. In an evaluation on ImageNet, we show that this initialization reduces the number of queries required for a state-of-the-art Boundary Attack by 81%, significantly outperforming previous results reported for targeted black-box adversarial examples.
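The patch-copying idea in this abstract can be illustrated with a minimal sketch. It is not the paper's procedure: the grayscale list-of-rows image type, the centered patch placement, the growing-size schedule, and the `is_adversarial` oracle are all assumptions made for illustration.

```python
from typing import Callable, List, Optional, Sequence

Image = List[List[float]]  # assumption: grayscale image stored as rows of pixels


def paste_patch(base: Image, donor: Image, top: int, left: int, size: int) -> Image:
    """Return a copy of `base` with a size x size patch copied from `donor`."""
    out = [row[:] for row in base]
    for r in range(top, min(top + size, len(base))):
        for c in range(left, min(left + size, len(base[0]))):
            out[r][c] = donor[r][c]
    return out


def find_start(
    base: Image,
    donor: Image,
    is_adversarial: Callable[[Image], bool],  # hypothetical black-box oracle
    sizes: Sequence[int],
) -> Optional[Image]:
    """Try centered patches of increasing size until the oracle accepts one."""
    height, width = len(base), len(base[0])
    for size in sizes:
        top = max((height - size) // 2, 0)
        left = max((width - size) // 2, 0)
        candidate = paste_patch(base, donor, top, left, size)
        if is_adversarial(candidate):
            return candidate  # smallest tried patch that flips the decision
    return None


# Toy usage: the "classifier" flips once enough donor pixels are present.
base = [[0.0] * 4 for _ in range(4)]
donor = [[1.0] * 4 for _ in range(4)]
start = find_start(base, donor, lambda img: sum(map(sum, img)) >= 4, [1, 2, 3, 4])
```

A starting point found this way stays close to the original image outside the patch, which is the property a decision-based attack such as the Boundary Attack would then refine.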

Leveraging Semantic Embeddings for Safety-Critical Applications

May 19, 2019

Abstract: Semantic Embeddings are a popular way to represent knowledge in the field of zero-shot learning. We observe their interpretability and discuss their potential utility in a safety-critical context. Concretely, we propose to use them to add introspection and error detection capabilities to neural network classifiers. First, we show how to create embeddings from symbolic domain knowledge. We discuss how to use them for interpreting mispredictions and propose a simple error detection scheme. We then introduce the concept of semantic distance: a real-valued score that measures confidence in the semantic space. We evaluate this score on a traffic sign classifier and find that it achieves near state-of-the-art performance, while being significantly faster to compute than other confidence scores. Our approach requires no changes to the original network and is thus applicable to any task for which domain knowledge is available.
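The abstract does not define semantic distance precisely, so the following is only one plausible reading, offered as a sketch: the Euclidean distance between a predicted attribute vector and the nearest class embedding, with a threshold used to flag likely errors. The attribute vectors, class names, and threshold are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def semantic_distance(
    predicted: Sequence[float],
    class_embeddings: Dict[str, Sequence[float]],
) -> Tuple[str, float]:
    """Return the nearest class and its distance in the embedding space.

    Assumption: Euclidean distance is used here; the paper may define the
    score differently.
    """
    best_label, best_dist = "", math.inf
    for label, embedding in class_embeddings.items():
        dist = math.dist(predicted, embedding)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label, best_dist


def looks_like_error(distance: float, threshold: float) -> bool:
    """Flag a prediction when it lies far from every known class embedding."""
    return distance > threshold


# Hypothetical hand-made attribute embeddings: (is_red, is_octagon, is_triangle).
embeddings = {"stop": (1.0, 1.0, 0.0), "yield": (1.0, 0.0, 1.0)}
label, dist = semantic_distance((0.9, 0.9, 0.1), embeddings)
flagged = looks_like_error(dist, threshold=0.5)
```

Because the score only needs one pass over the class embeddings, it is cheap to compute on top of an existing classifier, which matches the speed argument made in the abstract.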

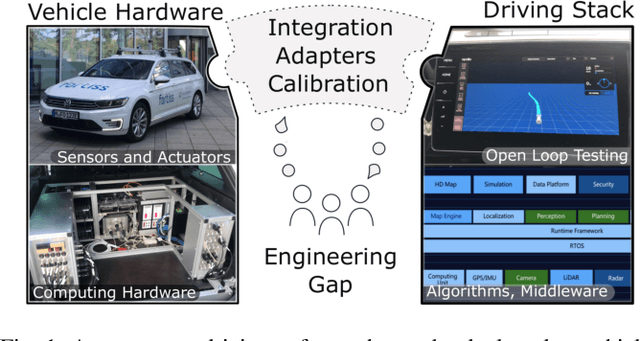

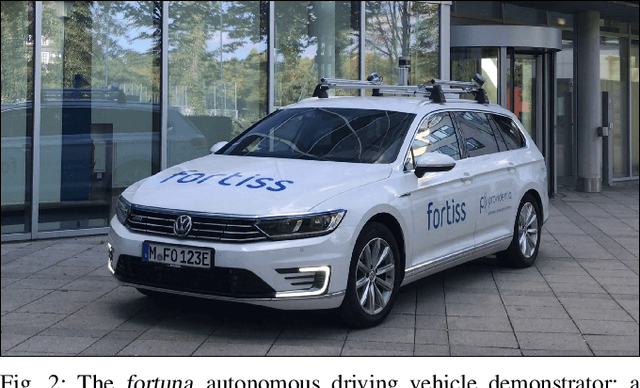

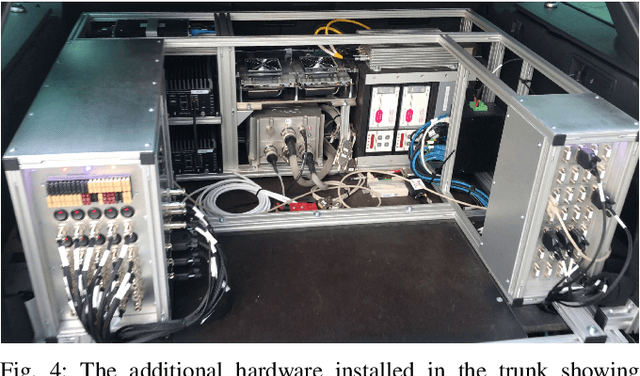

Bridging the Gap between Open Source Software and Vehicle Hardware for Autonomous Driving

May 08, 2019

Abstract: Although many research vehicle platforms for autonomous driving have been built in the past, hardware design, source code and lessons learned have not been made available for the next generation of demonstrators. This raises the effort required for the research community to contribute results based on real-world evaluations, as engineering knowledge of building and maintaining a research vehicle is lost. In this paper, we deliver an analysis of our approach to transferring an open source driving stack to a research vehicle. We put the hardware and software setup in the context of other demonstrators and explain the criteria that led to our chosen hardware and software design. Specifically, we discuss the mapping of the Apollo driving stack to the system layout of our research vehicle, fortuna, including communication with the actuators by a controller running on a real-time hardware platform and the integration of the sensor setup. With our collection of the lessons learned, we encourage a faster setup of such systems by other research groups in the future.

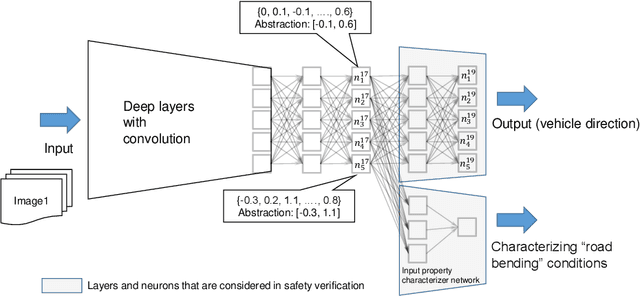

Towards Safety Verification of Direct Perception Neural Networks

Apr 09, 2019

Abstract: We study the problem of safety verification of direct perception neural networks, which take camera images as inputs and produce high-level features for autonomous vehicles to make control decisions. Formal verification of direct perception neural networks is extremely challenging, as it is difficult to formulate the specification that requires characterizing input conditions, while the number of neurons in such a network can reach millions. We approach the specification problem by learning an input property characterizer which carefully extends a direct perception neural network at close-to-output layers, and address the scalability problem by only analyzing networks starting from shared neurons without losing soundness. The presented workflow is used to understand a direct perception neural network (developed by Audi) which computes the next waypoint and orientation for autonomous vehicles to follow.

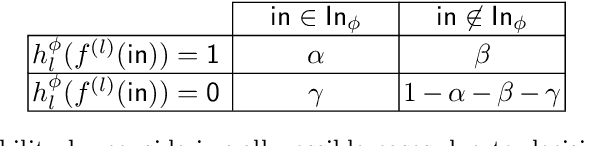

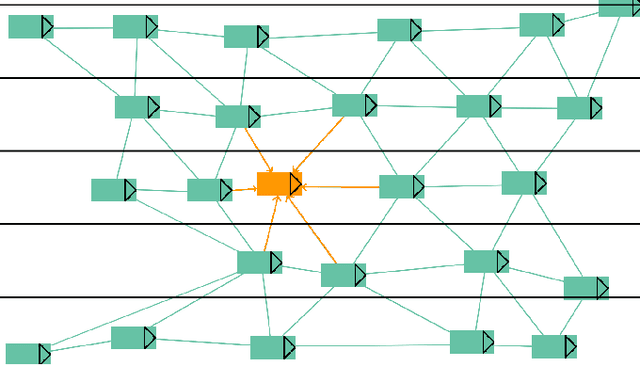

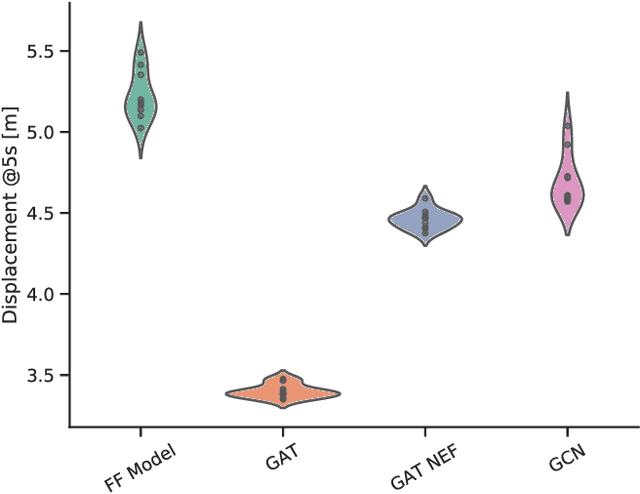

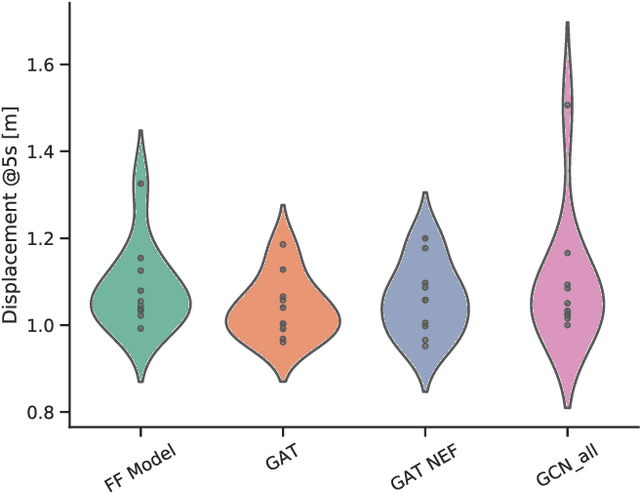

Graph Neural Networks for Modelling Traffic Participant Interaction

Mar 04, 2019

Abstract: By interpreting a traffic scene as a graph of interacting vehicles, we gain a flexible abstract representation which allows us to apply Graph Neural Network (GNN) models for traffic prediction. These naturally take interaction between traffic participants into account while being computationally efficient and providing large model capacity. We evaluate two state-of-the-art GNN architectures and introduce several adaptations for our specific scenario. We show that prediction error in scenarios with much interaction decreases by 30% compared to a model that does not take interactions into account. This suggests a graph interpretation of interacting traffic participants is a worthwhile addition to traffic prediction systems.
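To make the graph interpretation concrete, here is a minimal, untrained sketch of turning a traffic scene into a graph and running one round of neighbor aggregation. It is not one of the GNN architectures evaluated in the paper: the distance-based edge rule, the `radius` parameter, and the averaging update are assumptions chosen for simplicity.

```python
import math
from typing import Dict, List, Sequence, Tuple


def build_edges(positions: Sequence[Tuple[float, float]], radius: float) -> Dict[int, List[int]]:
    """Connect every pair of vehicles that are at most `radius` apart."""
    edges: Dict[int, List[int]] = {i: [] for i in range(len(positions))}
    for i, p in enumerate(positions):
        for j, q in enumerate(positions):
            if i != j and math.dist(p, q) <= radius:
                edges[i].append(j)
    return edges


def aggregate(features: Sequence[Sequence[float]], edges: Dict[int, List[int]]) -> List[List[float]]:
    """One message-passing round: mix each node with the mean of its neighbors."""
    updated = []
    for i, own in enumerate(features):
        neighbors = edges[i]
        if not neighbors:
            updated.append(list(own))  # isolated vehicle keeps its own state
            continue
        mean = [
            sum(features[j][k] for j in neighbors) / len(neighbors)
            for k in range(len(own))
        ]
        updated.append([(a + b) / 2 for a, b in zip(own, mean)])
    return updated


# Three vehicles on a road; the third is too far away to interact.
positions = [(0.0, 0.0), (5.0, 0.0), (100.0, 0.0)]
speeds = [[10.0], [20.0], [30.0]]
edges = build_edges(positions, radius=10.0)
mixed = aggregate(speeds, edges)
```

A learned GNN would replace the fixed averaging with trainable message and update functions, but the structure is the same: a vehicle's representation depends on the vehicles it is connected to.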

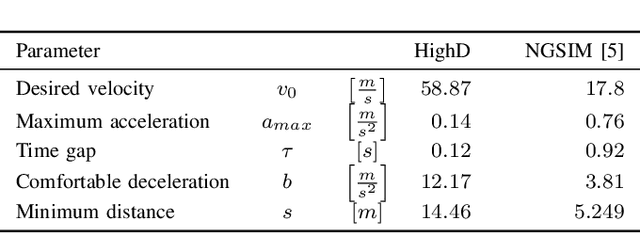

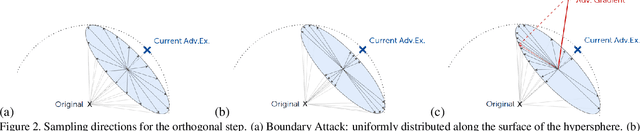

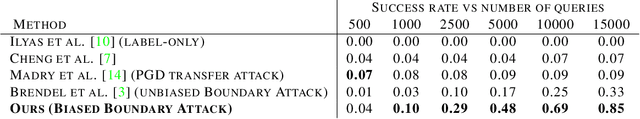

Guessing Smart: Biased Sampling for Efficient Black-Box Adversarial Attacks

Dec 24, 2018

Abstract: We consider adversarial examples in the black-box decision-based scenario. Here, an attacker has access to the final classification of a model, but not its parameters or softmax outputs. Most attacks for this scenario are based either on transferability, which is unreliable, or random sampling, which is extremely slow. Focusing on the latter, we propose to improve sampling-based attacks with prior beliefs about the target domain. We identify two such priors, image frequency and surrogate gradients, and discuss how to integrate them into a unified sampling procedure. We then formulate the Biased Boundary Attack, which achieves a drastic speedup over the original Boundary Attack. Finally, we demonstrate that our approach outperforms most state-of-the-art attacks in a query-limited scenario and is especially effective at breaking strong defenses: Our submission scored second place in the targeted attack track of the NeurIPS 2018 Adversarial Vision Challenge.
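The two priors named in this abstract can be combined in a simple way, sketched below under stated assumptions. Block-upsampled Gaussian noise stands in for a low-frequency prior, and a linear blend with a surrogate gradient stands in for the unified sampling procedure; neither choice is taken from the paper, and `block` and `weight` are hypothetical parameters.

```python
import math
import random
from typing import List

Grid = List[List[float]]


def low_freq_noise(size: int, block: int, rng: random.Random) -> Grid:
    """Sample coarse Gaussian noise and upsample it by repeating each cell."""
    coarse = -(-size // block)  # ceiling division
    cells = [[rng.gauss(0.0, 1.0) for _ in range(coarse)] for _ in range(coarse)]
    return [[cells[r // block][c // block] for c in range(size)] for r in range(size)]


def normalize(grid: Grid) -> Grid:
    """Scale a grid to unit L2 norm (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for row in grid for x in row))
    if norm == 0.0:
        return grid
    return [[x / norm for x in row] for row in grid]


def biased_direction(size: int, block: int, surrogate_grad: Grid,
                     weight: float, rng: random.Random) -> Grid:
    """Blend low-frequency noise with a surrogate gradient direction.

    `weight` = 0 gives pure low-frequency noise; `weight` = 1 follows the
    surrogate gradient exactly.
    """
    noise = normalize(low_freq_noise(size, block, rng))
    grad = normalize(surrogate_grad)
    mixed = [
        [(1.0 - weight) * n + weight * g for n, g in zip(noise_row, grad_row)]
        for noise_row, grad_row in zip(noise, grad)
    ]
    return normalize(mixed)


rng = random.Random(0)
grad = [[1.0] * 8 for _ in range(8)]  # placeholder surrogate gradient
direction = biased_direction(8, 4, grad, weight=0.5, rng=rng)
```

In a sampling-based attack, a direction like this would replace the isotropic random perturbation proposed at each step, so that more candidates land in regions the priors consider promising.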
