Guessing Smart: Biased Sampling for Efficient Black-Box Adversarial Attacks


Dec 24, 2018

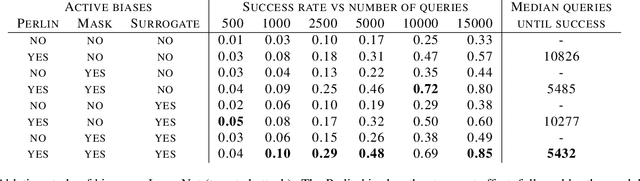

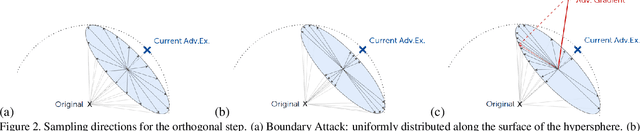

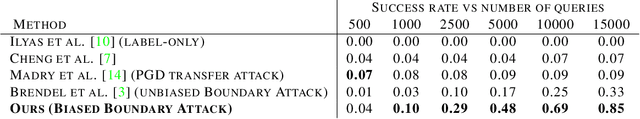

We consider adversarial examples in the black-box decision-based scenario. Here, an attacker has access to the final classification of a model, but not its parameters or softmax outputs. Most attacks for this scenario are based either on transferability, which is unreliable, or random sampling, which is extremely slow. Focusing on the latter, we propose to improve sampling-based attacks with prior beliefs about the target domain. We identify two such priors, image frequency and surrogate gradients, and discuss how to integrate them into a unified sampling procedure. We then formulate the Biased Boundary Attack, which achieves a drastic speedup over the original Boundary Attack. Finally, we demonstrate that our approach outperforms most state-of-the-art attacks in a query-limited scenario and is especially effective at breaking strong defenses: Our submission scored second place in the targeted attack track of the NeurIPS 2018 Adversarial Vision Challenge.
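The abstract does not say how the two priors are combined in a single sampling step. The sketch below shows one plausible way to draw a biased candidate direction in the style of a Boundary Attack step. It rests on several assumptions that are ours, not the paper's. Blocky upsampled Gaussian noise stands in for the image-frequency prior. The surrogate gradient is passed in by the caller, with its sign assumed to point toward the adversarial region. The two are mixed by a simple weighted sum. Both are projected onto the plane orthogonal to the direction from the current adversarial image to the original. Inputs are assumed to be 2-D (grayscale) arrays. The names `low_frequency_noise`, `biased_candidate_direction`, `bias_weight`, and `cell` are hypothetical.

```python
import numpy as np


def low_frequency_noise(shape, cell=8, rng=None):
    """Hypothetical low-frequency prior: coarse Gaussian noise upsampled by
    nearest-neighbour repetition (a stand-in, not the paper's generator)."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = shape  # assumes a 2-D (grayscale) image
    coarse = rng.standard_normal((-(-h // cell), -(-w // cell)))  # ceil division
    return np.kron(coarse, np.ones((cell, cell)))[:h, :w]


def biased_candidate_direction(x_adv, x_orig, surrogate_grad, bias_weight=0.5, rng=None):
    """Return a unit direction orthogonal to (x_orig - x_adv), built from a weighted
    mix of low-frequency noise and a surrogate-model gradient (both assumed priors)."""
    to_orig = (x_orig - x_adv).astype(float).ravel()
    to_orig = to_orig / np.linalg.norm(to_orig)

    def project_orthogonal(v):
        # Remove the component along to_orig, then rescale to unit length.
        v = np.asarray(v, dtype=float).ravel()
        v = v - np.dot(v, to_orig) * to_orig
        return v / np.linalg.norm(v)

    noise = project_orthogonal(low_frequency_noise(x_adv.shape, rng=rng))
    grad = project_orthogonal(surrogate_grad)
    mixed = (1.0 - bias_weight) * noise + bias_weight * grad
    # The mix is already orthogonal; this call mainly renormalizes it.
    return project_orthogonal(mixed).reshape(x_adv.shape)


# Usage with random stand-in data (no real model involved).
rng = np.random.default_rng(0)
x_orig = rng.random((32, 32))
x_adv = rng.random((32, 32))
g = rng.standard_normal((32, 32))
d = biased_candidate_direction(x_adv, x_orig, g, rng=rng)
print(round(float(np.linalg.norm(d)), 6))  # 1.0
```

Setting `bias_weight=0` recovers purely noise-driven sampling, and values closer to 1 lean more heavily on the surrogate gradient. In an attack loop, a candidate would then be formed by stepping from `x_adv` along `d` and toward `x_orig`, and the target model's decision would be queried to accept or reject it.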
