Hirotoshi Yasuoka

The missing link: Developing a safety case for perception components in automated driving

Aug 30, 2021

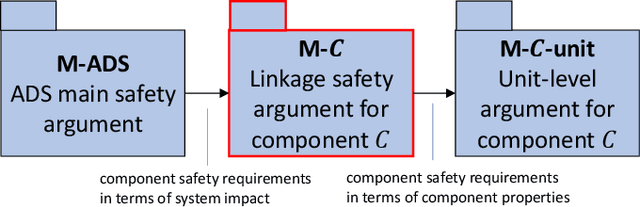

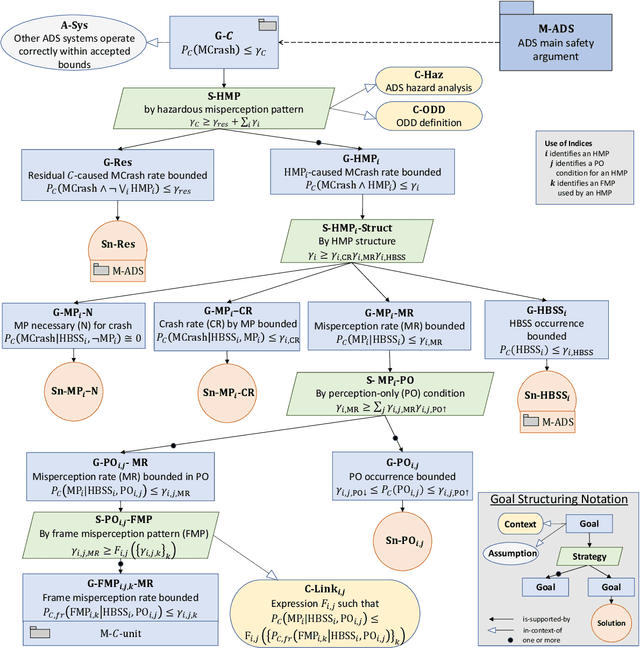

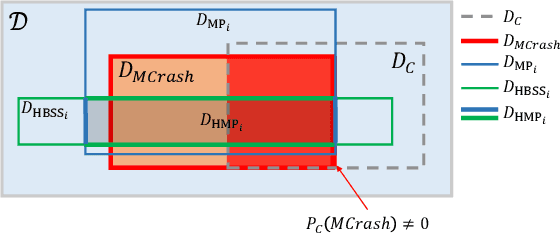

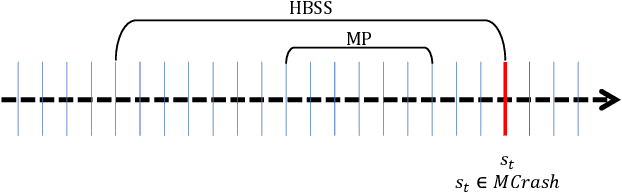

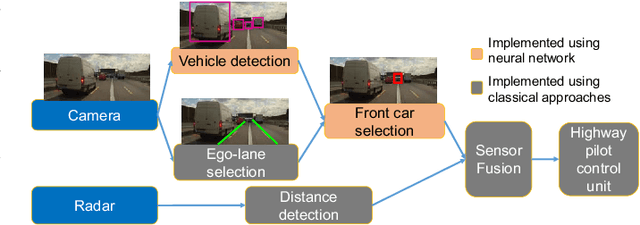

Abstract:Safety assurance is a central concern for the development and societal acceptance of automated driving (AD) systems. Perception is a key aspect of AD that relies heavily on Machine Learning (ML). Despite the known challenges with the safety assurance of ML-based components, proposals have recently emerged for unit-level safety cases addressing these components. Unfortunately, AD safety cases express safety requirements at the system-level and these efforts are missing the critical linking argument connecting safety requirements at the system-level to component performance requirements at the unit-level. In this paper, we propose a generic template for such a linking argument specifically tailored for perception components. The template takes a deductive and formal approach to define strong traceability between levels. We demonstrate the applicability of the template with a detailed case study and discuss its use as a tool to support incremental development of perception components.

Open Problems in Engineering Machine Learning Systems and the Quality Model

Apr 01, 2019

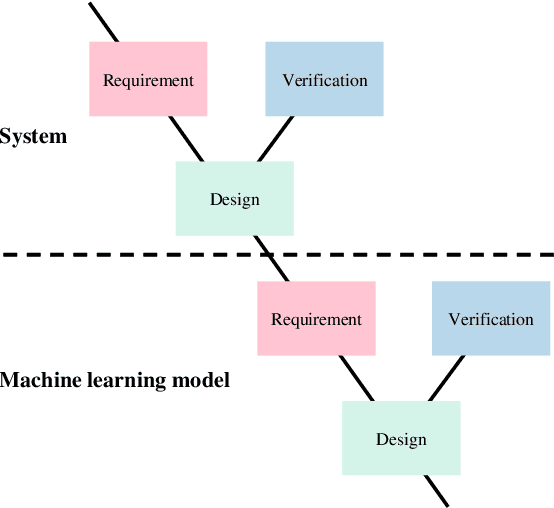

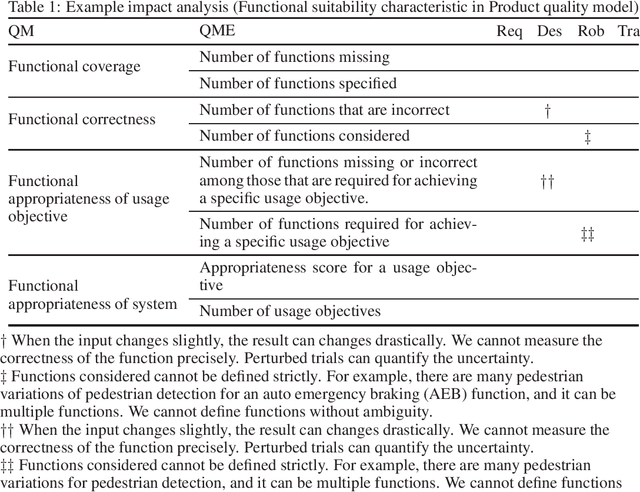

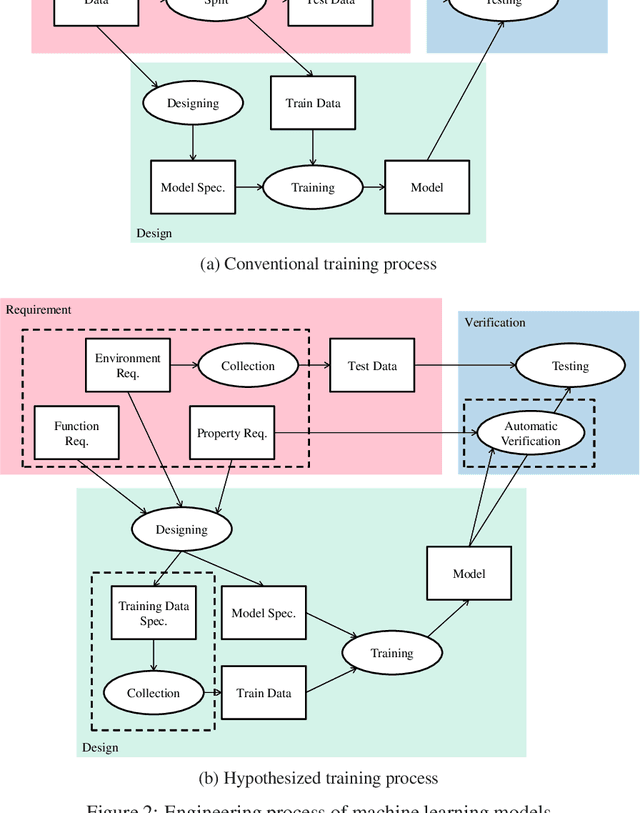

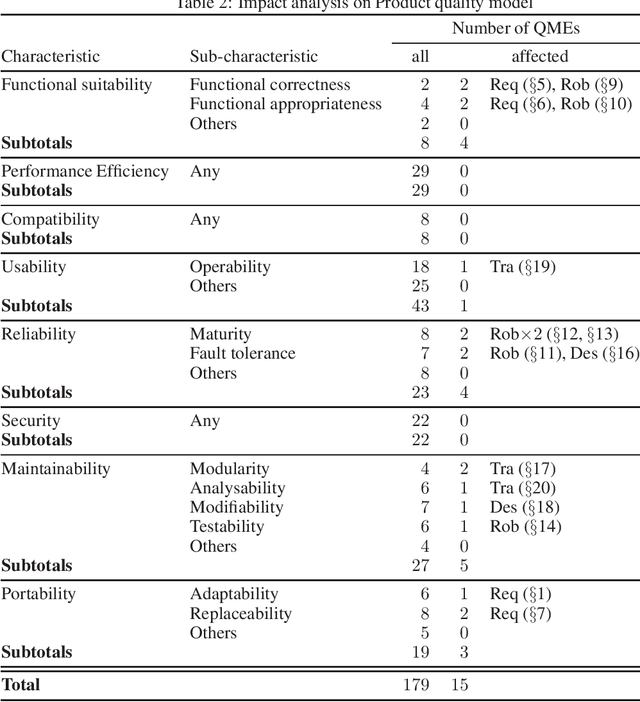

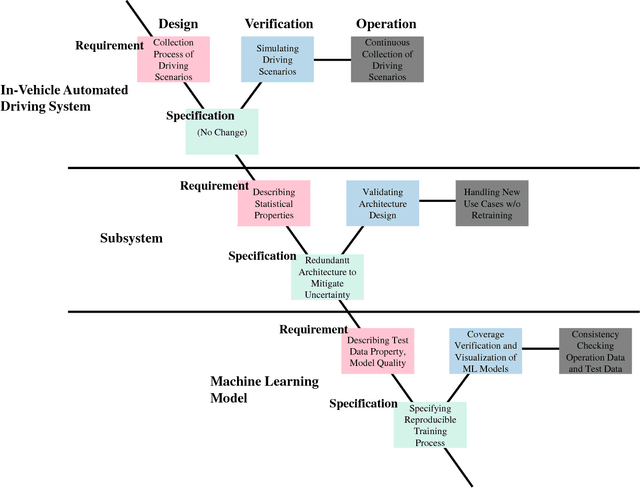

Abstract:Fatal accidents are a major issue hindering the wide acceptance of safety-critical systems that use machine learning and deep learning models, such as automated driving vehicles. To use machine learning in a safety-critical system, it is necessary to demonstrate the safety and security of the system to society through the engineering process. However, there have been no such total concepts or frameworks established for these systems that have been widely accepted, and needs or open problems are not organized in a way researchers can select a theme and work on. The key to using a machine learning model in a deductively engineered system, developed in a rigorous development lifecycle consisting of requirement, design, and verification, cf. V-Model, is decomposing the data-driven training of machine-learning models into requirement, design, and verification, especially for machine learning models used in safety-critical systems. In this study, we identify, classify, and explore the open problems in engineering (safety-critical) machine learning systems, i.e., requirement, design, and verification of machine learning models and systems, as well as related works and research directions, using automated driving vehicles as an example. We also discuss the introduction of machine-learning models into a conventional system quality model such as SQuARE to study the quality model for machine learning systems.

Open Problems in Engineering and Quality Assurance of Safety Critical Machine Learning Systems

Dec 07, 2018

Abstract:Fatal accidents are a major issue hindering the wide acceptance of safety-critical systems using machine-learning and deep-learning models, such as automated-driving vehicles. Quality assurance frameworks are required for such machine learning systems, but there are no widely accepted and established quality-assurance concepts and techniques. At the same time, open problems and the relevant technical fields are not organized. To establish standard quality assurance frameworks, it is necessary to visualize and organize these open problems in an interdisciplinary way, so that the experts from many different technical fields may discuss these problems in depth and develop solutions. In the present study, we identify, classify, and explore the open problems in quality assurance of safety-critical machine-learning systems, and their relevant corresponding industry and technological trends, using automated-driving vehicles as an example. Our results show that addressing these open problems requires incorporating knowledge from several different technological and industrial fields, including the automobile industry, statistics, software engineering, and machine learning.

Runtime Monitoring Neuron Activation Patterns

Sep 19, 2018

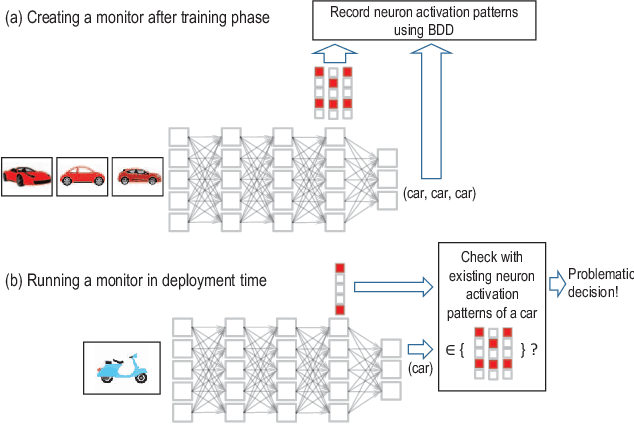

Abstract:For using neural networks in safety critical domains, it is important to know if a decision made by a neural network is supported by prior similarities in training. We propose runtime neuron activation pattern monitoring - after the standard training process, one creates a monitor by feeding the training data to the network again in order to store the neuron activation patterns in abstract form. In operation, a classification decision over an input is further supplemented by examining if a pattern similar (measured by Hamming distance) to the generated pattern is contained in the monitor. If the monitor does not contain any pattern similar to the generated pattern, it raises a warning that the decision is not based on the training data. Our experiments show that, by adjusting the similarity-threshold for activation patterns, the monitors can report a significant portion of misclassfications to be not supported by training with a small false-positive rate, when evaluated on a test set.

Towards Dependability Metrics for Neural Networks

Jun 08, 2018

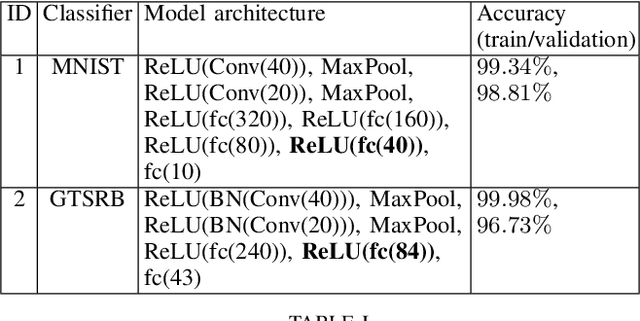

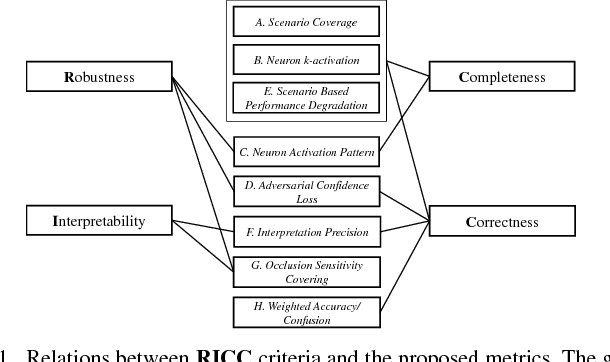

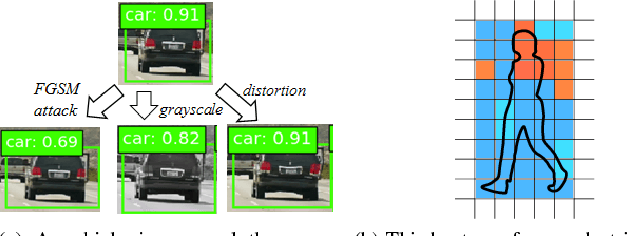

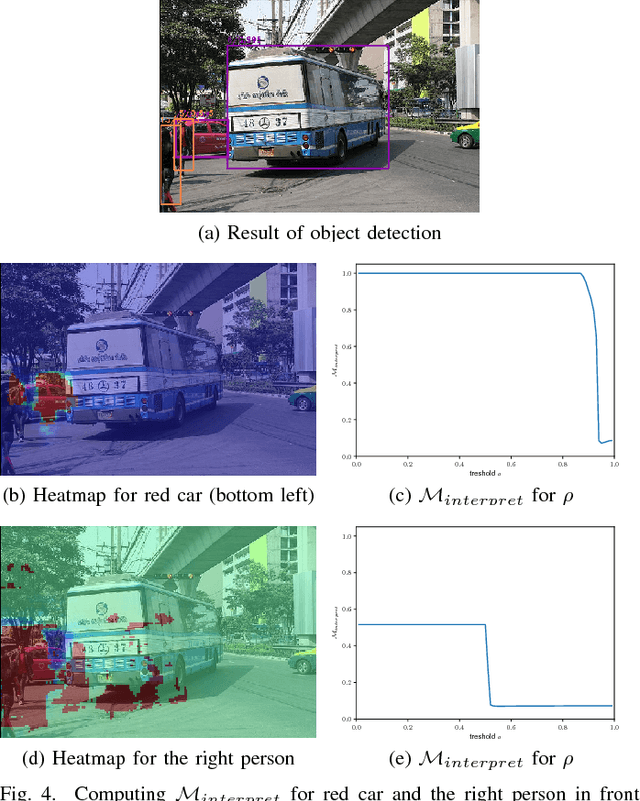

Abstract:Artificial neural networks (NN) are instrumental in realizing highly-automated driving functionality. An overarching challenge is to identify best safety engineering practices for NN and other learning-enabled components. In particular, there is an urgent need for an adequate set of metrics for measuring all-important NN dependability attributes. We address this challenge by proposing a number of NN-specific and efficiently computable metrics for measuring NN dependability attributes including robustness, interpretability, completeness, and correctness.

Quantitative Projection Coverage for Testing ML-enabled Autonomous Systems

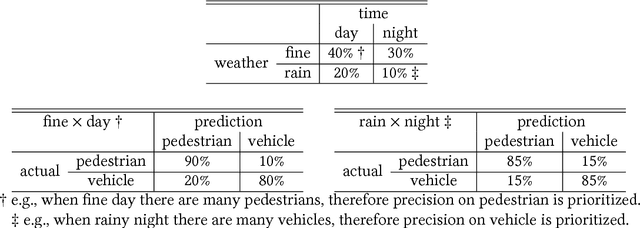

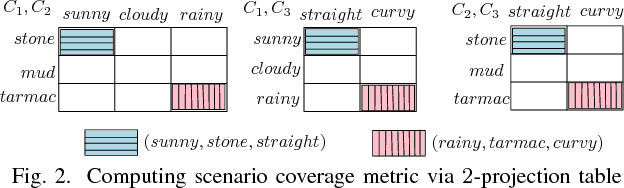

May 11, 2018

Abstract:Systematically testing models learned from neural networks remains a crucial unsolved barrier to successfully justify safety for autonomous vehicles engineered using data-driven approach. We propose quantitative k-projection coverage as a metric to mediate combinatorial explosion while guiding the data sampling process. By assuming that domain experts propose largely independent environment conditions and by associating elements in each condition with weights, the product of these conditions forms scenarios, and one may interpret weights associated with each equivalence class as relative importance. Achieving full k-projection coverage requires that the data set, when being projected to the hyperplane formed by arbitrarily selected k-conditions, covers each class with number of data points no less than the associated weight. For the general case where scenario composition is constrained by rules, precisely computing k-projection coverage remains in NP. In terms of finding minimum test cases to achieve full coverage, we present theoretical complexity for important sub-cases and an encoding to 0-1 integer programming. We have implemented a research prototype that generates test cases for a visual object defection unit in automated driving, demonstrating the technological feasibility of our proposed coverage criterion.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge