Hiroshi Kuwajima

STEAM & MoSAFE: SOTIF Error-and-Failure Model & Analysis for AI-Enabled Driving Automation

Dec 15, 2023

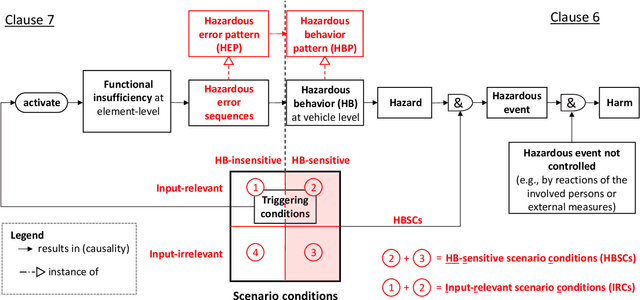

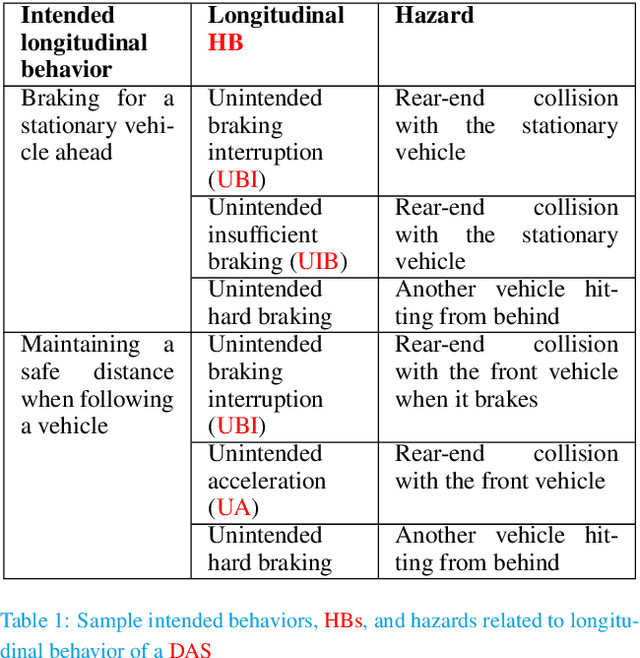

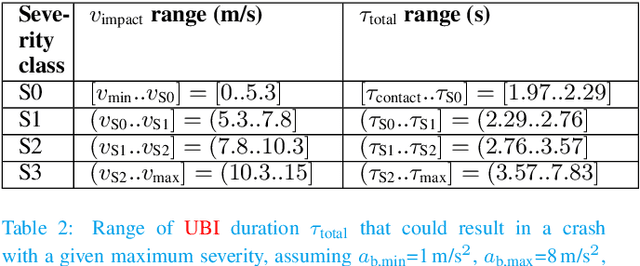

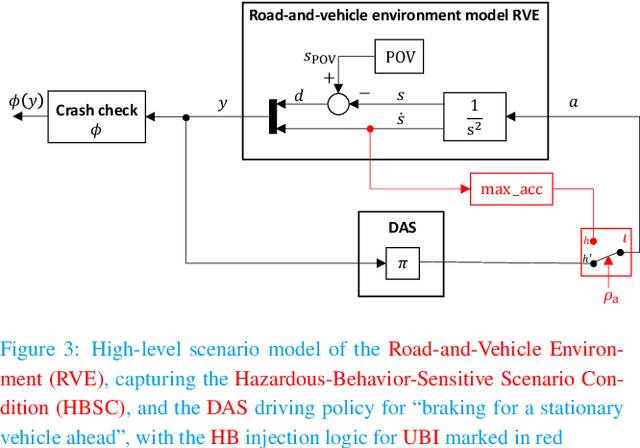

Abstract: Driving Automation Systems (DAS) are subject to complex road environments and vehicle behaviors and increasingly rely on sophisticated sensors and Artificial Intelligence (AI). These properties give rise to unique safety faults stemming from specification insufficiencies and technological performance limitations, where sensors and AI introduce errors that vary in magnitude and temporal patterns, posing potential safety risks. The Safety of the Intended Functionality (SOTIF) standard emerges as a promising framework for addressing these concerns, focusing on scenario-based analysis to identify hazardous behaviors and their causes. Although the current standard provides a basic cause-and-effect model and high-level process guidance, it lacks concepts required to identify and evaluate hazardous errors, especially within the context of AI. This paper introduces two key contributions to bridge this gap. First, it defines the SOTIF Temporal Error and Failure Model (STEAM) as a refinement of the SOTIF cause-and-effect model, offering a comprehensive system-design perspective. STEAM refines error definitions, introduces error sequences, and classifies them as error sequence patterns, providing particular relevance to systems employing advanced sensors and AI. Second, this paper proposes the Model-based SOTIF Analysis of Failures and Errors (MoSAFE) method, which allows instantiating STEAM based on system-design models by deriving hazardous error sequence patterns at module level from hazardous behaviors at vehicle level via weakest precondition reasoning. Finally, the paper presents a case study centered on an automated speed-control feature, illustrating the practical applicability of the refined model and the MoSAFE method in addressing complex safety challenges in DAS.

The missing link: Developing a safety case for perception components in automated driving

Aug 30, 2021

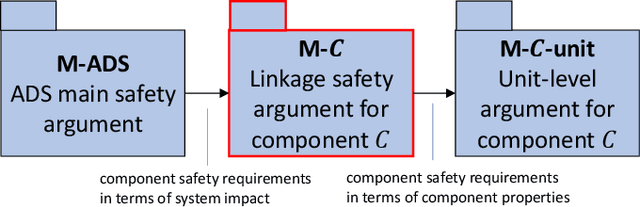

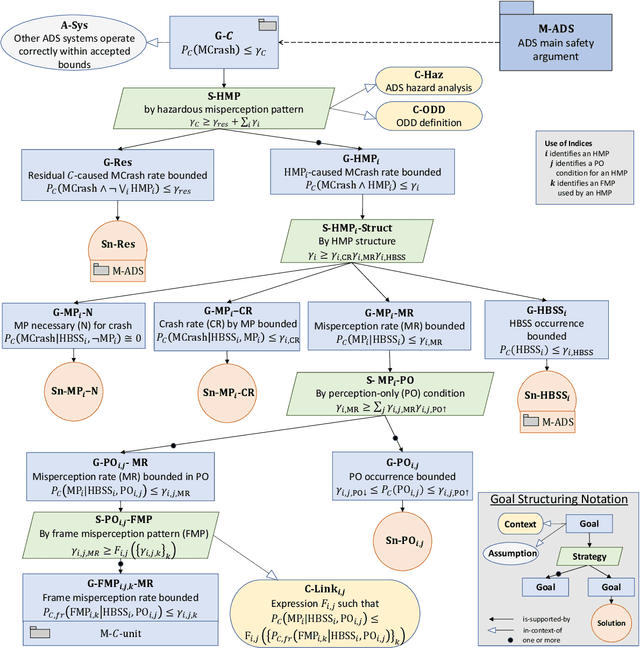

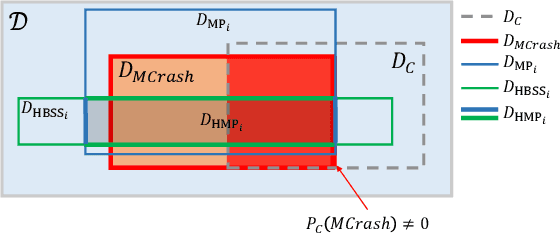

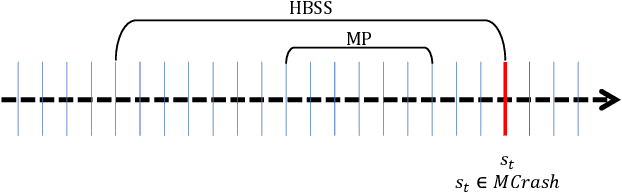

Abstract: Safety assurance is a central concern for the development and societal acceptance of automated driving (AD) systems. Perception is a key aspect of AD that relies heavily on Machine Learning (ML). Despite the known challenges with the safety assurance of ML-based components, proposals have recently emerged for unit-level safety cases addressing these components. Unfortunately, AD safety cases express safety requirements at the system level, and these efforts are missing the critical linking argument connecting safety requirements at the system level to component performance requirements at the unit level. In this paper, we propose a generic template for such a linking argument specifically tailored for perception components. The template takes a deductive and formal approach to define strong traceability between levels. We demonstrate the applicability of the template with a detailed case study and discuss its use as a tool to support incremental development of perception components.

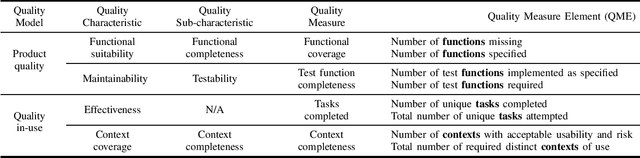

Adapting SQuaRE for Quality Assessment of Artificial Intelligence Systems

Jul 31, 2019

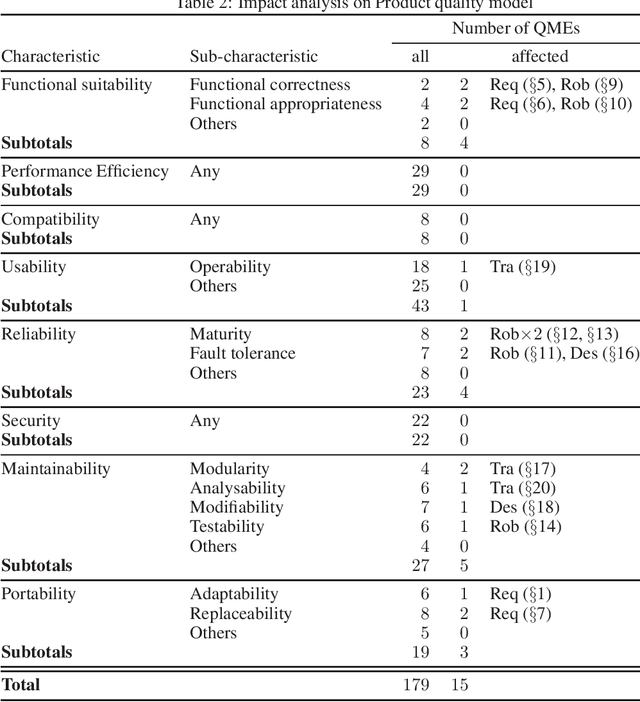

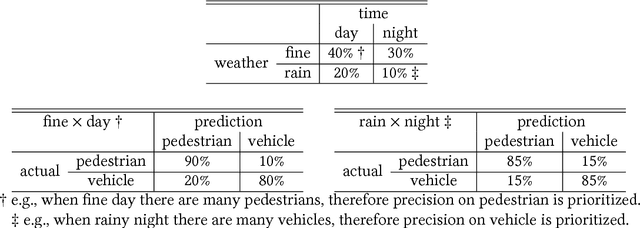

Abstract: More and more software practitioners are working toward industrial applications of artificial intelligence (AI) systems, especially those based on machine learning (ML). However, many existing principles and approaches for traditional systems do not work effectively for system behavior obtained by training rather than by logical design. In addition, unique kinds of requirements are emerging, such as fairness and explainability. To provide clear guidance for understanding and tackling these difficulties, we present an analysis of which quality concepts should be evaluated for AI systems. We base our discussion on the ISO/IEC 25000 series, known as SQuaRE, and identify how it should be adapted for the unique nature of ML and the $\textit{Ethics guidelines for trustworthy AI}$ from the European Commission. We thus provide holistic insights into the quality of AI systems by incorporating the nature of ML and AI ethics into traditional software quality concepts.

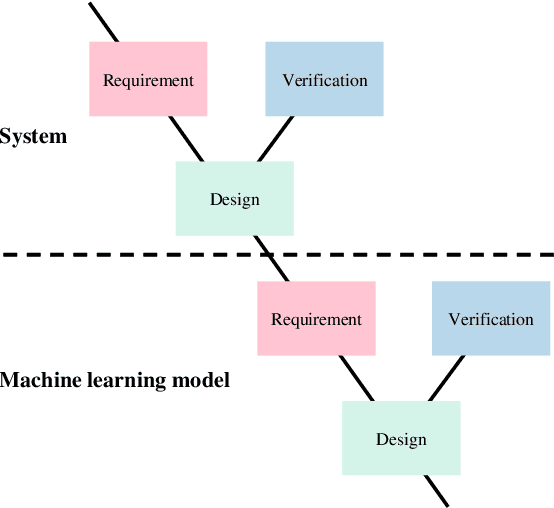

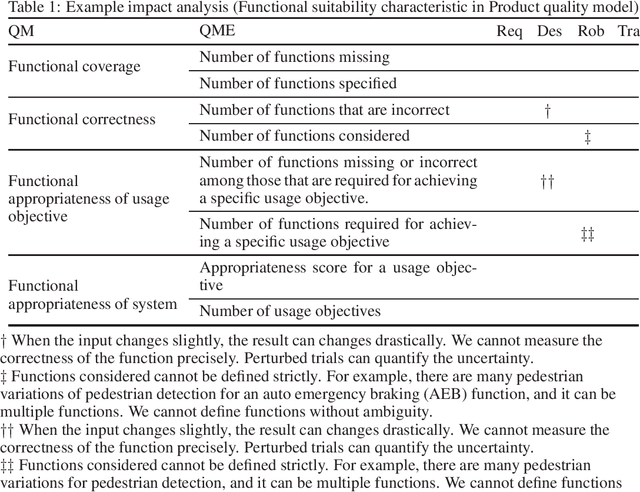

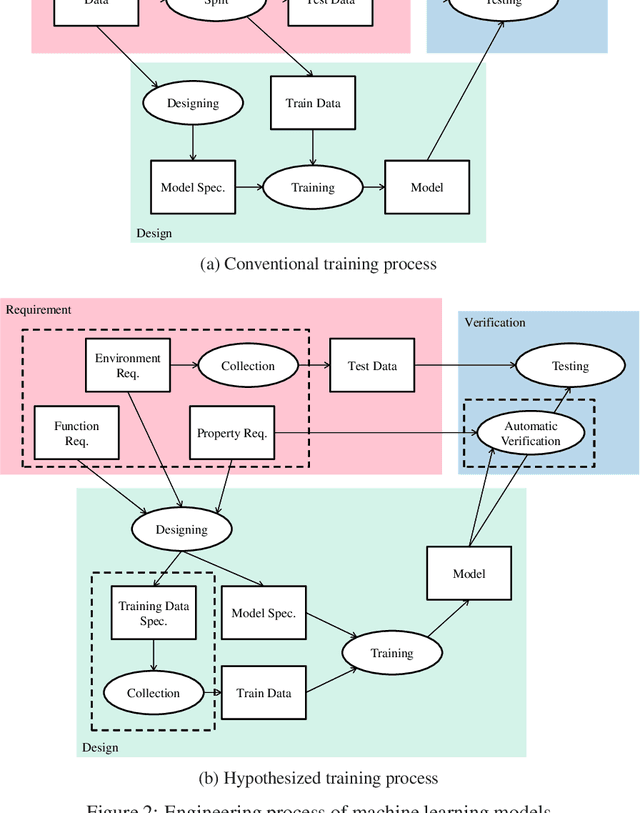

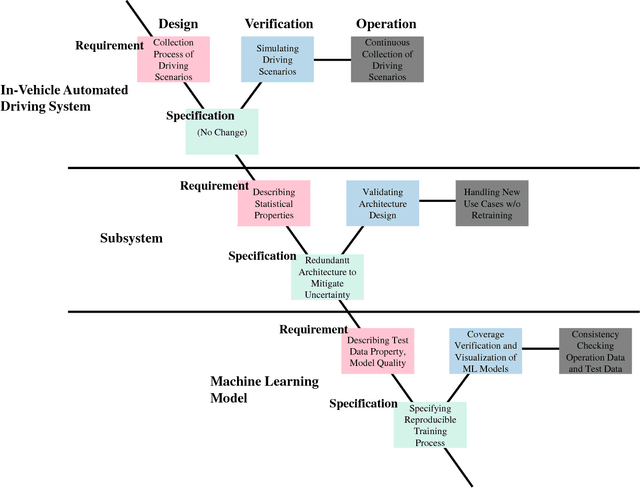

Open Problems in Engineering Machine Learning Systems and the Quality Model

Apr 01, 2019

Abstract: Fatal accidents are a major issue hindering the wide acceptance of safety-critical systems that use machine learning and deep learning models, such as automated driving vehicles. To use machine learning in a safety-critical system, it is necessary to demonstrate the safety and security of the system to society through the engineering process. However, no comprehensive concepts or frameworks for these systems have been established and widely accepted, and the needs and open problems are not organized in a way that lets researchers select a theme and work on it. The key to using a machine learning model in a deductively engineered system, developed in a rigorous development lifecycle consisting of requirement, design, and verification (cf. the V-Model), is decomposing the data-driven training of machine learning models into requirement, design, and verification, especially for machine learning models used in safety-critical systems. In this study, we identify, classify, and explore the open problems in engineering (safety-critical) machine learning systems, i.e., requirement, design, and verification of machine learning models and systems, as well as related works and research directions, using automated driving vehicles as an example. We also discuss the introduction of machine learning models into a conventional system quality model such as SQuaRE to study the quality model for machine learning systems.

Improving Transparency of Deep Neural Inference Process

Mar 13, 2019

Abstract: Deep learning techniques have advanced rapidly in recent years and are becoming a necessary component of widely deployed systems. However, the inference process of deep learning is a black box and is not well suited to safety-critical systems, which must exhibit high transparency. In this paper, to address this black-box limitation, we develop a simple analysis method that consists of 1) structural feature analysis: listing the features that contribute to the inference process, 2) linguistic feature analysis: listing the natural language labels that describe the visual attributes of each feature contributing to the inference process, and 3) consistency analysis: measuring consistency among the input data, the inference (label), and the results of our structural and linguistic feature analysis. Our analysis is kept simple to reflect the actual inference process for high transparency, and it does not include any additional black-box mechanisms, such as an LSTM, for producing highly human-readable results. We conduct experiments and discuss the results of our analysis qualitatively and quantitatively, and we believe that our work improves the transparency of neural networks. In an evaluation through 12,800 human tasks, 75% of workers answered that the input data and the result of our feature analysis are consistent, and 70% of workers answered that the inference (label) and the result of our feature analysis are consistent. In addition to evaluating the proposed analysis, we find that our analysis also provides suggestions, or possible next actions, such as expanding neural network complexity or collecting training data to improve a neural network.

Open Problems in Engineering and Quality Assurance of Safety Critical Machine Learning Systems

Dec 07, 2018

Abstract: Fatal accidents are a major issue hindering the wide acceptance of safety-critical systems using machine-learning and deep-learning models, such as automated-driving vehicles. Quality assurance frameworks are required for such machine learning systems, but there are no widely accepted and established quality-assurance concepts and techniques. At the same time, open problems and the relevant technical fields are not organized. To establish standard quality assurance frameworks, it is necessary to visualize and organize these open problems in an interdisciplinary way, so that the experts from many different technical fields may discuss these problems in depth and develop solutions. In the present study, we identify, classify, and explore the open problems in quality assurance of safety-critical machine-learning systems, and their relevant corresponding industry and technological trends, using automated-driving vehicles as an example. Our results show that addressing these open problems requires incorporating knowledge from several different technological and industrial fields, including the automobile industry, statistics, software engineering, and machine learning.

Network Analysis for Explanation

Dec 07, 2017

Abstract: Safety-critical systems place strong requirements on quality aspects of artificial intelligence, including explainability. In this paper, we analyze a trained network to extract the features that mainly contribute to the inference. Based on this analysis, we develop a simple solution for generating explanations of the inference process.
