Rick Salay

Out-of-Distribution Detection for LiDAR-based 3D Object Detection

Sep 28, 2022

Abstract: 3D object detection is an essential part of automated driving, and deep neural networks (DNNs) have achieved state-of-the-art performance for this task. However, deep models are notorious for assigning high confidence scores to out-of-distribution (OOD) inputs, that is, inputs that are not drawn from the training distribution. Detecting OOD inputs is challenging and essential for the safe deployment of models. OOD detection has been studied extensively for the classification task, but it has not received enough attention for the object detection task, specifically LiDAR-based 3D object detection. In this paper, we focus on the detection of OOD inputs for LiDAR-based 3D object detection. We formulate what OOD inputs mean for object detection and propose to adapt several OOD detection methods for object detection. We accomplish this with our proposed feature extraction method. To evaluate OOD detection methods, we develop a simple but effective technique of generating OOD objects for a given object detection model. Our evaluation based on the KITTI dataset shows that different OOD detection methods have biases toward detecting specific OOD objects. This emphasizes the importance of combining OOD detection methods and of more research in this direction.

A Safety Assurable Human-Inspired Perception Architecture

May 10, 2022

Abstract: Although artificial intelligence-based perception (AIP) using deep neural networks (DNNs) has achieved near-human-level performance, its well-known limitations are obstacles to the safety assurance needed in autonomous applications. These include vulnerability to adversarial inputs, inability to handle novel inputs, and non-interpretability. While research in addressing these limitations is active, in this paper, we argue that a fundamentally different approach is needed to address them. Inspired by dual process models of human cognition, where Type 1 thinking is fast and non-conscious while Type 2 thinking is slow and based on conscious reasoning, we propose a dual process architecture for safe AIP. We review research on how humans address the simplest non-trivial perception problem, image classification, and sketch a corresponding AIP architecture for this task. We argue that this architecture can provide a systematic way of addressing the limitations of AIP using DNNs and an approach to assurance of human-level performance and beyond. We conclude by discussing what components of the architecture may already be addressed by existing work and what remains future work.

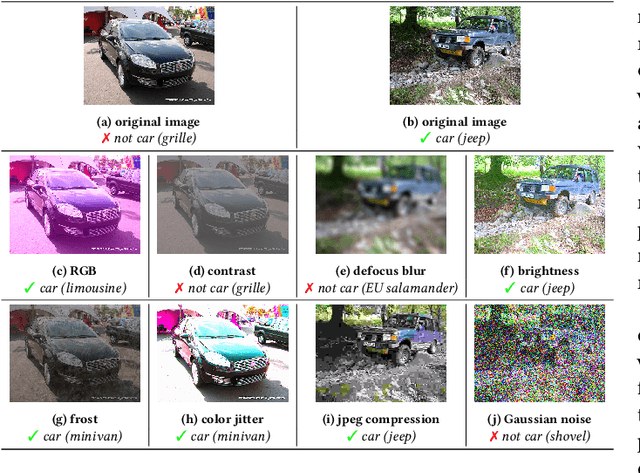

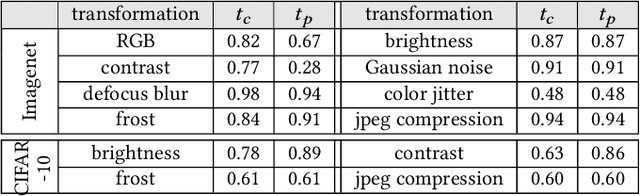

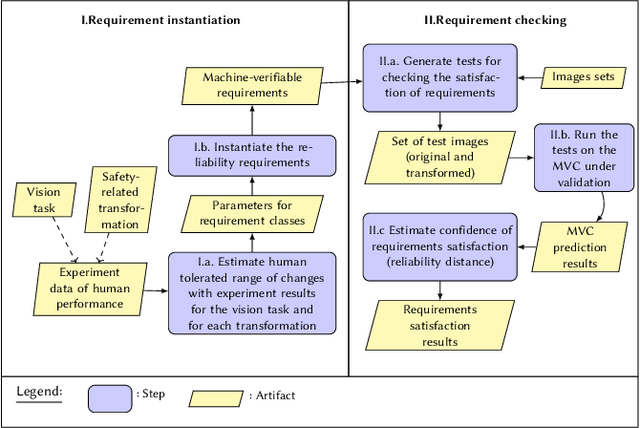

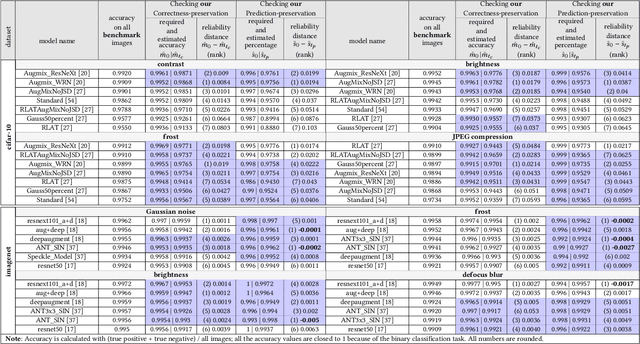

If a Human Can See It, So Should Your System: Reliability Requirements for Machine Vision Components

Feb 08, 2022

Abstract: Machine Vision Components (MVCs) are becoming safety-critical. Assuring their quality, including safety, is essential for their successful deployment. Assurance relies on the availability of precisely specified and, ideally, machine-verifiable requirements. MVCs with state-of-the-art performance rely on machine learning (ML) and training data but largely lack such requirements. In this paper, we address the need for defining machine-verifiable reliability requirements for MVCs against transformations that simulate the full range of realistic and safety-critical changes in the environment. Using human performance as a baseline, we define reliability requirements as: 'if the changes in an image do not affect a human's decision, neither should they affect the MVC's.' To this end, we provide: (1) a class of safety-related image transformations; (2) reliability requirement classes to specify correctness-preservation and prediction-preservation for MVCs; (3) a method to instantiate machine-verifiable requirements from these requirement classes using human performance experiment data; (4) human performance experiment data for image recognition involving eight commonly used transformations, from about 2000 human participants; and (5) a method for automatically checking whether an MVC satisfies our requirements. Further, we show that our reliability requirements are feasible and reusable by evaluating our methods on 13 state-of-the-art pre-trained image classification models. Finally, we demonstrate that our approach detects reliability gaps in MVCs that other existing methods are unable to detect.

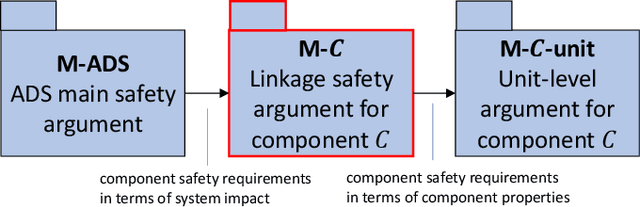

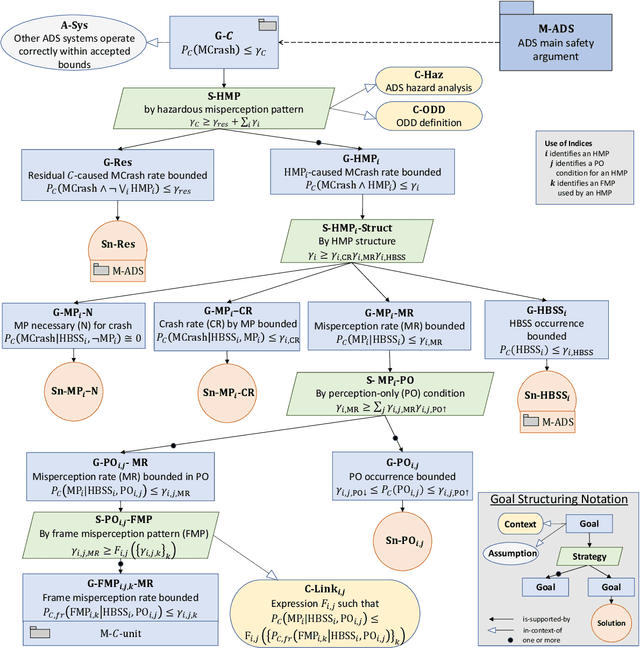

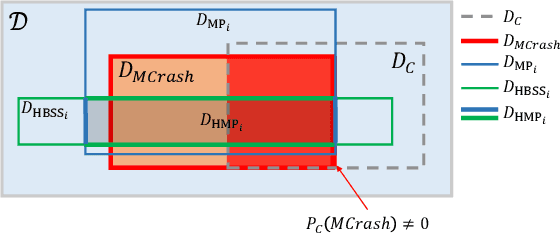

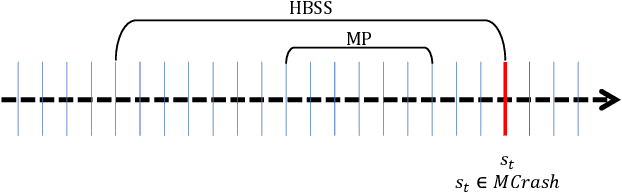

The missing link: Developing a safety case for perception components in automated driving

Aug 30, 2021

Abstract: Safety assurance is a central concern for the development and societal acceptance of automated driving (AD) systems. Perception is a key aspect of AD that relies heavily on Machine Learning (ML). Despite the known challenges with the safety assurance of ML-based components, proposals have recently emerged for unit-level safety cases addressing these components. Unfortunately, AD safety cases express safety requirements at the system level, and these efforts are missing the critical linking argument connecting safety requirements at the system level to component performance requirements at the unit level. In this paper, we propose a generic template for such a linking argument specifically tailored for perception components. The template takes a deductive and formal approach to define strong traceability between levels. We demonstrate the applicability of the template with a detailed case study and discuss its use as a tool to support incremental development of perception components.

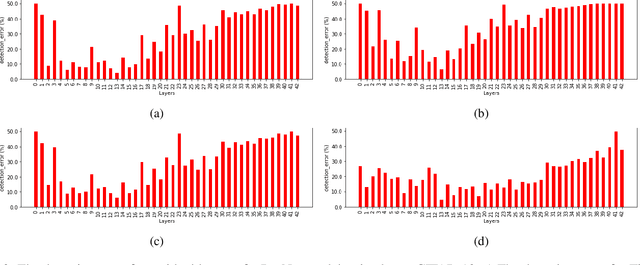

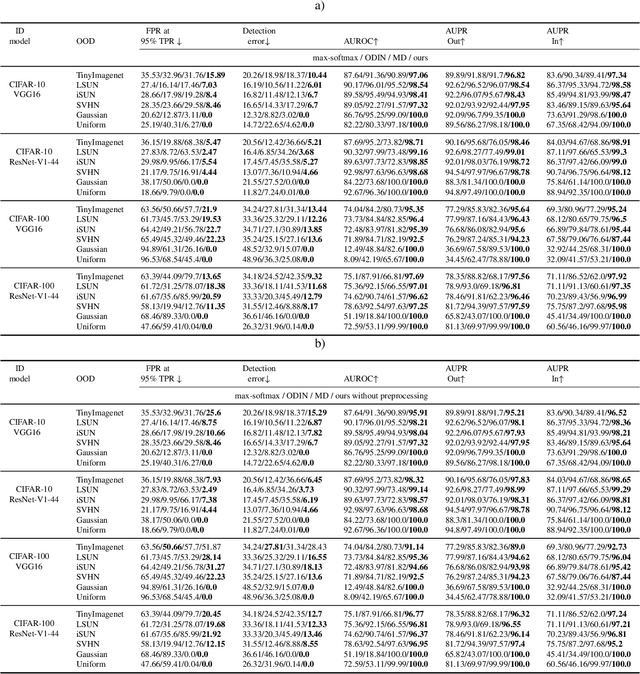

The Effect of Optimization Methods on the Robustness of Out-of-Distribution Detection Approaches

Jun 25, 2020

Abstract: Deep neural networks (DNNs) have become the de facto learning mechanism in different domains. Their tendency to perform unreliably on out-of-distribution (OOD) inputs hinders their adoption in critical domains. Several approaches have been proposed for detecting OOD inputs. However, existing approaches still lack robustness. In this paper, we shed light on the robustness of OOD detection (OODD) approaches by revealing the important role of optimization methods. We show that OODD approaches are sensitive to the type of optimization method used when training deep models. Optimization methods can provide different solutions to a non-convex problem, and so these solutions may or may not satisfy the assumptions (e.g., distributions of deep features) made by OODD approaches. Furthermore, we propose a robustness score that takes into account the role of optimization methods. This provides a sound way to compare OODD approaches. In addition to comparing several OODD approaches using our proposed robustness score, we demonstrate that some optimization methods provide better solutions for OODD approaches.

Efficacy of Pixel-Level OOD Detection for Semantic Segmentation

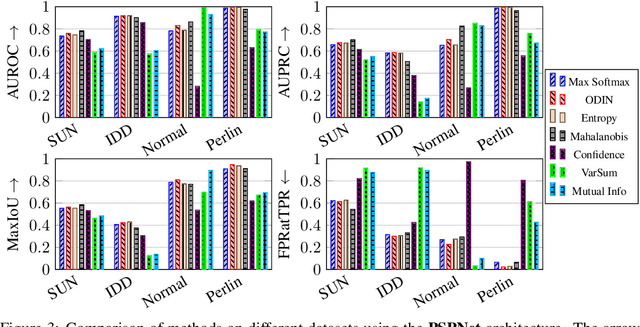

Nov 07, 2019

Abstract: The detection of out-of-distribution samples for image classification has been widely researched. Safety-critical applications, such as autonomous driving, would benefit from the ability to localise the unusual objects causing the image to be out-of-distribution. This paper adapts state-of-the-art methods for detecting out-of-distribution images for image classification to the new task of detecting out-of-distribution pixels, which can localise the unusual objects. It further experimentally compares the adapted methods on two new datasets derived from existing semantic segmentation datasets using PSPNet and DeeplabV3+ architectures, and proposes a new metric for the task. The evaluation shows that the performance ranking of the compared methods does not transfer to the new task and that every method performs significantly worse than its image-level counterpart.
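
To make the pixel-level setting concrete, here is a minimal sketch that scores every pixel of a segmentation output instead of the whole image. The abstract does not name the adapted methods, so the max-softmax baseline used here is an assumption chosen for illustration, and `pixel_ood_scores` and `logit_map` are hypothetical names.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one pixel's class logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pixel_ood_scores(logit_map):
    """Return a per-pixel OOD score: 1 - max softmax probability.

    logit_map is a nested list of shape [height][width][num_classes].
    A higher score means the segmentation network is less confident there.
    """
    return [[1.0 - max(softmax(px)) for px in row] for row in logit_map]


# Toy 1x2 "image" with 3 classes (assumed values, not real network output):
# the first pixel has one dominant class, the second is maximally ambiguous.
logit_map = [[[8.0, 0.0, 0.0], [0.0, 0.0, 0.0]]]
scores = pixel_ood_scores(logit_map)
```

Thresholding `scores` yields a binary mask that localises the suspicious region, which is the extra capability the pixel-level task provides over an image-level decision.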

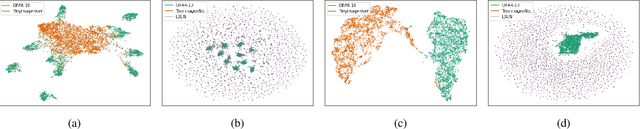

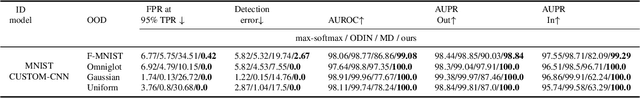

Detecting Out-of-Distribution Inputs in Deep Neural Networks Using an Early-Layer Output

Oct 23, 2019

Abstract: Deep neural networks achieve superior performance in challenging tasks such as image classification. However, deep classifiers tend to incorrectly classify out-of-distribution (OOD) inputs, which are inputs that do not belong to the classifier training distribution. Several approaches have been proposed to detect OOD inputs, but the detection task is still an ongoing challenge. In this paper, we propose a new OOD detection approach that can be easily applied to an existing classifier and does not need to have access to OOD samples. The detector is a one-class classifier trained on the output of an early layer of the original classifier fed with its original training set. We apply our approach to several low- and high-dimensional datasets and compare it to the state-of-the-art detection approaches. Our approach achieves substantially better results across multiple metrics.
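
The detector structure described in this abstract can be sketched roughly as follows. This is not the paper's implementation: the abstract does not say which one-class classifier is used, so a simple centroid-distance model stands in for it, and `early_layer`, `fit_one_class`, and `is_ood` are hypothetical names. The point is only that the detector is fitted on in-distribution features and never sees OOD samples.

```python
import math
import random


def early_layer(x):
    """Hypothetical stand-in for the output of an early layer of a classifier."""
    return [x[0] + x[1], x[0] - x[1]]


def fit_one_class(features, quantile=0.95):
    """Fit a centroid-distance one-class model on in-distribution features.

    Returns the centroid and the distance below which roughly `quantile`
    of the training features fall.
    """
    dim = len(features[0])
    centroid = [sum(f[i] for f in features) / len(features) for i in range(dim)]
    dists = sorted(math.dist(f, centroid) for f in features)
    idx = min(len(dists) - 1, int(quantile * len(dists)))
    return centroid, dists[idx]


def is_ood(feature, centroid, threshold):
    """Flag a feature vector as OOD if it lies beyond the fitted threshold."""
    return math.dist(feature, centroid) > threshold


# Synthetic in-distribution training inputs (assumed data, for illustration).
random.seed(0)
train_inputs = [(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(500)]
train_features = [early_layer(x) for x in train_inputs]
centroid, threshold = fit_one_class(train_features)

far_input_flagged = is_ood(early_layer((10.0, 10.0)), centroid, threshold)
near_input_flagged = is_ood(early_layer((0.0, 0.0)), centroid, threshold)
```

Because the detector only consumes features from the already-trained classifier, it can be attached to an existing model without retraining it, which matches the "easily applied to an existing classifier" claim.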

Out-of-distribution Detection in Classifiers via Generation

Oct 09, 2019

Abstract: By design, discriminatively trained neural network classifiers produce reliable predictions only for in-distribution samples. For their real-world deployments, detecting out-of-distribution (OOD) samples is essential. Assuming OOD to be outside the closed boundary of in-distribution, typical neural classifiers do not contain the knowledge of this boundary for OOD detection during inference. There have been recent approaches to instill this knowledge in classifiers by explicitly training the classifier with OOD samples close to the in-distribution boundary. However, these generated samples fail to cover the entire in-distribution boundary effectively, thereby resulting in a sub-optimal OOD detector. In this paper, we analyze the feasibility of such approaches by investigating the complexity of producing such "effective" OOD samples. We also propose a novel algorithm to generate such samples using a manifold learning network (e.g., variational autoencoder) and then train an $(n+1)$-class classifier for OOD detection, where the $(n+1)^{th}$ class represents the OOD samples. We compare our approach against several recent classifier-based OOD detectors on MNIST and Fashion-MNIST datasets. Overall, the proposed approach consistently performs better than the others.

Analysis of Confident-Classifiers for Out-of-distribution Detection

Apr 27, 2019

Abstract: Discriminatively trained neural classifiers can be trusted only when the input data comes from the training distribution (in-distribution). Therefore, detecting out-of-distribution (OOD) samples is very important to avoid classification errors. In the context of OOD detection for image classification, one of the recent approaches proposes training a classifier called "confident-classifier" by minimizing the standard cross-entropy loss on in-distribution samples and minimizing the KL divergence between the predictive distribution of OOD samples in the low-density regions of in-distribution and the uniform distribution (maximizing the entropy of the outputs). Thus, the samples could be detected as OOD if they have low confidence or high entropy. In this paper, we analyze this setting both theoretically and experimentally. We conclude that the resulting confident-classifier still yields arbitrarily high confidence for OOD samples far away from the in-distribution. We instead suggest training a classifier by adding an explicit "reject" class for OOD samples.
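
The confident-classifier objective described in this abstract can be written compactly as below. The notation is assumed for illustration: $P_\theta(y \mid x)$ is the classifier's predictive distribution, $P_{\text{in}}$ and $P_{\text{ood}}$ are the in-distribution and OOD sample distributions, $\mathcal{U}(y)$ is the uniform distribution over the classes, and $\beta > 0$ is a weighting hyperparameter. The abstract does not state the argument order of the KL term or the presence of a weight, so both are assumptions.

$$
\min_{\theta} \; \mathbb{E}_{(x, y) \sim P_{\text{in}}}\bigl[-\log P_\theta(y \mid x)\bigr] \; + \; \beta \, \mathbb{E}_{x \sim P_{\text{ood}}}\bigl[\mathrm{KL}\bigl(\mathcal{U}(y) \,\|\, P_\theta(y \mid x)\bigr)\bigr]
$$

The second term is zero exactly when the prediction on an OOD sample is uniform, which is the maximum-entropy output. This is why low confidence or high entropy serves as the OOD signal in this setting.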

Towards a Framework to Manage Perceptual Uncertainty for Safe Automated Driving

Mar 03, 2019

Abstract: Perception is a safety-critical function of autonomous vehicles, and machine learning (ML) plays a key role in its implementation. This position paper identifies (1) perceptual uncertainty as a performance measure used to define safety requirements and (2) its influence factors when using supervised ML. This work is a first step towards a framework for measuring and controlling the effects of these factors and supplying evidence to support claims about perceptual uncertainty.
