Maximilian Kahn

I Know You Can't See Me: Dynamic Occlusion-Aware Safety Validation of Strategic Planners for Autonomous Vehicles Using Hypergames

Sep 20, 2021

Abstract: A particular challenge for both autonomous and human driving is dealing with the risk associated with dynamic occlusion, i.e., occlusion caused by other vehicles in traffic. Based on the theory of hypergames, we develop a novel multi-agent dynamic occlusion risk (DOR) measure for assessing situational risk in dynamic occlusion scenarios. Furthermore, we present a white-box, scenario-based, accelerated safety validation framework for assessing the safety of strategic planners in autonomous vehicles (AVs). Based on evaluation over a large naturalistic database, our proposed validation method achieves a 4000% speedup compared to direct validation on naturalistic data, more diverse coverage, and the ability to generalize beyond the dataset and automatically generate commonly observed dynamic occlusion crashes in traffic.

The missing link: Developing a safety case for perception components in automated driving

Aug 30, 2021

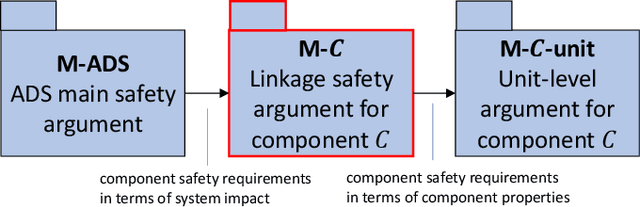

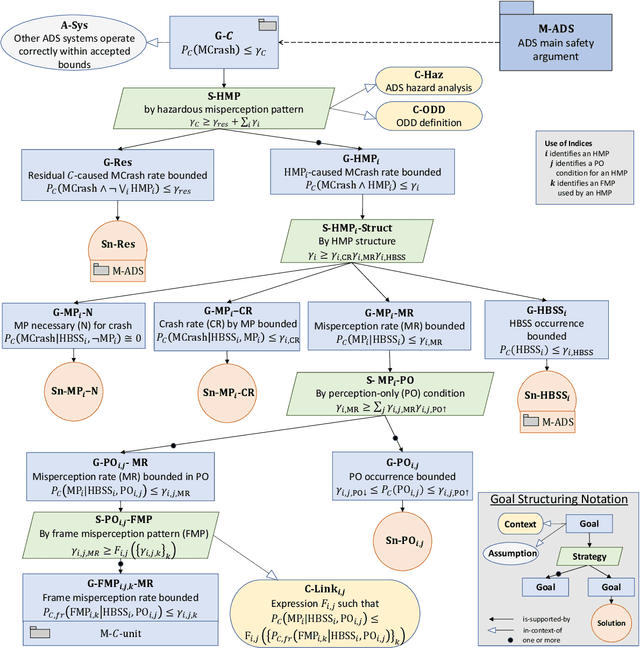

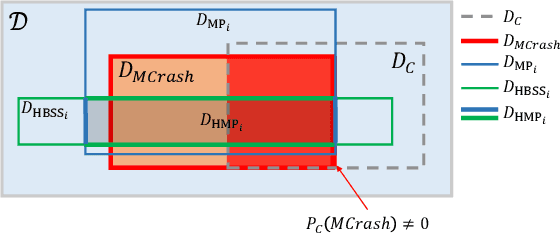

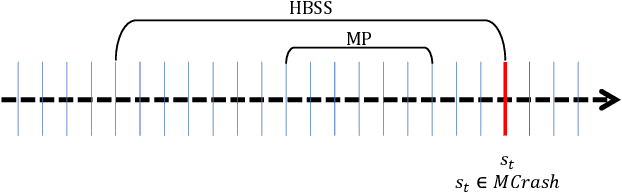

Abstract: Safety assurance is a central concern for the development and societal acceptance of automated driving (AD) systems. Perception is a key aspect of AD that relies heavily on Machine Learning (ML). Despite the known challenges with the safety assurance of ML-based components, proposals have recently emerged for unit-level safety cases addressing these components. Unfortunately, AD safety cases express safety requirements at the system level, and these efforts are missing the critical linking argument connecting safety requirements at the system level to component performance requirements at the unit level. In this paper, we propose a generic template for such a linking argument specifically tailored for perception components. The template takes a deductive and formal approach to define strong traceability between levels. We demonstrate the applicability of the template with a detailed case study and discuss its use as a tool to support incremental development of perception components.
