Fuyuki Ishikawa

Situating AI Agents in their World: Aspective Agentic AI for Dynamic Partially Observable Information Systems

Sep 03, 2025

Abstract:Agentic LLM AI agents are often little more than autonomous chatbots: actors following scripts, often controlled by an unreliable director. This work introduces a bottom-up framework that situates AI agents in their environment, with all behaviors triggered by changes in their environments. It introduces the notion of aspects, similar to the idea of umwelt, where sets of agents perceive their environment differently to each other, enabling clearer control of information. We provide an illustrative implementation and show that compared to a typical architecture, which leaks up to 83% of the time, aspective agentic AI enables zero information leakage. We anticipate that this concept of specialist agents working efficiently in their own information niches can provide improvements to both security and efficiency.

* 9 pages

FairFLRep: Fairness aware fault localization and repair of Deep Neural Networks

Aug 11, 2025

Abstract:Deep neural networks (DNNs) are being utilized in various aspects of our daily lives, including high-stakes decision-making applications that impact individuals. However, these systems reflect and amplify bias from the data used during training and testing, potentially resulting in biased behavior and inaccurate decisions. For instance, misclassification rates may differ between white and black sub-populations. Effectively and efficiently identifying and correcting biased behavior in DNNs remains a challenge. This paper introduces FairFLRep, an automated fairness-aware fault localization and repair technique that identifies and corrects potentially bias-inducing neurons in DNN classifiers. FairFLRep focuses on adjusting neuron weights associated with sensitive attributes, such as race or gender, that contribute to unfair decisions. By analyzing the input-output relationships within the network, FairFLRep corrects neurons responsible for disparities in predictive quality parity. We evaluate FairFLRep on four image classification datasets using two DNN classifiers, and four tabular datasets with a DNN model. The results show that FairFLRep consistently outperforms existing methods in improving fairness while preserving accuracy. An ablation study confirms the importance of considering fairness during both fault localization and repair stages. Our findings also show that FairFLRep is more efficient than the baseline approaches in repairing the network.
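
The disparity mentioned in the abstract, differing misclassification rates between sub-populations, can be illustrated with a minimal sketch. This is not FairFLRep's own metric or code; the function names and toy data are assumptions made only for illustration.

```python
from collections import defaultdict


def misclassification_rates(y_true, y_pred, groups):
    """Return the misclassification rate for each sub-population."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def rate_gap(rates):
    """Largest difference in misclassification rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Toy labels and predictions for two hypothetical groups "a" and "b".
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = misclassification_rates(y_true, y_pred, groups)
gap = rate_gap(rates)
```

A gap of zero would mean both groups are misclassified equally often; a fairness-aware repair would aim to shrink this gap without lowering overall accuracy.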

An Experience Report on Regression-Free Repair of Deep Neural Network Model

Mar 10, 2025

Abstract:Systems based on Deep Neural Networks (DNNs) are increasingly being used in industry. During system operation, DNNs need to be updated in order to improve their performance. When updating DNNs, systems used in companies that require high reliability must have as few regressions as possible. Since DNN updates are data-driven, it is difficult to suppress regressions in the way developers expect. This paper identifies the requirements for DNN updating in industry and presents a case study using techniques to meet those requirements. In the case study, we worked on updating models trained on car images collected at Fujitsu, with security applications in mind, without regression for a specific class. We were able to suppress regression by customizing the objective function based on NeuRecover, a DNN repair technique. Moreover, we discuss some of the challenges identified in the case study.

CLEAR: Cue Learning using Evolution for Accurate Recognition Applied to Sustainability Data Extraction

Jan 30, 2025

Abstract:Large Language Model (LLM) image recognition is a powerful tool for extracting data from images, but accuracy depends on providing sufficient cues in the prompt - requiring a domain expert for specialized tasks. We introduce Cue Learning using Evolution for Accurate Recognition (CLEAR), which uses a combination of LLMs and evolutionary computation to generate and optimize cues such that recognition of specialized features in images is improved. It achieves this by auto-generating a novel domain-specific representation and then using it to optimize suitable textual cues with a genetic algorithm. We apply CLEAR to the real-world task of identifying sustainability data from interior and exterior images of buildings. We investigate the effects of using a variable-length representation compared to fixed-length and show how LLM consistency can be improved by refactoring from categorical to real-valued estimates. We show that CLEAR enables higher accuracy compared to expert human recognition and human-authored prompts in every task with error rates improved by up to two orders of magnitude and an ablation study evincing solution concision.

SCAPE: Searching Conceptual Architecture Prompts using Evolution

Jan 31, 2024

Abstract:Conceptual architecture involves a highly creative exploration of novel ideas, often taken from other disciplines as architects consider radical new forms, materials, textures and colors for buildings. While today's generative AI systems can produce remarkable results, they lack the creativity demonstrated for decades by evolutionary algorithms. SCAPE, our proposed tool, combines evolutionary search with generative AI, enabling users to explore creative and good quality designs inspired by their initial input through a simple point and click interface. SCAPE injects randomness into generative AI, and enables memory, making use of the built-in language skills of GPT-4 to vary prompts via text-based mutation and crossover. We demonstrate that compared to DALL-E 3, SCAPE enables a 67% improvement in image novelty, plus improvements in quality and effectiveness of use; we show that in just 3 iterations SCAPE has a 24% image novelty increase enabling effective exploration, plus optimization of images by users. We use more than 20 independent architects to assess SCAPE, who provide markedly positive feedback.

Using a Variational Autoencoder to Learn Valid Search Spaces of Safely Monitored Autonomous Robots for Last-Mile Delivery

Mar 06, 2023

Abstract:The use of autonomous robots for delivery of goods to customers is an exciting new way to provide a reliable and sustainable service. However, in the real world, autonomous robots still require human supervision for safety reasons. We tackle the real-world problem of optimizing autonomous robot timings to maximize deliveries, while ensuring that there are never too many robots running simultaneously so that they can be monitored safely. We assess the use of a recent hybrid machine-learning-optimization approach COIL (constrained optimization in learned latent space) and compare it with a baseline genetic algorithm for the purposes of exploring variations of this problem. We also investigate new methods for improving the speed and efficiency of COIL. We show that only COIL can find valid solutions where appropriate numbers of robots run simultaneously for all problem variations tested. We also show that when COIL has learned its latent representation, it can optimize 10% faster than the GA, making it a good choice for daily re-optimization of robots where delivery requests for each day are allocated to robots while maintaining safe numbers of robots running at once.
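
The validity constraint described in the abstract, never too many robots running at once, can be checked with a simple sweep over start and end times. The sketch below is only an illustration of that constraint, not the paper's COIL or GA code; the interval representation, function names, and limit values are assumptions.

```python
def max_concurrent(intervals):
    """Peak number of robots running at once.

    Each interval is a (start, end) pair, treated as half-open: a robot
    finishing at time t does not overlap one starting at time t.
    """
    events = []
    for start, end in intervals:
        events.append((start, 1))   # robot starts running
        events.append((end, -1))    # robot stops running
    # Tuples sort by time, then by delta, so ends (-1) come before
    # starts (+1) at the same timestamp.
    events.sort()
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak


def is_valid_schedule(intervals, limit):
    """True if at most `limit` robots ever run simultaneously."""
    return max_concurrent(intervals) <= limit


# Hypothetical schedule: (start minute, end minute) for four robots.
schedule = [(0, 30), (10, 40), (20, 50), (40, 60)]
peak = max_concurrent(schedule)
```

An optimizer would treat `is_valid_schedule` as a hard constraint and search only among timings for which it holds.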

Goal-Aware RSS for Complex Scenarios via Program Logic

Jul 06, 2022

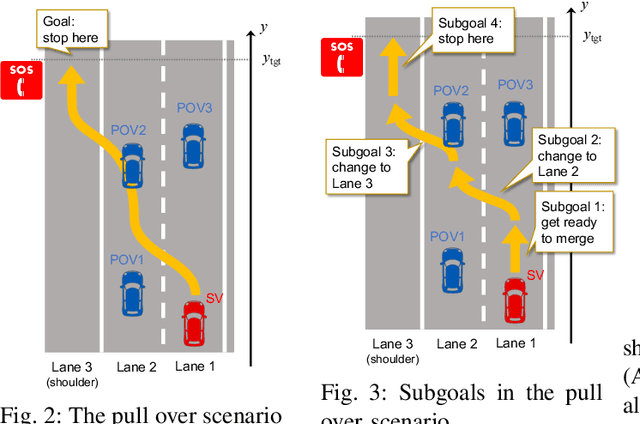

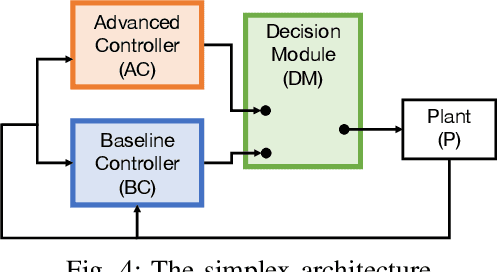

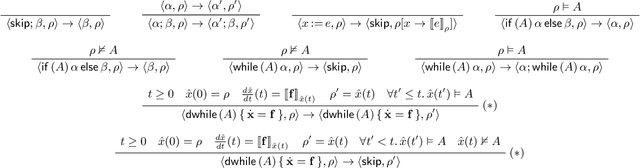

Abstract:We introduce a goal-aware extension of responsibility-sensitive safety (RSS), a recent methodology for rule-based safety guarantee for automated driving systems (ADS). Making RSS rules guarantee goal achievement -- in addition to collision avoidance as in the original RSS -- requires complex planning over long sequences of manoeuvres. To deal with the complexity, we introduce a compositional reasoning framework based on program logic, in which one can systematically develop RSS rules for smaller subscenarios and combine them to obtain RSS rules for bigger scenarios. As the basis of the framework, we introduce a program logic dFHL that accommodates continuous dynamics and safety conditions. Our framework presents a dFHL-based workflow for deriving goal-aware RSS rules; we discuss its software support, too. We conducted experimental evaluation using RSS rules in a safety architecture. Its results show that goal-aware RSS is indeed effective in realising both collision avoidance and goal achievement.

Practical Insights of Repairing Model Problems on Image Classification

May 14, 2022

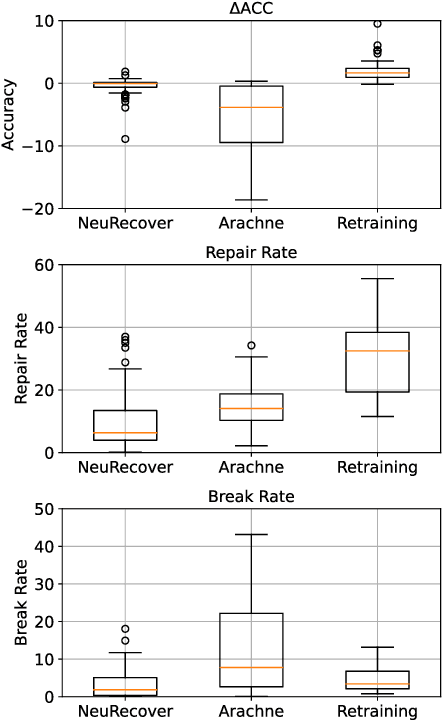

Abstract:Additional training of a deep learning model can cause negative effects on the results, turning an initially positive sample into a negative one (degradation). Such degradation is possible in real-world use cases due to the diversity of sample characteristics. That is, a set of samples is a mixture of critical ones which should not be missed and less important ones. Therefore, we cannot understand the performance by accuracy alone. While existing research aims to prevent model degradation, insights into the related methods are needed to grasp their benefits and limitations. In this talk, we will present implications derived from a comparison of methods for reducing degradation. In particular, we formulated use cases for industrial settings in terms of arrangements of a data set. The results imply that, because of a trade-off between accuracy and preventing degradation, a practitioner should continually reconsider which method is best, taking into account dataset availability and the life cycle of the AI system.

NeuRecover: Regression-Controlled Repair of Deep Neural Networks with Training History

Mar 04, 2022

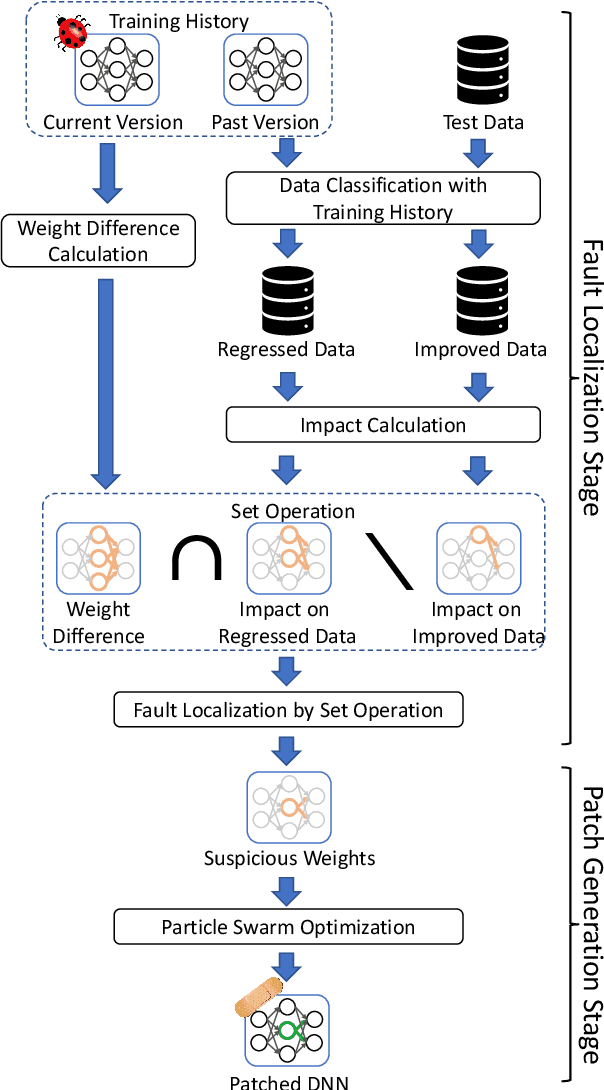

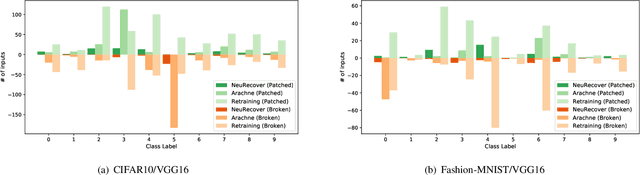

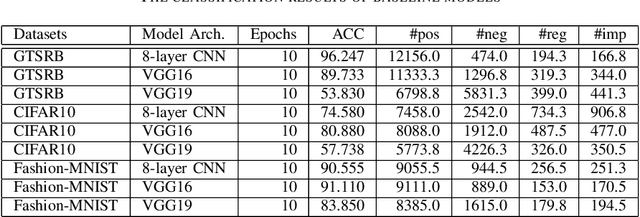

Abstract:Systematic techniques to improve the quality of deep neural networks (DNNs) are critical given the increasing demand for practical applications including safety-critical ones. The key challenge comes from the limited controllability in updating DNNs. Retraining to fix some behavior often has a destructive impact on other behavior, causing regressions, i.e., the updated DNN fails with inputs correctly handled by the original one. This problem is crucial when engineers are required to investigate failures in intensive assurance activities for safety or trust. Search-based repair techniques for DNNs have the potential to tackle this challenge by enabling localized updates only on "responsible parameters" inside the DNN. However, this potential has not been explored to realize sufficient controllability to suppress regressions in DNN repair tasks. In this paper, we propose a novel DNN repair method that makes use of the training history for judging which DNN parameters should be changed or not to suppress regressions. We implemented the method in a tool called NeuRecover and evaluated it with three datasets. Our method outperformed the existing method, often producing fewer than a quarter, and in some cases a tenth, of the regressions. Our method is especially effective when the repair requirements are tight to fix specific failure types. In such cases, our method showed stably low rates (<2%) of regressions, which were in many cases a tenth of regressions caused by retraining.
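
The abstract defines a regression as an input the original DNN handled correctly but the updated DNN gets wrong. A minimal sketch of counting such cases follows; it is not NeuRecover's code, and both the function names and the choice of denominator for the rate (inputs the original model got right) are assumptions for illustration.

```python
def count_regressions(y_true, before, after):
    """Inputs the original model got right and the updated model gets wrong."""
    return sum(1 for t, b, a in zip(y_true, before, after) if b == t and a != t)


def count_fixes(y_true, before, after):
    """Inputs the original model got wrong and the updated model gets right."""
    return sum(1 for t, b, a in zip(y_true, before, after) if b != t and a == t)


def regression_rate(y_true, before, after):
    """Regressions as a fraction of originally correct inputs (assumed denominator)."""
    originally_correct = sum(1 for t, b in zip(y_true, before) if b == t)
    if originally_correct == 0:
        return 0.0
    return count_regressions(y_true, before, after) / originally_correct


# Toy labels and predictions before and after a hypothetical repair.
y_true = [0, 1, 1, 0, 1]
before = [0, 1, 0, 0, 0]
after = [0, 0, 1, 0, 1]

regressions = count_regressions(y_true, before, after)
fixes = count_fixes(y_true, before, after)
rate = regression_rate(y_true, before, after)
```

Reporting fixes and regressions separately matters because overall accuracy can improve even while previously correct inputs start failing.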

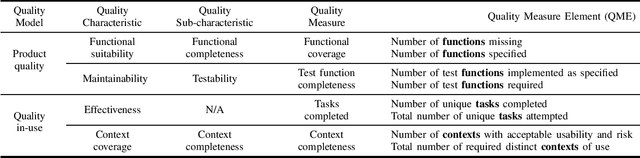

Adapting SQuaRE for Quality Assessment of Artificial Intelligence Systems

Jul 31, 2019

Abstract:More and more software practitioners are tackling industrial applications of artificial intelligence (AI) systems, especially those based on machine learning (ML). However, many existing principles and approaches for traditional systems do not work effectively for system behavior obtained by training rather than by logical design. In addition, unique kinds of requirements are emerging such as fairness and explainability. To provide clear guidance to understand and tackle these difficulties, we present an analysis of what quality concepts we should evaluate for AI systems. We base our discussion on the ISO/IEC 25000 series, known as SQuaRE, and identify how it should be adapted for the unique nature of ML and *Ethics guidelines for trustworthy AI* from the European Commission. We thus provide holistic insights for quality of AI systems by incorporating the ML nature and AI ethics into the traditional software quality concepts.
