Natarajan Shankar

Evidential Transactions with Cyberlogic

Mar 20, 2023Abstract:Cyberlogic is an enabling logical foundation for building and analyzing digital transactions that involve the exchange of digital forms of evidence. It is based on an extension of (first-order) intuitionistic predicate logic with an attestation and a knowledge modality. The key ideas underlying Cyberlogic are extremely simple, as (1) public keys correspond to authorizations, (2) transactions are specified as distributed logic programs, and (3) verifiable evidence is collected by means of distributed proof search. Verifiable evidence, in particular, are constructed from extra-logical elements such as signed documents and cryptographic signatures. Despite this conceptual simplicity of Cyberlogic, central features of authorization policies including trust, delegation, and revocation of authority are definable. An expressive temporal-epistemic logic for specifying distributed authorization policies and protocols is therefore definable in Cyberlogic using a trusted time source. We describe the distributed execution of Cyberlogic programs based on the hereditary Harrop fragment in terms of distributed proof search, and we illustrate some fundamental issues in the distributed construction of certificates. The main principles of encoding and executing cryptographic protocols in Cyberlogic are demonstrated. Finally, a functional encryption scheme is proposed for checking certificates of evidential transactions when policies are kept private.

Revisiting Variable Ordering for Real Quantifier Elimination using Machine Learning

Feb 27, 2023Abstract:Cylindrical Algebraic Decomposition (CAD) is a key proof technique for formal verification of cyber-physical systems. CAD is computationally expensive, with worst-case doubly-exponential complexity. Selecting an optimal variable ordering is paramount to efficient use of CAD. Prior work has demonstrated that machine learning can be useful in determining efficient variable orderings. Much of this work has been driven by CAD problems extracted from applications of the MetiTarski theorem prover. In this paper, we revisit this prior work and consider issues of bias in existing training and test data. We observe that the classical MetiTarski benchmarks are heavily biased towards particular variable orderings. To address this, we apply symmetries to create a new dataset containing more than 41K MetiTarski challenges designed to remove bias. Furthermore, we evaluate issues of information leakage, and test the generalizability of our models on the new dataset.

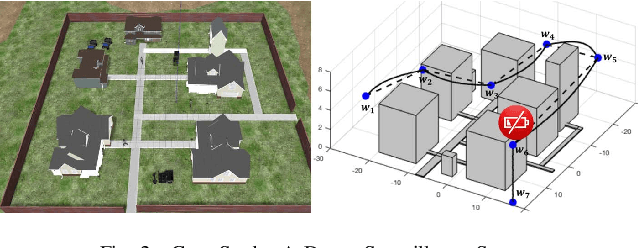

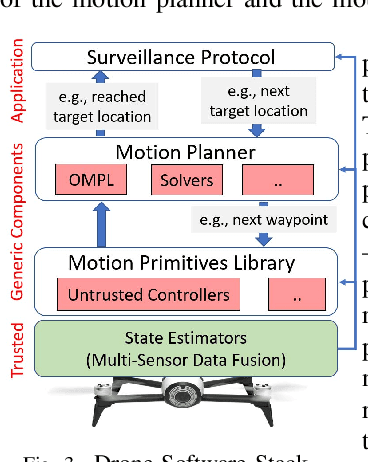

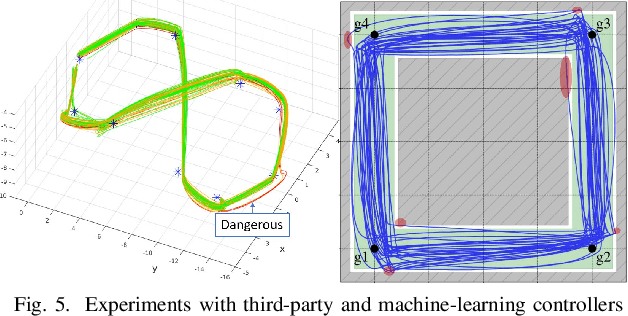

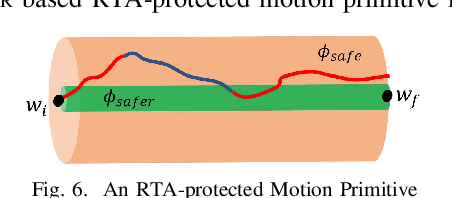

SOTER: Programming Safe Robotics System using Runtime Assurance

Feb 11, 2019

Abstract:The recent drive towards achieving greater autonomy and intelligence in robotics has led to high levels of complexity. Autonomous robots increasingly depend on third party off-the-shelf components and complex machine-learning techniques. This trend makes it challenging to provide strong design-time certification of correct operation. To address these challenges, we present SOTER, a robotics programming framework with two key components: (1) a programming language for implementing and testing high-level reactive robotics software and (2) an integrated runtime assurance (RTA) system that helps enable the use of uncertified components, while still providing safety guarantees. SOTER provides language primitives to declaratively construct a RTA module consisting of an advanced, high-performance controller (uncertified), a safe, lower-performance controller (certified), and the desired safety specification. The framework provides a formal guarantee that a well-formed RTA module always satisfies the safety specification, without completely sacrificing performance by using higher performance uncertified components whenever safe. SOTER allows the complex robotics software stack to be constructed as a composition of RTA modules, where each uncertified component is protected using a RTA module. To demonstrate the efficacy of our framework, we consider a real-world case-study of building a safe drone surveillance system. Our experiments both in simulation and on actual drones show that the SOTER-enabled RTA ensures the safety of the system, including when untrusted third-party components have bugs or deviate from the desired behavior.

ARSENAL: Automatic Requirements Specification Extraction from Natural Language

Apr 20, 2016

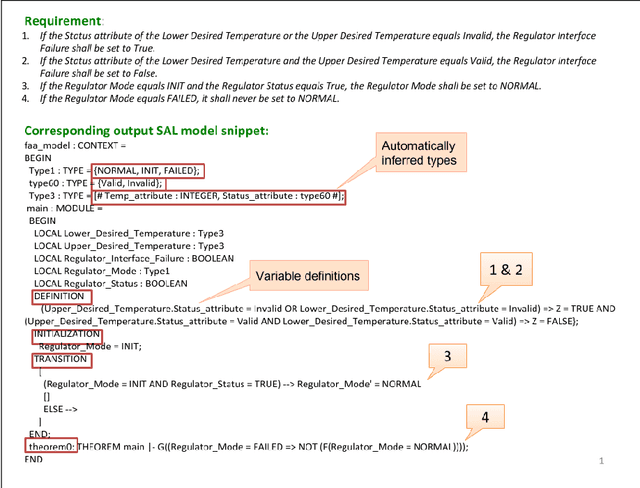

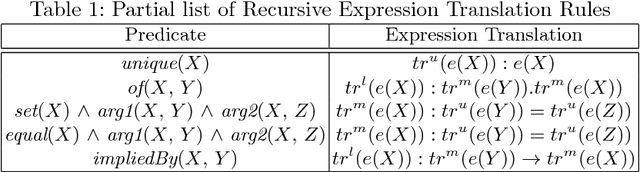

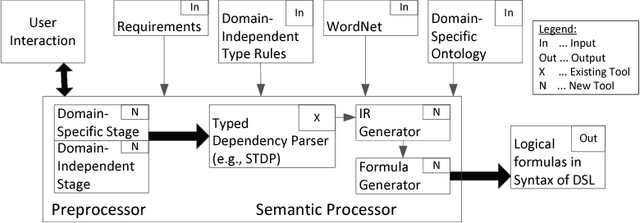

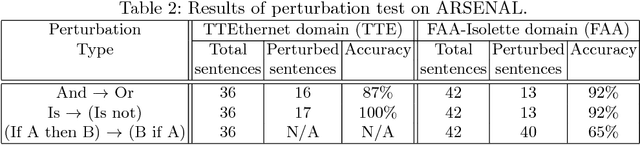

Abstract:Requirements are informal and semi-formal descriptions of the expected behavior of a complex system from the viewpoints of its stakeholders (customers, users, operators, designers, and engineers). However, for the purpose of design, testing, and verification for critical systems, we can transform requirements into formal models that can be analyzed automatically. ARSENAL is a framework and methodology for systematically transforming natural language (NL) requirements into analyzable formal models and logic specifications. These models can be analyzed for consistency and implementability. The ARSENAL methodology is specialized to individual domains, but the approach is general enough to be adapted to new domains.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge