Shromona Ghosh

Scenic: A Language for Scenario Specification and Data Generation

Oct 13, 2020

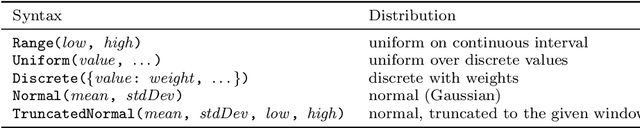

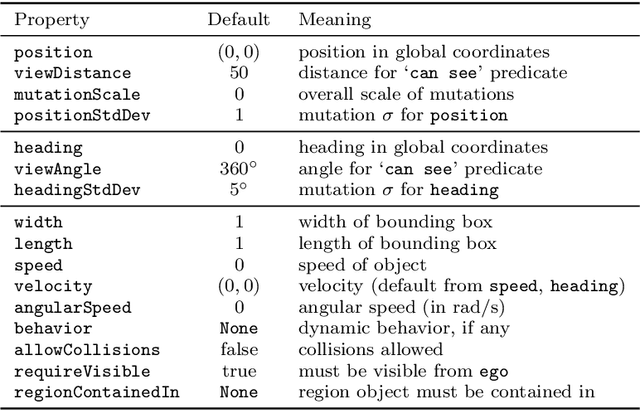

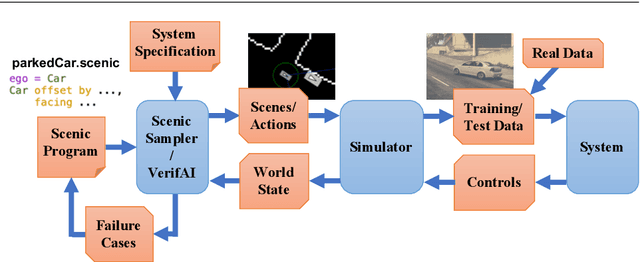

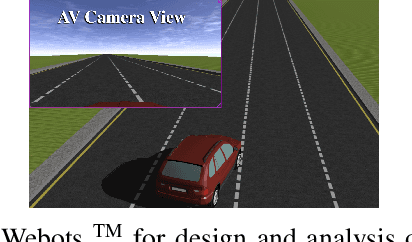

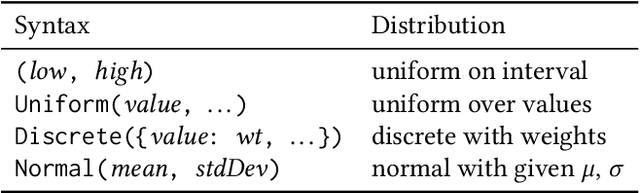

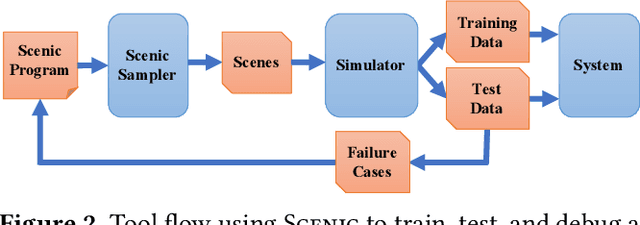

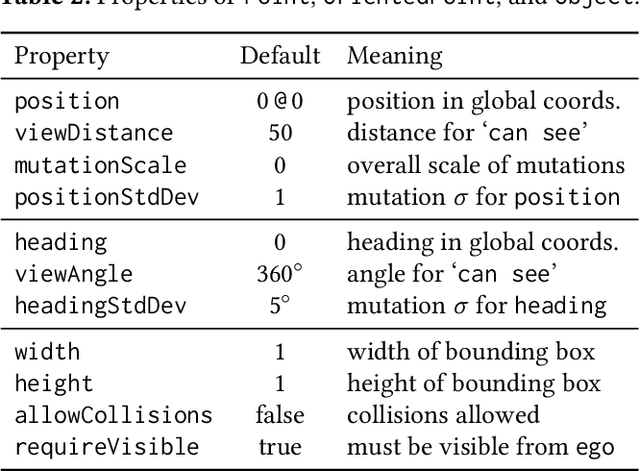

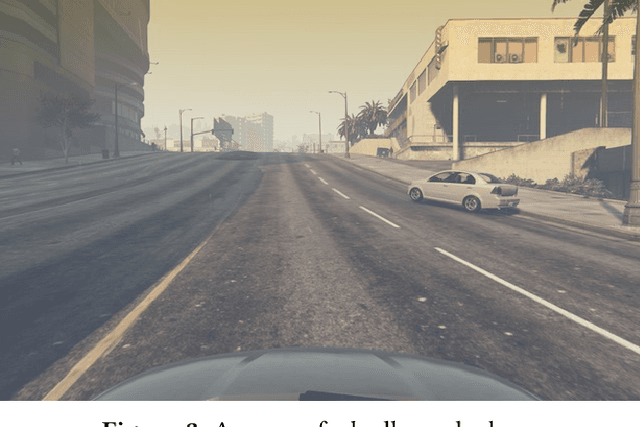

Abstract: We propose a new probabilistic programming language for the design and analysis of cyber-physical systems, especially those based on machine learning. Specifically, we consider the problems of training a system to be robust to rare events, testing its performance under different conditions, and debugging failures. We show how a probabilistic programming language can help address these problems by specifying distributions encoding interesting types of inputs, then sampling these to generate specialized training and test data. More generally, such languages can be used to write environment models, an essential prerequisite to any formal analysis. In this paper, we focus on systems like autonomous cars and robots, whose environment at any point in time is a 'scene', a configuration of physical objects and agents. We design a domain-specific language, Scenic, for describing scenarios that are distributions over scenes and the behaviors of their agents over time. As a probabilistic programming language, Scenic allows assigning distributions to features of the scene, as well as declaratively imposing hard and soft constraints over the scene. We develop specialized techniques for sampling from the resulting distribution, taking advantage of the structure provided by Scenic's domain-specific syntax. Finally, we apply Scenic in a case study on a convolutional neural network designed to detect cars in road images, improving its performance beyond that achieved by state-of-the-art synthetic data generation methods.
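
The idea of assigning distributions to scene features and then imposing hard constraints can be illustrated in plain Python. This is only a minimal sketch using naive rejection sampling, not Scenic syntax and not the specialized sampling techniques the abstract refers to; the road half-width, minimum gap, and feature names are hypothetical values chosen for illustration.

```python
import math
import random


def sample_scene(rng, max_tries=1000):
    """Draw one scene by rejection sampling.

    Features are sampled from simple priors; a sample is kept only if
    every hard constraint holds. All numeric values are hypothetical.
    """
    for _ in range(max_tries):
        ego = (0.0, 0.0)
        # Distributions over scene features (assumed for illustration).
        car = (rng.uniform(-10.0, 10.0), rng.uniform(5.0, 40.0))
        heading = rng.gauss(0.0, math.radians(10))
        # Hard constraints: car lies on the road and keeps a minimum gap.
        on_road = abs(car[0]) <= 3.5
        far_enough = math.dist(ego, car) >= 8.0
        if on_road and far_enough:
            return {"ego": ego, "car": car, "heading": heading}
    raise RuntimeError("no valid scene found within max_tries")


if __name__ == "__main__":
    print(sample_scene(random.Random(0)))
```

Every scene returned this way satisfies the constraints by construction; the cost is that samples violating them are simply thrown away, which is why structure-aware sampling matters for tighter scenarios.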

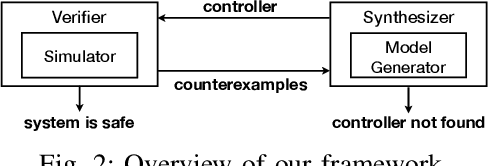

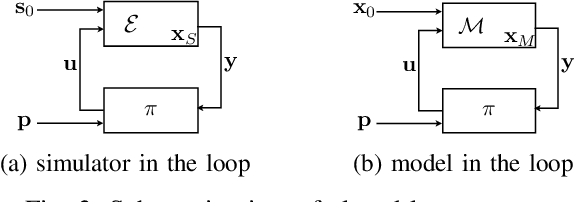

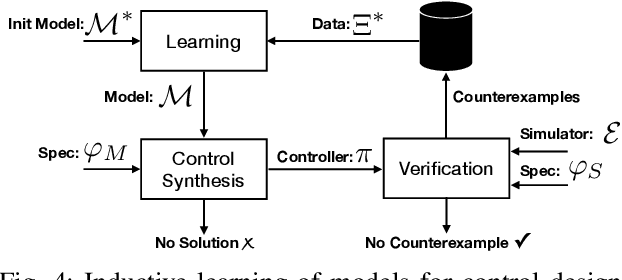

Counterexample-Guided Synthesis of Perception Models and Control

Nov 08, 2019

Abstract: We consider the problem of synthesizing safe and robust controllers for real-world robotic systems like autonomous vehicles, which rely on complex perception modules. We propose a counterexample-guided synthesis framework which iteratively learns perception models that enable finding safe control policies. We use counterexamples to extract information relevant for modeling the errors in perception modules. Such models can then be used to synthesize controllers robust to errors in perception. If the resulting policy is not safe, we gather new counterexamples. By repeating the process, we eventually find a controller which can keep the system safe even when there is perception failure. Finally, we show that our framework computes robust controllers for autonomous vehicles in two different simulated scenarios: (i) lane keeping, and (ii) automatic braking.

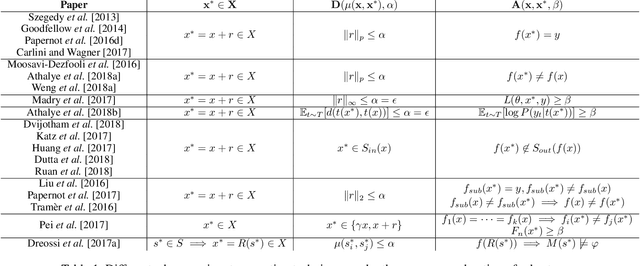

A Formalization of Robustness for Deep Neural Networks

Mar 24, 2019

Abstract: Deep neural networks have been shown to lack robustness to small input perturbations. The process of generating the perturbations that expose the lack of robustness of neural networks is known as adversarial input generation. This process depends on the goals and capabilities of the adversary. In this paper, we propose a unifying formalization of the adversarial input generation process from a formal methods perspective. We provide a definition of robustness that is general enough to capture different formulations. The expressiveness of our formalization is shown by modeling and comparing a variety of adversarial attack techniques.

A New Simulation Metric to Determine Safe Environments and Controllers for Systems with Unknown Dynamics

Feb 27, 2019

Abstract: We consider the problem of extracting safe environments and controllers for reach-avoid objectives for systems with known state and control spaces, but unknown dynamics. In a given environment, a common approach is to synthesize a controller from an abstraction or a model of the system (potentially learned from data). However, in many situations, the relationship between the dynamics of the model and the actual system is not known, and hence it is difficult to provide safety guarantees for the system. In such cases, the Standard Simulation Metric (SSM), defined as the worst-case norm distance between the model and the system output trajectories, can be used to modify a reach-avoid specification for the system into a more stringent specification for the abstraction. Nevertheless, the obtained distance, and hence the modified specification, can be quite conservative. This limits the set of environments for which a safe controller can be obtained. We propose SPEC, a specification-centric simulation metric, which overcomes these limitations by computing the distance using only the trajectories that violate the specification for the system. We show that modifying a reach-avoid specification with SPEC allows us to synthesize a safe controller for a larger set of environments compared to SSM. We also propose a probabilistic method to compute SPEC for a general class of systems. Case studies using simulators for quadrotors and autonomous cars illustrate the advantages of the proposed metric for determining safe environment sets and controllers.
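
The difference between the two metrics can be made concrete on a finite set of paired trajectories. The sketch below is an assumption-laden illustration, not the paper's method: trajectories are scalar sequences, the norm is the largest pointwise gap, and `system_violates` is a hypothetical predicate standing in for the reach-avoid specification.

```python
def trajectory_distance(model_traj, system_traj):
    """Largest pointwise gap between two equally long scalar trajectories."""
    return max(abs(m - s) for m, s in zip(model_traj, system_traj))


def ssm(pairs):
    """Worst-case distance over all (model, system) trajectory pairs."""
    return max(trajectory_distance(m, s) for m, s in pairs)


def spec_metric(pairs, system_violates):
    """Worst-case distance over only the pairs whose system trajectory
    violates the specification (0.0 if none does)."""
    distances = [
        trajectory_distance(m, s) for m, s in pairs if system_violates(s)
    ]
    return max(distances, default=0.0)


if __name__ == "__main__":
    pairs = [
        ([0.0, 1.0, 2.0], [0.0, 0.0, 1.0]),  # large gap, system stays safe
        ([0.0, 2.0, 3.0], [0.0, 2.5, 3.5]),  # small gap, system violates
    ]
    # Hypothetical specification: the output must never exceed 3.0.
    violates = lambda traj: any(x > 3.0 for x in traj)
    print(ssm(pairs), spec_metric(pairs, violates))
```

In this toy data the pair with the largest gap never violates the specification, so the specification-centric distance is smaller than the worst-case one, which is the source of the reduced conservatism described above.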

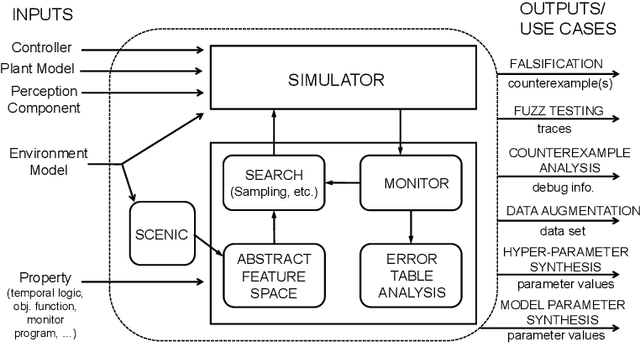

VERIFAI: A Toolkit for the Design and Analysis of Artificial Intelligence-Based Systems

Feb 14, 2019

Abstract: We present VERIFAI, a software toolkit for the formal design and analysis of systems that include artificial intelligence (AI) and machine learning (ML) components. VERIFAI particularly seeks to address challenges with applying formal methods to perception and ML components, including those based on neural networks, and to model and analyze system behavior in the presence of environment uncertainty. We describe the initial version of VERIFAI which centers on simulation guided by formal models and specifications. Several use cases are illustrated with examples, including temporal-logic falsification, model-based systematic fuzz testing, parameter synthesis, counterexample analysis, and data set augmentation.

SOTER: Programming Safe Robotics System using Runtime Assurance

Feb 11, 2019

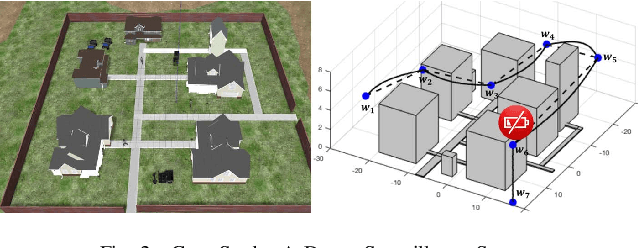

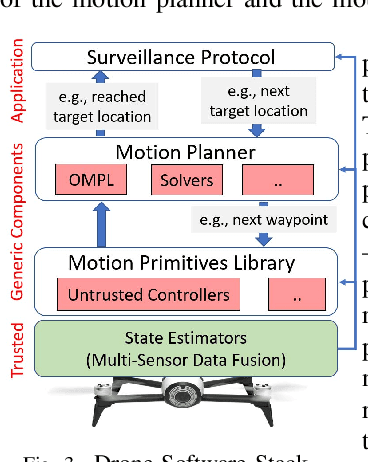

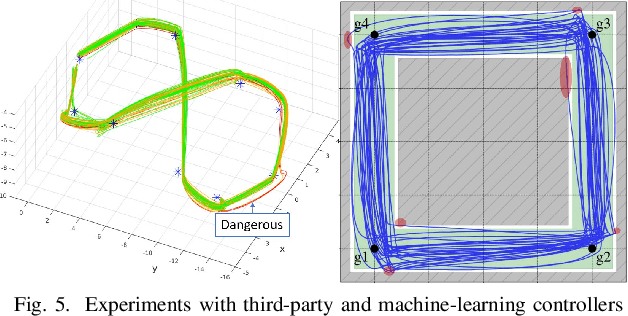

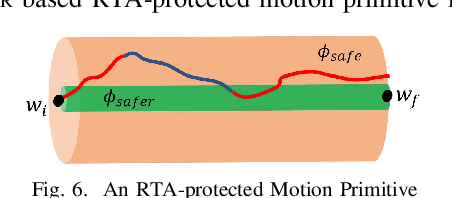

Abstract: The recent drive towards achieving greater autonomy and intelligence in robotics has led to high levels of complexity. Autonomous robots increasingly depend on third-party off-the-shelf components and complex machine-learning techniques. This trend makes it challenging to provide strong design-time certification of correct operation. To address these challenges, we present SOTER, a robotics programming framework with two key components: (1) a programming language for implementing and testing high-level reactive robotics software and (2) an integrated runtime assurance (RTA) system that helps enable the use of uncertified components, while still providing safety guarantees. SOTER provides language primitives to declaratively construct an RTA module consisting of an advanced, high-performance controller (uncertified), a safe, lower-performance controller (certified), and the desired safety specification. The framework provides a formal guarantee that a well-formed RTA module always satisfies the safety specification, without completely sacrificing performance by using higher performance uncertified components whenever safe. SOTER allows the complex robotics software stack to be constructed as a composition of RTA modules, where each uncertified component is protected using an RTA module. To demonstrate the efficacy of our framework, we consider a real-world case study of building a safe drone surveillance system. Our experiments both in simulation and on actual drones show that the SOTER-enabled RTA ensures the safety of the system, including when untrusted third-party components have bugs or deviate from the desired behavior.
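
The switching idea behind a runtime assurance module can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not SOTER's language or its actual switching logic: the state is a scalar position, the safety specification is a hypothetical bound of 10 on its magnitude, and the names `advanced`, `safe`, and `stays_safe` are invented for the example.

```python
def rta_step(state, advanced, safe, stays_safe):
    """Use the advanced controller's action when it keeps the system safe,
    otherwise fall back to the certified safe controller."""
    action = advanced(state)
    if stays_safe(state, action):
        return action
    return safe(state)


def advanced(x):
    """Uncertified, high-performance controller: always pushes forward."""
    return 3.0


def safe(x):
    """Certified, low-performance controller: steps back toward the origin."""
    return -1.0 if x > 0 else 1.0


def stays_safe(x, action):
    """Hypothetical safety check: the next position stays within the bound."""
    return abs(x + action) <= 10.0


def run(steps=20):
    """Simulate x' = x + action under the RTA wrapper and return the trace."""
    x = 0.0
    trace = [x]
    for _ in range(steps):
        x += rta_step(x, advanced, safe, stays_safe)
        trace.append(x)
    return trace


if __name__ == "__main__":
    print(run())
```

The advanced controller is used whenever its action passes the check, so performance is only given up near the boundary, where the safe controller takes over.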

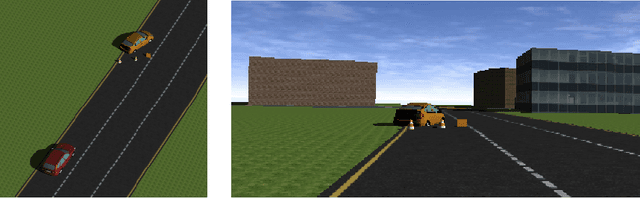

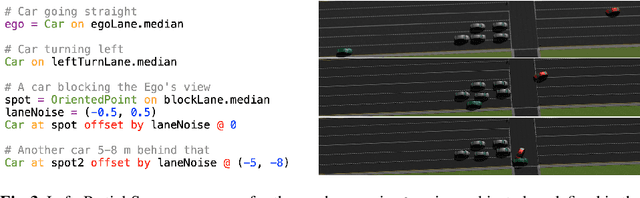

Scenic: Language-Based Scene Generation

Sep 25, 2018

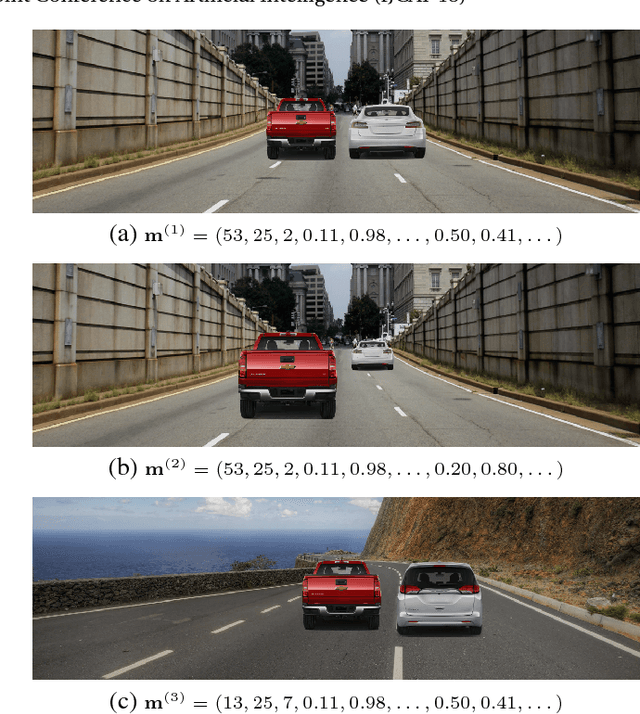

Abstract: Synthetic data has proved increasingly useful in both training and testing machine learning models such as neural networks. The major problem in synthetic data generation is producing meaningful data that is not simply random but reflects properties of real-world data or covers particular cases of interest. In this paper, we show how a probabilistic programming language can be used to guide data synthesis by encoding domain knowledge about what data is useful. Specifically, we focus on data sets arising from "scenes", configurations of physical objects; for example, images of cars on a road. We design a domain-specific language, Scenic, for describing "scenarios" that are distributions over scenes. The syntax of Scenic makes it easy to specify complex relationships between the positions and orientations of objects. As a probabilistic programming language, Scenic allows assigning distributions to features of the scene, as well as declaratively imposing hard and soft constraints over the scene. A Scenic scenario thereby implicitly defines a distribution over scenes, and we formulate the problem of sampling from this distribution as "scene improvisation". We implement an improviser for Scenic scenarios and apply it in a case study generating synthetic data sets for a convolutional neural network designed to detect cars in road images. Our experiments demonstrate the usefulness of our approach by using Scenic to analyze and improve the performance of the network in various scenarios.

A Minimum Discounted Reward Hamilton-Jacobi Formulation for Computing Reachable Sets

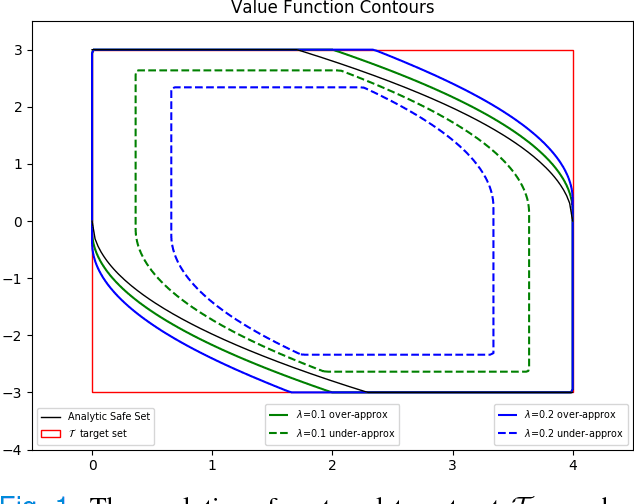

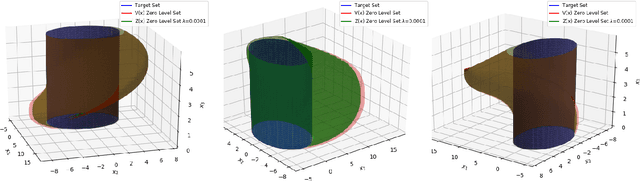

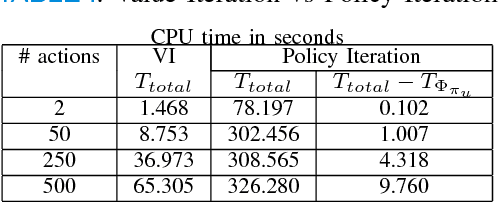

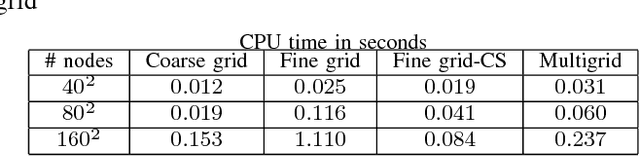

Sep 03, 2018

Abstract: We propose a novel formulation for approximating reachable sets through a minimum discounted reward optimal control problem. The formulation yields a continuous solution that can be obtained by solving a Hamilton-Jacobi equation. Furthermore, the numerical approximation to this solution can be obtained as the unique fixed point of a contraction mapping. This allows for more efficient solution methods that could not be applied under traditional formulations for solving reachable sets. In addition, this formulation provides a link between reinforcement learning and learning reachable sets for systems with unknown dynamics, allowing algorithms from the former to be applied to the latter. We use two benchmark examples, a double integrator and pursuit-evasion games, to show the correctness of the formulation as well as its strengths in comparison to previous work.
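
The role of the contraction mapping can be illustrated with a small fixed-point iteration. The sketch below is a toy under explicit assumptions rather than the paper's formulation: the state space is a one-dimensional grid, the controls move one cell left, stay, or move one cell right, and the backup `min(reward, gamma * best successor value)` is an assumed discrete-time analogue of a minimum discounted reward objective.

```python
def backup(values, rewards, gamma):
    """Apply one backup: V(i) <- min(r(i), gamma * max over successors of V)."""
    n = len(values)
    new_values = []
    for i in range(n):
        # Hypothetical controls: step left, stay, step right (clipped to grid).
        successors = (max(i - 1, 0), i, min(i + 1, n - 1))
        best_next = max(values[j] for j in successors)
        new_values.append(min(rewards[i], gamma * best_next))
    return new_values


def solve(rewards, gamma=0.9, tol=1e-10, max_iters=10_000):
    """Iterate the backup until successive iterates differ by less than tol."""
    values = list(rewards)
    for _ in range(max_iters):
        new_values = backup(values, rewards, gamma)
        if max(abs(a - b) for a, b in zip(values, new_values)) < tol:
            return new_values
        values = new_values
    raise RuntimeError("fixed-point iteration did not converge")


if __name__ == "__main__":
    print(solve([-1.0, 0.5, 1.0, 0.5, -1.0]))
```

Because `min` and `max` are non-expansive and the successor term is scaled by `gamma < 1`, this backup shrinks sup-norm distances by at least a factor of `gamma`, so the iteration converges to a unique fixed point from any starting guess.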

Time Series Learning using Monotonic Logical Properties

Aug 01, 2018

Abstract: Cyber-physical systems of today are generating large volumes of time-series data. As manual inspection of such data is not tractable, the need for learning methods to help discover logical structure in the data has increased. We propose a logic-based framework that allows domain-specific knowledge to be embedded into formulas in a parametric logical specification over time-series data. The key idea is to then map a time series to a surface in the parameter space of the formula. Given this mapping, we identify the Hausdorff distance between boundaries as a natural distance metric between two time series under the lens of the parametric specification. This enables embedding non-trivial domain-specific knowledge into the distance metric and then using off-the-shelf machine learning tools to label the data. After labeling the data, we demonstrate how to extract a logical specification for each label. Finally, we showcase our technique on real-world traffic data to learn classifiers/monitors for slow-downs and traffic jams.
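
For readers unfamiliar with the Hausdorff distance, the following sketch computes it between two finite point sets. It assumes each boundary has already been approximated by a list of sample points in parameter space; how those points are obtained from a time series and a parametric formula is outside the scope of this example.

```python
import math


def directed_hausdorff(a, b):
    """Largest distance from a point of `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


if __name__ == "__main__":
    boundary_a = [(0.0, 0.0), (1.0, 0.0)]
    boundary_b = [(0.0, 0.0), (1.0, 3.0)]
    print(hausdorff(boundary_a, boundary_b))
```

The resulting pairwise distances can then be fed to any clustering or classification routine that accepts a precomputed distance matrix.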

Counterexample-Guided Data Augmentation

May 17, 2018

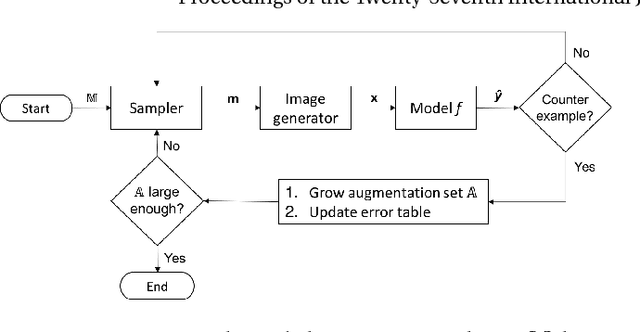

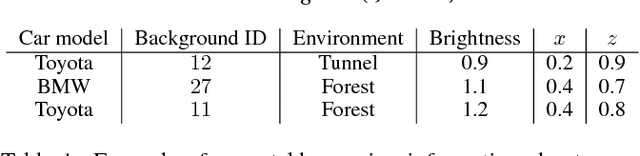

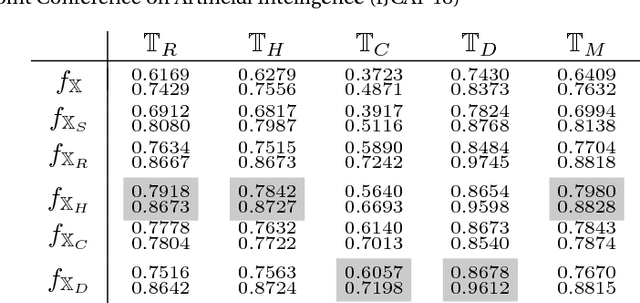

Abstract: We present a novel framework for augmenting data sets for machine learning based on counterexamples. Counterexamples are misclassified examples that have important properties for retraining and improving the model. Key components of our framework include a counterexample generator, which produces data items that are misclassified by the model, and error tables, a novel data structure that stores information pertaining to misclassifications. Error tables can be used to explain the model's vulnerabilities and are used to efficiently generate counterexamples for augmentation. We show the efficacy of the proposed framework by comparing it to classical augmentation techniques on a case study of object detection in autonomous driving based on deep neural networks.
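
One way to picture an error table is as a list of feature rows, one per misclassified sample. The sketch below is a hypothetical simplification, not the paper's data structure: the feature names, the sample layout with `features` and `label` keys, and the toy model are all assumptions made for illustration.

```python
from collections import Counter


def build_error_table(samples, model):
    """Collect the feature rows of every sample the model misclassifies."""
    return [s["features"] for s in samples if model(s["features"]) != s["label"]]


def most_common_value(error_table, feature):
    """Return (value, count) for the most frequent value of a feature among
    the misclassified rows, or None if the table is empty."""
    counts = Counter(row[feature] for row in error_table)
    return counts.most_common(1)[0] if counts else None


if __name__ == "__main__":
    # Toy model (assumed): fails to detect the car whenever it is foggy.
    model = lambda f: "none" if f["weather"] == "fog" else "car"
    samples = [
        {"features": {"weather": "fog", "cars": 2}, "label": "car"},
        {"features": {"weather": "sunny", "cars": 1}, "label": "car"},
        {"features": {"weather": "fog", "cars": 1}, "label": "car"},
        {"features": {"weather": "rain", "cars": 3}, "label": "car"},
    ]
    table = build_error_table(samples, model)
    print(most_common_value(table, "weather"))
```

A feature value that dominates the table points to a condition worth oversampling when generating new counterexamples for augmentation.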
