Hadi Ravanbakhsh

Counterexample-Guided Synthesis of Perception Models and Control

Nov 08, 2019

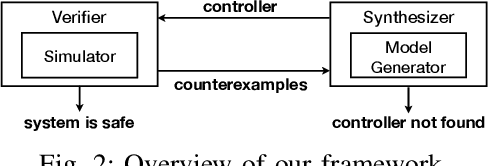

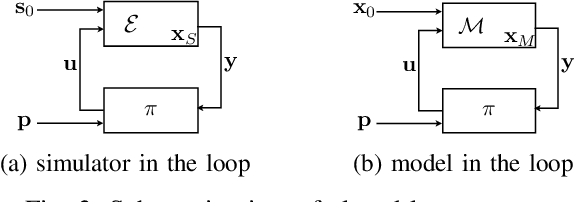

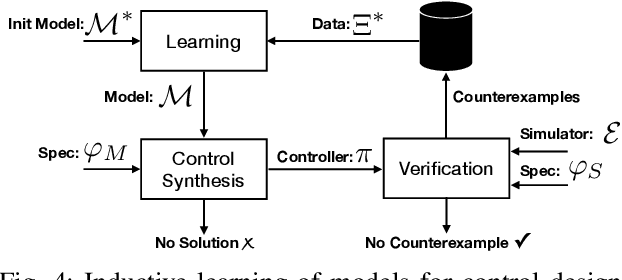

Abstract: We consider the problem of synthesizing safe and robust controllers for real-world robotic systems, such as autonomous vehicles, which rely on complex perception modules. We propose a counterexample-guided synthesis framework that iteratively learns perception models that enable finding safe control policies. We use counterexamples to extract information relevant for modeling the errors in perception modules. Such models can then be used to synthesize controllers that are robust to errors in perception. If the resulting policy is not safe, we gather new counterexamples. By repeating the process, we eventually find a controller that keeps the system safe even when perception fails. Finally, we show that our framework computes robust controllers for autonomous vehicles in two different simulated scenarios: (i) lane keeping, and (ii) automatic braking.
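The loop described in this abstract (synthesize, search for a counterexample, refine the perception model, repeat) can be illustrated with a toy example. The following is a minimal sketch under strong assumptions, not the paper's method: it uses a one-dimensional braking scenario, models perception error as a single additive bound on the distance estimate, and replaces the falsifier with a scan over a fixed list of observed errors. All function names and parameters are hypothetical.

```python
from typing import List, Optional, Tuple


def stopping_distance(speed: float, decel: float) -> float:
    """Distance needed to stop from `speed` at constant deceleration `decel`."""
    return speed ** 2 / (2.0 * decel)


def synthesize_threshold(speed: float, decel: float, error_bound: float) -> float:
    """Toy 'controller synthesis': brake once the perceived distance drops
    below the stopping distance plus the modeled perception error."""
    return stopping_distance(speed, decel) + error_bound


def find_counterexample(threshold: float, speed: float, decel: float,
                        observed_errors: List[float]) -> Optional[float]:
    """Toy 'falsifier': return a perception error that makes braking start too late.

    Perceived distance = true distance + error, so braking starts at the
    true distance (threshold - error).
    """
    needed = stopping_distance(speed, decel)
    for err in observed_errors:
        if threshold - err < needed - 1e-9:  # small tolerance for float rounding
            return err
    return None


def cegis(speed: float, decel: float, observed_errors: List[float],
          max_iters: int = 100) -> Tuple[float, float]:
    """Alternate synthesis and falsification until no counterexample remains."""
    error_bound = 0.0  # initial perception model: assume perfect perception
    for _ in range(max_iters):
        threshold = synthesize_threshold(speed, decel, error_bound)
        cex = find_counterexample(threshold, speed, decel, observed_errors)
        if cex is None:
            return threshold, error_bound
        # Refine the perception model using the counterexample.
        error_bound = max(error_bound, cex)
    raise RuntimeError("no safe controller found within the iteration budget")


if __name__ == "__main__":
    print(cegis(speed=20.0, decel=5.0, observed_errors=[-0.5, 0.25, 1.5, 0.75]))
```

In this sketch each counterexample only enlarges a scalar error bound; the framework in the abstract learns richer perception models, which this toy does not attempt to reproduce.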

Real-time Funnel Generation for Restricted Motion Planning

Nov 04, 2019

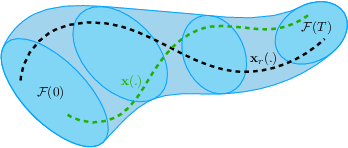

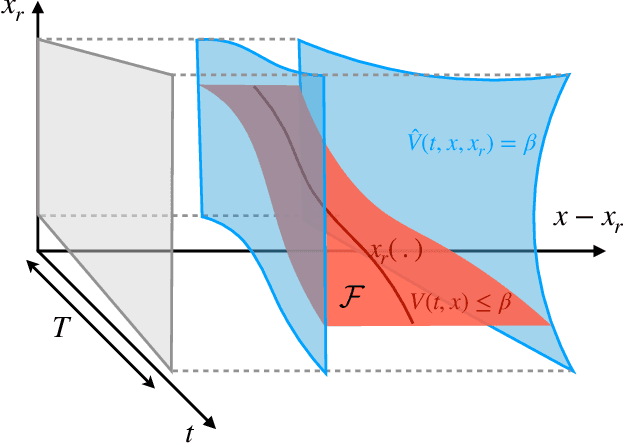

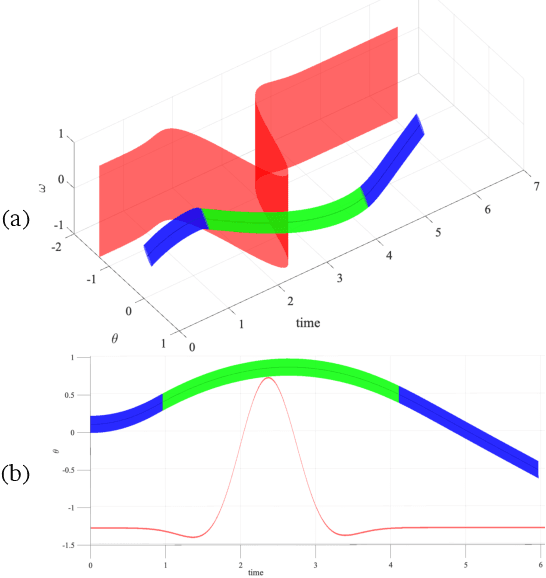

Abstract: In autonomous systems, a motion planner generates reference trajectories which are tracked by a low-level controller. For safe operation, the motion planner should account for the inevitable controller tracking error when generating avoidance trajectories. In this article we present a method for generating provably safe tracking error bounds while reducing the over-conservatism of existing methods. We achieve this goal by restricting the possible behaviors of the motion planner. We provide an algebraic method based on sum-of-squares programming to define restrictions on the motion planner and find small bounds on the tracking error. We demonstrate our method on two case studies and show how it can be integrated into existing motion planning techniques. Results suggest that our method can provide acceptable tracking error bounds in cases where previous work was not applicable.

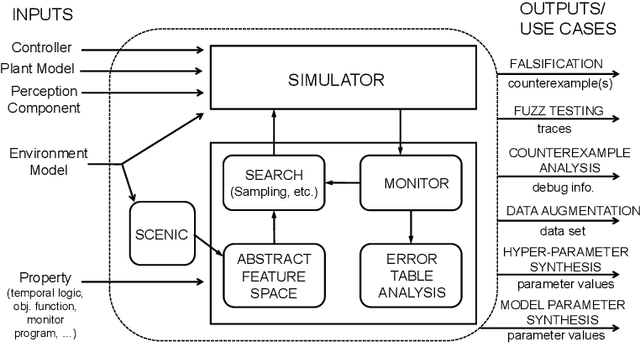

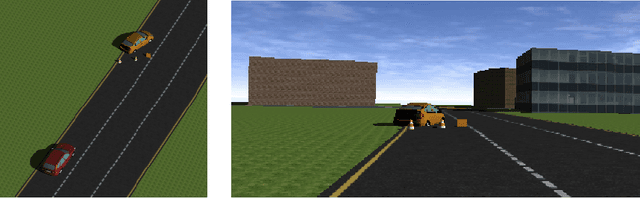

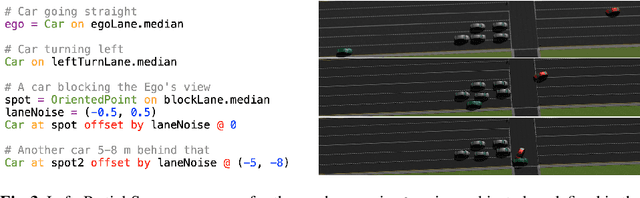

VERIFAI: A Toolkit for the Design and Analysis of Artificial Intelligence-Based Systems

Feb 14, 2019

Abstract: We present VERIFAI, a software toolkit for the formal design and analysis of systems that include artificial intelligence (AI) and machine learning (ML) components. VERIFAI particularly seeks to address challenges with applying formal methods to perception and ML components, including those based on neural networks, and to model and analyze system behavior in the presence of environment uncertainty. We describe the initial version of VERIFAI, which centers on simulation guided by formal models and specifications. Several use cases are illustrated with examples, including temporal-logic falsification, model-based systematic fuzz testing, parameter synthesis, counterexample analysis, and data set augmentation.
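Temporal-logic falsification, one of the use cases listed above, can be illustrated generically: sample environment parameters, simulate, and score each trace by how robustly it satisfies a specification, looking for a negative score. The sketch below is a toy written for illustration and does not use the VERIFAI API. The car-following simulator, the specification "always gap > 2 m", the parameter ranges, and all names are assumptions.

```python
import random
from typing import List, Tuple

DT = 0.1        # simulation step in seconds (assumed)
MIN_GAP = 2.0   # specification: the gap must always stay above MIN_GAP metres


def simulate(initial_gap: float, lead_brake: float, steps: int = 60) -> List[float]:
    """Toy simulator: a lead car brakes at `lead_brake` m/s^2 while the ego
    car brakes at 3 m/s^2 whenever the gap is below 15 m. Returns the gap trace."""
    ego_speed = lead_speed = 10.0
    gap = initial_gap
    trace = [gap]
    for _ in range(steps):
        gap += (lead_speed - ego_speed) * DT
        lead_speed = max(0.0, lead_speed - lead_brake * DT)
        if gap < 15.0:
            ego_speed = max(0.0, ego_speed - 3.0 * DT)
        trace.append(gap)
    return trace


def robustness(trace: List[float]) -> float:
    """Quantitative satisfaction of 'always (gap > MIN_GAP)': the worst-case
    margin over the trace. A negative value means the spec is violated."""
    return min(trace) - MIN_GAP


def falsify(samples: int = 200, seed: int = 0) -> Tuple[Tuple[float, float], float]:
    """Random-search falsifier: return the sampled parameters with the lowest robustness."""
    rng = random.Random(seed)
    best_params = (0.0, 0.0)
    best_rob = float("inf")
    for _ in range(samples):
        params = (rng.uniform(10.0, 30.0), rng.uniform(1.0, 8.0))
        rob = robustness(simulate(*params))
        if rob < best_rob:
            best_params, best_rob = params, rob
    return best_params, best_rob


if __name__ == "__main__":
    params, rob = falsify()
    print("worst parameters:", params, "robustness:", rob)
```

Uniform random sampling is the simplest possible search strategy and is used here only to keep the example short; a practical falsifier would typically use a more directed sampler.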

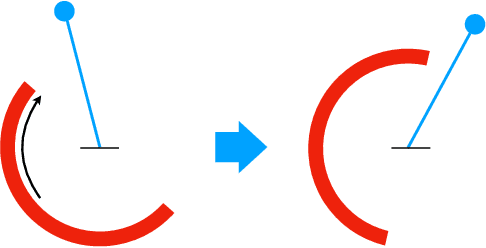

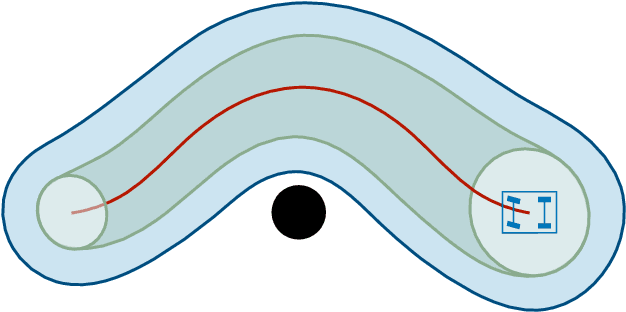

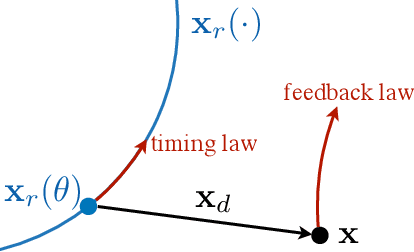

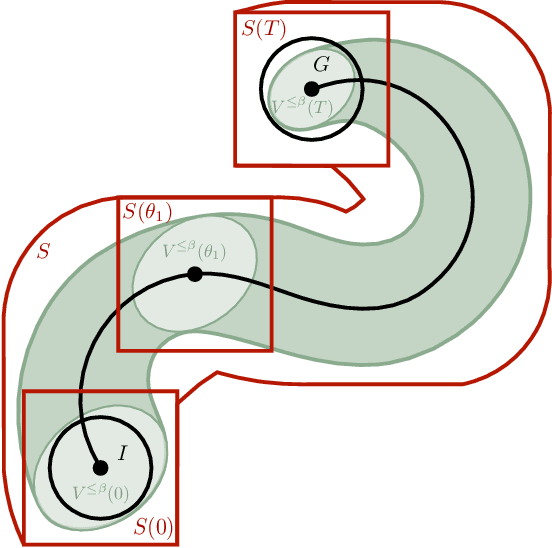

Path-Following through Control Funnel Functions

Aug 02, 2018

Abstract: We present an approach to path following using so-called control funnel functions. Synthesizing controllers to "robustly" follow a reference trajectory is a fundamental problem for autonomous vehicles. Robustness, in this context, requires our controllers to handle a specified amount of deviation from the desired trajectory. Our approach considers a timing law that describes how fast to move along a given reference trajectory and a control feedback law for reducing deviations from the reference. We synthesize both feedback laws using "control funnel functions" that jointly encode the control law as well as its correctness argument over a mathematical model of the vehicle dynamics. We adapt a previously described demonstration-based learning algorithm to synthesize a control funnel function as well as the associated feedback law. We implement this law on a 1/8th-scale autonomous vehicle called the Parkour car. We compare the performance of our path-following approach against a trajectory-tracking approach by specifying trajectories of varying lengths and curvatures. Our experiments demonstrate the improved robustness obtained from the use of control funnel functions.
