Daniel Elenius

Shrinking POMCP: A Framework for Real-Time UAV Search and Rescue

Nov 20, 2024

Abstract: Efficient path optimization for drones in search and rescue operations faces challenges, including limited visibility, time constraints, and complex information gathering in urban environments. We present a comprehensive approach to optimize UAV-based search and rescue operations in neighborhood areas, utilizing both a 3D AirSim-ROS2 simulator and a 2D simulator. The path planning problem is formulated as a partially observable Markov decision process (POMDP), and we propose a novel "Shrinking POMCP" approach to address time constraints. In the AirSim environment, we integrate our approach with a probabilistic world model for belief maintenance and a neurosymbolic navigator for obstacle avoidance. The 2D simulator employs surrogate ROS2 nodes with equivalent functionality. We compare trajectories generated by different approaches in the 2D simulator and evaluate performance across various belief types in the 3D AirSim-ROS simulator. Experimental results from both simulators demonstrate that our proposed shrinking POMCP solution achieves significant improvements in search times compared to alternative methods, showcasing its potential for enhancing the efficiency of UAV-assisted search and rescue operations.

Addressing Uncertainty in LLMs to Enhance Reliability in Generative AI

Nov 04, 2024

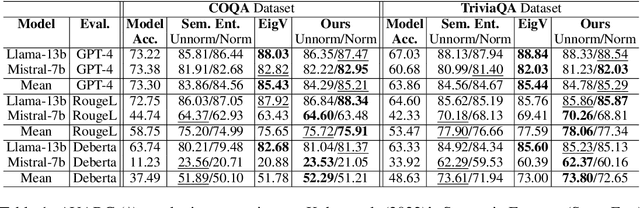

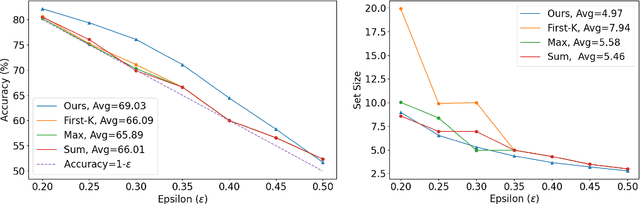

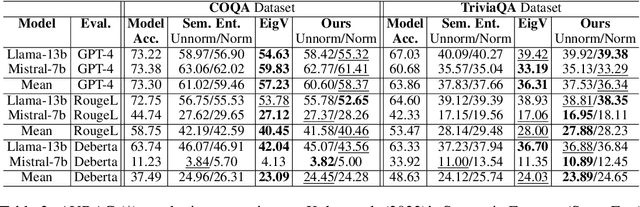

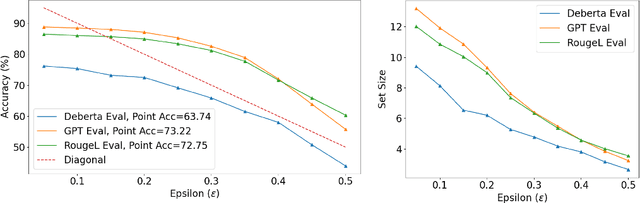

Abstract: In this paper, we present a dynamic semantic clustering approach inspired by the Chinese Restaurant Process, aimed at addressing uncertainty in the inference of Large Language Models (LLMs). We quantify the uncertainty of an LLM on a given query by calculating the entropy of the generated semantic clusters. Further, we propose leveraging the (negative) likelihood of these clusters as the (non)conformity score within the Conformal Prediction framework, allowing the model to predict a set of responses instead of a single output, thereby accounting for uncertainty in its predictions. We demonstrate the effectiveness of our uncertainty quantification (UQ) technique on two well-known question answering benchmarks, CoQA and TriviaQA, utilizing two LLMs, Llama2 and Mistral. Our approach achieves SOTA performance in UQ, as assessed by metrics such as AUROC, AUARC, and AURAC. The proposed conformal predictor is also shown to produce smaller prediction sets while maintaining the same probabilistic guarantee of including the correct response, in comparison to an existing SOTA conformal prediction baseline.
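As a rough illustration of the entropy-based uncertainty measure mentioned in the abstract, the sketch below computes the Shannon entropy of a set of cluster labels. It assumes that each sampled response has already been assigned to a semantic cluster and that cluster probabilities are estimated by simple empirical frequency; the function name `cluster_entropy` and the label format are hypothetical, and the paper's actual clustering and weighting scheme may differ.

```python
import math
from collections import Counter


def cluster_entropy(cluster_labels):
    """Shannon entropy (in nats) of the empirical cluster distribution.

    cluster_labels: one label per sampled response (hypothetical format).
    Probabilities are plain relative frequencies, which is an assumption.
    """
    counts = Counter(cluster_labels)
    total = sum(counts.values())
    return -sum((c / total) * math.log(c / total) for c in counts.values())


# All sampled responses fall in one cluster: low uncertainty.
confident = cluster_entropy(["paris", "paris", "paris", "paris"])

# Responses split evenly across two clusters: higher uncertainty.
uncertain = cluster_entropy(["paris", "lyon", "paris", "lyon"])
```

Under this reading, a query whose sampled answers all land in the same cluster gets an entropy of zero, and the entropy grows as the answers spread over more clusters.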

Resource-Constrained Heuristic for Max-SAT

Oct 11, 2024

Abstract: We propose a resource-constrained heuristic for instances of Max-SAT that iteratively decomposes a larger problem into smaller subcomponents that can be solved by optimized solvers and hardware. The unconstrained outer loop maintains the state space of a given problem and selects a subset of the SAT variables for optimization independent of previous calls. The resource-constrained inner loop maximizes the number of satisfiable clauses in the "sub-SAT" problem. Our outer loop is agnostic to the mechanisms of the inner loop, allowing for the use of traditional solvers for the optimization step. However, we can also transform the selected "sub-SAT" problem into a quadratic unconstrained binary optimization (QUBO) one and use specialized hardware for optimization. In contrast to existing solutions that convert a SAT instance into a QUBO one before decomposition, we choose a subset of the SAT variables before QUBO optimization. We analyze a set of variable selection methods, including a novel graph-based method that exploits the structure of a given SAT instance. The number of QUBO variables needed to encode a (sub-)SAT problem varies, so we additionally learn a model that predicts the size of sub-SAT problems that will fit a fixed-size QUBO solver. We empirically demonstrate our results on a set of randomly generated Max-SAT instances as well as real-world examples from the Max-SAT evaluation benchmarks, and we outperform existing QUBO decomposer solutions.
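The inner-loop idea described in the abstract (optimize only a chosen subset of variables while the rest stay fixed) can be sketched as follows. This is a minimal illustration, not the authors' method: it uses brute-force enumeration in place of a QUBO or traditional solver, clauses are assumed to be lists of signed integers in the DIMACS style, and the names `count_satisfied` and `optimize_subset` are hypothetical.

```python
from itertools import product


def count_satisfied(clauses, assignment):
    """Number of clauses with at least one true literal.

    clauses: list of clauses, each a list of nonzero ints (DIMACS style,
             negative means negated variable).
    assignment: dict mapping variable index to bool.
    """
    return sum(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def optimize_subset(clauses, assignment, subset):
    """Brute-force stand-in for the inner loop: try every setting of the
    variables in `subset`, keep all other variables fixed, and return the
    best full assignment found together with its satisfied-clause count."""
    best = dict(assignment)
    best_score = count_satisfied(clauses, best)
    for values in product([False, True], repeat=len(subset)):
        candidate = dict(assignment)
        candidate.update(zip(subset, values))
        score = count_satisfied(clauses, candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


# Toy instance: (x1 or x2), (not x1 or x3), (not x2 or not x3)
clauses = [[1, 2], [-1, 3], [-2, -3]]
start = {1: False, 2: False, 3: False}
improved, score = optimize_subset(clauses, start, subset=[1, 2])
```

An outer loop in this style would call `optimize_subset` repeatedly with different subsets; how those subsets are selected (for example, the graph-based method the abstract mentions) is not shown here.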

Direct Amortized Likelihood Ratio Estimation

Nov 17, 2023

Abstract: We introduce a new amortized likelihood ratio estimator for likelihood-free simulation-based inference (SBI). Our estimator is simple to train and estimates the likelihood ratio using a single forward pass of the neural estimator. Our approach directly computes the likelihood ratio between two competing parameter sets, which is different from the previous approach of comparing two neural network output values. We refer to our model as the direct neural ratio estimator (DNRE). As part of introducing the DNRE, we derive a corresponding Monte Carlo estimate of the posterior. We benchmark our new ratio estimator and compare to previous ratio estimators in the literature. We show that our new ratio estimator often outperforms these previous approaches. As a further contribution, we introduce a new derivative estimator for likelihood ratio estimators that enables us to compare likelihood-free Hamiltonian Monte Carlo (HMC) with random-walk Metropolis-Hastings (MH). We show that HMC is equally competitive, which has not been previously shown. Finally, we include a novel real-world application of SBI by using our neural ratio estimator to design a quadcopter. Code is available at https://github.com/SRI-CSL/dnre.

AircraftVerse: A Large-Scale Multimodal Dataset of Aerial Vehicle Designs

Jun 08, 2023

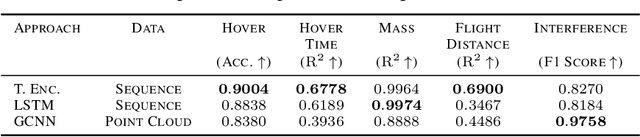

Abstract: We present AircraftVerse, a publicly available aerial vehicle design dataset. Aircraft design encompasses different physics domains and, hence, multiple modalities of representation. The evaluation of these cyber-physical system (CPS) designs requires the use of scientific analytical and simulation models ranging from computer-aided design tools for structural and manufacturing analysis, computational fluid dynamics tools for drag and lift computation, and battery models for energy estimation, to simulation models for flight control and dynamics. AircraftVerse contains 27,714 diverse air vehicle designs - the largest corpus of engineering designs with this level of complexity. Each design comprises the following artifacts: a symbolic design tree describing topology, propulsion subsystem, battery subsystem, and other design details; a STandard for the Exchange of Product (STEP) model data; a 3D CAD design using a stereolithography (STL) file format; a 3D point cloud for the shape of the design; and evaluation results from high-fidelity state-of-the-art physics models that characterize performance metrics such as maximum flight distance and hover time. We also present baseline surrogate models that use different modalities of design representation to predict design performance metrics, which we provide as part of our dataset release. Finally, we discuss the potential impact of this dataset on the use of learning in aircraft design and, more generally, in CPS. AircraftVerse is accompanied by a data card, and it is released under the Creative Commons Attribution-ShareAlike (CC BY-SA) license. The dataset is hosted at https://zenodo.org/record/6525446, baseline models and code at https://github.com/SRI-CSL/AircraftVerse, and the dataset description at https://aircraftverse.onrender.com/.

Design of Unmanned Air Vehicles Using Transformer Surrogate Models

Nov 11, 2022

Abstract: Computer-aided design (CAD) is a promising new area for the application of artificial intelligence (AI) and machine learning (ML). The current practice of design of cyber-physical systems uses the digital twin methodology, wherein the actual physical design is preceded by building detailed models that can be evaluated by physics simulation models. These physics models are often slow, and the manual design process often relies on exploring nearby variations of existing designs. AI holds the promise of breaking these design silos and increasing the diversity and performance of designs by accelerating the exploration of the design space. In this paper, we focus on the design of electrical unmanned aerial vehicles (UAVs). High-density batteries and purely electrical propulsion systems have disrupted the space of UAV design, making this domain an ideal target for AI-based design. We develop an AI Designer that synthesizes novel UAV designs. Our approach uses a deep transformer model with a novel domain-specific encoding such that we can evaluate the performance of newly proposed designs without running expensive flight dynamics models and CAD tools. We demonstrate that our approach significantly reduces the overall compute requirements for the design process and accelerates the design space exploration. Finally, we identify future research directions to achieve full-scale deployment of AI-assisted CAD for UAVs.

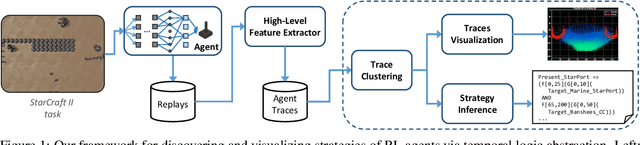

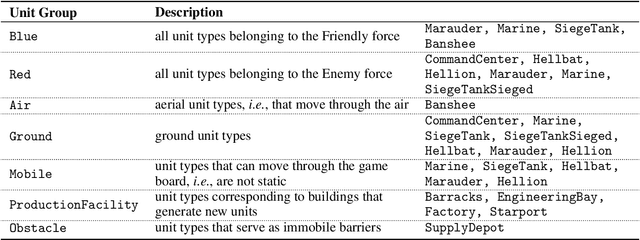

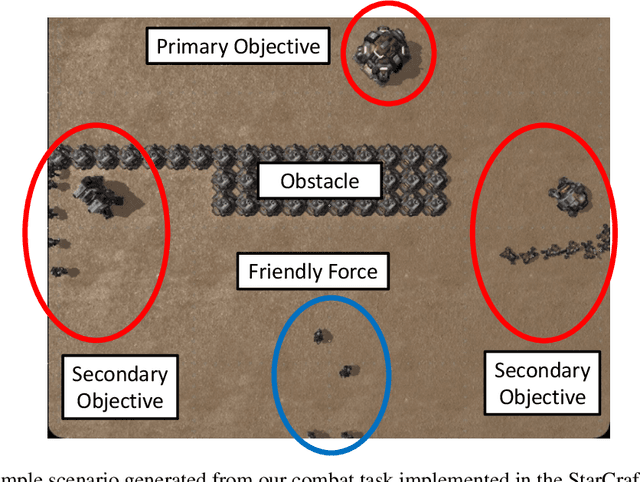

A Framework for Understanding and Visualizing Strategies of RL Agents

Aug 17, 2022

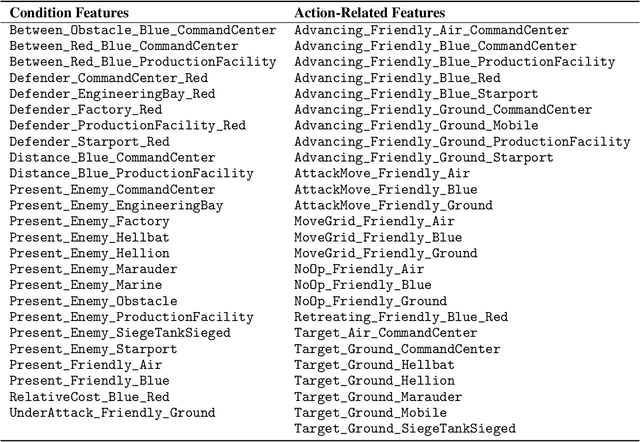

Abstract: Recent years have seen significant advances in explainable AI as the need to understand deep learning models has gained importance with the increased emphasis on trust and ethics in AI. Comprehensible models for sequential decision tasks are a particular challenge as they require understanding not only individual predictions but a series of predictions that interact with environmental dynamics. We present a framework for learning comprehensible models of sequential decision tasks in which agent strategies are characterized using temporal logic formulas. Given a set of agent traces, we first cluster the traces using a novel embedding method that captures frequent action patterns. We then search for logical formulas that explain the agent strategies in the different clusters. We evaluate our framework on combat scenarios in StarCraft II (SC2), using traces from a handcrafted expert policy and a trained reinforcement learning agent. We implemented a feature extractor for SC2 environments that extracts traces as sequences of high-level features describing both the state of the environment and the agent's local behavior from agent replays. We further designed a visualization tool depicting the movement of units in the environment that helps understand how different task conditions lead to distinct agent behavior patterns in each trace cluster. Experimental results show that our framework is capable of separating agent traces into distinct groups of behaviors for which our approach to strategy inference produces consistent, meaningful, and easily understood strategy descriptions.

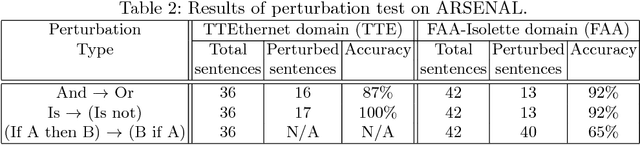

ARSENAL: Automatic Requirements Specification Extraction from Natural Language

Apr 20, 2016

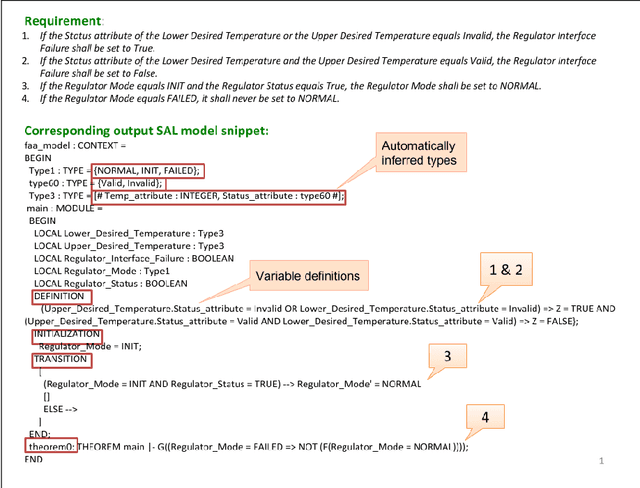

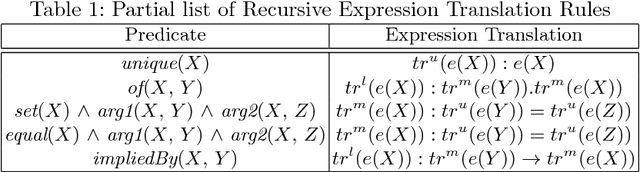

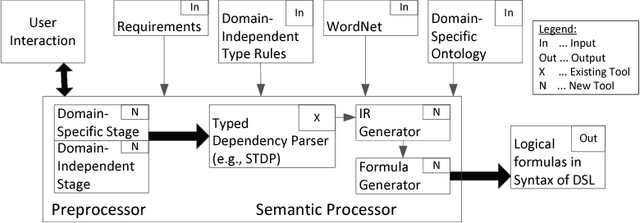

Abstract: Requirements are informal and semi-formal descriptions of the expected behavior of a complex system from the viewpoints of its stakeholders (customers, users, operators, designers, and engineers). However, for the purpose of design, testing, and verification for critical systems, we can transform requirements into formal models that can be analyzed automatically. ARSENAL is a framework and methodology for systematically transforming natural language (NL) requirements into analyzable formal models and logic specifications. These models can be analyzed for consistency and implementability. The ARSENAL methodology is specialized to individual domains, but the approach is general enough to be adapted to new domains.