Gabor Karsai

Formalizing Stateful Behavior Trees

Nov 21, 2024

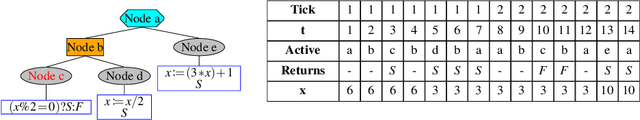

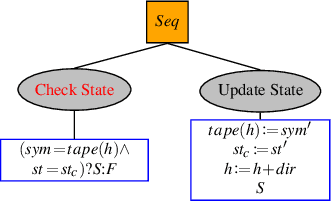

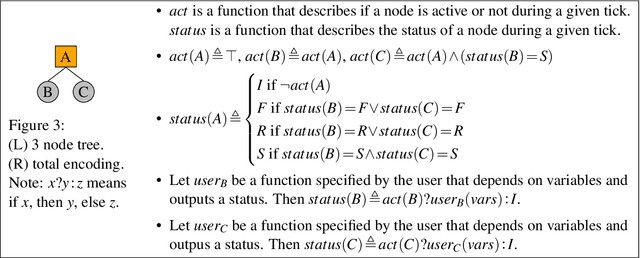

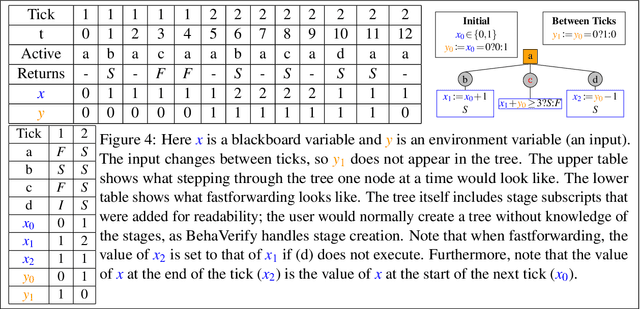

Abstract: Behavior Trees (BTs) are high-level controllers that are useful in a variety of planning tasks and are gaining traction in robotic mission planning. As they gain popularity in safety-critical domains, it is important to formalize their syntax and semantics, as well as to verify their properties. In this paper, we formalize a class of BTs we call Stateful Behavior Trees (SBTs) that have auxiliary variables and operate in an environment that can change over time. SBTs have access to persistent shared memory (often known as a blackboard) that keeps track of these auxiliary variables. We demonstrate that SBTs are equivalent in computational power to Turing Machines when the blackboard can store mathematical (i.e., unbounded) integers. We further identify syntactic assumptions under which SBTs have computational power equivalent to finite state automata, specifically where the auxiliary variables are of finitary types. We present a domain-specific language (DSL) for writing SBTs and adapt the tool BehaVerify for use with this DSL. This new DSL in BehaVerify supports interfacing with popular BT libraries in Python, and also provides generation of Haskell code and nuXmv models, the latter of which are used for model checking temporal logic specifications for the SBTs. We include examples and scalability results where BehaVerify outperforms another verification tool by a factor of 100.

* In Proceedings FMAS2024, arXiv:2411.13215
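
To make the idea of a behavior tree with a blackboard concrete, here is a minimal Python sketch. It is not the paper's DSL and does not use BehaVerify; the class names (`Leaf`, `Sequence`, `Selector`), the `run` helper, and the `count` variable are all hypothetical, chosen only for illustration. The blackboard is a plain dictionary that persists between ticks.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Leaf:
    """Wraps a function that reads or writes the blackboard and returns a Status."""

    def __init__(self, fn):
        self.fn = fn

    def tick(self, blackboard):
        return self.fn(blackboard)


class Sequence:
    """Ticks children left to right; stops at the first child that does not succeed."""

    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Selector:
    """Ticks children left to right; stops at the first child that does not fail."""

    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def goal_reached(blackboard):
    # Condition leaf: only reads the auxiliary variable.
    return Status.SUCCESS if blackboard["count"] >= 3 else Status.FAILURE


def increment(blackboard):
    # Action leaf: updates the auxiliary variable and asks to be ticked again.
    blackboard["count"] += 1
    return Status.RUNNING


def run(tree, blackboard, max_ticks):
    """Tick the tree until it stops returning RUNNING or max_ticks is reached."""
    for ticks in range(1, max_ticks + 1):
        status = tree.tick(blackboard)
        if status is not Status.RUNNING:
            return status, ticks
    return Status.RUNNING, max_ticks


tree = Selector(Leaf(goal_reached), Leaf(increment))
blackboard = {"count": 0}  # persistent shared memory
final_status, ticks_used = run(tree, blackboard, max_ticks=10)
```

Python integers are arbitrary precision, so `count` here behaves like the unbounded integers mentioned in the abstract; restricting it to a fixed finite range would correspond to the finitary case.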

Shrinking POMCP: A Framework for Real-Time UAV Search and Rescue

Nov 20, 2024

Abstract: Efficient path optimization for drones in search and rescue operations faces challenges, including limited visibility, time constraints, and complex information gathering in urban environments. We present a comprehensive approach to optimize UAV-based search and rescue operations in neighborhood areas, utilizing both a 3D AirSim-ROS2 simulator and a 2D simulator. The path planning problem is formulated as a partially observable Markov decision process (POMDP), and we propose a novel "Shrinking POMCP" approach to address time constraints. In the AirSim environment, we integrate our approach with a probabilistic world model for belief maintenance and a neurosymbolic navigator for obstacle avoidance. The 2D simulator employs surrogate ROS2 nodes with equivalent functionality. We compare trajectories generated by different approaches in the 2D simulator and evaluate performance across various belief types in the 3D AirSim-ROS simulator. Experimental results from both simulators demonstrate that our proposed shrinking POMCP solution achieves significant improvements in search times compared to alternative methods, showcasing its potential for enhancing the efficiency of UAV-assisted search and rescue operations.

Dynamic Simplex: Balancing Safety and Performance in Autonomous Cyber Physical Systems

Feb 20, 2023

Abstract: Learning Enabled Components (LECs) have greatly assisted cyber-physical systems in achieving higher levels of autonomy. However, the susceptibility of LECs to dynamic and uncertain operating conditions is a critical challenge for the safety of these systems. Redundant controller architectures have been widely adopted for safety assurance in such contexts. These architectures augment LEC "performant" controllers that are difficult to verify with "safety" controllers and the decision logic to switch between them. While these architectures ensure safety, we point out two limitations. First, they are trained offline to learn a conservative policy of always selecting a controller that maintains the system's safety, which limits the system's adaptability to dynamic and non-stationary environments. Second, they do not support reverse switching from the safety controller to the performant controller, even when the threat to safety is no longer present. To address these limitations, we propose a dynamic simplex strategy with an online controller switching logic that allows two-way switching. We consider switching as a sequential decision-making problem and model it as a semi-Markov decision process. We leverage a combination of a myopic selector using surrogate models (for the forward switch) and a non-myopic planner (for the reverse switch) to balance safety and performance. We evaluate this approach using an autonomous vehicle case study in the CARLA simulator under different driving conditions, locations, and component failures. We show that the proposed approach results in fewer collisions and higher performance than state-of-the-art alternatives.

Risk-Aware Scene Sampling for Dynamic Assurance of Autonomous Systems

Feb 28, 2022

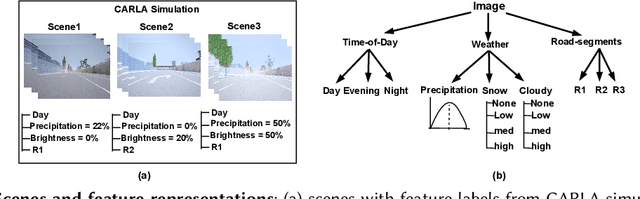

Abstract: Autonomous Cyber-Physical Systems must often operate under uncertainties like sensor degradation and shifts in the operating conditions, which increase their operational risk. Dynamic Assurance of these systems requires designing runtime safety components like Out-of-Distribution detectors and risk estimators, which require labeled data from different operating modes of the system that belong to scenes with adverse operating conditions, sensor faults, and actuator faults. Collecting real-world data of these scenes can be expensive and sometimes not feasible. So, scenario description languages with samplers like random and grid search are available to generate synthetic data from simulators, replicating these real-world scenes. However, we point out three limitations in using these conventional samplers. First, they are passive samplers, which do not use the feedback of previous results in the sampling process. Second, the variables to be sampled may have constraints that are often not included. Third, they do not balance the tradeoff between exploration and exploitation, which we hypothesize is necessary for better search space coverage. We present a scene generation approach with two samplers called Random Neighborhood Search (RNS) and Guided Bayesian Optimization (GBO), which extend the conventional random search and Bayesian Optimization search to address these limitations. Also, to facilitate the samplers, we use a risk-based metric that evaluates how risky the scene was for the system. We demonstrate our approach using an Autonomous Vehicle example in CARLA simulation. To evaluate our samplers, we compared them against the baselines of random search, grid search, and Halton sequence search. Our RNS and GBO samplers sampled a higher percentage of high-risk scenes (83% and 92%, respectively) than the grid, random, and Halton samplers (56%, 66%, and 71%, respectively).
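
For readers unfamiliar with the Halton baseline named in this abstract, the following Python sketch shows the standard radical-inverse construction of a Halton sequence and how its points could be scaled to scene parameters. It illustrates only the passive baseline, not the paper's RNS or GBO samplers. The parameter names and ranges in `bounds` are hypothetical and are not taken from the paper.

```python
def halton(index, base):
    """Return element `index` (1-based) of the radical-inverse sequence in `base`."""
    if index < 1 or base < 2:
        raise ValueError("index must be >= 1 and base must be >= 2")
    result, fraction = 0.0, 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def halton_points(n, bases=(2, 3)):
    """First n points of a Halton sequence in [0, 1)^d, one coprime base per dimension."""
    return [tuple(halton(i, b) for b in bases) for i in range(1, n + 1)]


def scale(point, bounds):
    """Map a unit-cube point onto per-parameter (low, high) ranges."""
    return tuple(lo + u * (hi - lo) for u, (lo, hi) in zip(point, bounds))


# Hypothetical scene parameters: precipitation (%) and time of day (hours).
bounds = [(0.0, 100.0), (0.0, 24.0)]
scenes = [scale(p, bounds) for p in halton_points(4)]
```

Because each point depends only on its index, the sequence covers the space evenly but ignores the outcome of earlier samples, which is the "passive sampler" limitation the abstract describes.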

Efficient Out-of-Distribution Detection Using Latent Space of $β$-VAE for Cyber-Physical Systems

Aug 26, 2021

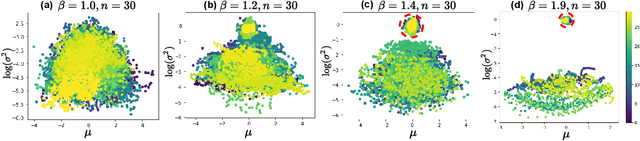

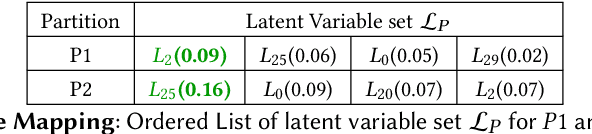

Abstract: Deep Neural Networks are actively being used in the design of autonomous Cyber-Physical Systems (CPSs). The advantage of these models is their ability to handle high-dimensional state spaces and learn compact surrogate representations of the operational state spaces. However, the problem is that the sampled observations used for training the model may never cover the entire state space of the physical environment, and as a result, the system will likely operate in conditions that do not belong to the training distribution. These conditions that do not belong to the training distribution are referred to as Out-of-Distribution (OOD). Detecting OOD conditions at runtime is critical for the safety of CPSs. In addition, it is also desirable to identify the context or the feature(s) that are the source of OOD to select an appropriate control action to mitigate the consequences that may arise because of the OOD condition. In this paper, we study this problem as a multi-labeled time series OOD detection problem over images, where the OOD is defined both sequentially across short time windows (change points) as well as across the training data distribution. A common approach to solving this problem is the use of multi-chained one-class classifiers. However, this approach is expensive for CPSs that have limited computational resources and require short inference times. Our contribution is an approach to design and train a single $\beta$-Variational Autoencoder detector with a partially disentangled latent space sensitive to variations in image features. We use the feature-sensitive latent variables in the latent space to detect OOD images and identify the most likely feature(s) responsible for the OOD. We demonstrate our approach using an Autonomous Vehicle in the CARLA simulator and a real-world automotive dataset called nuImages.

ReSonAte: A Runtime Risk Assessment Framework for Autonomous Systems

Feb 18, 2021

Abstract: Autonomous CPSs are often required to handle uncertainties and self-manage the system operation in response to problems and increasing risk in the operating paradigm. This risk may arise due to distribution shifts, environmental context, or failure of software or hardware components. Traditional techniques for risk assessment focus on design-time techniques such as hazard analysis, risk reduction, and assurance cases among others. However, these static, design-time techniques do not consider the dynamic contexts and failures the systems face at runtime. We hypothesize that this requires a dynamic assurance approach that computes the likelihood of unsafe conditions or system failures considering the safety requirements, assumptions made at design time, past failures in a given operating context, and the likelihood of system component failures. We introduce the ReSonAte dynamic risk estimation framework for autonomous systems. ReSonAte reasons over Bow-Tie Diagrams (BTDs) which capture information about hazard propagation paths and control strategies. Our innovation is the extension of the BTD formalism with attributes for modeling the conditional relationships with the state of the system and environment. We also describe a technique for estimating these conditional relationships and equations for estimating risk based on the state of the system and environment. To help with this process, we provide a scenario modeling procedure that can use the prior distributions of the scenes and threat conditions to generate the data required for estimating the conditional relationships. To improve scalability and reduce the amount of data required, this process considers each control strategy in isolation and composes several single-variate distributions into one complete multi-variate distribution for the control strategy in question.

Workflow Automation for Cyber Physical System Development Processes

Apr 12, 2020

Abstract: Development of Cyber Physical Systems (CPSs) requires close interaction between developers with expertise in many domains to achieve ever-increasing demands for improved performance, reduced cost, and more system autonomy. Each engineering discipline commonly relies on domain-specific modeling languages, and analysis and execution of these models are often automated with appropriate tooling. However, integration between these heterogeneous models and tools is often lacking, and most of the burden for inter-operation of these tools is placed on system developers. To address this problem, we introduce a workflow modeling language for the automation of complex CPS development processes and implement a platform for execution of these models in the Assurance-based Learning-enabled CPS (ALC) Toolchain. Several illustrative examples are provided which show how these workflow models are able to automate many time-consuming integration tasks previously performed manually by system developers.

A Methodology for Automating Assurance Case Generation

Mar 11, 2020

Abstract: Safety cases have become an integral component of safety certification in various Cyber Physical System domains including automotive, aviation, medical devices, and military. The certification processes for these systems are stringent and require robust safety assurance arguments and substantial evidence backing. Despite the strict requirements, current practices still rely on manual methods that are brittle and lack a systematic approach and thorough consideration of sound arguments. In addition, stringent certification requirements and ever-increasing system complexity make ad-hoc, manual assurance case generation (ACG) inefficient, time consuming, and expensive. To improve the current state of practice, we introduce a structured ACG tool which uses system design artifacts, accumulated evidence, and developer expertise to construct a safety case and evaluate it in an automated manner. We also illustrate the applicability of the ACG tool on a remote-control car testbed case study.

BARISTA: Efficient and Scalable Serverless Serving System for Deep Learning Prediction Services

Apr 11, 2019

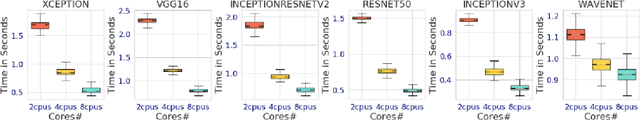

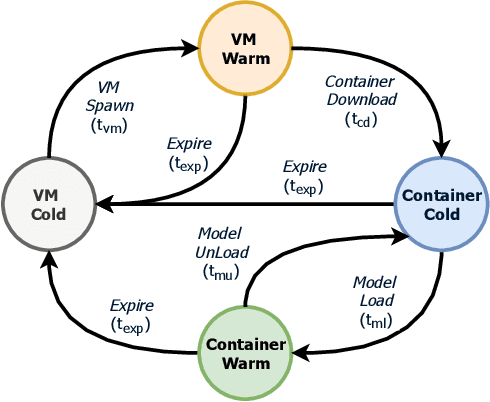

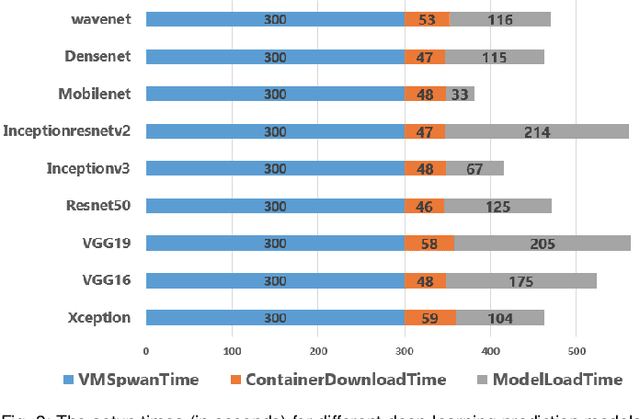

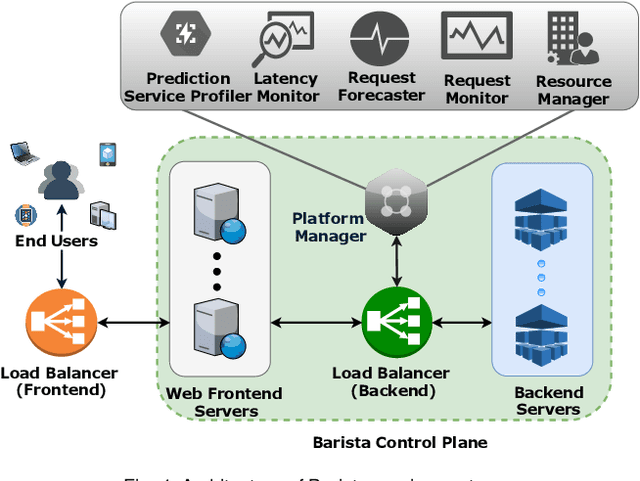

Abstract: Pre-trained deep learning models are increasingly being used to offer a variety of compute-intensive predictive analytics services such as fitness tracking, speech recognition, and image recognition. The stateless and highly parallelizable nature of deep learning models makes them well-suited for the serverless computing paradigm. However, making effective resource management decisions for these services is a hard problem due to the dynamic workloads and the diverse set of available resource configurations that have their own deployment and management costs. To address these challenges, we present a distributed and scalable deep-learning prediction serving system called Barista and make the following contributions. First, we present a fast and effective methodology for forecasting workloads by identifying various trends. Second, we formulate an optimization problem to minimize the total cost incurred while ensuring bounded prediction latency with reasonable accuracy. Third, we propose an efficient heuristic to identify suitable compute resource configurations. Fourth, we propose an intelligent agent to allocate and manage the compute resources by horizontal and vertical scaling to maintain the required prediction latency. Finally, using representative real-world workloads for an urban transportation service, we demonstrate and validate the capabilities of Barista.

Augmenting Learning Components for Safety in Resource Constrained Autonomous Robots

Apr 04, 2019

Abstract: Learning enabled components (LECs) trained using data-driven algorithms are increasingly being used in autonomous robots commonly found in factories, hospitals, and educational laboratories. However, these LECs do not provide any safety guarantees, and testing them is challenging. In this paper, we introduce a framework that performs weighted simplex strategy based supervised safety control, resource management, and confidence estimation of autonomous robots. Specifically, we describe two weighted simplex strategies: (a) a simple weighted simplex strategy (SW-Simplex) that computes a weighted controller output by comparing the decisions between a safety supervisor and an LEC, and (b) a context-sensitive weighted simplex strategy (CSW-Simplex) that computes a context-aware weighted controller output. We use reinforcement learning to learn the contextual weights. We also introduce a system monitor that uses the current state information and a Bayesian network model learned from past data to estimate the probability of the robotic system staying in the safe working region. To aid resource constrained robots in performing complex computations of these weighted simplex strategies, we describe a resource manager that offloads tasks to available fog nodes. The paper also describes a hardware testbed called DeepNNCar, which is a low cost resource-constrained RC car, built to perform autonomous driving. Using the hardware, we show that SW-Simplex and CSW-Simplex have 40% and 60% fewer safety violations, respectively, while demonstrating higher optimized speed during indoor driving (~0.40 m/s) than the original system (using only LECs).
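
As a rough illustration of what a "weighted controller output" could look like, the Python sketch below blends an LEC command with a safety supervisor command using a convex combination. The abstract does not state the blending formula, so the convex combination, the function name `weighted_output`, and the example values are all assumptions made for illustration, not the paper's SW-Simplex or CSW-Simplex definitions.

```python
def weighted_output(lec_command, safety_command, lec_weight):
    """Blend two scalar commands; lec_weight in [0, 1] is the trust placed in the LEC.

    Assumption: a convex combination. lec_weight = 0 yields the pure safety
    command and lec_weight = 1 yields the pure LEC command.
    """
    if not 0.0 <= lec_weight <= 1.0:
        raise ValueError("lec_weight must lie in [0, 1]")
    return lec_weight * lec_command + (1.0 - lec_weight) * safety_command


# Hypothetical steering commands in normalized units.
blended = weighted_output(lec_command=1.0, safety_command=0.0, lec_weight=0.25)
```

In a context-sensitive variant, `lec_weight` would be chosen per situation rather than fixed; the abstract says those contextual weights are learned with reinforcement learning.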
