Saddek Bensalem

Randomized Smoothing Meets Vision-Language Models

Sep 19, 2025Abstract:Randomized smoothing (RS) is one of the prominent techniques to ensure the correctness of machine learning models, where point-wise robustness certificates can be derived analytically. While RS is well understood for classification, its application to generative models is unclear, since their outputs are sequences rather than labels. We resolve this by connecting generative outputs to an oracle classification task and showing that RS can still be enabled: the final response can be classified as a discrete action (e.g., service-robot commands in VLAs), as harmful vs. harmless (content moderation or toxicity detection in VLMs), or even applying oracles to cluster answers into semantically equivalent ones. Provided that the error rate for the oracle classifier comparison is bounded, we develop the theory that associates the number of samples with the corresponding robustness radius. We further derive improved scaling laws analytically relating the certified radius and accuracy to the number of samples, showing that the earlier result of 2 to 3 orders of magnitude fewer samples sufficing with minimal loss remains valid even under weaker assumptions. Together, these advances make robustness certification both well-defined and computationally feasible for state-of-the-art VLMs, as validated against recent jailbreak-style adversarial attacks.

Mitigating Hallucinations in YOLO-based Object Detection Models: A Revisit to Out-of-Distribution Detection

Mar 10, 2025Abstract:Object detection systems must reliably perceive objects of interest without being overly confident to ensure safe decision-making in dynamic environments. Filtering techniques based on out-of-distribution (OoD) detection are commonly added as an extra safeguard to filter hallucinations caused by overconfidence in novel objects. Nevertheless, evaluating YOLO-family detectors and their filters under existing OoD benchmarks often leads to unsatisfactory performance. This paper studies the underlying reasons for performance bottlenecks and proposes a methodology to improve performance fundamentally. Our first contribution is a calibration of all existing evaluation results: Although images in existing OoD benchmark datasets are claimed not to have objects within in-distribution (ID) classes (i.e., categories defined in the training dataset), around 13% of objects detected by the object detector are actually ID objects. Dually, the ID dataset containing OoD objects can also negatively impact the decision boundary of filters. These ultimately lead to a significantly imprecise performance estimation. Our second contribution is to consider the task of hallucination reduction as a joint pipeline of detectors and filters. By developing a methodology to carefully synthesize an OoD dataset that semantically resembles the objects to be detected, and using the crafted OoD dataset in the fine-tuning of YOLO detectors to suppress the objectness score, we achieve a 88% reduction in overall hallucination error with a combined fine-tuned detection and filtering system on the self-driving benchmark BDD-100K. Our code and dataset are available at: https://gricad-gitlab.univ-grenoble-alpes.fr/dnn-safety/m-hood.

Safeguarding Large Language Models: A Survey

Jun 03, 2024

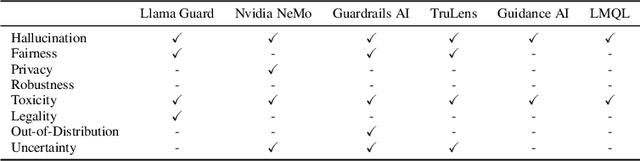

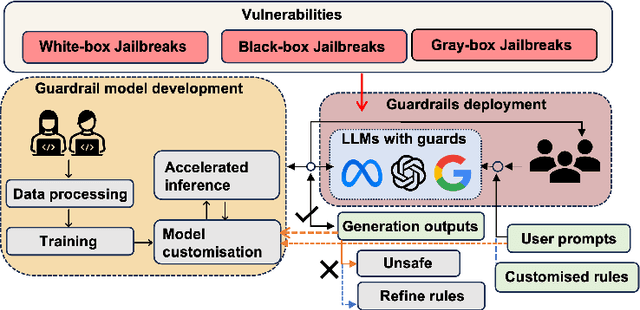

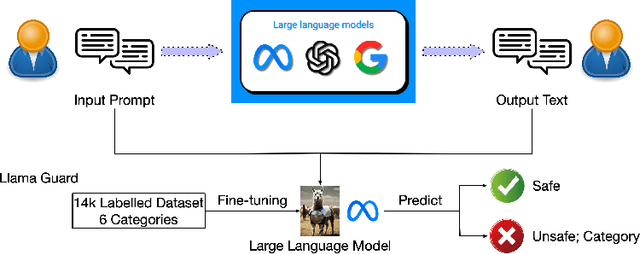

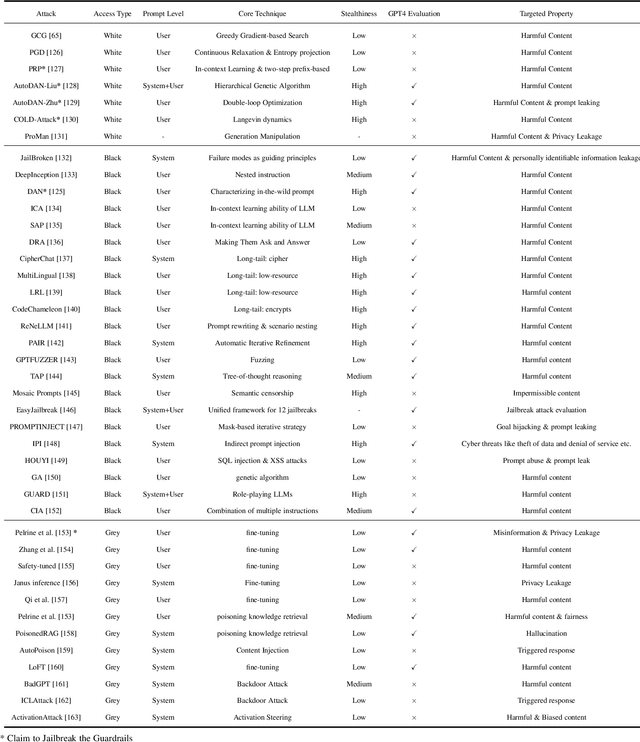

Abstract:In the burgeoning field of Large Language Models (LLMs), developing a robust safety mechanism, colloquially known as "safeguards" or "guardrails", has become imperative to ensure the ethical use of LLMs within prescribed boundaries. This article provides a systematic literature review on the current status of this critical mechanism. It discusses its major challenges and how it can be enhanced into a comprehensive mechanism dealing with ethical issues in various contexts. First, the paper elucidates the current landscape of safeguarding mechanisms that major LLM service providers and the open-source community employ. This is followed by the techniques to evaluate, analyze, and enhance some (un)desirable properties that a guardrail might want to enforce, such as hallucinations, fairness, privacy, and so on. Based on them, we review techniques to circumvent these controls (i.e., attacks), to defend the attacks, and to reinforce the guardrails. While the techniques mentioned above represent the current status and the active research trends, we also discuss several challenges that cannot be easily dealt with by the methods and present our vision on how to implement a comprehensive guardrail through the full consideration of multi-disciplinary approach, neural-symbolic method, and systems development lifecycle.

Formal Specification, Assessment, and Enforcement of Fairness for Generative AIs

Apr 26, 2024Abstract:Reinforcing or even exacerbating societal biases and inequalities will increase significantly as generative AI increasingly produces useful artifacts, from text to images and beyond, for the real world. We address these issues by formally characterizing the notion of fairness for generative AI as a basis for monitoring and enforcing fairness. We define two levels of fairness using the notion of infinite sequences of abstractions of AI-generated artifacts such as text or images. The first is the fairness demonstrated on the generated sequences, which is evaluated only on the outputs while agnostic to the prompts and models used. The second is the inherent fairness of the generative AI model, which requires that fairness be manifested when input prompts are neutral, that is, they do not explicitly instruct the generative AI to produce a particular type of output. We also study relative intersectional fairness to counteract the combinatorial explosion of fairness when considering multiple categories together with lazy fairness enforcement. Finally, fairness monitoring and enforcement are tested against some current generative AI models.

BAM: Box Abstraction Monitors for Real-time OoD Detection in Object Detection

Mar 27, 2024

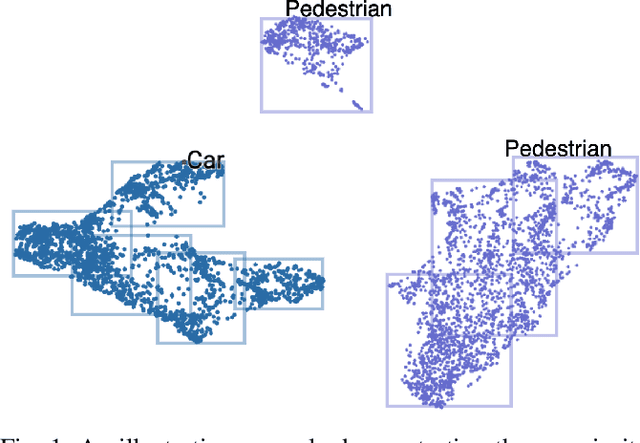

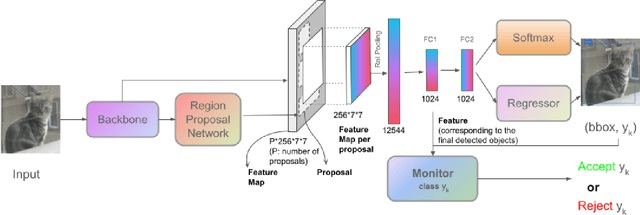

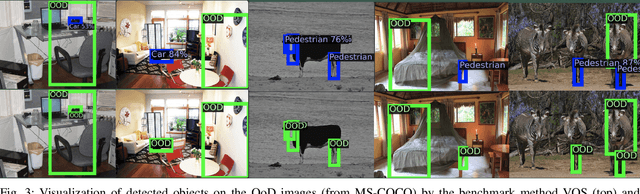

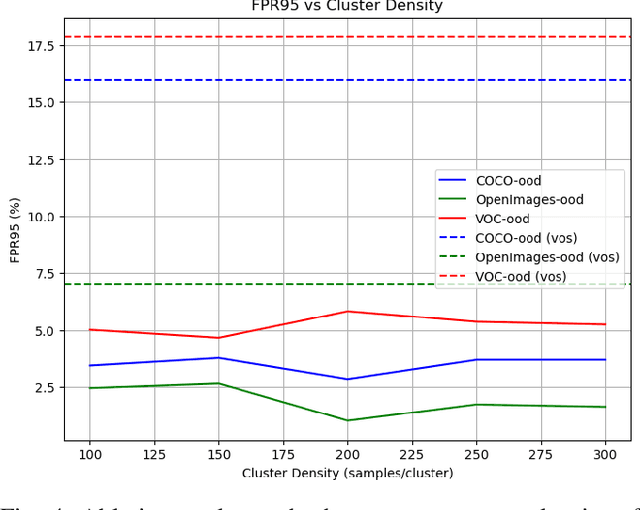

Abstract:Out-of-distribution (OoD) detection techniques for deep neural networks (DNNs) become crucial thanks to their filtering of abnormal inputs, especially when DNNs are used in safety-critical applications and interact with an open and dynamic environment. Nevertheless, integrating OoD detection into state-of-the-art (SOTA) object detection DNNs poses significant challenges, partly due to the complexity introduced by the SOTA OoD construction methods, which require the modification of DNN architecture and the introduction of complex loss functions. This paper proposes a simple, yet surprisingly effective, method that requires neither retraining nor architectural change in object detection DNN, called Box Abstraction-based Monitors (BAM). The novelty of BAM stems from using a finite union of convex box abstractions to capture the learned features of objects for in-distribution (ID) data, and an important observation that features from OoD data are more likely to fall outside of these boxes. The union of convex regions within the feature space allows the formation of non-convex and interpretable decision boundaries, overcoming the limitations of VOS-like detectors without sacrificing real-time performance. Experiments integrating BAM into Faster R-CNN-based object detection DNNs demonstrate a considerably improved performance against SOTA OoD detection techniques.

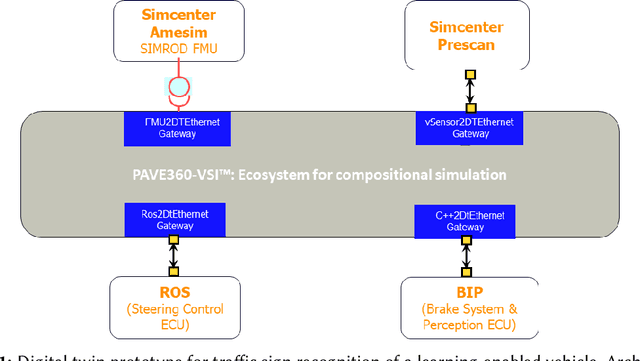

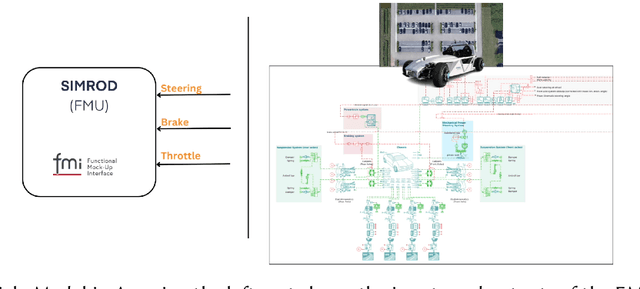

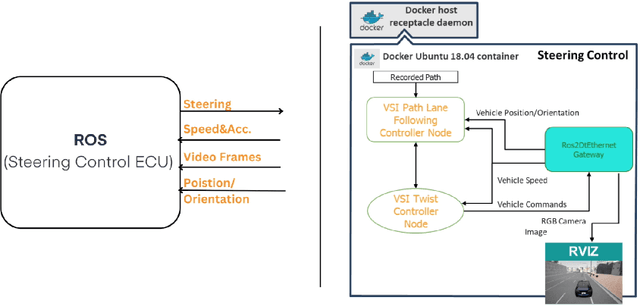

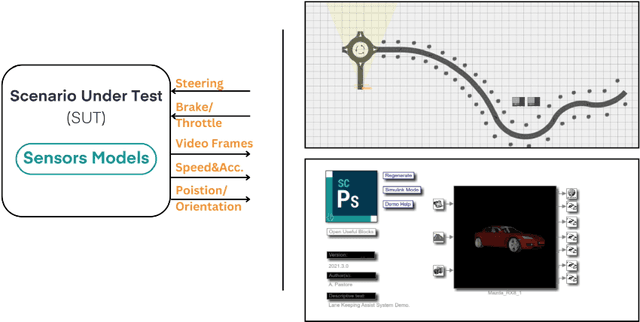

A Digital Twin prototype for traffic sign recognition of a learning-enabled autonomous vehicle

Feb 14, 2024

Abstract:In this paper, we present a novel digital twin prototype for a learning-enabled self-driving vehicle. The primary objective of this digital twin is to perform traffic sign recognition and lane keeping. The digital twin architecture relies on co-simulation and uses the Functional Mock-up Interface and SystemC Transaction Level Modeling standards. The digital twin consists of four clients, i) a vehicle model that is designed in Amesim tool, ii) an environment model developed in Prescan, iii) a lane-keeping controller designed in Robot Operating System, and iv) a perception and speed control module developed in the formal modeling language of BIP (Behavior, Interaction, Priority). These clients interface with the digital twin platform, PAVE360-Veloce System Interconnect (PAVE360-VSI). PAVE360-VSI acts as the co-simulation orchestrator and is responsible for synchronization, interconnection, and data exchange through a server. The server establishes connections among the different clients and also ensures adherence to the Ethernet protocol. We conclude with illustrative digital twin simulations and recommendations for future work.

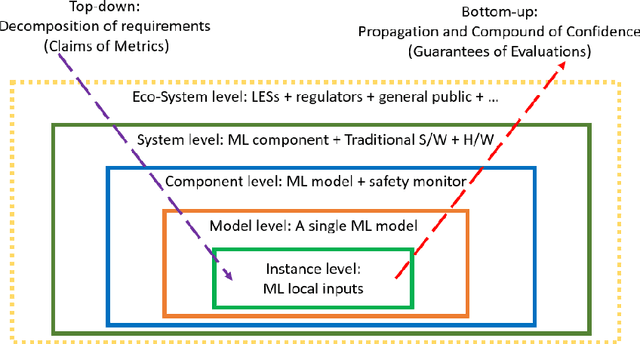

What, Indeed, is an Achievable Provable Guarantee for Learning-Enabled Safety Critical Systems

Jul 20, 2023

Abstract:Machine learning has made remarkable advancements, but confidently utilising learning-enabled components in safety-critical domains still poses challenges. Among the challenges, it is known that a rigorous, yet practical, way of achieving safety guarantees is one of the most prominent. In this paper, we first discuss the engineering and research challenges associated with the design and verification of such systems. Then, based on the observation that existing works cannot actually achieve provable guarantees, we promote a two-step verification method for the ultimate achievement of provable statistical guarantees.

Towards Rigorous Design of OoD Detectors

Jun 14, 2023Abstract:Out-of-distribution (OoD) detection techniques are instrumental for safety-related neural networks. We are arguing, however, that current performance-oriented OoD detection techniques geared towards matching metrics such as expected calibration error, are not sufficient for establishing safety claims. What is missing is a rigorous design approach for developing, verifying, and validating OoD detectors. These design principles need to be aligned with the intended functionality and the operational domain. Here, we formulate some of the key technical challenges, together with a possible way forward, for developing a rigorous and safety-related design methodology for OoD detectors.

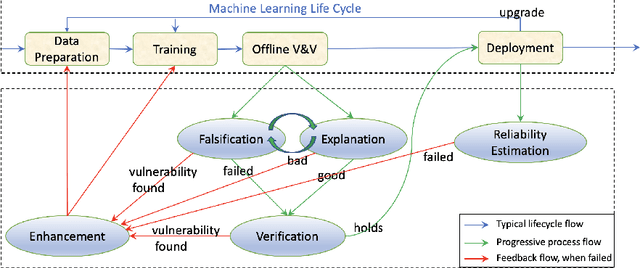

A Survey of Safety and Trustworthiness of Large Language Models through the Lens of Verification and Validation

May 19, 2023

Abstract:Large Language Models (LLMs) have exploded a new heatwave of AI, for their ability to engage end-users in human-level conversations with detailed and articulate answers across many knowledge domains. In response to their fast adoption in many industrial applications, this survey concerns their safety and trustworthiness. First, we review known vulnerabilities of the LLMs, categorising them into inherent issues, intended attacks, and unintended bugs. Then, we consider if and how the Verification and Validation (V&V) techniques, which have been widely developed for traditional software and deep learning models such as convolutional neural networks, can be integrated and further extended throughout the lifecycle of the LLMs to provide rigorous analysis to the safety and trustworthiness of LLMs and their applications. Specifically, we consider four complementary techniques: falsification and evaluation, verification, runtime monitoring, and ethical use. Considering the fast development of LLMs, this survey does not intend to be complete (although it includes 300 references), especially when it comes to the applications of LLMs in various domains, but rather a collection of organised literature reviews and discussions to support the quick understanding of the safety and trustworthiness issues from the perspective of V&V.

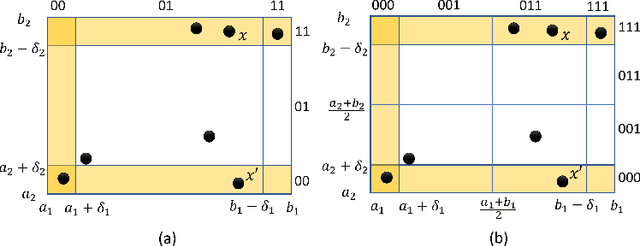

Prioritizing Corners in OoD Detectors via Symbolic String Manipulation

May 16, 2022

Abstract:For safety assurance of deep neural networks (DNNs), out-of-distribution (OoD) monitoring techniques are essential as they filter spurious input that is distant from the training dataset. This paper studies the problem of systematically testing OoD monitors to avoid cases where an input data point is tested as in-distribution by the monitor, but the DNN produces spurious output predictions. We consider the definition of "in-distribution" characterized in the feature space by a union of hyperrectangles learned from the training dataset. Thus the testing is reduced to finding corners in hyperrectangles distant from the available training data in the feature space. Concretely, we encode the abstract location of every data point as a finite-length binary string, and the union of all binary strings is stored compactly using binary decision diagrams (BDDs). We demonstrate how to use BDDs to symbolically extract corners distant from all data points within the training set. Apart from test case generation, we explain how to use the proposed corners to fine-tune the DNN to ensure that it does not predict overly confidently. The result is evaluated over examples such as number and traffic sign recognition.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge