Hasan Esen

Online Collision Risk Estimation via Monocular Depth-Aware Object Detectors and Fuzzy Inference

Nov 09, 2024

Abstract: This paper presents a monitoring framework that infers the level of autonomous vehicle (AV) collision risk based on its object detector's performance using only monocular camera images. Essentially, the framework takes two sets of predictions produced by different algorithms and associates their inconsistencies with the collision risk via fuzzy inference. The first set of predictions is obtained by retrieving safety-critical 2.5D objects from a depth map, and the second set comes from the AV's 3D object detector. We experimentally validate that, based on Intersection-over-Union (IoU) and a depth discrepancy measure, the inconsistencies between the two sets of predictions strongly correlate with the safety-related error of the 3D object detector against ground truths. This correlation allows us to construct a fuzzy inference system and map the inconsistency measures to an existing collision risk indicator. In particular, we apply various knowledge- and data-driven techniques and find that using particle swarm optimization to learn general fuzzy rules gives the best mapping result. Lastly, we validate our monitor's capability to produce relevant risk estimates on the large-scale nuScenes dataset and show that it can safeguard an AV in closed-loop simulations.
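The mapping from inconsistency measures to a risk level can be illustrated with a small fuzzy inference sketch. This is not the paper's system: the membership breakpoints, the four rules, and the crisp rule outputs below are hypothetical hand-picked values (the abstract reports that the best rules were learned with particle swarm optimization), and the zero-order Sugeno-style weighted average is an assumed simplification.

```python
def ramp_down(x, lo, hi):
    """Membership that is 1 at or below lo, 0 at or above hi, linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def estimate_risk(iou, depth_gap):
    """Map two inconsistency measures to a risk score in [0, 1].

    iou:       IoU between the two prediction sets for one object (0..1).
    depth_gap: absolute depth discrepancy in metres (hypothetical unit).
    All breakpoints and rule outputs are illustrative assumptions.
    """
    # Fuzzify the inputs into complementary "low/high" and "small/large" sets.
    iou_low = ramp_down(iou, 0.3, 0.7)
    iou_high = 1.0 - iou_low
    gap_small = ramp_down(depth_gap, 1.0, 5.0)
    gap_large = 1.0 - gap_small

    # Each rule: (firing strength via min as fuzzy AND, crisp risk output).
    rules = [
        (min(iou_high, gap_small), 0.0),  # predictions agree: low risk
        (min(iou_high, gap_large), 0.6),  # boxes overlap, depths disagree
        (min(iou_low, gap_small), 0.5),   # depths agree, boxes disagree
        (min(iou_low, gap_large), 1.0),   # predictions disagree: high risk
    ]

    # Defuzzify with a strength-weighted average of the rule outputs.
    total = sum(strength for strength, _ in rules)
    return sum(strength * risk for strength, risk in rules) / total


if __name__ == "__main__":
    print(estimate_risk(0.9, 0.5))  # consistent predictions
    print(estimate_risk(0.5, 3.0))  # partially inconsistent predictions
    print(estimate_risk(0.1, 8.0))  # strongly inconsistent predictions
```

Because the two memberships of each input sum to one, at least one rule always fires with strength 0.5 or more, so the weighted average is well defined for any input.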

EC-IoU: Orienting Safety for Object Detectors via Ego-Centric Intersection-over-Union

Mar 20, 2024

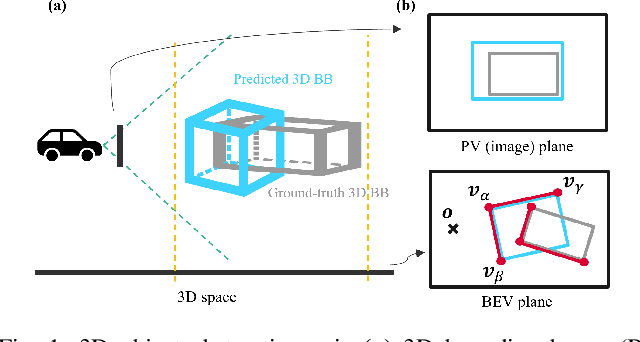

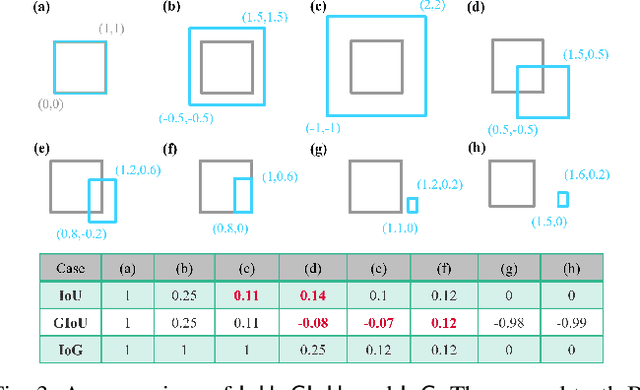

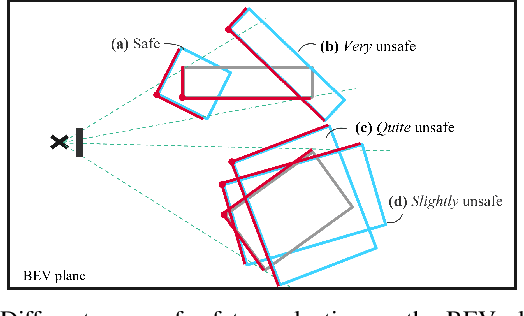

Abstract: This paper presents safety-oriented object detection via a novel Ego-Centric Intersection-over-Union (EC-IoU) measure, addressing practical concerns when applying state-of-the-art learning-based perception models in safety-critical domains such as autonomous driving. Concretely, we propose a weighting mechanism to refine the widely used IoU measure, allowing it to assign a higher score to a prediction that covers closer points of a ground-truth object from the ego agent's perspective. The proposed EC-IoU measure can be used in typical evaluation processes to select object detectors with higher safety-related performance for downstream tasks. It can also be integrated into common loss functions for model fine-tuning. While the measure is geared towards safety, our experiment with the KITTI dataset demonstrates that a model trained on EC-IoU can also outperform a variant trained on IoU in terms of mean Average Precision.
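The idea of weighting IoU towards points nearer the ego agent can be sketched with a simple point-sampling approximation. This is not the EC-IoU definition from the paper, whose exact weighting function is not given in the abstract: the inverse-distance weight, the `power` parameter, the grid sampling, and the axis-aligned bird's-eye-view boxes `(x_min, y_min, x_max, y_max)` are all assumptions made for illustration.

```python
import math


def inside(box, x, y):
    """True if point (x, y) lies in the axis-aligned box (x1, y1, x2, y2)."""
    return box[0] <= x <= box[2] and box[1] <= y <= box[3]


def weighted_iou(pred, gt, ego=(0.0, 0.0), power=1.0, step=0.05):
    """Distance-weighted IoU approximated on a grid of cell midpoints.

    Each sampled point contributes a weight of 1 / distance**power, so
    points closer to the ego position count more. With power=0 every
    weight is 1 and the result approximates the plain IoU.
    """
    x_min, y_min = min(pred[0], gt[0]), min(pred[1], gt[1])
    x_max, y_max = max(pred[2], gt[2]), max(pred[3], gt[3])
    nx = int(round((x_max - x_min) / step))
    ny = int(round((y_max - y_min) / step))

    inter = union = 0.0
    for i in range(nx):
        x = x_min + (i + 0.5) * step
        for j in range(ny):
            y = y_min + (j + 0.5) * step
            in_pred, in_gt = inside(pred, x, y), inside(gt, x, y)
            if not (in_pred or in_gt):
                continue
            dist = math.hypot(x - ego[0], y - ego[1])
            weight = 1.0 / (dist + 1e-6) ** power
            union += weight
            if in_pred and in_gt:
                inter += weight
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    gt = (-1.0, 4.0, 1.0, 6.0)         # object ahead of the ego at the origin
    pred_near = (-1.0, 4.0, 1.0, 5.0)  # covers the half closer to the ego
    pred_far = (-1.0, 5.0, 1.0, 6.0)   # covers the half farther away
    print(weighted_iou(pred_near, gt), weighted_iou(pred_far, gt))
```

In this example both predictions have the same plain IoU with the ground truth, but the weighted score prefers the one covering the nearer half, which is the behaviour the abstract describes.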

Safety Metrics and Losses for Object Detection in Autonomous Driving

Sep 21, 2022

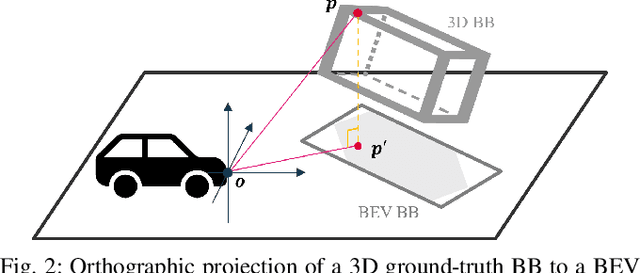

Abstract: State-of-the-art object detectors have been shown to be effective in many applications. Usually, their performance is evaluated based on accuracy metrics such as mean Average Precision. In this paper, we consider a safety property of 3D object detectors in the context of Autonomous Driving (AD). In particular, we propose an essential safety requirement for object detectors in AD and formulate it into a specification. During the formulation, we find that abstracting 3D objects with projected 2D bounding boxes on the image and bird's-eye-view planes allows for a necessary and sufficient condition for the proposed safety requirement. We then leverage the analysis and derive qualitative and quantitative safety metrics based on the Intersection-over-Ground-Truth measure and a distance ratio between predictions and ground truths. Finally, for continual improvement, we formulate safety losses that can be used to optimize object detectors towards higher safety scores. Our experiments with public models from the MMDetection3D library and the nuScenes dataset demonstrate the validity of our consideration and proposals.
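A short sketch can show how an Intersection-over-Ground-Truth (IoGT) measure differs from IoU. The IoGT function below follows the usual reading of the name (intersection area divided by ground-truth area). The `distance_ratio` function is a hypothetical stand-in, since the abstract does not define the paper's ratio: it compares the closest ego distance of the predicted box with that of the ground-truth box, with boxes assumed to be axis-aligned `(x_min, y_min, x_max, y_max)` in a bird's-eye-view frame centred on the ego.

```python
import math


def area(box):
    """Area of an axis-aligned box; zero if the box is degenerate."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def intersection(a, b):
    """Area of the overlap between two axis-aligned boxes."""
    return area((max(a[0], b[0]), max(a[1], b[1]),
                 min(a[2], b[2]), min(a[3], b[3])))


def iou(pred, gt):
    """Standard Intersection-over-Union."""
    inter = intersection(pred, gt)
    union = area(pred) + area(gt) - inter
    return inter / union if union > 0 else 0.0


def iogt(pred, gt):
    """Intersection-over-Ground-Truth: fraction of the ground truth covered."""
    gt_area = area(gt)
    return intersection(pred, gt) / gt_area if gt_area > 0 else 0.0


def closest_distance(box, ego=(0.0, 0.0)):
    """Distance from the ego position to the nearest point of the box."""
    dx = max(box[0] - ego[0], 0.0, ego[0] - box[2])
    dy = max(box[1] - ego[1], 0.0, ego[1] - box[3])
    return math.hypot(dx, dy)


def distance_ratio(pred, gt, ego=(0.0, 0.0)):
    """Hypothetical ratio: values at or below 1 mean the prediction is
    not farther from the ego than the ground truth (assumed convention)."""
    gt_dist = closest_distance(gt, ego)
    return closest_distance(pred, ego) / gt_dist if gt_dist > 0 else 1.0


if __name__ == "__main__":
    gt = (1.0, 1.0, 3.0, 3.0)
    pred = (0.0, 0.0, 4.0, 4.0)  # oversized box that fully covers gt
    print(iou(pred, gt), iogt(pred, gt))
    print(distance_ratio((2.0, 4.0, 4.0, 8.0), (2.0, 5.0, 4.0, 7.0)))
```

An oversized prediction that fully contains the ground truth is penalized by IoU but receives the maximum IoGT, which illustrates why a coverage-based measure can suit a safety requirement better than an accuracy-based one.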
