Rongjie Yan

Validating Generalist Robots with Situation Calculus and STL Falsification

Jan 06, 2026

Abstract:Generalist robots are becoming a reality, capable of interpreting natural language instructions and executing diverse operations. However, their validation remains challenging because each task induces its own operational context and correctness specification, exceeding the assumptions of traditional validation methods. We propose a two-layer validation framework that combines abstract reasoning with concrete system falsification. At the abstract layer, situation calculus models the world and derives weakest preconditions, enabling constraint-aware combinatorial testing to systematically generate diverse, semantically valid world-task configurations with controllable coverage strength. At the concrete layer, these configurations are instantiated for simulation-based falsification with STL monitoring. Experiments on tabletop manipulation tasks show that our framework effectively uncovers failure cases in the NVIDIA GR00T controller, demonstrating its promise for validating general-purpose robot autonomy.
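The STL monitoring step at the concrete layer can be pictured with a minimal sketch of quantitative (robustness) semantics over discrete-time traces. Everything below is an assumption for illustration and is not taken from the paper: the signal names `height` and `dist`, the thresholds, the two hand-written traces, and the specification "always keep the gripper above a minimum height, and eventually bring the object close to the target". A negative robustness value means the trace violates the specification, which is what a falsifier searches for.

```python
def rob_always_ge(signal, threshold):
    """Robustness of G (x >= threshold): the worst-case margin over the trace."""
    return min(x - threshold for x in signal)


def rob_eventually_le(signal, threshold):
    """Robustness of F (x <= threshold): the best-case margin over the trace."""
    return max(threshold - x for x in signal)


def robustness(trace, min_height=0.02, max_dist=0.05):
    """Robustness of the conjunction: the minimum of the two sub-formulas."""
    return min(
        rob_always_ge(trace["height"], min_height),
        rob_eventually_le(trace["dist"], max_dist),
    )


# Hypothetical simulation traces for two world-task configurations.
traces = {
    "cfg_a": {"height": [0.10, 0.08, 0.05, 0.03], "dist": [0.30, 0.15, 0.06, 0.02]},
    "cfg_b": {"height": [0.10, 0.06, 0.03, 0.03], "dist": [0.30, 0.20, 0.12, 0.08]},
}

# A falsifier looks for the configuration with the lowest robustness.
worst_name = min(traces, key=lambda name: robustness(traces[name]))
is_counterexample = robustness(traces[worst_name]) < 0
```

In a real setup the traces would come from a simulator rather than a literal dictionary, and the search over configurations would be guided by the robustness value instead of enumerating a fixed list.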

Runtime Monitoring DNN-Based Perception

Oct 06, 2023

Abstract:Deep neural networks (DNNs) are instrumental in realizing complex perception systems. As many of these applications are safety-critical by design, engineering rigor is required to ensure that the functional insufficiency of the DNN-based perception is not the source of harm. In addition to conventional static verification and testing techniques employed during the design phase, there is a need for runtime verification techniques that can detect critical events, diagnose issues, and even enforce requirements. This tutorial aims to provide readers with a glimpse of techniques proposed in the literature. We start with classical methods proposed in the machine learning community, then highlight a few techniques proposed by the formal methods community. While we can certainly observe similarities in the design of monitors, how the decision boundaries are created varies between the two communities. We conclude by highlighting the need to rigorously design monitors, where data availability outside the operational domain plays an important role.

ComOpT: Combination and Optimization for Testing Autonomous Driving Systems

Oct 02, 2021

Abstract:ComOpT is an open-source research tool for coverage-driven testing of autonomous driving systems, focusing on planning and control. Starting with (i) a meta-model characterizing discrete conditions to be considered and (ii) constraints specifying the impossibility of certain combinations, ComOpT first generates constraint-feasible abstract scenarios while maximally increasing the coverage of k-way combinatorial testing. Each abstract scenario can be viewed as a conceptual equivalence class, which is then instantiated into multiple concrete scenarios by (1) randomly picking one local map that fulfills the specified geographical condition, and (2) assigning all actors accordingly with parameters within the range. Finally, ComOpT evaluates each concrete scenario against a set of KPIs and performs local scenario variation via spawning a new agent that might lead to a collision at designated points. We use ComOpT to test the Apollo 6 autonomous driving software stack. ComOpT can generate highly diversified scenarios with limited test budgets while uncovering problematic situations such as inabilities to make simple right turns, uncomfortable accelerations, and dangerous driving patterns. ComOpT participated in the 2021 IEEE AI Autonomous Vehicle Testing Challenge and won first place among more than 110 contending teams.
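The idea of generating constraint-feasible abstract scenarios while increasing k-way coverage can be sketched for k = 2 with a simple greedy loop. This is an illustrative sketch only, not ComOpT's actual algorithm or API: the meta-model in `params`, the constraint in `is_feasible`, and the function names are all hypothetical, and the sketch enumerates the full Cartesian product, which is only practical for small meta-models.

```python
from itertools import combinations, product


def pairs_of(scenario, names):
    """All 2-way (parameter, value) combinations covered by one scenario."""
    items = [(n, scenario[n]) for n in names]
    return set(combinations(items, 2))


def generate_pairwise(params, is_feasible):
    """Greedily pick feasible scenarios until every feasible pair is covered."""
    names = sorted(params)
    candidates = [dict(zip(names, vals)) for vals in product(*(params[n] for n in names))]
    feasible = [s for s in candidates if is_feasible(s)]

    # Only pairs that occur in some feasible scenario need to be covered.
    uncovered = set()
    for s in feasible:
        uncovered |= pairs_of(s, names)

    suite = []
    while uncovered:
        # Choose the scenario that covers the most still-uncovered pairs.
        best = max(feasible, key=lambda s: len(pairs_of(s, names) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best, names)
    return suite


# Hypothetical meta-model of discrete conditions.
params = {
    "road": ["straight", "intersection"],
    "maneuver": ["keep_lane", "turn_right"],
    "weather": ["clear", "rain"],
}


def is_feasible(s):
    """Hypothetical constraint: no right turn on a straight road segment."""
    return not (s["road"] == "straight" and s["maneuver"] == "turn_right")


suite = generate_pairwise(params, is_feasible)
```

Each element of `suite` plays the role of an abstract scenario; instantiating it into concrete scenarios (map choice, actor parameters) is a separate step not shown here.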

Monitoring Object Detection Abnormalities via Data-Label and Post-Algorithm Abstractions

Mar 29, 2021

Abstract:While object detection modules are essential functionalities for any autonomous vehicle, the performance of such modules that are implemented using deep neural networks can be, in many cases, unreliable. In this paper, we develop abstraction-based monitoring as a logical framework for filtering potentially erroneous detection results. Concretely, we consider two types of abstraction, namely data-label abstraction and post-algorithm abstraction. Operating on the training dataset, the construction of data-label abstraction iterates over each input, aggregates region-wise information over its associated labels, and stores the vector under a finite history length. Post-algorithm abstraction builds an abstract transformer for the tracking algorithm. Elements associated together by the abstract transformer can be checked for consistency against their original values. We have implemented the overall framework as a research prototype and validated it using publicly available object detection datasets.

Testing Autonomous Systems with Believed Equivalence Refinement

Mar 08, 2021

Abstract:Continuous engineering of autonomous driving functions commonly requires deploying vehicles in road testing to obtain inputs that cause problematic decisions. Although the discovery leads to producing an improved system, it also challenges the foundation of testing using equivalence classes and the associated relative test coverage criterion. In this paper, we propose believed equivalence, where the establishment of an equivalence class is initially based on expert belief and is subject to a set of available test cases having a consistent valuation. Upon a newly encountered test case that breaks the consistency, one may need to refine the established categorization in order to split the originally believed equivalence into two. Finally, we focus on modules implemented using deep neural networks where every category partitions an input over the real domain. We establish new equivalence classes by guiding the new test cases following directions suggested by their k-nearest neighbors, complemented by local robustness testing. The concept is demonstrated on a lane-keeping assist module, indicating the potential of our proposed approach.

Continuous Safety Verification of Neural Networks

Oct 12, 2020

Abstract:Deploying deep neural networks (DNNs) as core functions in autonomous driving creates unique verification and validation challenges. In particular, the continuous engineering paradigm of gradually perfecting a DNN-based perception can make the previously established result of safety verification no longer valid. This can occur either due to newly encountered examples (i.e., input domain enlargement) inside the Operational Design Domain or due to the subsequent parameter fine-tuning activities of a DNN. This paper considers approaches to transfer results established in the previous DNN safety verification problem to the modified problem setting. By considering the reuse of state abstractions, network abstractions, and Lipschitz constants, we develop several sufficient conditions that only require formally analyzing a small part of the DNN in the new problem. The overall concept is evaluated on a 1/10-scale vehicle equipped with a DNN controller that determines the visual waypoint from the perceived image.
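As a rough illustration of how a Lipschitz constant can let a previous verification result carry over to an enlarged input domain, consider the following generic sufficient condition. It is a textbook-style sketch under stated assumptions, not necessarily one of the conditions developed in the paper. The symbols are all assumptions introduced here: $f$ is a scalar network output, $c$ is the unsafe threshold, $m > 0$ is the safety margin established on the previously verified domain $D$, $L$ is a Lipschitz constant of $f$, and $\delta$ bounds how far new inputs lie from $D$.

```latex
\begin{align*}
&\text{Assume: } f(x) \le c - m \quad \forall x \in D,
  \qquad |f(x) - f(x')| \le L\,\|x - x'\| \quad \forall x, x'. \\
&\text{Then for any } x' \text{ with } \|x' - x\| \le \delta \text{ for some } x \in D: \\
&\qquad f(x') \;\le\; f(x) + L\,\delta \;\le\; c - m + L\,\delta \;<\; c
  \quad \text{whenever } L\,\delta < m .
\end{align*}
```

Under these assumptions, checking the inequality $L\,\delta < m$ is enough to extend the earlier safety result to the enlarged domain without re-analyzing the whole network.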

Architecting Dependable Learning-enabled Autonomous Systems: A Survey

Feb 27, 2019

Abstract:We provide a summary of architectural approaches that can be used to construct dependable learning-enabled autonomous systems, with a focus on automated driving. We consider three technology pillars for architecting dependable autonomy, namely diverse redundancy, information fusion, and runtime monitoring. For learning-enabled components, we additionally summarize recent architectural approaches to increase the dependability beyond standard convolutional neural networks. We conclude the study with a list of promising research directions addressing the challenges of existing approaches.

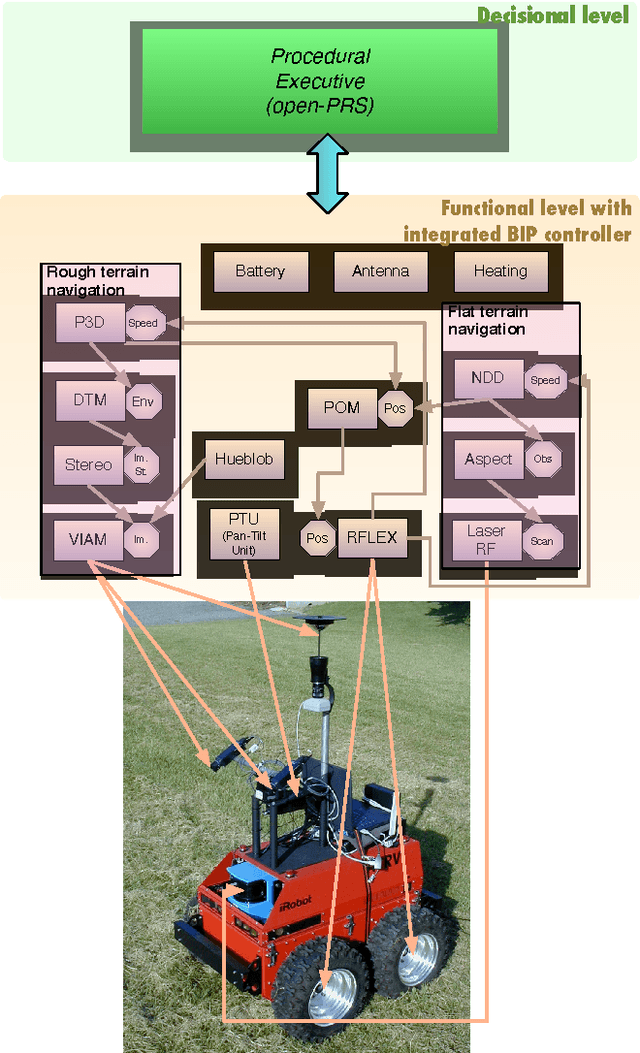

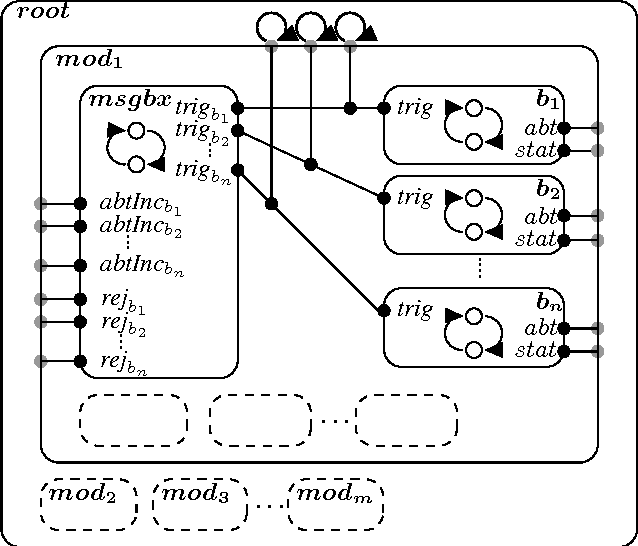

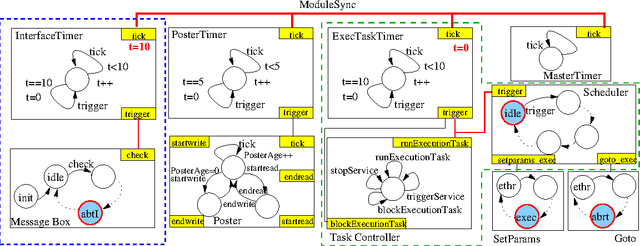

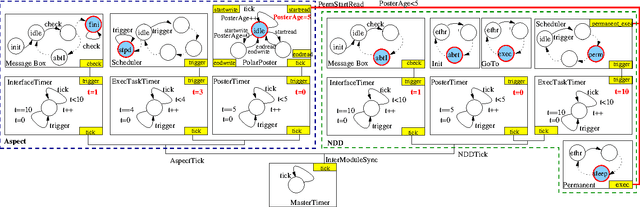

A Verifiable and Correct-by-Construction Controller for Robot Functional Levels

Sep 02, 2013

Abstract:Autonomous robots are complex systems that require the interaction and cooperation between numerous heterogeneous software components. In recent times, robots are being increasingly used for complex and safety-critical tasks, such as exploring Mars and assisting/replacing humans. Consequently, robots are becoming critical systems that must meet safety properties, in particular, logical, temporal and real-time constraints. To this end, we present an evolution of the LAAS architecture for autonomous systems, in particular its GenoM tool. This evolution relies on the BIP component-based design framework, which has been successfully used in other domains such as embedded systems. We show how we integrate BIP into our existing methodology for developing the lowest (functional) level of robots. Particularly, we discuss the componentization of the functional level, the synthesis of an execution controller for it, and how we verify whether the resulting functional level conforms to properties such as deadlock-freedom. We also show through experimentation that the verification is feasible and usable for complex, real world robotic systems, and that the BIP-based functional levels resulting from our new methodology are, despite an overhead during execution, still practical on real world robotic platforms. Our approach has been fully implemented in the LAAS architecture, and the implementation has been used in several experiments on a real robot.
