Erwin Jose Lopez Pulgarin

Preference-Driven Active 3D Scene Representation for Robotic Inspection in Nuclear Decommissioning

Apr 02, 2025

Abstract: Active 3D scene representation is pivotal in modern robotics applications, including remote inspection, manipulation, and telepresence. Traditional methods primarily optimize geometric fidelity or rendering accuracy, but often overlook operator-specific objectives, such as safety-critical coverage or task-driven viewpoints. This limitation leads to suboptimal viewpoint selection, particularly in constrained environments such as nuclear decommissioning. To bridge this gap, we introduce a novel framework that integrates expert operator preferences into the active 3D scene representation pipeline. Specifically, we employ Reinforcement Learning from Human Feedback (RLHF) to guide robotic path planning, reshaping the reward function based on expert input. To capture operator-specific priorities, we conduct interactive choice experiments that evaluate user preferences in 3D scene representation. We validate our framework using a UR3e robotic arm for reactor tile inspection in a nuclear decommissioning scenario. Compared to baseline methods, our approach enhances scene representation while optimizing trajectory efficiency. The RLHF-based policy consistently outperforms random selection, prioritizing task-critical details. By unifying explicit 3D geometric modeling with implicit human-in-the-loop optimization, this work establishes a foundation for adaptive, safety-critical robotic perception systems, paving the way for enhanced automation in nuclear decommissioning, remote maintenance, and other high-risk environments.
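The abstract does not say how the choice experiments are turned into a reward signal. One common way to do this in preference-based learning is a Bradley-Terry model fitted to pairwise choices, and the sketch below illustrates that idea only; it is not the authors' implementation. The function names, the `weight` parameter, and the comparison data are all hypothetical.

```python
import math

def fit_preference_scores(num_items, comparisons, lr=0.1, epochs=500):
    """Fit Bradley-Terry scores from (winner, loser) index pairs by gradient ascent."""
    scores = [0.0] * num_items
    for _ in range(epochs):
        grads = [0.0] * num_items
        for winner, loser in comparisons:
            # Probability that `winner` is preferred under the current scores.
            p_win = 1.0 / (1.0 + math.exp(scores[loser] - scores[winner]))
            # Gradient of the log-likelihood of this observed choice.
            grads[winner] += 1.0 - p_win
            grads[loser] -= 1.0 - p_win
        scores = [s + lr * g for s, g in zip(scores, grads)]
    return scores

def shaped_reward(base_reward, viewpoint, scores, weight=0.5):
    """Add a preference bonus to a task reward (hypothetical shaping rule)."""
    return base_reward + weight * scores[viewpoint]

# Hypothetical data: three candidate viewpoints, each pair is (chosen, rejected).
comparisons = [(0, 1), (0, 2), (1, 2), (0, 1), (1, 2), (2, 1)]
scores = fit_preference_scores(3, comparisons)
```

With these made-up choices, viewpoint 0 is never rejected and so ends up with the largest score, which in turn gives it the largest shaped reward for the same base reward.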

Drivers' Manoeuvre Modelling and Prediction for Safe HRI

Jun 03, 2021

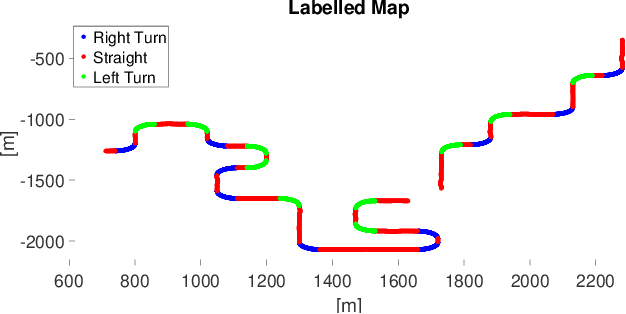

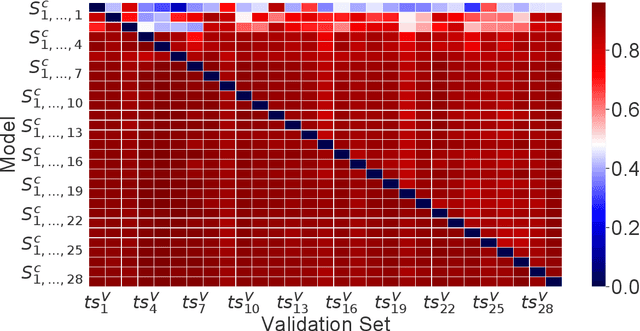

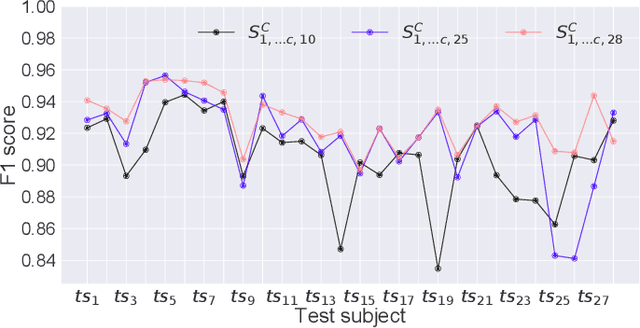

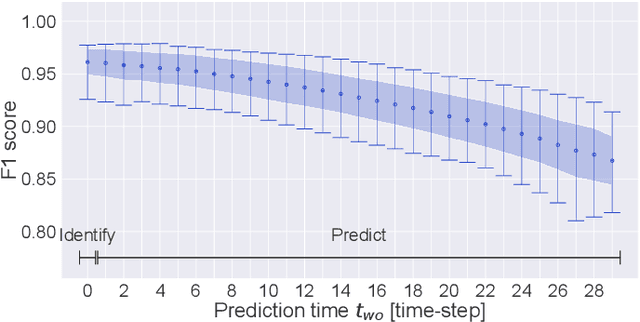

Abstract: As autonomous machines such as robots and vehicles start performing tasks involving human users, ensuring a safe interaction between them becomes an important issue. Translating methods from human-robot interaction (HRI) studies to the interaction between humans and other highly complex machines (e.g. semi-autonomous vehicles) could help advance the use of those machines in scenarios requiring human interaction. One method involves understanding human intentions and decision-making to estimate the human's present and near-future actions whilst interacting with a robot. This idea originates from the psychological concept of Theory of Mind, which has been broadly explored for robotics and recently for autonomous and semi-autonomous vehicles. In this work, we explored how to predict human intentions before an action is performed by combining data from human motion, vehicle state and human inputs (e.g. steering wheel, pedals). A data-driven approach based on Recurrent Neural Network models was used to classify the current driving manoeuvre and to predict the future manoeuvre to be performed. A state-transition model was used with a fixed set of manoeuvres to label data recorded during the trials for real-time applications. Models were trained and tested using drivers with different seat preferences, driving expertise and arm lengths. Precision and recall metrics over 95% for manoeuvre identification and 86% for manoeuvre prediction were achieved, with prediction time-windows of up to 1 second for both known and unknown test subjects. Compared to our previous results, performance improved and manoeuvre prediction was possible for unknown test subjects without knowing the current manoeuvre.
