Anxing Xiao

Robot Operation of Home Appliances by Reading User Manuals

May 26, 2025

Abstract:Operating home appliances, among the most common tools in every household, is a critical capability for assistive home robots. This paper presents ApBot, a robot system that operates novel household appliances by "reading" their user manuals. ApBot faces multiple challenges: (i) infer goal-conditioned partial policies from their unstructured, textual descriptions in a user manual document, (ii) ground the policies to the appliance in the physical world, and (iii) execute the policies reliably over potentially many steps, despite compounding errors. To tackle these challenges, ApBot constructs a structured, symbolic model of an appliance from its manual, with the help of a large vision-language model (VLM). It grounds the symbolic actions visually to control panel elements. Finally, ApBot closes the loop by updating the model based on visual feedback. Our experiments show that across a wide range of simulated and real-world appliances, ApBot achieves consistent and statistically significant improvements in task success rate, compared with state-of-the-art large VLMs used directly as control policies. These results suggest that a structured internal representation plays an important role in robust robot operation of home appliances, especially complex ones.

CHD: Coupled Hierarchical Diffusion for Long-Horizon Tasks

May 13, 2025

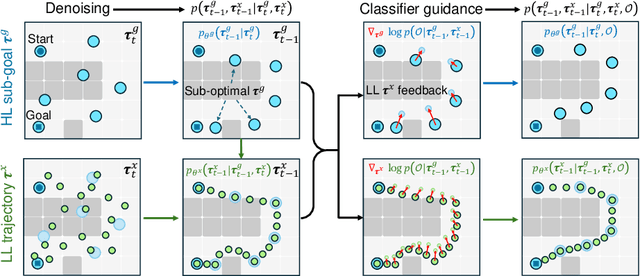

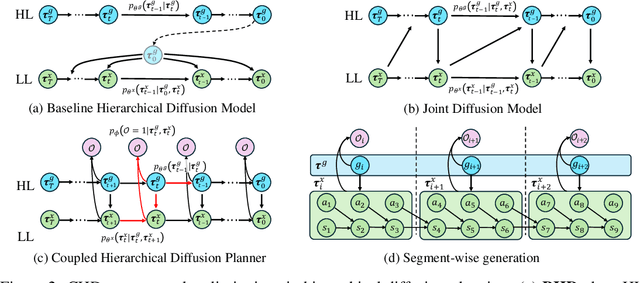

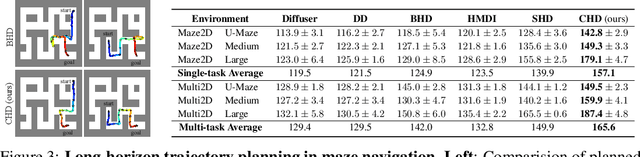

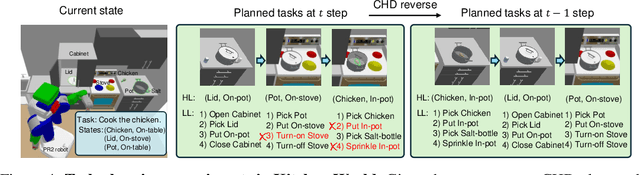

Abstract:Diffusion-based planners have shown strong performance in short-horizon tasks but often fail in complex, long-horizon settings. We trace the failure to loose coupling between high-level (HL) sub-goal selection and low-level (LL) trajectory generation, which leads to incoherent plans and degraded performance. We propose Coupled Hierarchical Diffusion (CHD), a framework that models HL sub-goals and LL trajectories jointly within a unified diffusion process. A shared classifier passes LL feedback upstream so that sub-goals self-correct while sampling proceeds. This tight HL-LL coupling improves trajectory coherence and enables scalable long-horizon diffusion planning. Experiments across maze navigation, tabletop manipulation, and household environments show that CHD consistently outperforms both flat and hierarchical diffusion baselines. Our website is: https://sites.google.com/view/chd2025/home

FUNCTO: Function-Centric One-Shot Imitation Learning for Tool Manipulation

Feb 17, 2025

Abstract:Learning tool use from a single human demonstration video offers a highly intuitive and efficient approach to robot teaching. While humans can effortlessly generalize a demonstrated tool manipulation skill to diverse tools that support the same function (e.g., pouring with a mug versus a teapot), current one-shot imitation learning (OSIL) methods struggle to achieve this. A key challenge lies in establishing functional correspondences between demonstration and test tools, considering significant geometric variations among tools with the same function (i.e., intra-function variations). To address this challenge, we propose FUNCTO (Function-Centric OSIL for Tool Manipulation), an OSIL method that establishes function-centric correspondences with a 3D functional keypoint representation, enabling robots to generalize tool manipulation skills from a single human demonstration video to novel tools with the same function despite significant intra-function variations. With this formulation, we factorize FUNCTO into three stages: (1) functional keypoint extraction, (2) function-centric correspondence establishment, and (3) functional keypoint-based action planning. We evaluate FUNCTO against existing modular OSIL methods and end-to-end behavioral cloning methods through real-robot experiments on diverse tool manipulation tasks. The results demonstrate the superiority of FUNCTO when generalizing to novel tools with intra-function geometric variations. More details are available at https://sites.google.com/view/functo.

Robi Butler: Remote Multimodal Interactions with Household Robot Assistant

Sep 30, 2024

Abstract:In this paper, we introduce Robi Butler, a novel household robotic system that enables multimodal interactions with remote users. Building on the advanced communication interfaces, Robi Butler allows users to monitor the robot's status, send text or voice instructions, and select target objects by hand pointing. At the core of our system is a high-level behavior module, powered by Large Language Models (LLMs), that interprets multimodal instructions to generate action plans. These plans are composed of a set of open vocabulary primitives supported by Vision Language Models (VLMs) that handle both text and pointing queries. The integration of the above components allows Robi Butler to ground remote multimodal instructions in the real-world home environment in a zero-shot manner. We demonstrate the effectiveness and efficiency of this system using a variety of daily household tasks that involve remote users giving multimodal instructions. Additionally, we conducted a user study to analyze how multimodal interactions affect efficiency and user experience during remote human-robot interaction and discuss the potential improvements.

GSON: A Group-based Social Navigation Framework with Large Multimodal Model

Sep 26, 2024

Abstract:As the number of service robots and autonomous vehicles in human-centered environments grows, their requirements go beyond simply navigating to a destination. They must also take into account dynamic social contexts and ensure respect and comfort for others in shared spaces, which poses significant challenges for perception and planning. In this paper, we present a group-based social navigation framework, GSON, to enable mobile robots to perceive and exploit the social groups in their surroundings by leveraging the visual reasoning capability of the Large Multimodal Model (LMM). For perception, we apply visual prompting techniques to extract the social relationships among pedestrians in a zero-shot manner and combine the result with a robust pedestrian detection and tracking pipeline to alleviate the problem of low inference speed of the LMM. Given the perception result, the planning system is designed to avoid disrupting the current social structure. We adopt a social structure-based mid-level planner as a bridge between global path planning and local motion planning to preserve the global context and reactive response. The proposed method is validated on real-world mobile robot navigation tasks involving complex social structure understanding and reasoning. Experimental results demonstrate the effectiveness of the system in these scenarios compared with several baselines.

Octopi: Object Property Reasoning with Large Tactile-Language Models

May 05, 2024

Abstract:Physical reasoning is important for effective robot manipulation. Recent work has investigated both vision and language modalities for physical reasoning; vision can reveal information about objects in the environment and language serves as an abstraction and communication medium for additional context. Although these works have demonstrated success on a variety of physical reasoning tasks, they are limited to physical properties that can be inferred from visual or language inputs. In this work, we investigate combining tactile perception with language, which enables embodied systems to obtain physical properties through interaction and apply common-sense reasoning. We contribute a new dataset PhysiCleAR, which comprises both physical/property reasoning tasks and annotated tactile videos obtained using a GelSight tactile sensor. We then introduce Octopi, a system that leverages both tactile representation learning and large vision-language models to predict and reason about tactile inputs with minimal language fine-tuning. Our evaluations on PhysiCleAR show that Octopi is able to effectively use intermediate physical property predictions to improve physical reasoning in both trained tasks and for zero-shot reasoning. PhysiCleAR and Octopi are available on https://github.com/clear-nus/octopi.

LLM-State: Expandable State Representation for Long-horizon Task Planning in the Open World

Nov 29, 2023

Abstract:This work addresses the problem of long-horizon task planning with the Large Language Model (LLM) in an open-world household environment. Existing works fail to explicitly track key objects and attributes, leading to erroneous decisions in long-horizon tasks, or rely on highly engineered state features and feedback, which is not generalizable. We propose a novel, expandable state representation that provides continuous expansion and updating of object attributes from the LLM's inherent capabilities for context understanding and historical action reasoning. Our proposed representation maintains a comprehensive record of an object's attributes and changes, enabling robust retrospective summary of the sequence of actions leading to the current state. This allows enhanced context understanding for decision-making in task planning. We validate our model through experiments across simulated and real-world task planning scenarios, demonstrating significant improvements over baseline methods in a variety of tasks requiring long-horizon state tracking and reasoning.

GVD-Exploration: An Efficient Autonomous Robot Exploration Framework Based on Fast Generalized Voronoi Diagram Extraction

Sep 12, 2023

Abstract:Rapidly-exploring Random Trees (RRTs) are a popular technique for autonomous exploration of mobile robots. However, the random sampling used by RRTs can result in inefficient and inaccurate frontier extraction, which affects the exploration performance. To address the issues of slow path planning and high path cost, we propose a framework that uses a generalized Voronoi diagram (GVD) based multi-choice strategy for robot exploration. Our framework consists of three components: a novel mapping model that uses an end-to-end neural network to construct GVDs of the environments in real time; a GVD-based heuristic scheme that accelerates frontier extraction and reduces frontier redundancy; and a multi-choice frontier assignment scheme that considers different types of frontiers and enables the robot to make rational decisions during the exploration process. We evaluate our method in simulation and real-world experiments and show that it outperforms RRT-based exploration methods in terms of efficiency and robustness.

Collaborative Trolley Transportation System with Autonomous Nonholonomic Robots

Apr 03, 2023

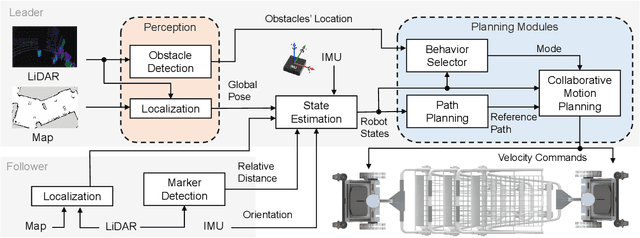

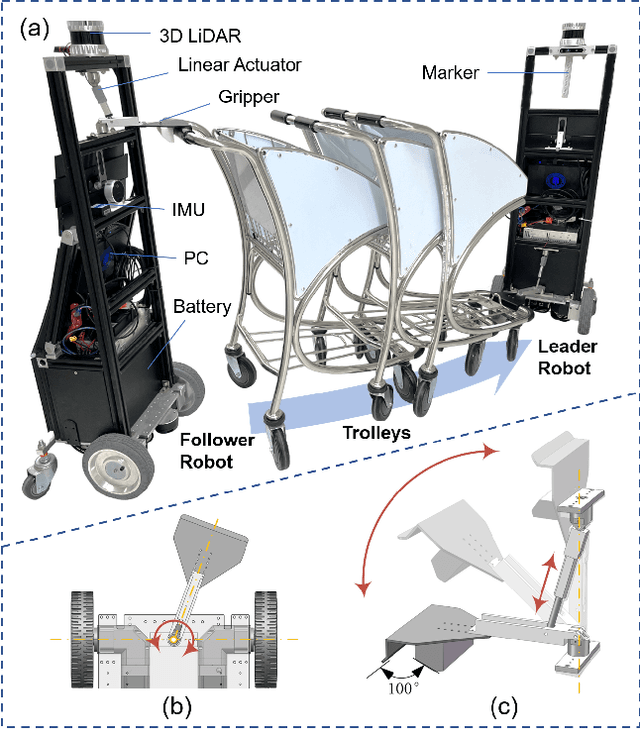

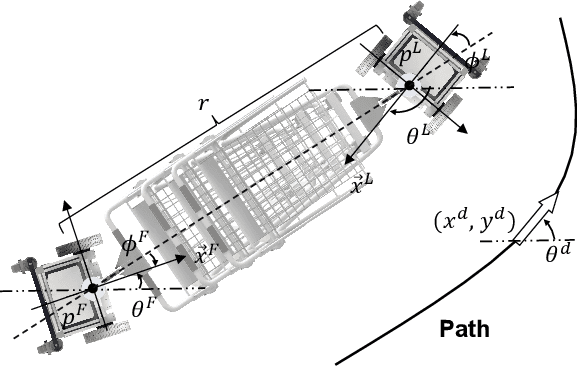

Abstract:Cooperative object transportation using multiple robots has been intensively studied in the control and robotics literature, but most approaches are either only applicable to omnidirectional robots or lack a complete navigation and decision-making framework that operates in real time. This paper presents an autonomous nonholonomic multi-robot system and an end-to-end hierarchical autonomy framework for collaborative luggage trolley transportation. This framework finds kinematically feasible paths, computes online motion plans, and provides feedback that enables the multi-robot system to handle long lines of luggage trolleys and navigate obstacles and pedestrians while dealing with multiple inherently complex and coupled constraints. We demonstrate the designed collaborative trolley transportation system through practical transportation tasks, and the experimental results demonstrate its effectiveness and reliability in complex and dynamic environments.

PUTN: A Plane-fitting based Uneven Terrain Navigation Framework

Mar 09, 2022

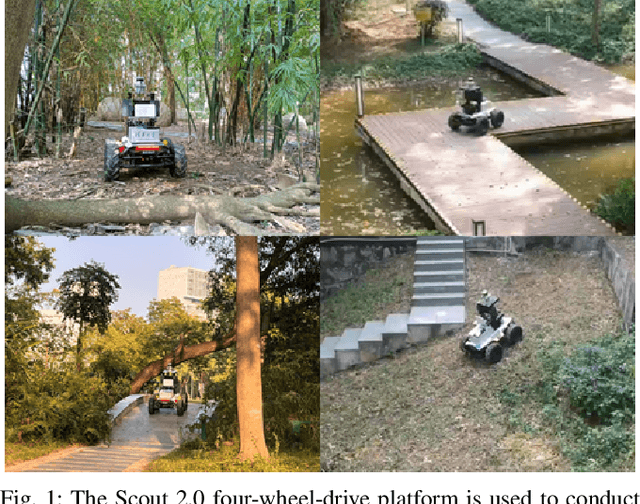

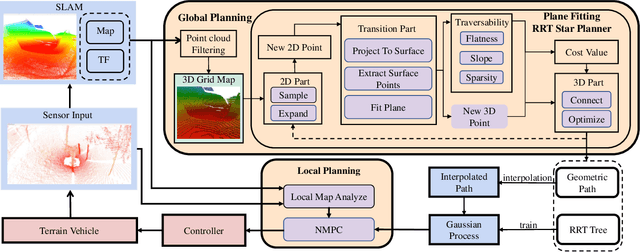

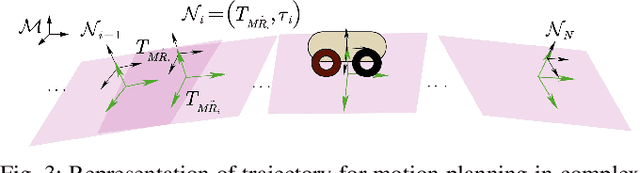

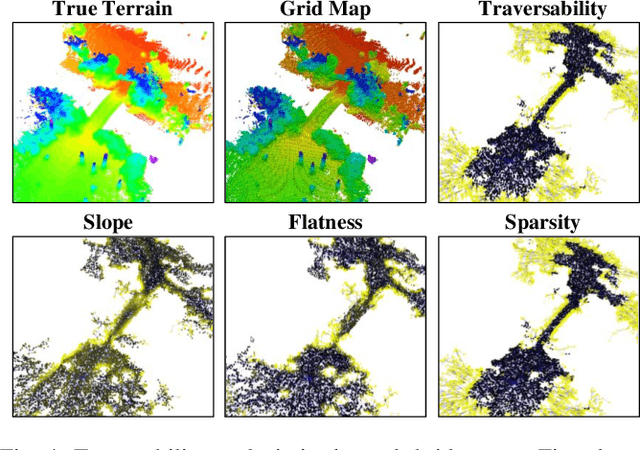

Abstract:Autonomous navigation of ground robots has been widely used in indoor structured 2D environments, but there are still many challenges in outdoor 3D unstructured environments, especially in rough, uneven terrains. This paper proposes a plane-fitting based uneven terrain navigation framework (PUTN) to solve this problem. The implementation of PUTN is divided into three steps. First, based on Rapidly-exploring Random Trees (RRT), an improved sampling-based algorithm called Plane Fitting RRT* (PF-RRT*) is proposed to obtain a sparse trajectory. Each sampling point corresponds to a custom traversability index and a fitted plane on the point cloud. These planes are connected in series to form a traversable strip. Second, Gaussian Process Regression is used to generate the traversability of the dense trajectory interpolated from the sparse trajectory, with the sampling tree used as the training set. Finally, local planning is performed using nonlinear model predictive control (NMPC). By adding the traversability index and uncertainty to the cost function, and adding obstacles generated by the real-time point cloud to the constraint function, a safe motion planning algorithm with smooth speed and strong robustness is obtained. Experiments in real scenarios are conducted to verify the effectiveness of the method.
