Zonglin Li

Near-Light Color Photometric Stereo for mono-Chromaticity non-lambertian surface

Jan 19, 2026

Abstract:Color photometric stereo enables single-shot surface reconstruction, extending conventional photometric stereo that requires multiple images of a static scene under varying illumination to dynamic scenarios. However, most existing approaches assume ideal distant lighting and Lambertian reflectance, leaving more practical near-light conditions and non-Lambertian surfaces underexplored. To overcome this limitation, we propose a framework that leverages neural implicit representations for depth and BRDF modeling under the assumption of mono-chromaticity (uniform chromaticity and homogeneous material), which alleviates the inherent ill-posedness of color photometric stereo and allows for detailed surface recovery from just one image. Furthermore, we design a compact optical tactile sensor to validate our approach. Experiments on both synthetic and real-world datasets demonstrate that our method achieves accurate and robust surface reconstruction.

A Survey of Reinforcement Learning for Large Reasoning Models

Sep 10, 2025

Abstract:In this paper, we survey recent advances in Reinforcement Learning (RL) for reasoning with Large Language Models (LLMs). RL has achieved remarkable success in advancing the frontier of LLM capabilities, particularly in addressing complex logical tasks such as mathematics and coding. As a result, RL has emerged as a foundational methodology for transforming LLMs into Large Reasoning Models (LRMs). With the rapid progress of the field, further scaling of RL for LRMs now faces foundational challenges not only in computational resources but also in algorithm design, training data, and infrastructure. To this end, it is timely to revisit the development of this domain, reassess its trajectory, and explore strategies to enhance the scalability of RL toward Artificial SuperIntelligence (ASI). In particular, we examine research applying RL to LLMs and LRMs for reasoning abilities, especially since the release of DeepSeek-R1, including foundational components, core problems, training resources, and downstream applications, to identify future opportunities and directions for this rapidly evolving area. We hope this review will promote future research on RL for broader reasoning models. Github: https://github.com/TsinghuaC3I/Awesome-RL-for-LRMs

Generalizable and Relightable Gaussian Splatting for Human Novel View Synthesis

May 27, 2025

Abstract:We propose GRGS, a generalizable and relightable 3D Gaussian framework for high-fidelity human novel view synthesis under diverse lighting conditions. Unlike existing methods that rely on per-character optimization or ignore physical constraints, GRGS adopts a feed-forward, fully supervised strategy that projects geometry, material, and illumination cues from multi-view 2D observations into 3D Gaussian representations. Specifically, to reconstruct lighting-invariant geometry, we introduce a Lighting-aware Geometry Refinement (LGR) module trained on synthetically relit data to predict accurate depth and surface normals. Based on the high-quality geometry, a Physically Grounded Neural Rendering (PGNR) module is further proposed to integrate neural prediction with physics-based shading, supporting editable relighting with shadows and indirect illumination. Besides, we design a 2D-to-3D projection training scheme that leverages differentiable supervision from ambient occlusion, direct, and indirect lighting maps, which alleviates the computational cost of explicit ray tracing. Extensive experiments demonstrate that GRGS achieves superior visual quality, geometric consistency, and generalization across characters and lighting conditions.

Shape-Guided Clothing Warping for Virtual Try-On

Apr 21, 2025

Abstract:Image-based virtual try-on aims to seamlessly fit in-shop clothing to a person image while maintaining pose consistency. Existing methods commonly employ the thin plate spline (TPS) transformation or appearance flow to deform in-shop clothing for aligning with the person's body. Despite their promising performance, these methods often lack precise control over fine details, leading to inconsistencies in shape between clothing and the person's body as well as distortions in exposed limb regions. To tackle these challenges, we propose a novel shape-guided clothing warping method for virtual try-on, dubbed SCW-VTON, which incorporates global shape constraints and additional limb textures to enhance the realism and consistency of the warped clothing and try-on results. To integrate global shape constraints for clothing warping, we devise a dual-path clothing warping module comprising a shape path and a flow path. The former path captures the clothing shape aligned with the person's body, while the latter path leverages the mapping between the pre- and post-deformation of the clothing shape to guide the estimation of appearance flow. Furthermore, to alleviate distortions in limb regions of try-on results, we integrate detailed limb guidance by developing a limb reconstruction network based on masked image modeling. Through the utilization of SCW-VTON, we are able to generate try-on results with enhanced clothing shape consistency and precise control over details. Extensive experiments demonstrate the superiority of our approach over state-of-the-art methods both qualitatively and quantitatively. The code is available at https://github.com/xyhanHIT/SCW-VTON.

Progressive Limb-Aware Virtual Try-On

Mar 16, 2025

Abstract:Existing image-based virtual try-on methods directly transfer specific clothing to a human image without utilizing clothing attributes to refine the transferred clothing geometry and textures, which causes incomplete and blurred clothing appearances. In addition, these methods usually mask the limb textures of the input for the clothing-agnostic person representation, which results in inaccurate predictions for human limb regions (i.e., the exposed arm skin), especially when transforming between long-sleeved and short-sleeved garments. To address these problems, we present a progressive virtual try-on framework, named PL-VTON, which performs pixel-level clothing warping based on multiple attributes of clothing and embeds explicit limb-aware features to generate photo-realistic try-on results. Specifically, we design a Multi-attribute Clothing Warping (MCW) module that adopts a two-stage alignment strategy based on multiple attributes to progressively estimate pixel-level clothing displacements. A Human Parsing Estimator (HPE) is then introduced to semantically divide the person into various regions, which provides structural constraints on the human body and therefore alleviates texture bleeding between clothing and limb regions. Finally, we propose a Limb-aware Texture Fusion (LTF) module to estimate high-quality details in limb regions by fusing textures of the clothing and the human body with the guidance of explicit limb-aware features. Extensive experiments demonstrate that our proposed method outperforms the state-of-the-art virtual try-on methods both qualitatively and quantitatively. The code is available at https://github.com/xyhanHIT/PL-VTON.

Path-Adaptive Matting for Efficient Inference Under Various Computational Cost Constraints

Mar 05, 2025

Abstract:In this paper, we explore a novel image matting task aimed at achieving efficient inference under various computational cost constraints, specifically FLOP limitations, using a single matting network. Existing matting methods, which have not explored scalable architectures or path-learning strategies, fail to tackle this challenge. To overcome these limitations, we introduce Path-Adaptive Matting (PAM), a framework that dynamically adjusts network paths based on image contexts and computational cost constraints. We formulate the training of the computational cost-constrained matting network as a bilevel optimization problem, jointly optimizing the matting network and the path estimator. Building on this formalization, we design a path-adaptive matting architecture by incorporating path selection layers and learnable connect layers to estimate optimal paths and perform efficient inference within a unified network. Furthermore, we propose a performance-aware path-learning strategy to generate path labels online by evaluating a few paths sampled from the prior distribution of optimal paths and network estimations, enabling robust and efficient online path learning. Experiments on five image matting datasets demonstrate that the proposed PAM framework achieves competitive performance across a range of computational cost constraints.

Continuous Approximations for Improving Quantization Aware Training of LLMs

Oct 06, 2024

Abstract:Model compression methods are used to reduce the computation and energy requirements for Large Language Models (LLMs). Quantization Aware Training (QAT), an effective model compression method, is proposed to reduce performance degradation after quantization. To further minimize this degradation, we introduce two continuous approximations to the QAT process on the rounding function, traditionally approximated by the Straight-Through Estimator (STE), and the clamping function. By applying both methods, the perplexity (PPL) on the WikiText-v2 dataset of the quantized model reaches 9.0815, outperforming the baseline's 9.9621. Also, we achieve a 2.76% improvement on BoolQ, and a 5.47% improvement on MMLU, proving that the step sizes and weights can be learned more accurately with our approach. Our method achieves better performance with the same precision, model size, and training setup, contributing to the development of more energy-efficient LLM technology that aligns with global sustainability goals.
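
For readers unfamiliar with the two functions named in this abstract, the following is a minimal sketch of the conventional baseline only: uniform quantize-dequantize with rounding and clamping, plus the Straight-Through Estimator gradient. The function names, the 4-bit signed range, and the scalar formulation are illustrative assumptions; the paper's own continuous approximations are not described in the abstract and are not reproduced here.

```python
def fake_quantize(w: float, step: float, qmin: int = -8, qmax: int = 7) -> float:
    """Quantize-dequantize one weight on a uniform grid (hypothetical 4-bit signed range)."""
    level = round(w / step)               # rounding: piecewise constant, zero gradient almost everywhere
    level = max(qmin, min(qmax, level))   # clamping: saturate to the representable integer range
    return level * step                   # map the integer level back to the weight scale


def ste_grad_wrt_weight(w: float, step: float, qmin: int = -8, qmax: int = 7) -> float:
    """STE backward pass: treat round() as identity, so the gradient is 1 inside the clamp range and 0 outside."""
    scaled = w / step
    return 1.0 if qmin <= scaled <= qmax else 0.0


in_range = fake_quantize(0.26, step=0.1)   # snaps to the nearest grid point
saturated = fake_quantize(5.0, step=0.1)   # saturates at qmax * step
```

The hard zero gradient outside the clamp range and the identity surrogate inside it are the two places where a smoother approximation could, in principle, change what the optimizer sees.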

Gemini: A Family of Highly Capable Multimodal Models

Dec 19, 2023

Abstract:This report introduces a new family of multimodal models, Gemini, that exhibit remarkable capabilities across image, audio, video, and text understanding. The Gemini family consists of Ultra, Pro, and Nano sizes, suitable for applications ranging from complex reasoning tasks to on-device memory-constrained use-cases. Evaluation on a broad range of benchmarks shows that our most-capable Gemini Ultra model advances the state of the art in 30 of 32 of these benchmarks - notably being the first model to achieve human-expert performance on the well-studied exam benchmark MMLU, and improving the state of the art in every one of the 20 multimodal benchmarks we examined. We believe that the new capabilities of Gemini models in cross-modal reasoning and language understanding will enable a wide variety of use cases and we discuss our approach toward deploying them responsibly to users.

ReST meets ReAct: Self-Improvement for Multi-Step Reasoning LLM Agent

Dec 15, 2023

Abstract:Answering complex natural language questions often necessitates multi-step reasoning and integrating external information. Several systems have combined knowledge retrieval with a large language model (LLM) to answer such questions. These systems, however, suffer from various failure cases, and we cannot directly train them end-to-end to fix such failures, as interaction with external knowledge is non-differentiable. To address these deficiencies, we define a ReAct-style LLM agent with the ability to reason and act upon external knowledge. We further refine the agent through a ReST-like method that iteratively trains on previous trajectories, employing growing-batch reinforcement learning with AI feedback for continuous self-improvement and self-distillation. Starting from a prompted large model and after just two iterations of the algorithm, we can produce a fine-tuned small model that achieves comparable performance on challenging compositional question-answering benchmarks with two orders of magnitude fewer parameters.

ResMem: Learn what you can and memorize the rest

Feb 03, 2023

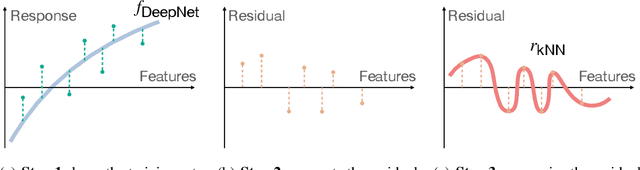

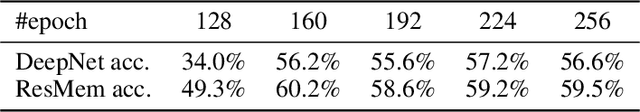

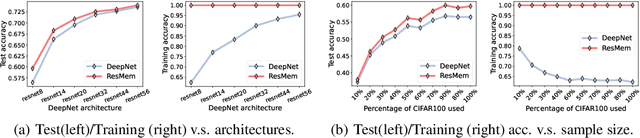

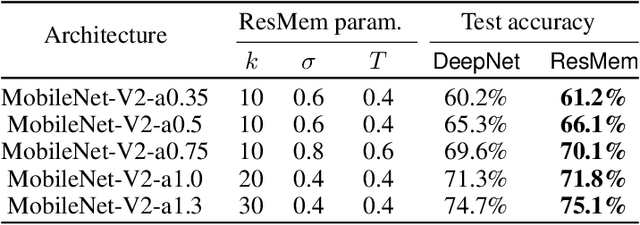

Abstract:The impressive generalization performance of modern neural networks is attributed in part to their ability to implicitly memorize complex training patterns. Inspired by this, we explore a novel mechanism to improve model generalization via explicit memorization. Specifically, we propose the residual-memorization (ResMem) algorithm, a new method that augments an existing prediction model (e.g. a neural network) by fitting the model's residuals with a $k$-nearest neighbor based regressor. The final prediction is then the sum of the original model and the fitted residual regressor. By construction, ResMem can explicitly memorize the training labels. Empirically, we show that ResMem consistently improves the test set generalization of the original prediction model across various standard vision and natural language processing benchmarks. Theoretically, we formulate a stylized linear regression problem and rigorously show that ResMem results in a more favorable test risk over the base predictor.
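
The residual-fitting idea in this abstract can be illustrated with a toy, dependency-free sketch. It assumes a 1-D least-squares line as the base model and an unweighted k-nearest-neighbor average of residuals; the names `fit_line` and `fit_resmem` and the toy data are hypothetical, and the paper's actual regressor and base models may differ.

```python
from typing import Callable, Sequence


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Callable[[float], float]:
    """Closed-form 1-D least-squares fit; stands in for the base prediction model."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x if var_x > 0 else 0.0
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_resmem(xs: Sequence[float], ys: Sequence[float], k: int = 1) -> Callable[[float], float]:
    """Base model plus a k-NN regressor fitted to the base model's training residuals."""
    base = fit_line(xs, ys)
    residuals = [y - base(x) for x, y in zip(xs, ys)]

    def knn_residual(x: float) -> float:
        # Unweighted mean of the residuals at the k closest training inputs (an assumption).
        nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - x))[:k]
        return sum(residuals[i] for i in nearest) / len(nearest)

    # Final prediction: original model output plus the fitted residual correction.
    return lambda x: base(x) + knn_residual(x)


# Toy data: a quadratic that a straight line cannot fit exactly.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [0.0, 1.0, 4.0, 9.0, 16.0]
base_model = fit_line(train_x, train_y)
resmem_model = fit_resmem(train_x, train_y, k=1)
```

With `k=1` the combined predictor returns the training label at every training input, which mirrors the abstract's remark that the training labels can be memorized by construction; larger `k` trades that exact memorization for smoother corrections.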
