Yunhe Feng

OLC-WA: Drift Aware Tuning-Free Online Classification with Weighted Average

Dec 14, 2025

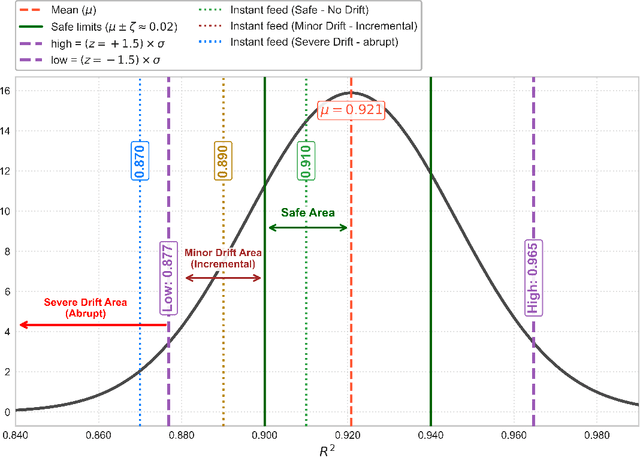

Abstract: Real-world data sets often exhibit temporal dynamics characterized by evolving data distributions. Disregarding this phenomenon, commonly referred to as concept drift, can significantly diminish a model's predictive accuracy. Furthermore, the presence of hyperparameters in online models exacerbates this issue. These parameters are typically fixed and cannot be dynamically adjusted by the user in response to the evolving data distribution. This paper introduces Online Classification with Weighted Average (OLC-WA), an adaptive, hyperparameter-free online classification model equipped with an automated optimization mechanism. OLC-WA operates by blending incoming data streams with an existing base model. This blending is facilitated by an exponentially weighted moving average. Furthermore, an integrated optimization mechanism dynamically detects concept drift, quantifies its magnitude, and adjusts the model based on the observed data stream characteristics. This approach empowers the model to effectively adapt to evolving data distributions within streaming environments. Rigorous empirical evaluation across diverse benchmark datasets shows that OLC-WA achieves performance comparable to batch models in stationary environments, maintaining accuracy within 1-3%, and surpasses leading online baselines by 10-25% under drift, demonstrating its effectiveness in adapting to dynamic data streams.
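
As a rough illustration of the blending idea described in this abstract, the sketch below averages the weight vector of a base linear model with the weight vector fitted on each incoming mini-batch, using an exponentially weighted moving average. It is a minimal sketch under stated assumptions, not the OLC-WA algorithm itself: the function names `blend_weights` and `ewma_stream` and the fixed `alpha` are hypothetical, whereas the abstract says the paper adjusts the blend automatically based on detected drift.

```python
from typing import Iterable, List, Sequence


def blend_weights(base: Sequence[float],
                  incoming: Sequence[float],
                  alpha: float) -> List[float]:
    """Blend two weight vectors: (1 - alpha) * base + alpha * incoming.

    `alpha` (hypothetical name) is the share given to the incoming model.
    A larger alpha lets new data override the base model faster.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(base) != len(incoming):
        raise ValueError("weight vectors must have the same length")
    return [(1.0 - alpha) * b + alpha * w for b, w in zip(base, incoming)]


def ewma_stream(models: Iterable[Sequence[float]], alpha: float) -> List[float]:
    """Fold a stream of per-batch weight vectors into one running model.

    The first vector seeds the base model; each later vector is blended in.
    Assumption: alpha is held fixed here purely for illustration.
    """
    iterator = iter(models)
    current = list(next(iterator))
    for weights in iterator:
        current = blend_weights(current, weights, alpha)
    return current
```

With a fixed `alpha`, the influence of older batches decays geometrically, which is what makes the average "exponentially weighted"; a drift-aware variant would raise `alpha` when the incoming batch disagrees strongly with the base model.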

Poly-FEVER: A Multilingual Fact Verification Benchmark for Hallucination Detection in Large Language Models

Mar 19, 2025

Abstract: Hallucinations in generative AI, particularly in Large Language Models (LLMs), pose a significant challenge to the reliability of multilingual applications. Existing benchmarks for hallucination detection focus primarily on English and a few widely spoken languages, lacking the breadth to assess inconsistencies in model performance across diverse linguistic contexts. To address this gap, we introduce Poly-FEVER, a large-scale multilingual fact verification benchmark specifically designed for evaluating hallucination detection in LLMs. Poly-FEVER comprises 77,973 labeled factual claims spanning 11 languages, sourced from FEVER, Climate-FEVER, and SciFact. It provides the first large-scale dataset tailored for analyzing hallucination patterns across languages, enabling systematic evaluation of LLMs such as ChatGPT and the LLaMA series. Our analysis reveals how topic distribution and web resource availability influence hallucination frequency, uncovering language-specific biases that impact model accuracy. By offering a multilingual benchmark for fact verification, Poly-FEVER facilitates cross-linguistic comparisons of hallucination detection and contributes to the development of more reliable, language-inclusive AI systems. The dataset is publicly available to advance research in responsible AI, fact-checking methodologies, and multilingual NLP, promoting greater transparency and robustness in LLM performance. The proposed Poly-FEVER is available at: https://huggingface.co/datasets/HanzhiZhang/Poly-FEVER.

DP-GTR: Differentially Private Prompt Protection via Group Text Rewriting

Mar 06, 2025

Abstract: Prompt privacy is crucial, especially when using online large language models (LLMs), due to the sensitive information often contained within prompts. While LLMs can enhance prompt privacy through text rewriting, existing methods primarily focus on document-level rewriting, neglecting the rich, multi-granular representations of text. This limitation restricts LLM utilization to specific tasks, overlooking their generalization and in-context learning capabilities, thus hindering practical application. To address this gap, we introduce DP-GTR, a novel three-stage framework that leverages local differential privacy (DP) and the composition theorem via group text rewriting. DP-GTR is the first framework to integrate both document-level and word-level information while exploiting in-context learning to simultaneously improve privacy and utility, effectively bridging local and global DP mechanisms at the individual data point level. Experiments on CommonSense QA and DocVQA demonstrate that DP-GTR outperforms existing approaches, achieving a superior privacy-utility trade-off. Furthermore, our framework is compatible with existing rewriting techniques, serving as a plug-in to enhance privacy protection. Our code is publicly available at https://github.com/FatShion-FTD/DP-GTR for reproducibility.
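
For reference, the composition theorem named in this abstract is commonly stated in its basic sequential form as below. This is the textbook statement, not DP-GTR's own privacy accounting, which the abstract does not spell out; the symbols $M_i$, $\varepsilon_i$, and $k$ are notation introduced here for illustration only.

```latex
% Basic sequential composition of differentially private mechanisms
If each mechanism $M_i$ satisfies $\varepsilon_i$-differential privacy
for $i = 1, \dots, k$, then the combined release
\[
  M(x) = \bigl(M_1(x), \dots, M_k(x)\bigr)
  \quad\text{satisfies}\quad
  \Bigl(\sum_{i=1}^{k} \varepsilon_i\Bigr)\text{-differential privacy.}
\]
```

In other words, rewriting the same prompt several times spends privacy budget additively, so a group of $k$ rewrites at budget $\varepsilon$ each costs at most $k\varepsilon$ in total under this bound.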

PRVQL: Progressive Knowledge-guided Refinement for Robust Egocentric Visual Query Localization

Feb 11, 2025

Abstract: Egocentric visual query localization (EgoVQL) focuses on localizing the target of interest in space and time from first-person videos, given a visual query. Despite recent progress, existing methods often struggle to handle severe object appearance changes and cluttered backgrounds in the video because they lack sufficient target cues, leading to degraded performance. To address this, we introduce PRVQL, a novel Progressive knowledge-guided Refinement framework for EgoVQL. The core idea is to continuously exploit target-relevant knowledge directly from videos and use it as guidance to refine both query and video features for improved target localization. PRVQL contains multiple processing stages. The target knowledge from one stage, comprising appearance and spatial knowledge extracted via two specially designed knowledge learning modules, is used as guidance to refine the query and video features for the next stage, which are in turn used to generate more accurate knowledge for further feature refinement. With such a progressive process, target knowledge in PRVQL is gradually improved, which, in turn, leads to better refined query and video features for localization in the final stage. Compared to previous methods, PRVQL, in addition to the given object cues, draws on additional crucial target information from the video as guidance to refine features, and hence improves EgoVQL in complicated scenes. In our experiments on the challenging Ego4D benchmark, PRVQL achieves state-of-the-art results and largely surpasses other methods, showing its efficacy. Our code, model and results will be released at https://github.com/fb-reps/PRVQL.

Unlocking Cross-Lingual Sentiment Analysis through Emoji Interpretation: A Multimodal Generative AI Approach

Dec 23, 2024

Abstract: Emojis have become ubiquitous in online communication, serving as a universal medium to convey emotions and decorative elements. Their widespread use transcends language and cultural barriers, enhancing understanding and fostering more inclusive interactions. While existing work has gained valuable insight into emoji understanding, the capability of emojis to serve as a universal sentiment indicator, assessed with large language models (LLMs), has not been thoroughly examined. Our study investigates the capacity of emojis to serve as reliable sentiment markers through LLMs across languages and cultures. We leveraged the multimodal capabilities of ChatGPT to explore the sentiments of various representations of emojis and evaluated how well emoji-conveyed sentiment aligned with text sentiment on a multilingual dataset collected from 32 countries. Our analysis reveals that the accuracy of LLM-based emoji-conveyed sentiment is 81.43%, underscoring emojis' significant potential to serve as a universal sentiment marker. We also found a consistent trend: the accuracy of sentiment conveyed by emojis increased as the number of emojis in the text grew. The results reinforce the potential of emojis to serve as global sentiment indicators, offering insight into fields such as cross-lingual and cross-cultural sentiment analysis on social media platforms. Code: https://github.com/ResponsibleAILab/emoji-universal-sentiment.

MNIST-Fraction: Enhancing Math Education with AI-Driven Fraction Detection and Analysis

Dec 11, 2024

Abstract: Mathematics education, a crucial and basic field, significantly influences students' learning in related subjects and their future careers. The use of artificial intelligence to interpret and comprehend math problems in education is not yet fully explored, owing to the scarcity of quality datasets and the intricacies of processing handwritten information. In this paper, we present a novel contribution to the field of mathematics education through the development of MNIST-Fraction, a dataset inspired by the renowned MNIST and specifically tailored for the recognition and understanding of handwritten math fractions. Our approach uses deep learning, specifically Convolutional Neural Networks (CNNs), to detect and analyze handwritten fractions, along with their numerators and denominators. This capability is pivotal in calculating the value of fractions, a fundamental aspect of math learning. The MNIST-Fraction dataset is designed to closely mimic real-world scenarios, providing a reliable and relevant resource for AI-driven educational tools. Furthermore, we conduct a comprehensive comparison of our dataset with the original MNIST dataset using various classifiers, demonstrating the effectiveness and versatility of MNIST-Fraction in both detection and classification tasks. This comparative analysis not only validates the practical utility of our dataset but also offers insights into its potential applications in math education. To foster collaboration and further research within the computational and educational communities, our work aims to bridge the gap in high-quality educational resources for math learning, offering a valuable tool for both educators and researchers in the field.

HALO: Hallucination Analysis and Learning Optimization to Empower LLMs with Retrieval-Augmented Context for Guided Clinical Decision Making

Sep 16, 2024

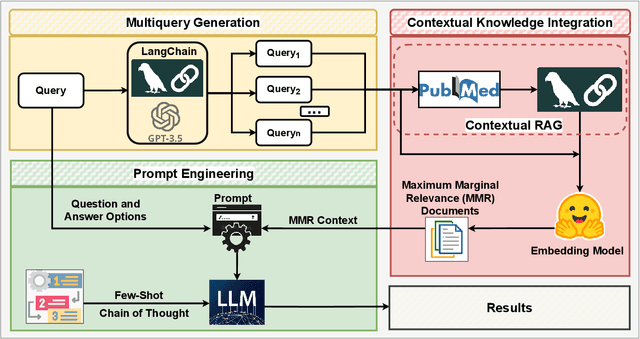

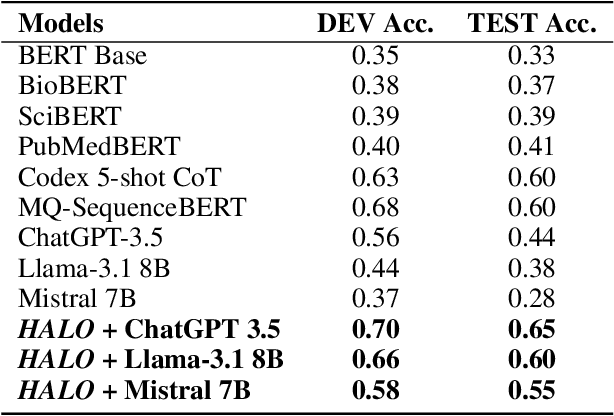

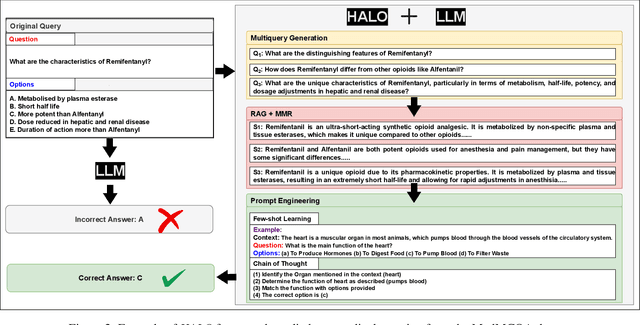

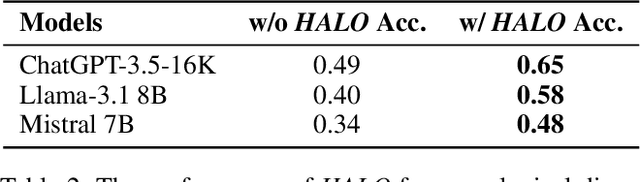

Abstract: Large language models (LLMs) have significantly advanced natural language processing tasks, yet they are susceptible to generating inaccurate or unreliable responses, a phenomenon known as hallucination. In critical domains such as health and medicine, these hallucinations can pose serious risks. This paper introduces HALO, a novel framework designed to enhance the accuracy and reliability of medical question-answering (QA) systems by focusing on the detection and mitigation of hallucinations. Our approach generates multiple variations of a given query using LLMs and retrieves relevant information from external open knowledge bases to enrich the context. We utilize maximum marginal relevance scoring to prioritize the retrieved context, which is then provided to LLMs for answer generation, thereby reducing the risk of hallucinations. The integration of LangChain further streamlines this process, resulting in a notable and robust increase in the accuracy of both open-source and commercial LLMs, such as Llama-3.1 (from 44% to 65%) and ChatGPT (from 56% to 70%). This framework underscores the critical importance of addressing hallucinations in medical QA systems, ultimately improving clinical decision-making and patient care. The open-source HALO is available at: https://github.com/ResponsibleAILab/HALO.
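
The maximum marginal relevance (MMR) scoring mentioned in this abstract can be sketched as a greedy selection that trades relevance to the query against redundancy with passages already chosen. The code below is a generic, dependency-free illustration of that technique, not HALO's implementation (which the abstract says is built with LangChain); the function names `cosine` and `mmr_select`, the toy embedding vectors, and the trade-off parameter `lam` are assumptions made for this example.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def mmr_select(query: Sequence[float],
               docs: Sequence[Sequence[float]],
               k: int,
               lam: float = 0.5) -> List[int]:
    """Greedily pick up to k document indices by maximum marginal relevance.

    Score = lam * sim(query, doc) - (1 - lam) * max sim(doc, already chosen).
    lam = 1.0 ranks purely by relevance; smaller values favor diversity.
    """
    selected: List[int] = []
    candidates = list(range(len(docs)))
    while candidates and len(selected) < k:
        def score(i: int) -> float:
            relevance = cosine(query, docs[i])
            redundancy = max(
                (cosine(docs[i], docs[j]) for j in selected), default=0.0
            )
            return lam * relevance - (1.0 - lam) * redundancy

        best = max(candidates, key=score)  # ties resolve to the lowest index
        selected.append(best)
        candidates.remove(best)
    return selected
```

For example, with two identical passages and one distinct passage, `lam=0.5` skips the duplicate in favor of the distinct passage, while `lam=1.0` keeps the duplicate because only relevance counts.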

RedAgent: Red Teaming Large Language Models with Context-aware Autonomous Language Agent

Jul 23, 2024

Abstract: Recently, advanced Large Language Models (LLMs) such as GPT-4 have been integrated into many real-world applications like Code Copilot. These applications have significantly expanded the attack surface of LLMs, exposing them to a variety of threats. Among them, jailbreak attacks that induce toxic responses through jailbreak prompts have raised critical safety concerns. To identify these threats, a growing number of red teaming approaches simulate potential adversarial scenarios by crafting jailbreak prompts to test the target LLM. However, existing red teaming methods do not consider the unique vulnerabilities of LLMs in different scenarios, making it difficult to adjust the jailbreak prompts to find context-specific vulnerabilities. Moreover, these methods are limited to refining jailbreak templates using a few mutation operations, lacking the automation and scalability to adapt to different scenarios. To enable context-aware and efficient red teaming, we abstract and model existing attacks into a coherent concept called "jailbreak strategy" and propose a multi-agent LLM system named RedAgent that leverages these strategies to generate context-aware jailbreak prompts. By self-reflecting on contextual feedback in an additional memory buffer, RedAgent continuously learns how to leverage these strategies to achieve effective jailbreaks in specific contexts. Extensive experiments demonstrate that our system can jailbreak most black-box LLMs in just five queries, a twofold improvement in efficiency over existing red teaming methods. Additionally, RedAgent can jailbreak customized LLM applications more efficiently. By generating context-aware jailbreak prompts targeting applications on GPTs, we discover 60 severe vulnerabilities in these real-world applications with only two queries per vulnerability. We have reported all found issues and communicated with OpenAI and Meta for bug fixes.

LoReTrack: Efficient and Accurate Low-Resolution Transformer Tracking

May 27, 2024

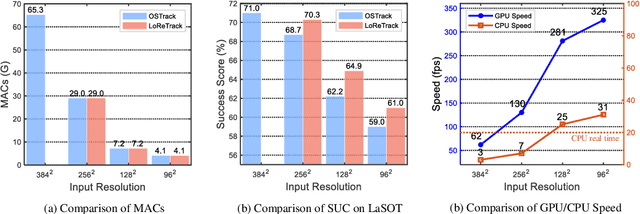

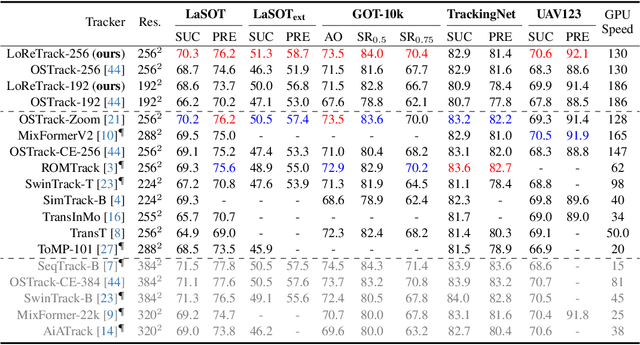

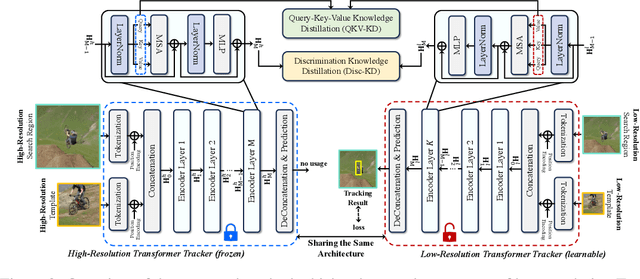

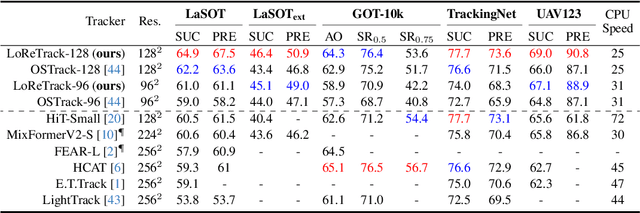

Abstract: High-performance Transformer trackers have shown excellent results, yet they often bear a heavy computational load. Observing that a smaller input can immediately and conveniently reduce computation without changing the model, an easy solution is to adopt low-resolution input for efficient Transformer tracking. Although faster, this substantially hurts tracking accuracy due to information loss in low-resolution tracking. In this paper, we aim to mitigate such information loss to boost the performance of low-resolution Transformer tracking via dual knowledge distillation from a frozen high-resolution (but not a larger) Transformer tracker. The core lies in two simple yet effective distillation modules, comprising query-key-value knowledge distillation (QKV-KD) and discrimination knowledge distillation (Disc-KD), across resolutions. The former, from the global view, allows the low-resolution tracker to inherit the features and interactions from the high-resolution tracker, while the latter, from the target-aware view, enhances the target-background distinguishing capacity by imitating discriminative regions from its high-resolution counterpart. With the dual knowledge distillation, our Low-Resolution Transformer Tracker (LoReTrack) enjoys not only high efficiency owing to reduced computation but also enhanced accuracy by distilling knowledge from the high-resolution tracker. In extensive experiments, LoReTrack with a 256x256 resolution consistently improves on the baseline with the same resolution, and shows competitive or even better results compared to the 384x384 high-resolution Transformer tracker, while running 52% faster and saving 56% MACs. Moreover, LoReTrack is resolution-scalable. With a 128x128 resolution, it runs at 25 fps on a CPU with 64.9%/46.4% SUC scores on LaSOT/LaSOText, surpassing all other CPU real-time trackers. Code will be released.

Benchmarking the Robustness of UAV Tracking Against Common Corruptions

Mar 18, 2024

Abstract: The robustness of unmanned aerial vehicle (UAV) tracking is crucial in many tasks like surveillance and robotics. Despite its importance, little attention is paid to the performance of UAV trackers under common corruptions due to the lack of a dedicated platform. To address this, we propose UAV-C, a large-scale benchmark for assessing the robustness of UAV trackers under common corruptions. Specifically, UAV-C is built upon two popular UAV datasets by introducing 18 common corruptions from 4 representative categories, including adversarial, sensor, blur, and composite corruptions, at different levels. In total, UAV-C contains more than 10K sequences. To understand the robustness of existing UAV trackers against corruptions, we extensively evaluate 12 representative algorithms on UAV-C. Our study reveals several key findings: 1) Current trackers are vulnerable to corruptions, indicating that more attention is needed in enhancing the robustness of UAV trackers; 2) When corruptions occur together, the resulting composite corruptions cause more severe degradation to trackers; and 3) While each tracker has its unique performance profile, some trackers may be more sensitive to specific corruptions. By releasing UAV-C, we hope it, along with the comprehensive analysis, serves as a valuable resource for advancing the robustness of UAV tracking against corruption. Our UAV-C will be available at https://github.com/Xiaoqiong-Liu/UAV-C.
