Xiaopeng Zhao

Integrating Reinforcement Learning and AI Agents for Adaptive Robotic Interaction and Assistance in Dementia Care

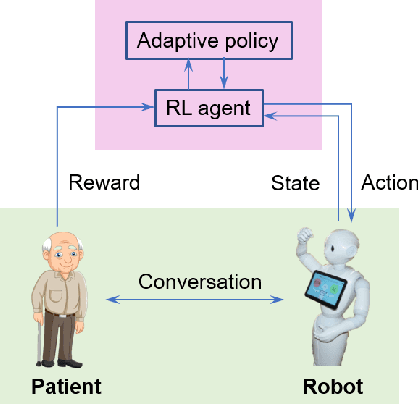

Jan 28, 2025

Abstract:This study explores a novel approach to advancing dementia care by integrating socially assistive robotics, reinforcement learning (RL), large language models (LLMs), and clinical domain expertise within a simulated environment. This integration addresses the critical challenge of limited experimental data in socially assistive robotics for dementia care, providing a dynamic simulation environment that realistically models interactions between persons living with dementia (PLWDs) and robotic caregivers. The proposed framework introduces a probabilistic model to represent the cognitive and emotional states of PLWDs, combined with an LLM-based behavior simulation to emulate their responses. We further develop and train an adaptive RL system enabling humanoid robots, such as Pepper, to deliver context-aware and personalized interactions and assistance based on PLWDs' cognitive and emotional states. The framework also generalizes to computer-based agents, highlighting its versatility. Results demonstrate that the RL system, enhanced by LLMs, effectively interprets and responds to the complex needs of PLWDs, providing tailored caregiving strategies. This research contributes to human-computer and human-robot interaction by offering a customizable AI-driven caregiving platform, advancing understanding of dementia-related challenges, and fostering collaborative innovation in assistive technologies. The proposed approach has the potential to enhance the independence and quality of life for PLWDs while alleviating caregiver burden, underscoring the transformative role of interaction-focused AI systems in dementia care.

Human-Computer Interaction and Human-AI Collaboration in Advanced Air Mobility: A Comprehensive Review

Dec 10, 2024

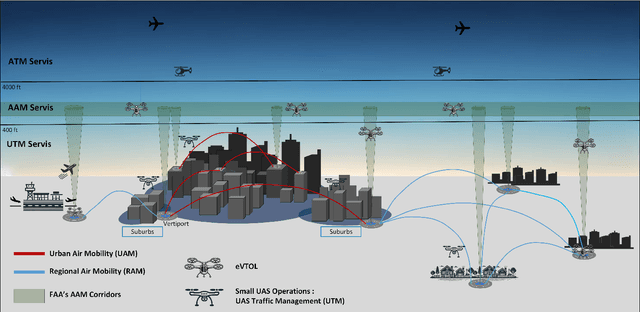

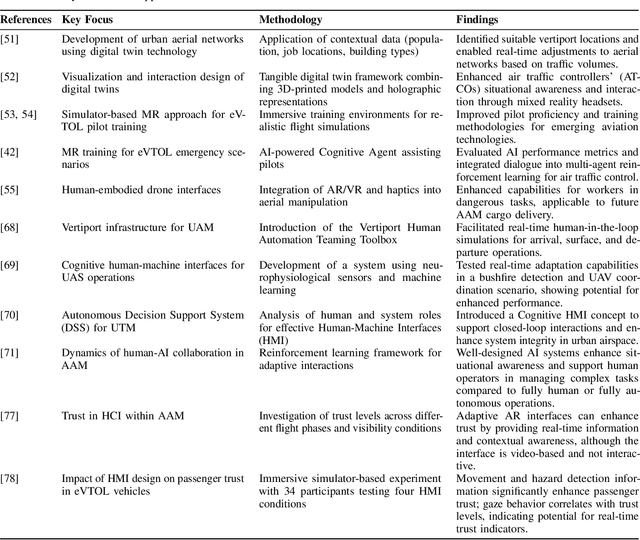

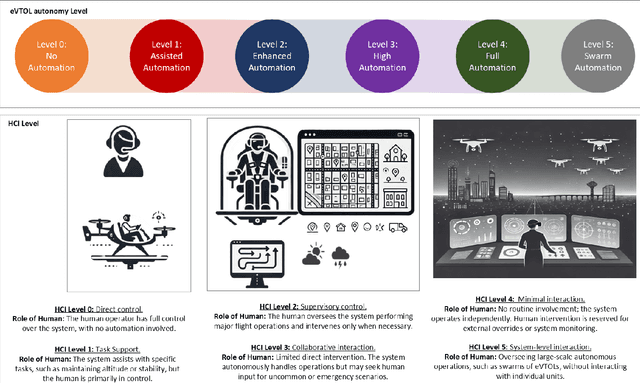

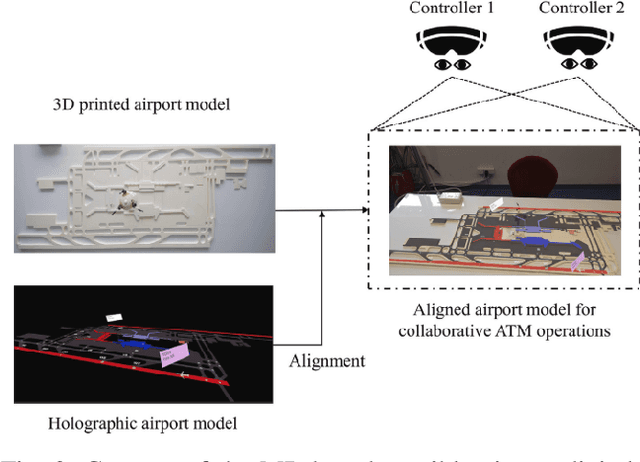

Abstract:The increasing rates of global urbanization and vehicle usage are leading to a shift of mobility to the third dimension through Advanced Air Mobility (AAM), offering a promising solution for faster, safer, cleaner, and more efficient transportation. As air transportation continues to evolve with more automated and autonomous systems, advancements in AAM require a deep understanding of human-computer interaction and human-AI collaboration to ensure safe and effective operations in complex urban and regional environments. There has been a significant increase in publications regarding these emerging applications; thus, there is a need to review developments in this area. This paper comprehensively reviews the current state of research on human-computer interaction and human-AI collaboration in AAM. Specifically, we focus on AAM applications related to the design of human-machine interfaces for various uses, including pilot training, air traffic management, and the integration of AI-assisted decision-making systems with immersive technologies such as extended, virtual, mixed, and augmented reality devices. Additionally, we provide a comprehensive analysis of the challenges AAM encounters in integrating human-computer frameworks, including unique challenges associated with these interactions, such as trust in AI systems and safety concerns. Finally, we highlight emerging opportunities and propose future research directions to bridge the gap between human factors and technological advancements in AAM.

HALO: Hallucination Analysis and Learning Optimization to Empower LLMs with Retrieval-Augmented Context for Guided Clinical Decision Making

Sep 16, 2024

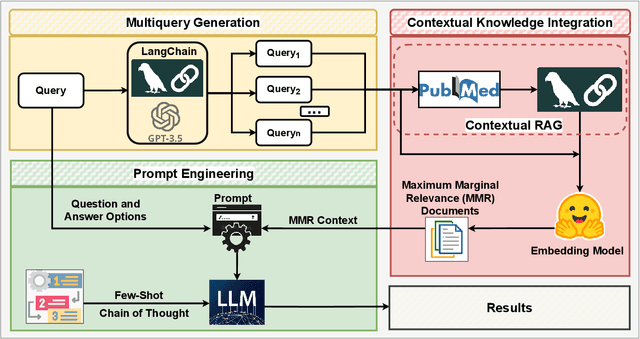

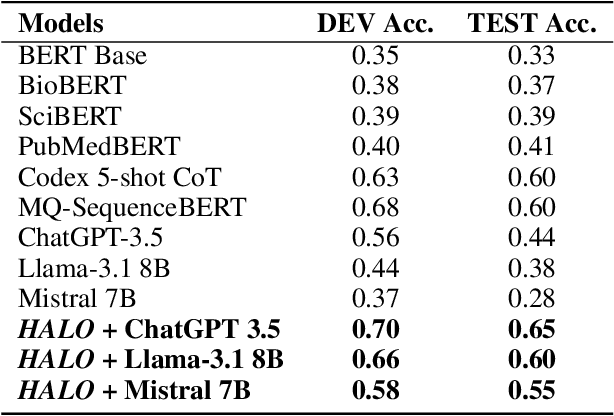

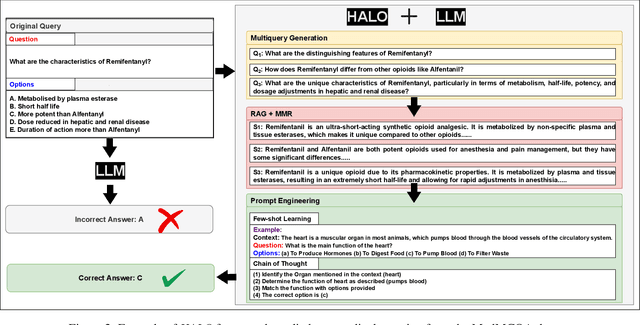

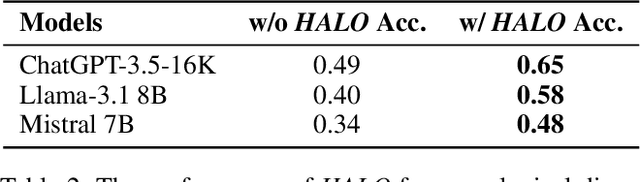

Abstract:Large language models (LLMs) have significantly advanced natural language processing tasks, yet they are susceptible to generating inaccurate or unreliable responses, a phenomenon known as hallucination. In critical domains such as health and medicine, these hallucinations can pose serious risks. This paper introduces HALO, a novel framework designed to enhance the accuracy and reliability of medical question-answering (QA) systems by focusing on the detection and mitigation of hallucinations. Our approach generates multiple variations of a given query using LLMs and retrieves relevant information from external open knowledge bases to enrich the context. We utilize maximum marginal relevance scoring to prioritize the retrieved context, which is then provided to LLMs for answer generation, thereby reducing the risk of hallucinations. The integration of LangChain further streamlines this process, resulting in a notable and robust increase in the accuracy of both open-source and commercial LLMs, such as Llama-3.1 (from 44% to 65%) and ChatGPT (from 56% to 70%). This framework underscores the critical importance of addressing hallucinations in medical QA systems, ultimately improving clinical decision-making and patient care. The open-source HALO is available at: https://github.com/ResponsibleAILab/HALO.
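The maximum marginal relevance (MMR) step mentioned in the abstract above can be illustrated with a minimal pure-Python sketch. This is not the HALO implementation (see the linked repository for that). The function names `cosine` and `mmr_select`, the toy two-dimensional vectors, and the trade-off weight `lam` are all assumptions chosen for illustration. The idea is to pick, one at a time, the retrieved item that is most relevant to the query while being least similar to the items already chosen.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mmr_select(query_vec, doc_vecs, k, lam=0.5):
    """Return indices of up to k documents chosen greedily by MMR.

    lam is a hypothetical trade-off weight: 1.0 ranks by relevance only,
    lower values penalize similarity to already selected documents.
    """
    selected = []
    candidates = list(range(len(doc_vecs)))
    while candidates and len(selected) < k:

        def score(i):
            relevance = cosine(query_vec, doc_vecs[i])
            # Redundancy is the highest similarity to anything already picked.
            redundancy = max(
                (cosine(doc_vecs[i], doc_vecs[j]) for j in selected),
                default=0.0,
            )
            return lam * relevance - (1.0 - lam) * redundancy

        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected


# Toy embeddings (assumed): documents 0 and 1 are near-duplicates, document 2 differs.
query = [1.0, 0.0]
docs = [[1.0, 0.1], [1.0, 0.12], [0.7, -0.7]]
diverse = mmr_select(query, docs, k=2, lam=0.5)
relevance_only = mmr_select(query, docs, k=2, lam=1.0)
```

With `lam=0.5` the near-duplicate is skipped in favor of the more distinct document, whereas `lam=1.0` reduces to plain relevance ranking.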

Exploring Nutritional Impact on Alzheimer's Mortality: An Explainable AI Approach

May 26, 2024

Abstract:This article uses machine learning (ML) and explainable artificial intelligence (XAI) techniques to investigate the relationship between nutritional status and mortality rates associated with Alzheimer's disease (AD). The Third National Health and Nutrition Examination Survey (NHANES III) database is employed for analysis. The random forest model is selected as the base model for XAI analysis, and the Shapley Additive Explanations (SHAP) method is used to assess feature importance. The results highlight significant nutritional factors such as serum vitamin B12 and glycated hemoglobin. The study demonstrates the effectiveness of random forests in predicting AD mortality compared to other diseases. This research provides insights into the impact of nutrition on AD and contributes to a deeper understanding of disease progression.

Smart Driver Monitoring Robotic System to Enhance Road Safety: A Comprehensive Review

Jan 28, 2024

Abstract:The future of transportation is being shaped by technology, and one revolutionary step in improving road safety is the incorporation of robotic systems into driver monitoring infrastructure. This literature review explores the current landscape of driver monitoring systems, ranging from traditional physiological parameter monitoring to advanced technologies such as facial recognition and steering analysis. Exploring the challenges faced by existing systems, the review then investigates the integration of robots as intelligent entities within this framework. These robotic systems, equipped with artificial intelligence and sophisticated sensors, not only monitor but actively engage with the driver, addressing cognitive and emotional states in real-time. The synthesis of existing research reveals a dynamic interplay between human and machine, offering promising avenues for innovation in adaptive, personalized, and ethically responsible human-robot interactions for driver monitoring. This review establishes a groundwork for comprehending the intricacies and potential avenues within this dynamic field. It encourages further investigation and advancement at the intersection of human-robot interaction and automotive safety, introducing a novel direction. This involves various sections detailing technological enhancements that can be integrated to propose an innovative and improved driver monitoring system.

NeRF$^\textbf{2}$: Neural Radio-Frequency Radiance Fields

May 10, 2023

Abstract:Although Maxwell discovered the physical laws of electromagnetic waves 160 years ago, how to precisely model the propagation of an RF signal in an electrically large and complex environment remains a long-standing problem. The difficulty is in the complex interactions between the RF signal and the obstacles (e.g., reflection, diffraction, etc.). Inspired by the great success of using a neural network to describe the optical field in computer vision, we propose a neural radio-frequency radiance field, NeRF$^\textbf{2}$, which represents a continuous volumetric scene function that makes sense of an RF signal's propagation. Particularly, after training with a few signal measurements, NeRF$^\textbf{2}$ can tell how/what signal is received at any position when it knows the position of a transmitter. As a physical-layer neural network, NeRF$^\textbf{2}$ can take advantage of the learned statistic model plus the physical model of ray tracing to generate a synthetic dataset that meets the training demands of application-layer artificial neural networks (ANNs). Thus, we can boost the performance of ANNs by the proposed turbo-learning, which mixes the true and synthetic datasets to intensify the training. Our experiment results show that turbo-learning can enhance performance with an approximate 50% increase. We also demonstrate the power of NeRF$^\textbf{2}$ in the field of indoor localization and 5G MIMO.

Learning-Based Strategy Design for Robot-Assisted Reminiscence Therapy Based on a Developed Model for People with Dementia

Sep 06, 2021

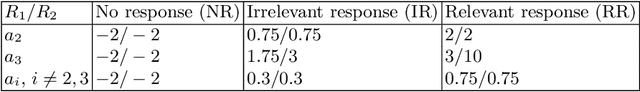

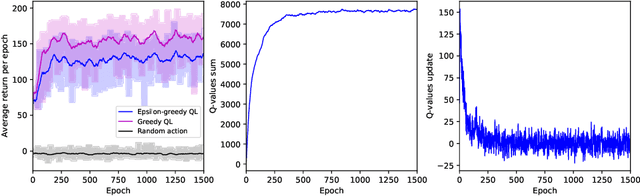

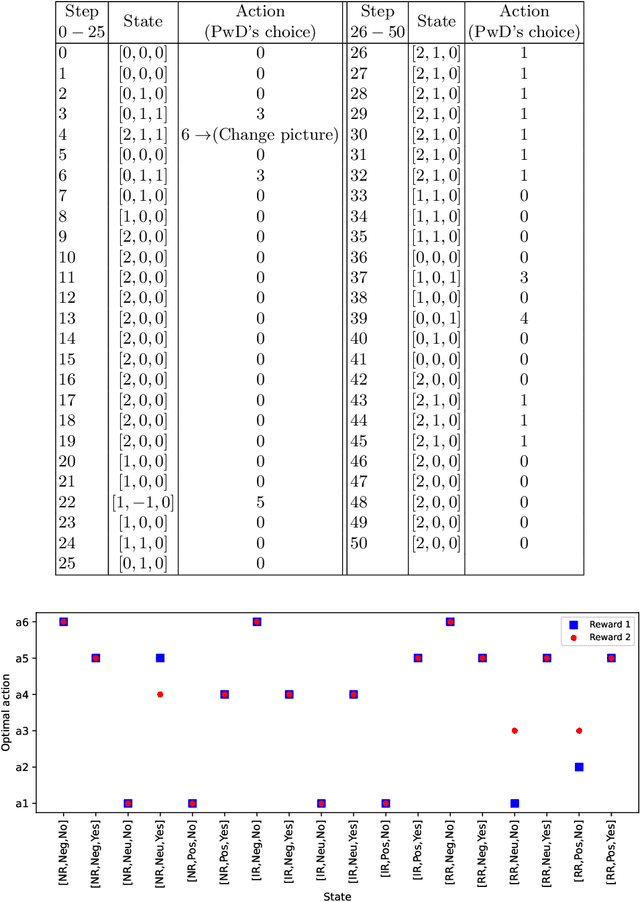

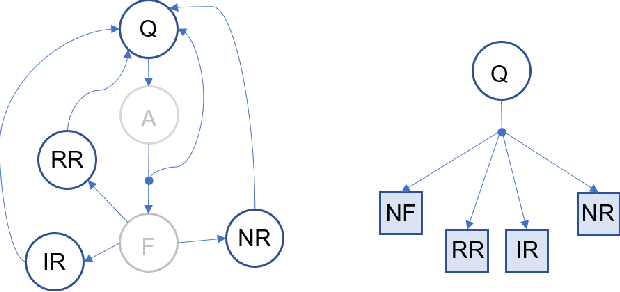

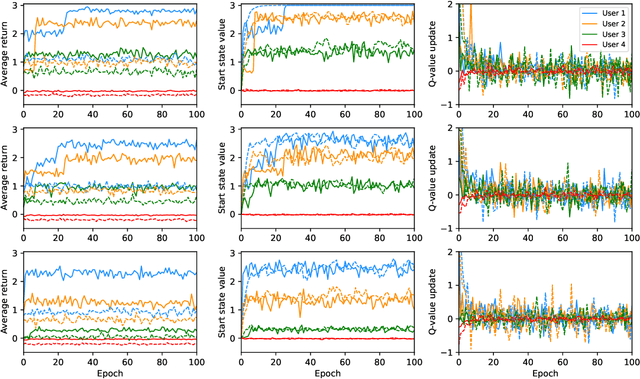

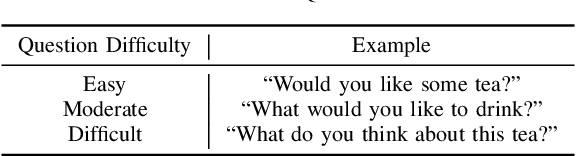

Abstract:In this paper, robot-assisted Reminiscence Therapy (RT) is studied as a psychosocial intervention for persons with dementia (PwDs). We aim to learn, by reinforcement learning, a conversation strategy for the robot that stimulates the PwD to talk. Specifically, to characterize the stochastic reactions of a PwD to the robot's actions, a simulation model of a PwD is developed which features the transition probabilities among different PwD states consisting of the response relevance, emotion levels and confusion conditions. A Q-learning (QL) algorithm is then designed to achieve the best conversation strategy for the robot. The objective is to stimulate the PwD to talk as much as possible while keeping the PwD's states as positive as possible. In certain conditions, the achieved strategy gives the PwD choices to continue or change the topic, or stop the conversation, so that the PwD has a sense of control to mitigate the conversation stress. To achieve this, the standard QL algorithm is revised to deliberately integrate the impact of the PwD's choices into the Q-value updates. Finally, the simulation results demonstrate the learning convergence and validate the efficacy of the achieved strategy. Tests show that the strategy can appropriately adjust the difficulty level of the prompt according to the PwD's states, take actions (e.g., repeat or explain the prompt, or comfort) to help the PwD out of bad states, and allow the PwD to control the conversation tendency when bad states continue.
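To make the learning setup in the abstract above more concrete, here is a minimal sketch of standard tabular Q-learning against a toy stochastic simulator. It is not the paper's model and does not include the paper's revised update that accounts for the PwD's choices. The two states, two actions, transition probabilities, and rewards are all hypothetical values chosen so that the behavior is easy to inspect.

```python
import random

# Hypothetical, heavily simplified state and action sets (not from the paper).
STATES = ["engaged", "confused"]
ACTIONS = ["prompt", "comfort"]


def step(state, action, rng):
    """Toy simulator: return (next_state, reward) with assumed probabilities."""
    if state == "engaged":
        if action == "prompt":
            # The person talks (reward +1) but may become confused.
            nxt = "confused" if rng.random() < 0.3 else "engaged"
            return nxt, 1.0
        return "engaged", 0.0  # comforting an engaged person changes nothing
    if action == "prompt":
        return "confused", -1.0  # prompting while confused is penalized
    # Comforting a confused person usually restores engagement.
    nxt = "engaged" if rng.random() < 0.8 else "confused"
    return nxt, 0.0


def train(steps=20000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Standard epsilon-greedy tabular Q-learning over one long interaction."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = "engaged"
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
        nxt, reward = step(state, action, rng)
        best_next = max(q[(nxt, a)] for a in ACTIONS)
        # Temporal-difference update toward reward plus discounted best next value.
        q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
        state = nxt
    return q


def greedy_policy(q):
    """Pick the highest-valued action in each state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


policy = greedy_policy(train())
```

In this toy setting the learned policy prompts when the simulated person is engaged and comforts when they are confused, which mirrors, in a much reduced form, the kind of state-dependent strategy the abstract describes.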

Assessing the Acceptability of a Humanoid Robot for Alzheimer's Disease and Related Dementia Care Using an Online Survey

Apr 26, 2021

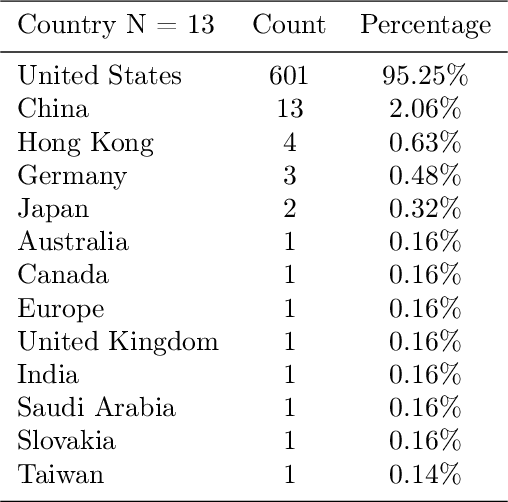

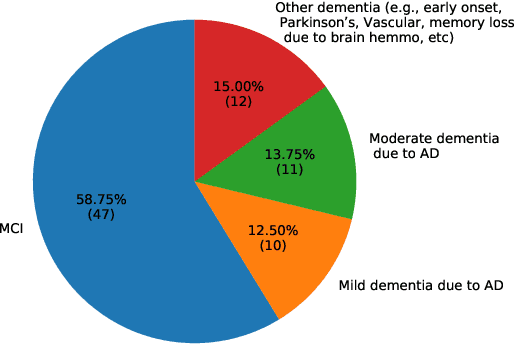

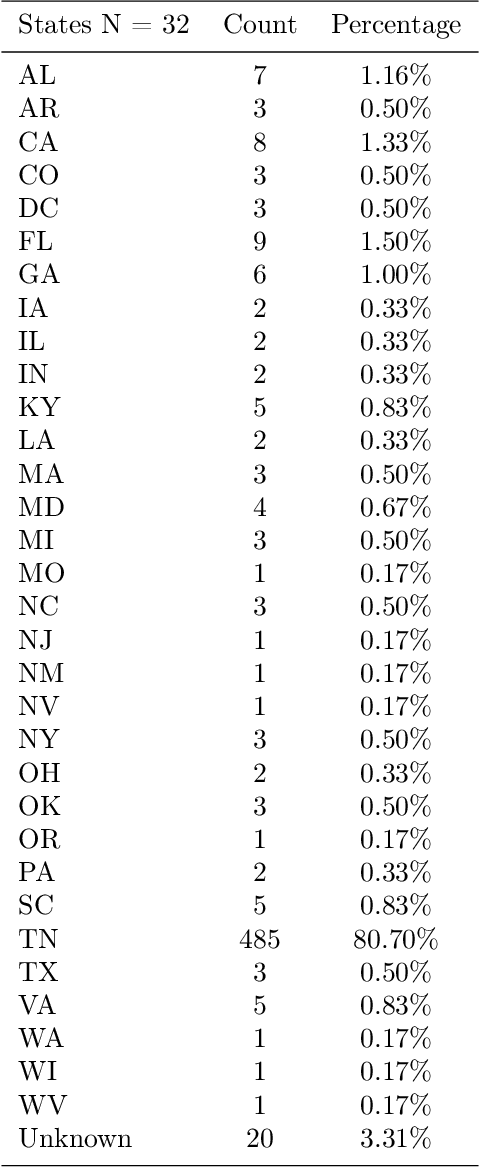

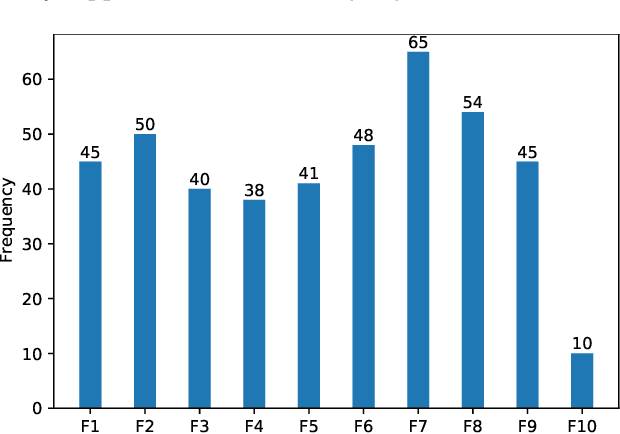

Abstract:In this work, an online survey was used to understand the acceptability of humanoid robots and users' needs in using these robots to assist with care among people with Alzheimer's disease and related dementias (ADRD), their family caregivers, health care professionals, and the general public. From November 12, 2020 to March 13, 2021, a total of 631 complete responses were collected, including 80 responses from people with mild cognitive impairment or ADRD, 245 responses from caregivers and health care professionals, and 306 responses from the general public. Overall, people with ADRD, caregivers, and the general public showed positive attitudes towards using the robot to assist with care for people with ADRD. The top three functions of robots required by the group of people with ADRD were reminders to take medicine, emergency call service, and helping contact medical services. Additional comments, suggestions, and concerns provided by caregivers and the general public are also discussed.

A Simulated Experiment to Explore Robotic Dialogue Strategies for People with Dementia

Apr 18, 2021

Abstract:People with Alzheimer's disease and related dementias (ADRD) often show the problem of repetitive questioning, which places a great burden on persons with ADRD (PwDs) and their caregivers. Conversational robots hold promise for coping with this problem and hence alleviating the burdens on caregivers. In this paper, we proposed a partially observable Markov decision process (POMDP) model for the PwD-robot interaction in the context of repetitive questioning, and used Q-learning to learn an adaptive conversation strategy (i.e., rate of follow-up question and difficulty of follow-up question) towards PwDs with different cognitive capabilities and different engagement levels. The results indicated that Q-learning was helpful for action selection for the robot. This may be a useful step towards the application of conversational social robots to cope with repetitive questioning in PwDs.

Brain Computer Interface for Gesture Control of a Social Robot: an Offline Study

Jul 23, 2017

Abstract:Brain computer interface (BCI) provides promising applications in neuroprosthesis and neurorehabilitation by controlling computers and robotic devices based on the patient's intentions. Here, we have developed a novel BCI platform that controls a personalized social robot using noninvasively acquired brain signals. Scalp electroencephalogram (EEG) signals are collected from a user in real-time during tasks of imaginary movements. The imagined body kinematics are decoded using a regression model to calculate the user-intended velocity. Then, the decoded kinematic information is mapped to control the gestures of a social robot. The platform here may be utilized as a human-robot-interaction framework by combining with neurofeedback mechanisms to enhance the cognitive capability of persons with dementia.
