Shahinul Hoque

Accuracy is Not Enough: Poisoning Interpretability in Federated Learning via Color Skew

Nov 18, 2025

Abstract: As machine learning models are increasingly deployed in safety-critical domains, visual explanation techniques have become essential tools for supporting transparency. In this work, we reveal a new class of attacks that compromise model interpretability without affecting accuracy. Specifically, we show that small color perturbations applied by adversarial clients in a federated learning setting can shift a model's saliency maps away from semantically meaningful regions while keeping the prediction unchanged. The proposed saliency-aware attack framework, called the Chromatic Perturbation Module, systematically crafts adversarial examples by altering the color contrast between foreground and background in a way that disrupts explanation fidelity. These perturbations accumulate across training rounds, poisoning the global model's internal feature attributions in a stealthy and persistent manner. Our findings challenge a common assumption in model auditing that correct predictions imply faithful explanations and demonstrate that interpretability itself can be an attack surface. We evaluate this vulnerability across multiple datasets and show that standard training pipelines are insufficient to detect or mitigate explanation degradation, especially in the federated learning setting, where subtle color perturbations are harder to discern. Our attack reduces peak activation overlap in Grad-CAM explanations by up to 35% while preserving classification accuracy above 96% on all evaluated datasets.
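The abstract reports a drop in "peak activation overlap" between Grad-CAM explanations but does not define the metric. As a rough illustration only, the sketch below assumes one plausible reading: take the top fraction of most-activated cells in each saliency map and compute their intersection-over-union. The names `peak_region`, `peak_overlap`, and `top_fraction` are hypothetical and are not taken from the paper.

```python
from typing import List, Set, Tuple

Map = List[List[float]]  # a saliency map as a 2D grid of activation values


def peak_region(saliency: Map, top_fraction: float = 0.2) -> Set[Tuple[int, int]]:
    """Return the coordinates of the most strongly activated cells.

    Assumption: "peak" means the top `top_fraction` of cells by activation.
    """
    cells = [(value, r, c)
             for r, row in enumerate(saliency)
             for c, value in enumerate(row)]
    keep = max(1, round(len(cells) * top_fraction))
    # Sort by activation (descending); break ties by position for determinism.
    cells.sort(key=lambda t: (-t[0], t[1], t[2]))
    return {(r, c) for _, r, c in cells[:keep]}


def peak_overlap(clean: Map, poisoned: Map, top_fraction: float = 0.2) -> float:
    """Intersection-over-union of the peak regions of two saliency maps."""
    a = peak_region(clean, top_fraction)
    b = peak_region(poisoned, top_fraction)
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    clean = [[0.9, 0.8], [0.1, 0.0]]     # attention on the top row
    shifted = [[0.1, 0.0], [0.9, 0.8]]   # attention moved to the bottom row
    print(peak_overlap(clean, clean, top_fraction=0.5))
    print(peak_overlap(clean, shifted, top_fraction=0.5))
```

Under this assumed definition, a model whose prediction is unchanged can still score a low overlap if its saliency mass has moved off the object, which is the failure mode the abstract describes.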

Smart Driver Monitoring Robotic System to Enhance Road Safety: A Comprehensive Review

Jan 28, 2024

Abstract: The future of transportation is being shaped by technology, and one revolutionary step in improving road safety is the incorporation of robotic systems into driver monitoring infrastructure. This literature review explores the current landscape of driver monitoring systems, ranging from traditional physiological parameter monitoring to advanced technologies such as facial recognition and steering analysis. After examining the challenges faced by existing systems, the review investigates the integration of robots as intelligent entities within this framework. These robotic systems, equipped with artificial intelligence and sophisticated sensors, not only monitor but actively engage with the driver, addressing cognitive and emotional states in real time. The synthesis of existing research reveals a dynamic interplay between human and machine, offering promising avenues for innovation in adaptive, personalized, and ethically responsible human-robot interactions for driver monitoring. This review establishes a groundwork for understanding the intricacies and potential avenues within this dynamic field. It encourages further investigation and advancement at the intersection of human-robot interaction and automotive safety, introducing a novel direction. Its sections detail technological enhancements that can be integrated to propose an innovative and improved driver monitoring system.

HRI Challenges Influencing Low Usage of Robotic Systems in Disaster Response and Rescue Operations

Jan 28, 2024

Abstract: Breakthroughs in AI and machine learning have brought a new revolution in robotics, resulting in the construction of more sophisticated robotic systems. Not only can these robotic systems benefit all domains, but they can also accomplish tasks that seemed unimaginable a few years ago. From swarms of small autonomous robots working together to move very heavy and large objects, to seemingly indestructible robots capable of entering the harshest environments, robotic systems are being designed for every task imaginable. A key area where robotic systems can help is disaster response and rescue operations. Robotic systems are capable of removing heavy materials, using multiple advanced sensors to find objects of interest, moving through debris and various inhospitable environments, and, not least, flying. Even with so much potential, we rarely see robotic systems used in disaster response scenarios and rescue missions. Many factors could be responsible for this low utilization. One of the key factors involves challenges related to Human-Robot Interaction (HRI). Therefore, in this paper, we try to understand the HRI challenges involved in using robotic systems in disaster response and rescue operations. Furthermore, we go through some of the proposed robotic systems designed for disaster response scenarios and identify the HRI challenges of those systems. Finally, we try to address the challenges by introducing ideas from various proposed research works.

Effects of Real-Life Traffic Sign Alteration on YOLOv7- an Object Recognition Model

May 09, 2023

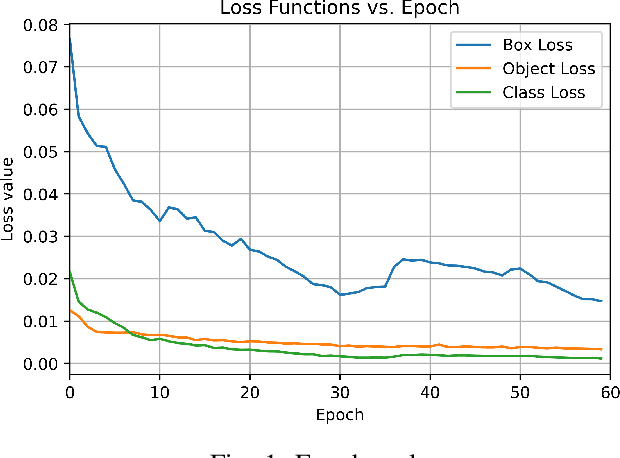

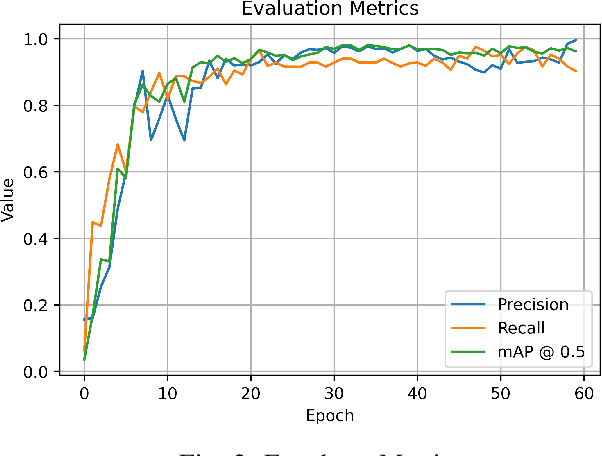

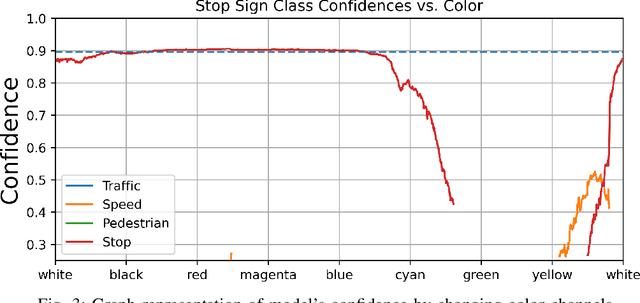

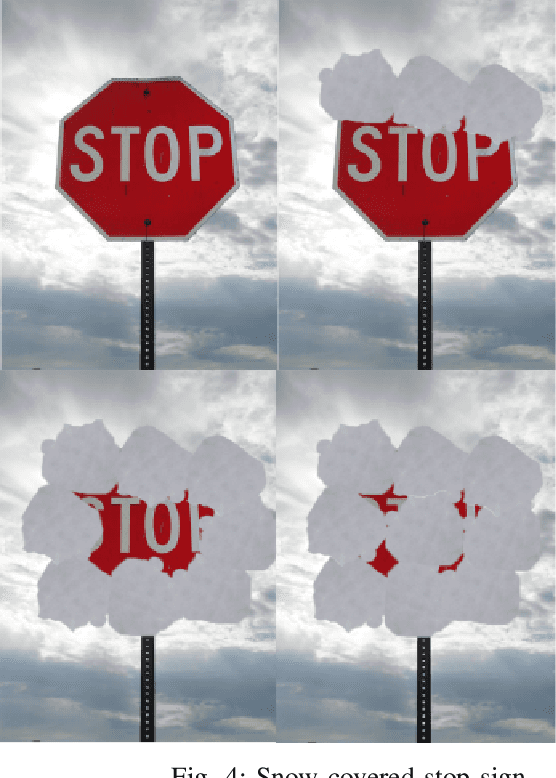

Abstract: The advancement of image processing has led to the widespread use of Object Recognition (OR) models in various applications, such as airport security and mail sorting. These models have become essential in demonstrating the capabilities of AI and supporting vital services like national postal operations. However, the performance of OR models can be impeded by real-life scenarios, such as traffic sign alteration. Therefore, this research investigates the effects of altered traffic signs on the accuracy and performance of object recognition models. To this end, a publicly available dataset was used to create different types of traffic sign alterations, including changes to size, shape, color, visibility, and angles. The impact of these alterations on the detection and classification abilities of the YOLOv7 (You Only Look Once) model was analyzed. The analysis reveals that the accuracy of object detection models decreases significantly when exposed to modified traffic signs under unlikely conditions. This study highlights the significance of enhancing the robustness of object detection models in real-life scenarios and the need for further investigation in this area to improve their accuracy and reliability.
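The abstract lists the kinds of alteration applied (size, color, visibility, angle) without giving the procedure. The sketch below is only an illustration of how such variants might be generated from a single sign image, using plain Python lists of RGB tuples so it runs without dependencies. All function names and parameters here are hypothetical and are not the authors' pipeline.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # rows of RGB pixels


def resize_nearest(img: Image, factor: float) -> Image:
    """Change size using nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, int(h * factor)), max(1, int(w * factor))
    return [[img[min(h - 1, int(r / factor))][min(w - 1, int(c / factor))]
             for c in range(new_w)]
            for r in range(new_h)]


def fade(img: Image, strength: float) -> Image:
    """Change color by blending toward mid-gray (0 = unchanged, 1 = fully gray)."""
    def blend(v: int) -> int:
        return round(v + (128 - v) * strength)
    return [[(blend(r), blend(g), blend(b)) for r, g, b in row] for row in img]


def occlude(img: Image, top: int, left: int, height: int, width: int,
            color: Pixel = (0, 0, 0)) -> Image:
    """Reduce visibility by covering a rectangle with a solid color."""
    return [[color if top <= r < top + height and left <= c < left + width else px
             for c, px in enumerate(row)]
            for r, row in enumerate(img)]


def rotate90(img: Image) -> Image:
    """Change angle by rotating the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


if __name__ == "__main__":
    sign: Image = [[(255, 0, 0), (255, 255, 255)],
                   [(0, 0, 0), (0, 0, 255)]]
    variants = {
        "larger": resize_nearest(sign, 2.0),
        "faded": fade(sign, 0.5),
        "occluded": occlude(sign, 0, 0, 1, 1),
        "rotated": rotate90(sign),
    }
    for name, variant in variants.items():
        print(name, len(variant), "x", len(variant[0]))
```

Each variant could then be passed to a detector and compared against the unaltered image; in practice an image library would replace these list-based helpers.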
