LoReTrack: Efficient and Accurate Low-Resolution Transformer Tracking


May 27, 2024

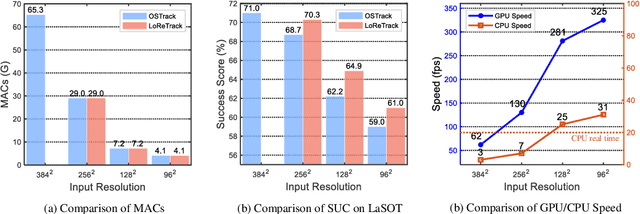

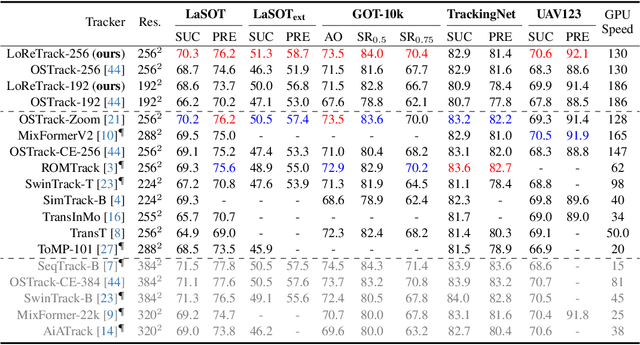

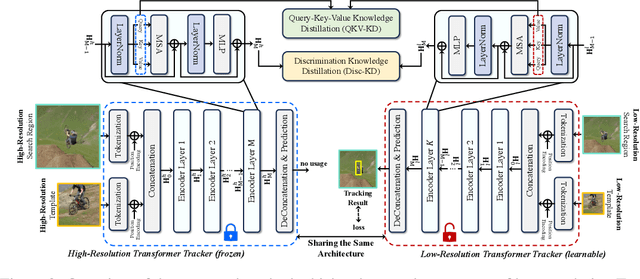

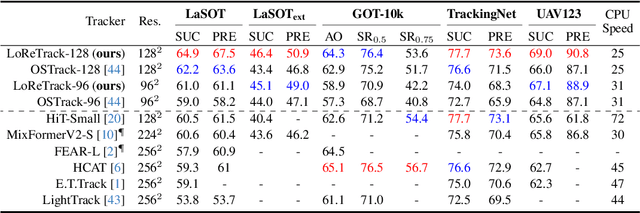

High-performance Transformer trackers have shown excellent results, yet they often bear a heavy computational load. Observing that a smaller input immediately and conveniently reduces computation without changing the model, a simple solution is to adopt low-resolution input for efficient Transformer tracking. Although faster, this considerably hurts tracking accuracy because of the information lost at low resolution. In this paper, we aim to mitigate this information loss and boost the performance of low-resolution Transformer tracking via dual knowledge distillation from a frozen high-resolution (but not larger) Transformer tracker. The core lies in two simple yet effective cross-resolution distillation modules: query-key-value knowledge distillation (QKV-KD) and discrimination knowledge distillation (Disc-KD). The former, from the global view, allows the low-resolution tracker to inherit the features and interactions of the high-resolution tracker, while the latter, from the target-aware view, enhances the capacity to distinguish target from background by imitating the discriminative regions of its high-resolution counterpart. With the dual knowledge distillation, our Low-Resolution Transformer Tracker (LoReTrack) enjoys not only high efficiency owing to reduced computation but also enhanced accuracy from the knowledge distilled from the high-resolution tracker. In extensive experiments, LoReTrack with a 256x256 resolution consistently improves over the baseline at the same resolution, and shows competitive or even better results compared to the 384x384 high-resolution Transformer tracker, while running 52% faster and saving 56% MACs. Moreover, LoReTrack is resolution-scalable. With a 128x128 resolution, it runs at 25 fps on a CPU with 64.9%/46.4% SUC scores on LaSOT/LaSOText, surpassing all other CPU real-time trackers. Code will be released.
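To make the resolution argument concrete, the following minimal sketch counts the patch tokens a ViT-style backbone would produce for a single square input at the three resolutions mentioned above. The patch size of 16 and the helper names `num_tokens` and `relative_cost` are assumptions for illustration only; they are not taken from the paper, and the sketch ignores the template branch and every non-attention layer, so it is not a MACs estimate for LoReTrack itself.

```python
# Illustrative arithmetic only: a patch size of 16 is an assumption,
# not a detail stated in the abstract.

def num_tokens(side: int, patch: int = 16) -> int:
    """Number of non-overlapping patch tokens for a square side x side input."""
    if side % patch != 0:
        raise ValueError("side must be divisible by patch")
    return (side // patch) ** 2


def relative_cost(side: int, ref_side: int, patch: int = 16) -> dict:
    """Compare token count and pairwise-attention size against a reference input."""
    n = num_tokens(side, patch)
    n_ref = num_tokens(ref_side, patch)
    return {
        "tokens": n,
        # Linear layers scale roughly with the number of tokens.
        "token_ratio": n / n_ref,
        # Self-attention builds an n x n score matrix, so it scales with n squared.
        "attention_pair_ratio": (n * n) / (n_ref * n_ref),
    }


if __name__ == "__main__":
    for side in (384, 256, 128):
        stats = relative_cost(side, ref_side=384)
        print(
            f"{side}x{side}: {stats['tokens']} tokens, "
            f"{stats['token_ratio']:.3f}x tokens, "
            f"{stats['attention_pair_ratio']:.3f}x attention pairs"
        )
```

Under these assumptions, a 256x256 input carries 4/9 of the tokens of a 384x384 input, which is why shrinking the input reduces computation without any change to the model.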
