Xiaoqiong Liu

CGTrack: Cascade Gating Network with Hierarchical Feature Aggregation for UAV Tracking

May 09, 2025

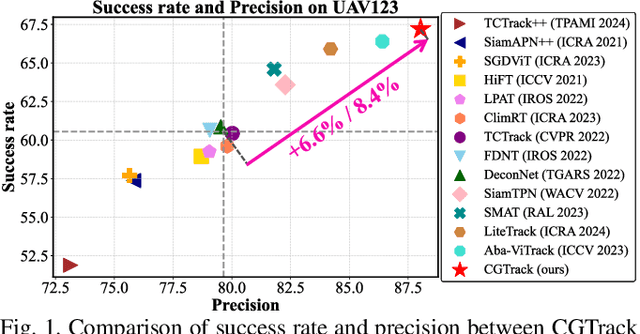

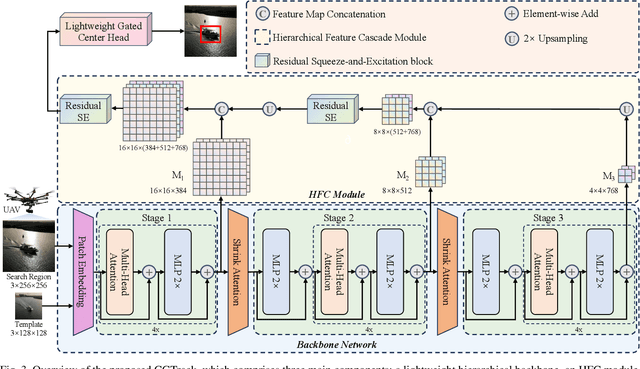

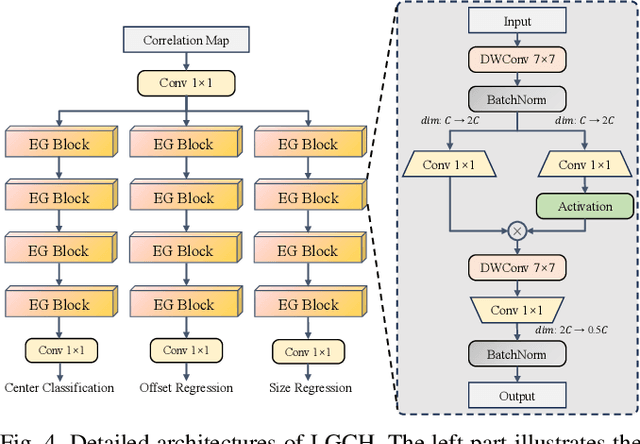

Abstract: Recent advancements in visual object tracking have markedly improved the capabilities of unmanned aerial vehicle (UAV) tracking, a critical component in real-world robotics applications. While integrating hierarchical lightweight networks has become a prevalent strategy for improving efficiency in UAV tracking, it often causes a significant drop in network capacity, which further exacerbates challenges in UAV scenarios such as frequent occlusions and extreme changes in viewing angle. To address these issues, we introduce a novel family of UAV trackers, termed CGTrack, which combines explicit and implicit techniques to expand network capacity within a coarse-to-fine framework. Specifically, we first introduce a Hierarchical Feature Cascade (HFC) module that reuses features to increase network capacity, integrating deep semantic cues with rich spatial information; this enhances feature representation at minimal computational cost. Building on this, we design a novel Lightweight Gated Center Head (LGCH) that uses gating mechanisms to decouple target-oriented coordinates from the previously expanded features, which contain dense local discriminative information. Extensive experiments on three challenging UAV tracking benchmarks demonstrate that CGTrack achieves state-of-the-art performance while running fast. Code will be available at https://github.com/Nightwatch-Fox11/CGTrack.
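The abstract does not specify how the gating in LGCH is built. As a rough illustration of what a gating mechanism does in general, the sketch below implements a generic sigmoid-gated unit in plain Python: one linear branch produces values, a second produces gates in (0, 1), and the output is their element-wise product. All names (`gated_unit`, `w_gate`, `w_value`, and so on) are hypothetical, and this is not the CGTrack implementation.

```python
import math


def sigmoid(x):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def linear(weights, bias, x):
    """Dense layer: one output per weight row, y_i = w_i . x + b_i."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def gated_unit(x, w_gate, b_gate, w_value, b_value):
    """Generic gated unit (an assumption, not the paper's LGCH).

    The gate branch decides, per channel, how much of the value
    branch is passed on: out_i = sigmoid(gate_i) * value_i.
    """
    gate = [sigmoid(g) for g in linear(w_gate, b_gate, x)]
    value = linear(w_value, b_value, x)
    return [g * v for g, v in zip(gate, value)]


# Toy example: two input features, two output channels.
features = [1.0, 2.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]

# With zero gate weights and biases every gate is 0.5,
# so each value channel is halved.
half_open = gated_unit(features, zeros, [0.0, 0.0], identity, [0.0, 0.0])

# A strongly negative gate bias suppresses the channel almost entirely.
closed = gated_unit(features, zeros, [-50.0, -50.0], identity, [0.0, 0.0])
```

In a trained network the gate weights are learned, so the gate can pass channels that carry target-relevant information and suppress the rest.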

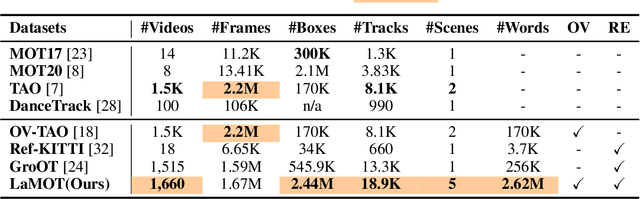

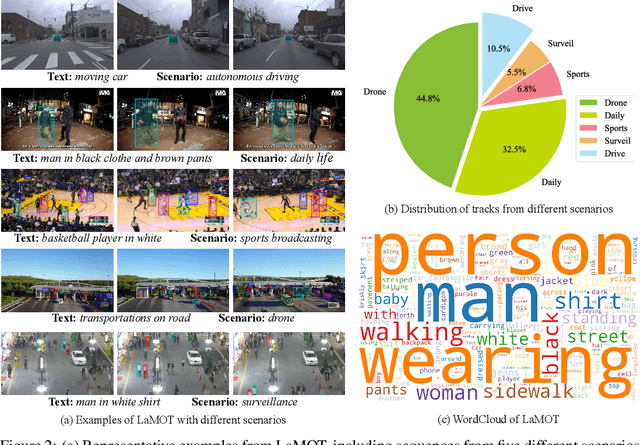

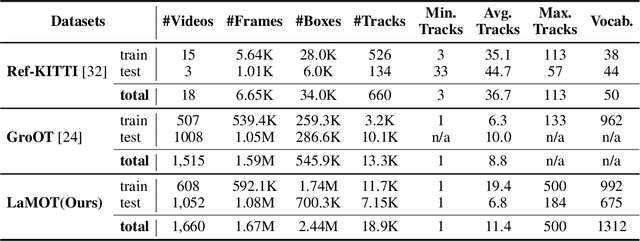

LaMOT: Language-Guided Multi-Object Tracking

Jun 12, 2024

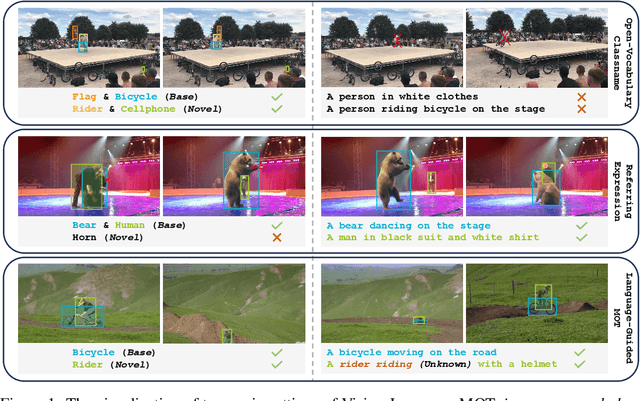

Abstract: Vision-Language MOT is a crucial tracking problem and has drawn increasing attention recently. It aims to track objects based on human language commands, replacing the templates or pre-set information from training sets used in conventional tracking tasks. Despite various efforts, a key challenge lies in the lack of a clear understanding of why language is used for tracking, which hinders further development in this field. In this paper, we address this challenge by introducing Language-Guided MOT, a unified task framework, along with a corresponding large-scale benchmark, termed LaMOT, which encompasses diverse scenarios and language descriptions. Specifically, LaMOT comprises 1,660 sequences from 4 different datasets and aims to unify various Vision-Language MOT tasks while providing a standardized evaluation platform. To ensure high-quality annotations, we manually assign appropriate descriptive texts to each target in every video and conduct careful inspection and correction. To the best of our knowledge, LaMOT is the first benchmark dedicated to Language-Guided MOT. Additionally, we propose a simple yet effective tracker, termed LaMOTer. By establishing a unified task framework, providing challenging benchmarks, and offering insights for future algorithm design and evaluation, we expect to contribute to the advancement of research in Vision-Language MOT. We will release the data at https://github.com/Nathan-Li123/LaMOT.

Benchmarking the Robustness of UAV Tracking Against Common Corruptions

Mar 18, 2024

Abstract: The robustness of unmanned aerial vehicle (UAV) tracking is crucial in many tasks such as surveillance and robotics. Despite its importance, little attention has been paid to the performance of UAV trackers under common corruptions, owing to the lack of a dedicated platform. Addressing this, we propose UAV-C, a large-scale benchmark for assessing the robustness of UAV trackers under common corruptions. Specifically, UAV-C is built upon two popular UAV datasets by introducing 18 common corruptions from 4 representative categories (adversarial, sensor, blur, and composite corruptions) at different levels. In total, UAV-C contains more than 10K sequences. To understand the robustness of existing UAV trackers against corruptions, we extensively evaluate 12 representative algorithms on UAV-C. Our study reveals several key findings: 1) current trackers are vulnerable to corruptions, indicating that more attention is needed to enhance the robustness of UAV trackers; 2) when corruptions occur together, the resulting composite corruptions cause more severe degradation of trackers; and 3) while each tracker has its unique performance profile, some trackers may be more sensitive to specific corruptions. By releasing UAV-C, we hope that it, along with the comprehensive analysis, serves as a valuable resource for advancing the robustness of UAV tracking against corruption. Our UAV-C will be available at https://github.com/Xiaoqiong-Liu/UAV-C.
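To make the idea of corruptions "at different levels" and of composite corruptions concrete, here is a minimal sketch in plain Python. It is not the UAV-C pipeline: the two corruption functions, the severity scale, and the noise and blur parameters are all assumptions chosen for illustration, and a frame is modelled as a small grayscale grid with values in [0, 1].

```python
import random


def gaussian_noise(frame, severity, rng):
    """Sensor-style corruption: add zero-mean Gaussian noise.

    The 0.04 * severity standard deviation is a hypothetical scale.
    Results are clipped back into [0, 1].
    """
    sigma = 0.04 * severity
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
            for row in frame]


def box_blur(frame, severity):
    """Blur-style corruption: horizontal box filter.

    The window radius equals the severity (again an assumption);
    windows are truncated at the frame border.
    """
    blurred = []
    for row in frame:
        new_row = []
        for x in range(len(row)):
            lo = max(0, x - severity)
            hi = min(len(row), x + severity + 1)
            window = row[lo:hi]
            new_row.append(sum(window) / len(window))
        blurred.append(new_row)
    return blurred


def composite(frame, severity, rng):
    """Composite corruption: blur first, then add noise on top."""
    return gaussian_noise(box_blur(frame, severity), severity, rng)


# Build a toy 4x6 frame with a bright vertical stripe and corrupt it
# at increasing severity levels.
frame = [[1.0 if x in (2, 3) else 0.0 for x in range(6)] for _ in range(4)]
rng = random.Random(0)
corrupted = {level: composite(frame, level, rng) for level in (1, 3, 5)}
```

A benchmark built this way would apply each corruption to every frame of a sequence and compare tracker accuracy on the clean and corrupted versions.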

PlanarTrack: A Large-scale Challenging Benchmark for Planar Object Tracking

Mar 14, 2023

Abstract: Planar object tracking is a critical computer vision problem and has drawn increasing interest owing to its key roles in robotics, augmented reality, etc. Despite rapid progress, its further development, especially in the deep learning era, is largely hindered by the lack of large-scale challenging benchmarks. Addressing this, we introduce PlanarTrack, a large-scale challenging planar tracking benchmark. Specifically, PlanarTrack consists of 1,000 videos with more than 490K images. All these videos are collected in complex unconstrained scenarios from the wild, which makes PlanarTrack, compared with existing benchmarks, more challenging yet more realistic for real-world applications. To ensure high-quality annotation, each frame in PlanarTrack is manually labeled using four corners, with multiple rounds of careful inspection and refinement. To the best of our knowledge, PlanarTrack is, to date, the largest and most challenging dataset dedicated to planar object tracking. To analyze the proposed PlanarTrack, we evaluate 10 planar trackers and conduct comprehensive comparisons and in-depth analysis. Our results, not surprisingly, demonstrate that current top-performing planar trackers degrade significantly on the challenging PlanarTrack and that more effort is needed to improve planar tracking in the future. In addition, we derive from PlanarTrack a variant named PlanarTrack$_{\mathrm{BB}}$ for generic object tracking. Our evaluation of 10 excellent generic trackers on PlanarTrack$_{\mathrm{BB}}$ shows that, surprisingly, PlanarTrack$_{\mathrm{BB}}$ is even more challenging than several popular generic tracking benchmarks and that more attention should be paid to handling such planar objects, even though they are rigid. All benchmarks and evaluations will be released at the project webpage.

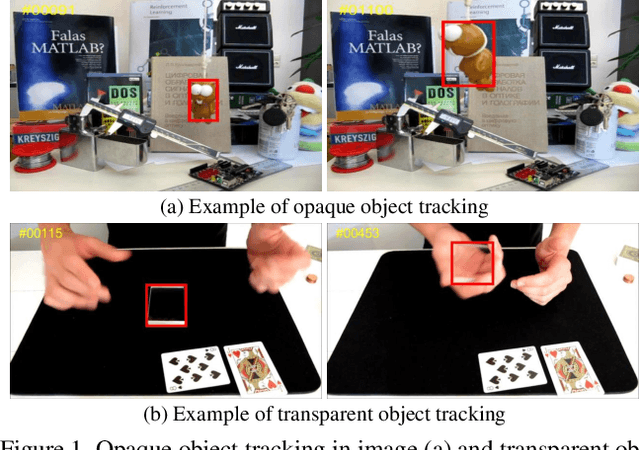

Transparent Object Tracking Benchmark

Nov 21, 2020

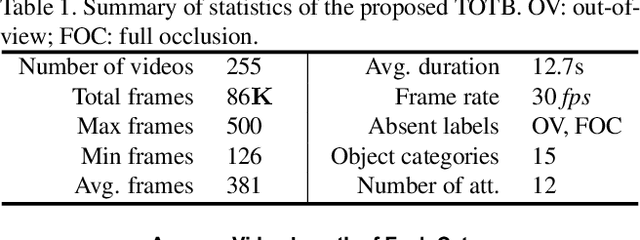

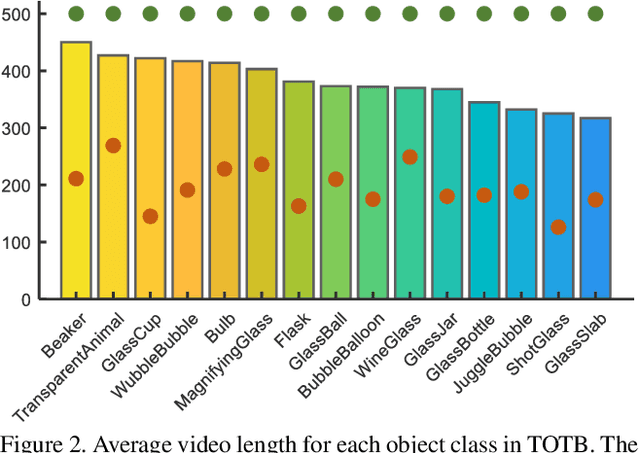

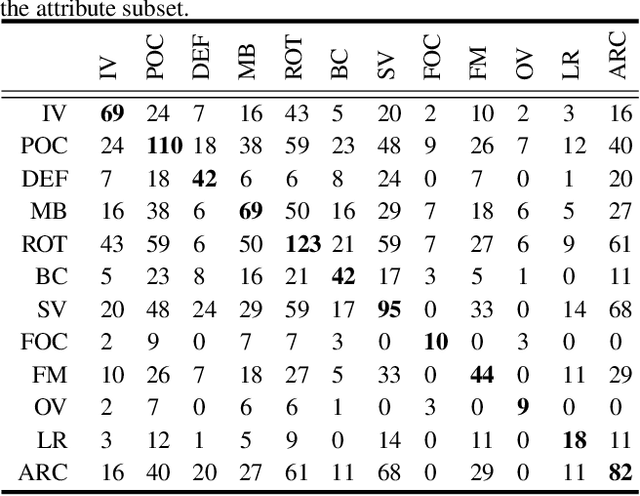

Abstract: Visual tracking has achieved considerable progress in recent years. However, current research in the field mainly focuses on tracking opaque objects, while little attention is paid to transparent object tracking. In this paper, we make the first attempt at exploring this problem by proposing a Transparent Object Tracking Benchmark (TOTB). Specifically, TOTB consists of 225 videos (86K frames) from 15 diverse transparent object categories. Each sequence is manually labeled with axis-aligned bounding boxes. To the best of our knowledge, TOTB is the first benchmark dedicated to transparent object tracking. To understand how existing trackers perform and to provide a comparison for future research on TOTB, we extensively evaluate 25 state-of-the-art tracking algorithms. The evaluation results show that more effort is needed to improve transparent object tracking. Besides, we observe some nontrivial findings from the evaluation that are at odds with some common beliefs in opaque object tracking. For example, we find that deep(er) features do not always bring improvements. Moreover, to encourage future research, we introduce a novel tracker, named TransATOM, which leverages transparency features for tracking and surpasses all 25 evaluated approaches by a large margin. By releasing TOTB, we expect to facilitate future research on and application of transparent object tracking in both academia and industry. The TOTB and evaluation results as well as TransATOM will be made available at https://hengfan2010.github.io/projects/TOTB.
