Yiwei Liu

GUI-ReWalk: Massive Data Generation for GUI Agent via Stochastic Exploration and Intent-Aware Reasoning

Sep 19, 2025

Abstract:Graphical User Interface (GUI) Agents, powered by large language and vision-language models, hold promise for enabling end-to-end automation in digital environments. However, their progress is fundamentally constrained by the scarcity of scalable, high-quality trajectory data. Existing data collection strategies either rely on costly and inconsistent manual annotations or on synthetic generation methods that trade off between diversity and meaningful task coverage. To bridge this gap, we present GUI-ReWalk: a reasoning-enhanced, multi-stage framework for synthesizing realistic and diverse GUI trajectories. GUI-ReWalk begins with a stochastic exploration phase that emulates human trial-and-error behaviors, and progressively transitions into a reasoning-guided phase where inferred goals drive coherent and purposeful interactions. Moreover, it supports multi-stride task generation, enabling the construction of long-horizon workflows across multiple applications. By combining randomness for diversity with goal-aware reasoning for structure, GUI-ReWalk produces data that better reflects the intent-aware, adaptive nature of human-computer interaction. We further train Qwen2.5-VL-7B on the GUI-ReWalk dataset and evaluate it across multiple benchmarks, including Screenspot-Pro, OSWorld-G, UI-Vision, AndroidControl, and GUI-Odyssey. Results demonstrate that GUI-ReWalk enables superior coverage of diverse interaction flows, higher trajectory entropy, and more realistic user intent. These findings establish GUI-ReWalk as a scalable and data-efficient framework for advancing GUI agent research and enabling robust real-world automation.

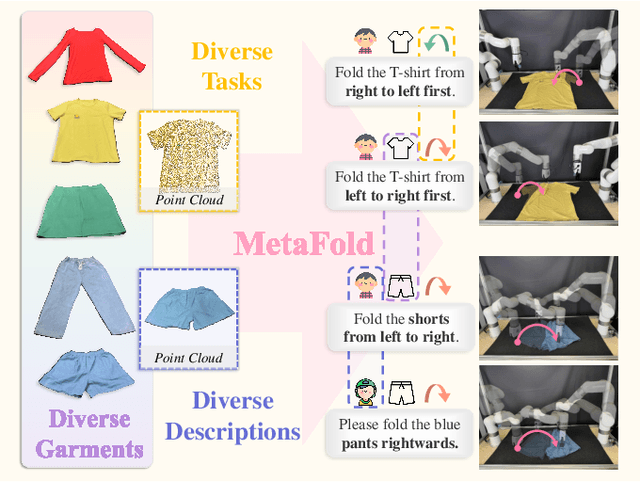

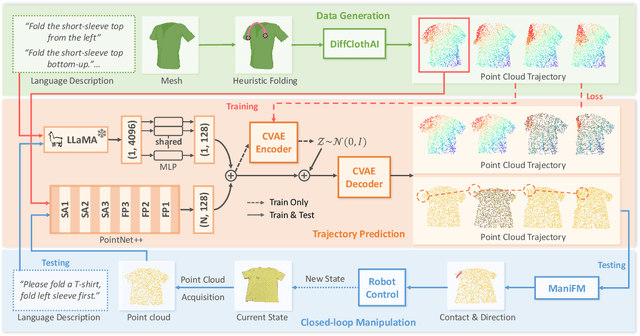

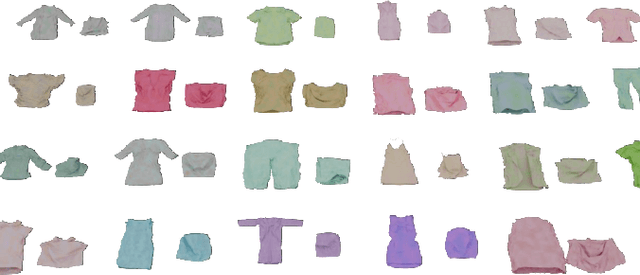

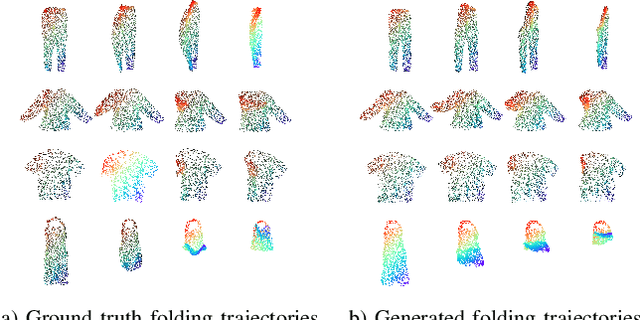

MetaFold: Language-Guided Multi-Category Garment Folding Framework via Trajectory Generation and Foundation Model

Mar 11, 2025

Abstract:Garment folding is a common yet challenging task in robotic manipulation. The deformability of garments leads to a vast state space and complex dynamics, which complicates precise and fine-grained manipulation. Previous approaches often rely on predefined key points or demonstrations, limiting their generalization across diverse garment categories. This paper presents a framework, MetaFold, that disentangles task planning from action prediction, learning each independently to enhance model generalization. It employs language-guided point cloud trajectory generation for task planning and a low-level foundation model for action prediction. This structure facilitates multi-category learning, enabling the model to adapt flexibly to various user instructions and folding tasks. Experimental results demonstrate the superiority of our proposed framework. Supplementary materials are available on our website: https://meta-fold.github.io/.

Memory-based Ensemble Learning in CMR Semantic Segmentation

Feb 13, 2025

Abstract:Existing models typically segment either the entire 3D frame or 2D slices independently to derive clinical functional metrics from ventricular segmentation in cardiac cine sequences. While performing well overall, they struggle at the end slices. To address this, we leverage spatial continuity to extract global uncertainty from segmentation variance and use it as memory in our ensemble learning method, Streaming, for classifier weighting, balancing overall and end-slice performance. Additionally, we introduce the End Coefficient (EC) to quantify end-slice accuracy. Experiments on ACDC and M&Ms datasets show that our framework achieves near-state-of-the-art Dice Similarity Coefficient (DSC) and outperforms all models on end-slice performance, improving patient-specific segmentation accuracy.
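For reference, the Dice Similarity Coefficient mentioned above is the standard overlap measure DSC = 2|A ∩ B| / (|A| + |B|) between a predicted mask A and a ground-truth mask B. The sketch below computes it on flat binary masks. The function name `dice_coefficient` and the convention of returning 1.0 when both masks are empty are assumptions of this illustration, not details taken from the paper.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice Similarity Coefficient between two flat binary masks (0/1 values)."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of elements")
    # Count positions where both masks are foreground.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    # Total foreground size of both masks.
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total
```

A score of 1.0 means the masks coincide exactly, and 0.0 means they share no foreground element.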

Ethereum Fraud Detection via Joint Transaction Language Model and Graph Representation Learning

Sep 09, 2024

Abstract:Ethereum faces growing fraud threats. Current fraud detection methods, whether employing graph neural networks or sequence models, fail to consider the semantic information and similarity patterns within transactions. Moreover, these approaches do not leverage the potential synergistic benefits of combining both types of models. To address these challenges, we propose TLMG4Eth that combines a transaction language model with graph-based methods to capture semantic, similarity, and structural features of transaction data in Ethereum. We first propose a transaction language model that converts numerical transaction data into meaningful transaction sentences, enabling the model to learn explicit transaction semantics. Then, we propose a transaction attribute similarity graph to learn transaction similarity information, enabling us to capture intuitive insights into transaction anomalies. Additionally, we construct an account interaction graph to capture the structural information of the account transaction network. We employ a deep multi-head attention network to fuse transaction semantic and similarity embeddings, and ultimately propose a joint training approach for the multi-head attention network and the account interaction graph to obtain the synergistic benefits of both.
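To make the idea of a "transaction sentence" concrete, the sketch below renders one numerical transaction record as text. The `Transaction` type, its field names, and the template wording are all hypothetical; the abstract does not specify the sentence format that TLMG4Eth actually uses.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    # Hypothetical fields; the attributes used in the paper are not given in the abstract.
    sender: str
    receiver: str
    value_eth: float
    gas_used: int
    timestamp: int  # Unix seconds


def to_sentence(tx: Transaction) -> str:
    """Render a transaction as a plain-text sentence that a language model can tokenize."""
    return (
        f"account {tx.sender} sent {tx.value_eth:.4f} ether to {tx.receiver} "
        f"using {tx.gas_used} gas at time {tx.timestamp}"
    )
```

Under this assumed template, each numeric attribute sits next to a word that names it, which is one simple way to expose field semantics to a text model.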

The Role of Transformer Models in Advancing Blockchain Technology: A Systematic Review

Sep 02, 2024

Abstract:As blockchain technology rapidly evolves, the demand for enhanced efficiency, security, and scalability grows. Transformer models, as powerful deep learning architectures, have shown unprecedented potential in addressing various blockchain challenges. However, a systematic review of Transformer applications in blockchain is lacking. This paper aims to fill this research gap by surveying over 200 relevant papers, comprehensively reviewing practical cases and research progress of Transformers in blockchain applications. Our survey covers key areas including anomaly detection, smart contract security analysis, cryptocurrency prediction and trend analysis, and code summary generation. To clearly articulate the advancements of Transformers across various blockchain domains, we adopt a domain-oriented classification system, organizing and introducing representative methods based on major challenges in current blockchain research. For each research domain, we first introduce its background and objectives, then review previous representative methods and analyze their limitations, and finally introduce the advancements brought by Transformer models. Furthermore, we explore the challenges of utilizing Transformers, such as data privacy, model complexity, and real-time processing requirements. Finally, this article proposes future research directions, emphasizing the importance of exploring the Transformer architecture in depth to adapt it to specific blockchain applications, and discusses its potential role in promoting the development of blockchain technology. This review aims to provide new perspectives and a research foundation for the integrated development of blockchain technology and machine learning, supporting further innovation and application expansion of blockchain technology.

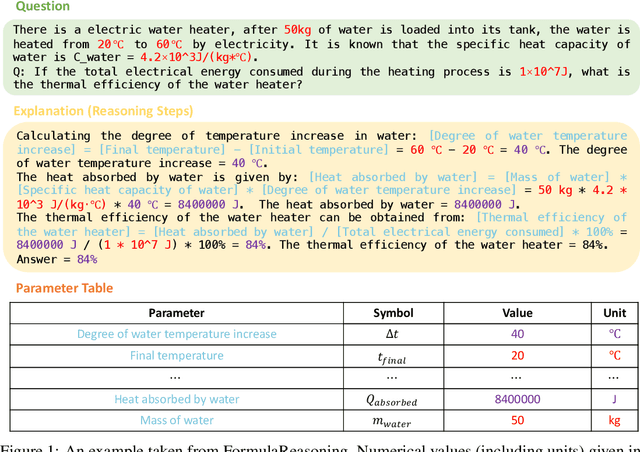

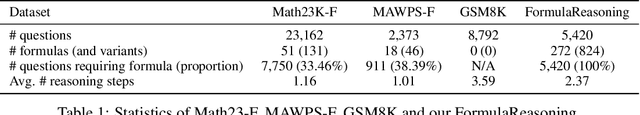

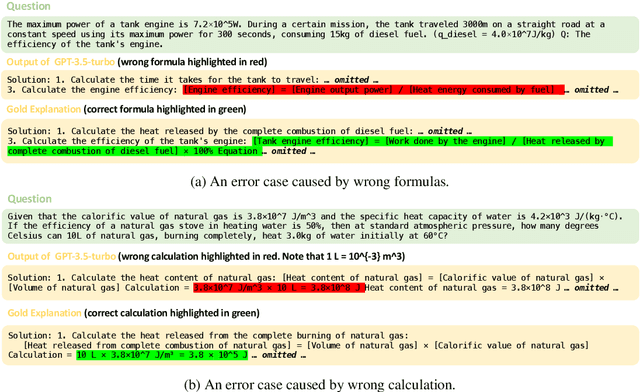

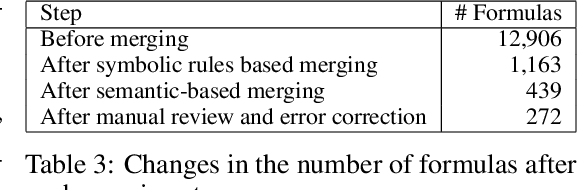

FormulaQA: A Question Answering Dataset for Formula-Based Numerical Reasoning

Feb 21, 2024

Abstract:The application of formulas is a fundamental ability of humans when addressing numerical reasoning problems. However, existing numerical reasoning datasets seldom explicitly indicate the formulas employed during the reasoning steps. To bridge this gap, we propose a question answering dataset for formula-based numerical reasoning called FormulaQA, built from junior high school physics examinations. We further evaluate LLMs ranging in size from 7B to over 100B parameters using zero-shot and few-shot chain-of-thought methods, and we explore retrieval-augmented LLMs that are given an external formula database. We also fine-tune smaller models with no more than 2B parameters. Our empirical findings underscore the significant potential for improvement in existing models when applied to our complex, formula-driven FormulaQA.

MSDC: Exploiting Multi-State Power Consumption in Non-intrusive Load Monitoring based on A Dual-CNN Model

Feb 11, 2023

Abstract:Non-intrusive load monitoring (NILM) aims to decompose an aggregated electrical usage signal into appliance-specific power consumption, and it is a classical example of a blind source separation task. Leveraging recent progress in deep learning techniques, we design a new neural NILM model, Multi-State Dual CNN (MSDC). Unlike previous models, MSDC explicitly extracts information about the appliance's multiple states and state transitions, which in turn regulates the prediction of signals for appliances. More specifically, we employ a dual-CNN architecture: one CNN outputs state distributions and the other predicts the power of each state. We also introduce a new technique that uses conditional random fields (CRF) to capture state transitions. Experiments on two real-world datasets, REDD and UK-DALE, demonstrate that our model significantly outperforms state-of-the-art models while generalizing well, achieving a 6%-10% MAE gain and a 33%-51% SAE gain on unseen appliances.
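One plausible reading of the dual-CNN design is that the state distribution weights the per-state power estimates. The sketch below assumes the two outputs are combined as an expectation, the sum over states of p(state) times power(state). The abstract does not state the combination rule, so this rule, the function names `softmax` and `expected_power`, and the example appliance are assumptions for illustration only.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Turn raw state scores into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_power(state_logits: List[float], state_power: List[float]) -> float:
    """Assumed combination: probability-weighted sum of per-state power (watts)."""
    if len(state_logits) != len(state_power):
        raise ValueError("need one power estimate per state")
    probs = softmax(state_logits)
    return sum(p * w for p, w in zip(probs, state_power))


# Hypothetical three-state appliance: off, standby, on.
print(expected_power([0.0, 0.0, 0.0], [0.0, 5.0, 1000.0]))
```

With equal scores for all three states, the estimate is simply the mean of the per-state powers; sharper state scores pull it toward the power of the most likely state.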

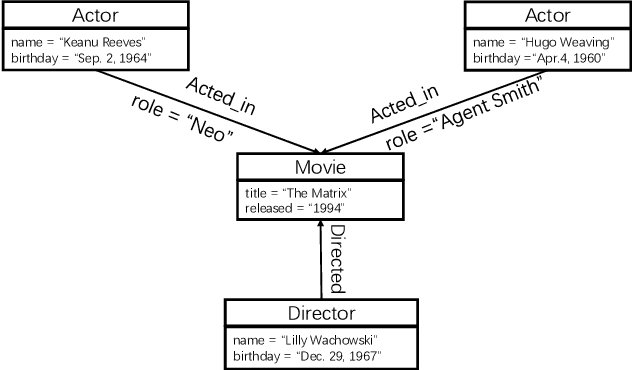

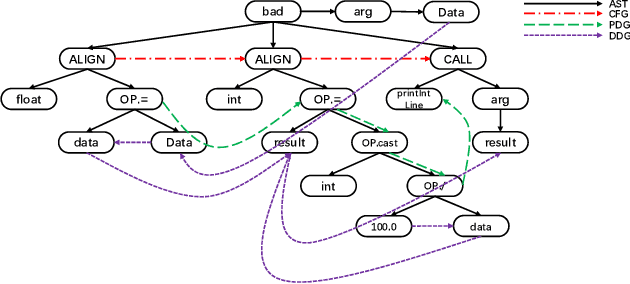

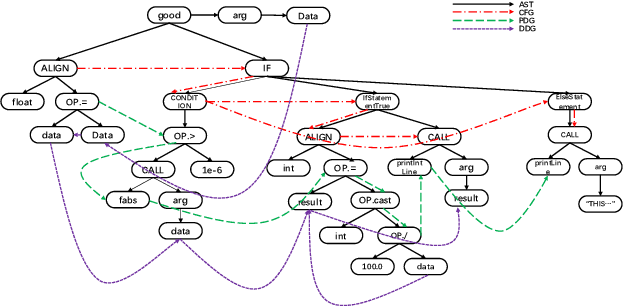

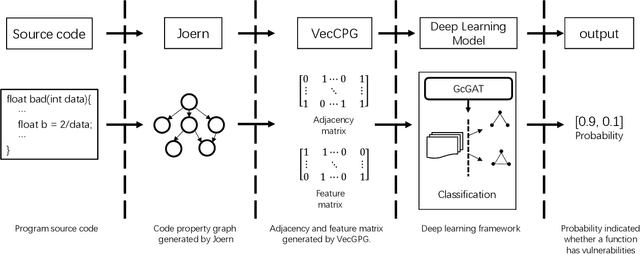

GraphEye: A Novel Solution for Detecting Vulnerable Functions Based on Graph Attention Network

Feb 05, 2022

Abstract:With the continuous expansion of the Industrial Internet, cyber incidents caused by software vulnerabilities have been increasing in recent years. However, software vulnerability detection still relies heavily on code review by experts, and how to detect software vulnerabilities automatically remains an open problem. In this paper, we propose a novel solution named GraphEye to identify whether a function in C/C++ code has vulnerabilities, which can greatly alleviate the burden on code auditors. GraphEye originates from the observation that the code property graph of a non-vulnerable function naturally differs from the code property graph of a vulnerable function with the same functionality. Hence, detecting vulnerable functions reduces to a graph classification problem. GraphEye comprises VecCPG and GcGAT. VecCPG is a vectorization of the code property graph, proposed to characterize the key syntactic and semantic features of the corresponding source code. GcGAT is a deep learning model based on the graph attention network, proposed to solve the graph classification problem on VecCPG. Finally, GraphEye is evaluated on the SARD Stack-based Buffer Overflow, Divide-Zero, Null Pointer Dereference, Buffer Error, and Resource Error datasets; the corresponding F1 scores are 95.6%, 95.6%, 96.1%, 92.6%, and 96.1%, respectively, which validates the effectiveness of the proposed solution.

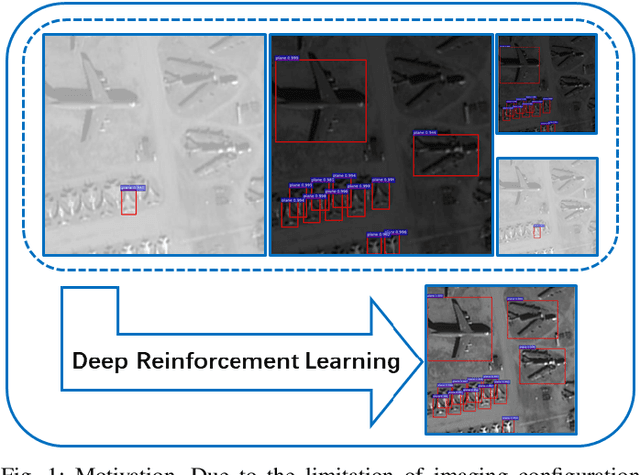

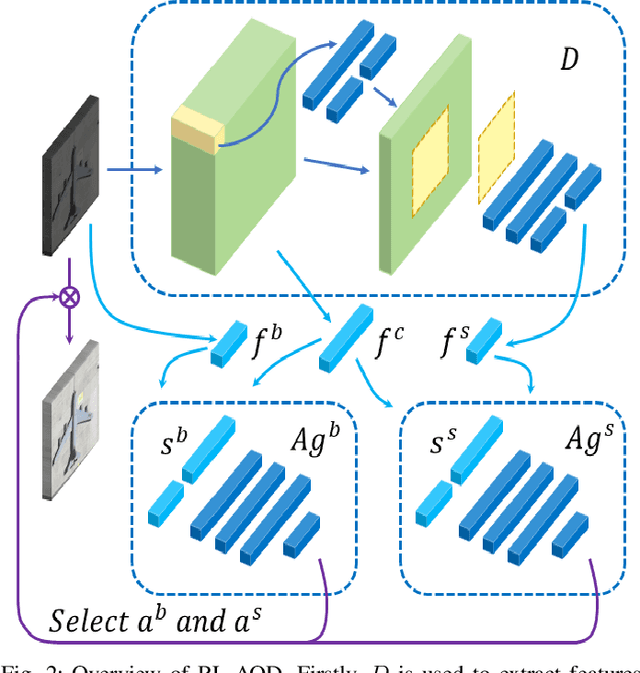

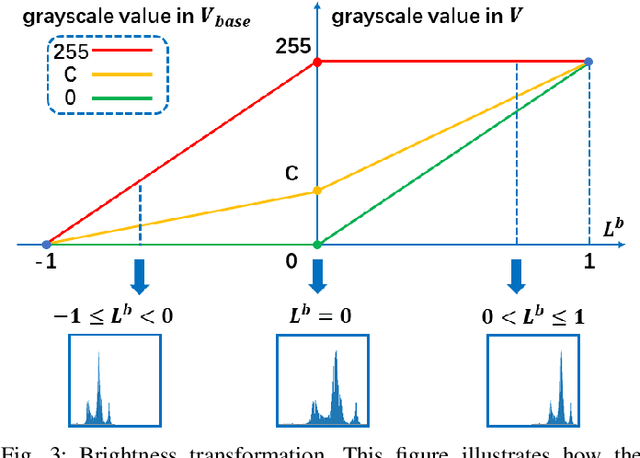

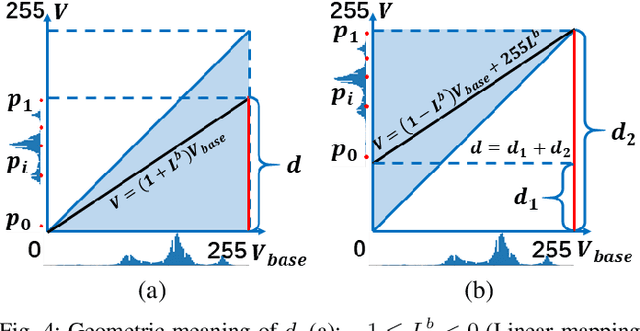

Adaptive Remote Sensing Image Attribute Learning for Active Object Detection

Jan 16, 2021

Abstract:In recent years, deep learning methods have brought remarkable progress to the field of object detection. However, in remote sensing image processing, existing methods neglect the relationship between imaging configuration and detection performance, and do not take into account the importance of detection performance feedback for improving image quality. As a result, detection performance is limited by the passive nature of the conventional object detection framework. To address these limitations, this paper takes adaptive brightness adjustment and scale adjustment as examples and proposes an active object detection method based on deep reinforcement learning. The goal of adaptive image attribute learning is to maximize detection performance. With the help of active object detection and image attribute adjustment strategies, low-quality images can be converted into high-quality images, and overall performance is improved without retraining the detector.
