Li Zhou

Theory Lab, Central Research Institute, 2012 Labs, Huawei Technology Co. Ltd

EmoShift: Lightweight Activation Steering for Enhanced Emotion-Aware Speech Synthesis

Jan 30, 2026

Abstract: Achieving precise and controllable emotional expression is crucial for producing natural and context-appropriate speech in text-to-speech (TTS) synthesis. However, many emotion-aware TTS systems, including large language model (LLM)-based designs, rely on scaling fixed emotion embeddings or external guidance, limiting their ability to model emotion-specific latent characteristics. To address this gap, we present EmoShift, a lightweight activation-steering framework incorporating an EmoSteer layer, which learns a steering vector for each target emotion in the output embedding space to capture its latent offset and maintain stable, appropriate expression across utterances and categories. With only 10M trainable parameters, less than 1/30 of full fine-tuning, EmoShift outperforms zero-shot and fully fine-tuned baselines in objective and subjective evaluations, enhancing emotional expressiveness while preserving naturalness and speaker similarity. Further analysis confirms the proposed EmoSteer layer's effectiveness and reveals its potential for controllable emotional intensity in speech synthesis.
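The idea of a per-emotion steering vector can be illustrated with a minimal sketch. The abstract only states that a vector is learned for each target emotion in the output embedding space; the additive form, the `alpha` intensity scale, and the names `steer` and `steering_vectors` below are assumptions made for illustration, not the paper's implementation.

```python
from typing import Dict, List

Vector = List[float]


def steer(hidden: Vector, steering_vectors: Dict[str, Vector],
          emotion: str, alpha: float = 1.0) -> Vector:
    """Shift one embedding by a scaled, emotion-specific offset.

    Assumption: steering is a plain vector addition, and `alpha`
    is a hypothetical knob for emotional intensity.
    """
    offset = steering_vectors[emotion]
    if len(offset) != len(hidden):
        raise ValueError("steering vector and embedding must have the same size")
    return [h + alpha * o for h, o in zip(hidden, offset)]


# Toy vectors standing in for learned parameters (values are made up).
steering_vectors = {
    "happy": [0.5, -0.25, 0.0],
    "sad": [-0.5, 0.25, 1.0],
}
hidden = [1.0, 2.0, 3.0]

print(steer(hidden, steering_vectors, "happy"))       # full-strength shift
print(steer(hidden, steering_vectors, "happy", 0.5))  # half-strength shift
```

In a real system the vectors would be trained while the backbone stays frozen, which is one plausible reading of why the trainable parameter count stays small.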

Detecting Performance Degradation under Data Shift in Pathology Vision-Language Model

Jan 02, 2026

Abstract: Vision-Language Models have demonstrated strong potential in medical image analysis and disease diagnosis. However, after deployment, their performance may deteriorate when the input data distribution shifts from that observed during development. Detecting such performance degradation is essential for clinical reliability, yet remains challenging for large pre-trained VLMs operating without labeled data. In this study, we investigate performance degradation detection under data shift in a state-of-the-art pathology VLM. We examine both input-level data shift and output-level prediction behavior to understand their respective roles in monitoring model reliability. To facilitate systematic analysis of input data shift, we develop DomainSAT, a lightweight toolbox with a graphical interface that integrates representative shift detection algorithms and enables intuitive exploration of data shift. Our analysis shows that while input data shift detection is effective at identifying distributional changes and providing early diagnostic signals, it does not always correspond to actual performance degradation. Motivated by this observation, we further study output-based monitoring and introduce a label-free, confidence-based degradation indicator that directly captures changes in model prediction confidence. We find that this indicator exhibits a close relationship with performance degradation and serves as an effective complement to input shift detection. Experiments on a large-scale pathology dataset for tumor classification demonstrate that combining input data shift detection and output confidence-based indicators enables more reliable detection and interpretation of performance degradation in VLMs under data shift. These findings provide a practical and complementary framework for monitoring the reliability of foundation models in digital pathology.
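A label-free, confidence-based indicator could look roughly like the sketch below. The abstract does not define the indicator, so the specific choice here (the drop in mean maximum softmax probability between a reference batch and a deployed batch) and the function names are assumptions for illustration only.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one row of class logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_confidence(batch_logits: Sequence[Sequence[float]]) -> float:
    """Average of the top-class probability across a batch; needs no labels."""
    return sum(max(softmax(row)) for row in batch_logits) / len(batch_logits)


def confidence_drop(reference_logits: Sequence[Sequence[float]],
                    deployed_logits: Sequence[Sequence[float]]) -> float:
    """Positive values mean the model is less confident on deployed data."""
    return mean_confidence(reference_logits) - mean_confidence(deployed_logits)


# Toy logits: confident predictions in development, hesitant ones after a shift.
reference = [[4.0, 0.0], [0.0, 4.0]]
deployed = [[0.5, 0.0], [0.0, 0.5]]
print(confidence_drop(reference, deployed))
```

A monitor built this way would flag a batch when the drop exceeds a threshold tuned on held-out data; that threshold is not something the abstract specifies.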

Visionary: The World Model Carrier Built on WebGPU-Powered Gaussian Splatting Platform

Dec 09, 2025

Abstract: Neural rendering, particularly 3D Gaussian Splatting (3DGS), has evolved rapidly and become a key component for building world models. However, existing viewer solutions remain fragmented, heavy, or constrained by legacy pipelines, resulting in high deployment friction and limited support for dynamic content and generative models. In this work, we present Visionary, an open, web-native platform for real-time rendering of various Gaussian Splatting variants and meshes. Built on an efficient WebGPU renderer with per-frame ONNX inference, Visionary enables dynamic neural processing while maintaining a lightweight, "click-to-run" browser experience. It introduces a standardized Gaussian Generator contract, which not only supports standard 3DGS rendering but also allows plug-and-play algorithms to generate or update Gaussians each frame. Such inference also enables us to apply feedforward generative post-processing. The platform further offers a plug-in three.js library with a concise TypeScript API for seamless integration into existing web applications. Experiments show that, under identical 3DGS assets, Visionary achieves superior rendering efficiency compared to current Web viewers due to GPU-based primitive sorting. It already supports multiple variants, including MLP-based 3DGS, 4DGS, neural avatars, and style transformation or enhancement networks. By unifying inference and rendering directly in the browser, Visionary significantly lowers the barrier to reproduction, comparison, and deployment of 3DGS-family methods, serving as a unified World Model Carrier for both reconstructive and generative paradigms.

PoCGM: Poisson-Conditioned Generative Model for Sparse-View CT Reconstruction

Nov 17, 2025

Abstract: In computed tomography (CT), reducing the number of projection views is an effective strategy to lower radiation exposure and/or improve temporal resolution. However, this often results in severe aliasing artifacts and loss of structural details in reconstructed images, posing significant challenges for clinical applications. Inspired by the success of the Poisson Flow Generative Model (PFGM++) in natural image generation, we propose the Poisson-Conditioned Generative Model (PoCGM) to address the challenges of sparse-view CT reconstruction. Since PFGM++ was originally designed for unconditional generation, it lacks direct applicability to medical imaging tasks that require integrating conditional inputs. To overcome this limitation, PoCGM reformulates PFGM++ into a conditional generative framework by incorporating sparse-view data as guidance during both training and sampling phases. By modeling the posterior distribution of full-view reconstructions conditioned on sparse observations, PoCGM effectively suppresses artifacts while preserving fine structural details. Qualitative and quantitative evaluations demonstrate that PoCGM outperforms the baselines, achieving improved artifact suppression, enhanced detail preservation, and reliable performance in dose-sensitive and time-critical imaging scenarios.

EchoMind: An Interrelated Multi-level Benchmark for Evaluating Empathetic Speech Language Models

Oct 26, 2025

Abstract: Speech Language Models (SLMs) have made significant progress in spoken language understanding. Yet it remains unclear whether they can fully perceive non-lexical vocal cues alongside spoken words, and respond with empathy that aligns with both emotional and contextual factors. Existing benchmarks typically evaluate linguistic, acoustic, reasoning, or dialogue abilities in isolation, overlooking the integration of these skills that is crucial for human-like, emotionally intelligent conversation. We present EchoMind, the first interrelated, multi-level benchmark that simulates the cognitive process of empathetic dialogue through sequential, context-linked tasks: spoken-content understanding, vocal-cue perception, integrated reasoning, and response generation. All tasks share identical and semantically neutral scripts that are free of explicit emotional or contextual cues, and controlled variations in vocal style are used to test the effect of delivery independent of the transcript. EchoMind is grounded in an empathy-oriented framework spanning 3 coarse and 12 fine-grained dimensions, encompassing 39 vocal attributes, and evaluated using both objective and subjective metrics. Testing 12 advanced SLMs reveals that even state-of-the-art models struggle with highly expressive vocal cues, limiting empathetic response quality. Analyses of prompt strength, speech source, and ideal vocal cue recognition reveal persistent weaknesses in instruction-following, resilience to natural speech variability, and effective use of vocal cues for empathy. These results underscore the need for SLMs that integrate linguistic content with diverse vocal cues to achieve truly empathetic conversational ability.

Step-Audio 2 Technical Report

Jul 24, 2025

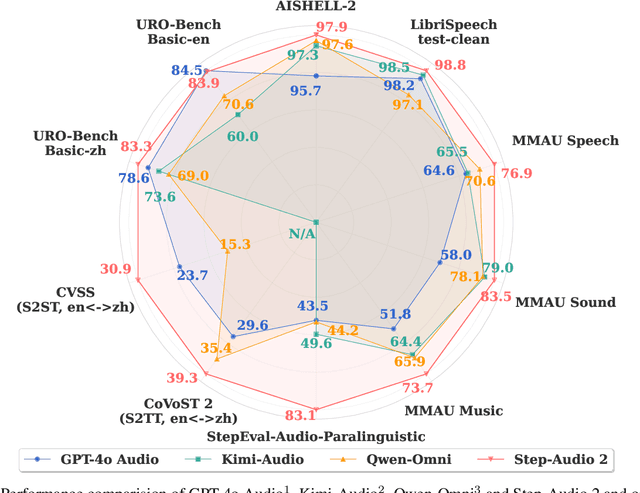

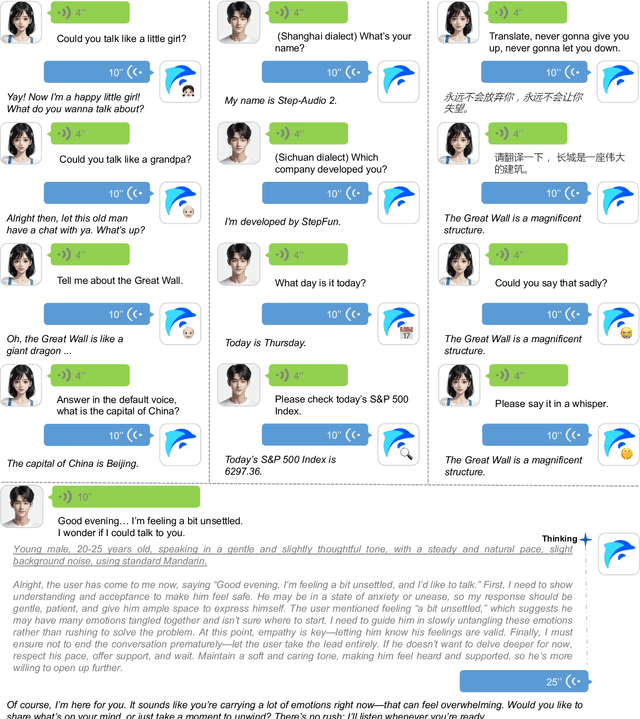

Abstract: This paper presents Step-Audio 2, an end-to-end multi-modal large language model designed for industry-strength audio understanding and speech conversation. By integrating a latent audio encoder and reasoning-centric reinforcement learning (RL), Step-Audio 2 achieves promising performance in automatic speech recognition (ASR) and audio understanding. To facilitate genuine end-to-end speech conversation, Step-Audio 2 incorporates the generation of discrete audio tokens into language modeling, significantly enhancing its responsiveness to paralinguistic information such as speaking styles and emotions. To effectively leverage the rich textual and acoustic knowledge in real-world data, Step-Audio 2 integrates retrieval-augmented generation (RAG) and is able to call external tools such as web search to mitigate hallucination and audio search to switch timbres. Trained on millions of hours of speech and audio data, Step-Audio 2 delivers intelligence and expressiveness across diverse conversational scenarios. Evaluation results demonstrate that Step-Audio 2 achieves state-of-the-art performance on various audio understanding and conversational benchmarks compared to other open-source and commercial solutions. Please visit https://github.com/stepfun-ai/Step-Audio2 for more information.

Solving the Constrained Random Disambiguation Path Problem via Lagrangian Relaxation and Graph Reduction

Jul 08, 2025

Abstract: We study a resource-constrained variant of the Random Disambiguation Path (RDP) problem, a generalization of the Stochastic Obstacle Scene (SOS) problem, in which a navigating agent must reach a target in a spatial environment populated with uncertain obstacles. Each ambiguous obstacle may be disambiguated at a (possibly) heterogeneous resource cost, subject to a global disambiguation budget. We formulate this constrained planning problem as a Weight-Constrained Shortest Path Problem (WCSPP) with risk-adjusted edge costs that incorporate probabilistic blockage and traversal penalties. To solve it, we propose a novel algorithmic framework, COLOGR, that combines Lagrangian relaxation with a two-phase vertex elimination (TPVE) procedure. The method prunes infeasible and suboptimal paths while provably preserving the optimal solution, and leverages dual bounds to guide efficient search. We establish correctness, feasibility guarantees, and surrogate optimality under mild assumptions. Our analysis also demonstrates that COLOGR frequently achieves zero duality gap and offers improved computational complexity over prior constrained path-planning methods. Extensive simulation experiments validate the algorithm's robustness across varying obstacle densities, sensor accuracies, and risk models, consistently outperforming greedy baselines and approaching offline-optimal benchmarks. The proposed framework is broadly applicable to stochastic network design, mobility planning, and constrained decision-making under uncertainty.
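The Lagrangian relaxation of a weight-constrained shortest path problem can be sketched generically as follows. This is a textbook-style illustration, not COLOGR: it omits the TPVE pruning, uses plain bisection on the multiplier, and may return a feasible but suboptimal path when a duality gap exists. The graph layout, function names, and the `lam_max` bound are all assumptions.

```python
import heapq
from typing import Dict, List, Optional, Tuple

# node -> list of (neighbor, cost, weight); weight is the constrained resource
Graph = Dict[str, List[Tuple[str, float, float]]]
Result = Tuple[List[str], float, float]  # (path, total cost, total weight)


def penalized_shortest_path(graph: Graph, source: str, target: str,
                            lam: float) -> Optional[Result]:
    """Dijkstra on the relaxed edge length cost + lam * weight."""
    best = {source: 0.0}
    parent: Dict[str, Tuple[str, float, float]] = {}
    heap = [(0.0, source)]
    while heap:
        dist, node = heapq.heappop(heap)
        if dist > best.get(node, float("inf")):
            continue  # stale heap entry
        if node == target:
            break
        for nxt, cost, weight in graph.get(node, []):
            cand = dist + cost + lam * weight
            if cand < best.get(nxt, float("inf")):
                best[nxt] = cand
                parent[nxt] = (node, cost, weight)
                heapq.heappush(heap, (cand, nxt))
    if target not in best:
        return None
    # Walk back from the target, accumulating the true cost and weight.
    path, total_cost, total_weight = [target], 0.0, 0.0
    while path[-1] != source:
        prev, cost, weight = parent[path[-1]]
        total_cost += cost
        total_weight += weight
        path.append(prev)
    path.reverse()
    return path, total_cost, total_weight


def lagrangian_path(graph: Graph, source: str, target: str, budget: float,
                    lam_max: float = 100.0, iters: int = 40) -> Optional[Result]:
    """Bisect on the multiplier; keep the cheapest budget-feasible path seen."""
    unconstrained = penalized_shortest_path(graph, source, target, 0.0)
    if unconstrained is None or unconstrained[2] <= budget:
        return unconstrained  # unreachable, or already feasible and optimal
    best: Optional[Result] = None
    lo, hi = 0.0, lam_max
    for _ in range(iters):
        lam = (lo + hi) / 2.0
        found = penalized_shortest_path(graph, source, target, lam)
        if found is not None and found[2] <= budget:
            if best is None or found[1] < best[1]:
                best = found
            hi = lam  # feasible: try a smaller penalty
        else:
            lo = lam  # infeasible: penalize weight more
    return best


graph: Graph = {
    "s": [("a", 1.0, 5.0), ("b", 3.0, 1.0), ("t", 10.0, 0.0)],
    "a": [("t", 1.0, 5.0)],
    "b": [("t", 3.0, 1.0)],
}
print(lagrangian_path(graph, "s", "t", budget=4.0))
```

With a budget of 4, the cheapest route through `a` uses 10 units of weight and is rejected, so the search settles on the route through `b`.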

ResPF: Residual Poisson Flow for Efficient and Physically Consistent Sparse-View CT Reconstruction

Jun 06, 2025

Abstract: Sparse-view computed tomography (CT) is a practical solution to reduce radiation dose, but the resulting ill-posed inverse problem poses significant challenges for accurate image reconstruction. Although deep learning and diffusion-based methods have shown promising results, they often lack physical interpretability or suffer from high computational costs due to iterative sampling starting from random noise. Recent advances in generative modeling, particularly Poisson Flow Generative Models (PFGM), enable high-fidelity image synthesis by modeling the full data distribution. In this work, we propose Residual Poisson Flow (ResPF) Generative Models for efficient and accurate sparse-view CT reconstruction. Based on PFGM++, ResPF integrates conditional guidance from sparse measurements and employs a hijacking strategy to significantly reduce sampling cost by skipping redundant initial steps. However, skipping early stages can degrade reconstruction quality and introduce unrealistic structures. To address this, we embed a data-consistency step into each iteration, ensuring fidelity to sparse-view measurements. Yet, PFGM sampling relies on a fixed ordinary differential equation (ODE) trajectory induced by electrostatic fields, which can be disrupted by step-wise data consistency, resulting in unstable or degraded reconstructions. Inspired by ResNet, we introduce a residual fusion module to linearly combine generative outputs with data-consistent reconstructions, effectively preserving trajectory continuity. To the best of our knowledge, this is the first application of Poisson flow models to sparse-view CT. Extensive experiments on synthetic and clinical datasets demonstrate that ResPF achieves superior reconstruction quality, faster inference, and stronger robustness compared to state-of-the-art iterative, learning-based, and diffusion models.

From Word to World: Evaluate and Mitigate Culture Bias via Word Association Test

May 24, 2025

Abstract: The human-centered word association test (WAT) serves as a cognitive proxy, revealing sociocultural variations through lexical-semantic patterns. We extend this test into an LLM-adaptive, free-relation task to assess the alignment of large language models (LLMs) with cross-cultural cognition. To mitigate cultural preference, we propose CultureSteer, an innovative approach that integrates a culture-aware steering mechanism to guide semantic representations toward culturally specific spaces. Experiments show that current LLMs exhibit significant bias toward Western (notably American) cultural schemas at the word association level. In contrast, our model substantially improves cross-cultural alignment, surpassing prompt-based methods in capturing diverse semantic associations. Further validation on culture-sensitive downstream tasks confirms its efficacy in fostering cognitive alignment across cultures. This work contributes a novel methodological paradigm for enhancing cultural awareness in LLMs, advancing the development of more inclusive language technologies.

Selective Structured State Space for Multispectral-fused Small Target Detection

May 21, 2025

Abstract: Target detection in high-resolution remote sensing imagery faces challenges due to the low recognition accuracy of small targets and high computational costs. The computational complexity of the Transformer architecture increases quadratically with image resolution, while Convolutional Neural Network (CNN) architectures are forced to stack deeper convolutional layers to expand their receptive fields, leading to an explosive growth in computational demands. To address these computational constraints, we leverage Mamba's linear complexity for efficiency. However, Mamba's performance declines for small targets, primarily because small targets occupy a limited area in the image and have limited semantic information. Accurate identification of these small targets necessitates not only Mamba's global attention capabilities but also the precise capture of fine local details. To this end, we enhance Mamba by developing the Enhanced Small Target Detection (ESTD) module and the Convolutional Attention Residual Gate (CARG) module. The ESTD module bolsters local attention to capture fine-grained details, while the CARG module, built upon Mamba, emphasizes spatial and channel-wise information, collectively improving the model's ability to capture distinctive representations of small targets. Additionally, to highlight the semantic representation of small targets, we design a Mask Enhanced Pixel-level Fusion (MEPF) module for multispectral fusion, which enhances target features by effectively fusing visible and infrared multimodal information.
