Hengyong Yu

University of Massachusetts Lowell

PoCGM: Poisson-Conditioned Generative Model for Sparse-View CT Reconstruction

Nov 17, 2025

Abstract:In computed tomography (CT), reducing the number of projection views is an effective strategy to lower radiation exposure and/or improve temporal resolution. However, this often results in severe aliasing artifacts and loss of structural details in reconstructed images, posing significant challenges for clinical applications. Inspired by the success of the Poisson Flow Generative Model (PFGM++) in natural image generation, we propose the Poisson-Conditioned Generative Model (PoCGM) to address the challenges of sparse-view CT reconstruction. Since PFGM++ was originally designed for unconditional generation, it lacks direct applicability to medical imaging tasks that require integrating conditional inputs. To overcome this limitation, PoCGM reformulates PFGM++ into a conditional generative framework by incorporating sparse-view data as guidance during both the training and sampling phases. By modeling the posterior distribution of full-view reconstructions conditioned on sparse observations, PoCGM effectively suppresses artifacts while preserving fine structural details. Qualitative and quantitative evaluations demonstrate that PoCGM outperforms the baselines, achieving improved artifact suppression, enhanced detail preservation, and reliable performance in dose-sensitive and time-critical imaging scenarios.

Large Language Model Evaluated Stand-alone Attention-Assisted Graph Neural Network with Spatial and Structural Information Interaction for Precise Endoscopic Image Segmentation

Aug 09, 2025

Abstract:Accurate endoscopic image segmentation of polyps is critical for early colorectal cancer detection. However, this task remains challenging due to low contrast with surrounding mucosa, specular highlights, and indistinct boundaries. To address these challenges, we propose FOCUS-Med, which stands for Fusion of spatial and structural graph with attentional context-aware polyp segmentation in endoscopic medical imaging. FOCUS-Med integrates a Dual Graph Convolutional Network (Dual-GCN) module to capture contextual spatial and topological structural dependencies. This graph-based representation enables the model to better distinguish polyps from background tissues by leveraging topological cues and spatial connectivity, which are often obscured in raw image intensities. It enhances the model's ability to preserve boundaries and delineate complex shapes typical of polyps. In addition, a location-fused stand-alone self-attention is employed to strengthen global context integration. To bridge the semantic gap between encoder-decoder layers, we incorporate a trainable weighted fast normalized fusion strategy for efficient multi-scale aggregation. Notably, we are the first to introduce the use of a Large Language Model (LLM) to provide detailed qualitative evaluations of segmentation quality. Extensive experiments on public benchmarks demonstrate that FOCUS-Med achieves state-of-the-art performance across five key metrics, underscoring its effectiveness and clinical potential for AI-assisted colonoscopy.
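
The "trainable weighted fast normalized fusion" mentioned in this abstract can be illustrated with a minimal sketch. It assumes the common formulation in which each learnable weight is passed through a ReLU and divided by the sum of all weights plus a small epsilon; the function name, the list-based feature maps, and the `eps` value are hypothetical choices for illustration, not details taken from the paper.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse equally sized feature vectors with non-negative, normalized weights.

    Assumed formulation: out = sum_k relu(w_k) * f_k / (eps + sum_j relu(w_j)).
    """
    if len(features) != len(weights):
        raise ValueError("one weight per feature map is required")
    # ReLU keeps every (learnable) weight non-negative.
    w = [max(0.0, wi) for wi in weights]
    # eps avoids division by zero when all weights are clipped to zero.
    total = sum(w) + eps
    norm = [wi / total for wi in w]
    length = len(features[0])
    fused = [
        sum(norm[k] * features[k][i] for k in range(len(features)))
        for i in range(length)
    ]
    return fused, norm


# Toy usage: two flattened "feature maps" with weights 1 and 3.
fused, norm = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 3.0])
```

Unlike a softmax, this normalization needs no exponentials, which is the usual motivation for calling it "fast".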

ResPF: Residual Poisson Flow for Efficient and Physically Consistent Sparse-View CT Reconstruction

Jun 06, 2025

Abstract:Sparse-view computed tomography (CT) is a practical solution to reduce radiation dose, but the resulting ill-posed inverse problem poses significant challenges for accurate image reconstruction. Although deep learning and diffusion-based methods have shown promising results, they often lack physical interpretability or suffer from high computational costs due to iterative sampling starting from random noise. Recent advances in generative modeling, particularly Poisson Flow Generative Models (PFGM), enable high-fidelity image synthesis by modeling the full data distribution. In this work, we propose Residual Poisson Flow (ResPF) Generative Models for efficient and accurate sparse-view CT reconstruction. Based on PFGM++, ResPF integrates conditional guidance from sparse measurements and employs a hijacking strategy to significantly reduce sampling cost by skipping redundant initial steps. However, skipping early stages can degrade reconstruction quality and introduce unrealistic structures. To address this, we embed a data-consistency step into each iteration, ensuring fidelity to sparse-view measurements. Yet, PFGM sampling relies on a fixed ordinary differential equation (ODE) trajectory induced by electrostatic fields, which can be disrupted by step-wise data consistency, resulting in unstable or degraded reconstructions. Inspired by ResNet, we introduce a residual fusion module to linearly combine generative outputs with data-consistent reconstructions, effectively preserving trajectory continuity. To the best of our knowledge, this is the first application of Poisson flow models to sparse-view CT. Extensive experiments on synthetic and clinical datasets demonstrate that ResPF achieves superior reconstruction quality, faster inference, and stronger robustness compared to state-of-the-art iterative, learning-based, and diffusion models.
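
The residual fusion idea in this abstract, a linear combination of the generative output and the data-consistent reconstruction, can be sketched as follows. The abstract does not give the weighting scheme, so the single mixing coefficient `alpha`, the function name, and the flat-list image representation are assumptions made only for illustration.

```python
def residual_fusion(x_gen, x_dc, alpha=0.5):
    """Linearly blend a generative sample with a data-consistent estimate.

    alpha is a hypothetical mixing coefficient in [0, 1]:
    alpha = 0 keeps the generative output, alpha = 1 keeps the
    data-consistent reconstruction.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(x_gen) != len(x_dc):
        raise ValueError("both images must have the same number of pixels")
    return [(1.0 - alpha) * g + alpha * d for g, d in zip(x_gen, x_dc)]


# Toy usage on two flattened 2-pixel "images".
blended = residual_fusion([0.0, 2.0], [1.0, 4.0], alpha=0.25)
```

Keeping part of the generative output at every step is one plausible way to avoid pushing the sample far off the sampler's trajectory, which is the concern the abstract raises about step-wise data consistency.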

$\rho$-NeRF: Leveraging Attenuation Priors in Neural Radiance Field for 3D Computed Tomography Reconstruction

Dec 03, 2024

Abstract:This paper introduces $\rho$-NeRF, a self-supervised approach that sets a new standard in novel view synthesis (NVS) and computed tomography (CT) reconstruction by modeling a continuous volumetric radiance field enriched with physics-based attenuation priors. $\rho$-NeRF represents a three-dimensional (3D) volume through a fully-connected neural network that takes a single continuous four-dimensional (4D) coordinate, comprising a spatial location $(x, y, z)$ and an initialized attenuation value ($\rho$), and outputs the attenuation coefficient at that position. By querying these 4D coordinates along X-ray paths, the classic forward projection technique is applied to integrate attenuation data across the 3D space. By matching and refining pre-initialized attenuation values derived from traditional reconstruction algorithms such as the Feldkamp-Davis-Kress (FDK) algorithm or conjugate gradient least squares (CGLS), the enriched schema delivers superior fidelity in both projection synthesis and image recognition.
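
The forward projection step described in this abstract, integrating attenuation along an X-ray path, can be sketched as a numerical line integral. Here a plain Python function `toy_mu` stands in for the neural network (and ignores the extra $\rho$ input), and the phantom, ray geometry, and sample count are all hypothetical values chosen for illustration.

```python
import math


def forward_project(mu, source, detector, n_samples=1000):
    """Approximate the line integral of mu along the ray source -> detector.

    Uses the midpoint rule with n_samples equally spaced samples.
    """
    length = math.dist(source, detector)
    step = length / n_samples
    total = 0.0
    for i in range(n_samples):
        t = (i + 0.5) / n_samples  # midpoint of the i-th segment
        point = tuple(s + t * (d - s) for s, d in zip(source, detector))
        total += mu(*point)
    return total * step


def toy_mu(x, y, z):
    # Hypothetical phantom: a unit sphere with attenuation 0.2 per unit length.
    return 0.2 if x * x + y * y + z * z <= 1.0 else 0.0


# A ray through the sphere centre crosses a chord of length 2.
line_integral = forward_project(toy_mu, (-2.0, 0.0, 0.0), (2.0, 0.0, 0.0))
# Beer-Lambert law: fraction of photons that reach the detector.
transmitted_fraction = math.exp(-line_integral)
```

In a trained model, `toy_mu` would be replaced by network queries at the sampled coordinates, and the resulting projections would be compared with the measured ones.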

Physics-informed Score-based Diffusion Model for Limited-angle Reconstruction of Cardiac Computed Tomography

May 23, 2024

Abstract:Cardiac computed tomography (CT) has emerged as a major imaging modality for the diagnosis and monitoring of cardiovascular diseases. High temporal resolution is essential to ensure diagnostic accuracy. Limited-angle data acquisition can reduce scan time and improve temporal resolution, but it typically leads to severe image degradation and motivates improved reconstruction techniques. In this paper, we propose a novel physics-informed score-based diffusion model (PSDM) for limited-angle reconstruction of cardiac CT. At sampling time, we combine a data prior from a diffusion model and a model prior obtained via an iterative algorithm and Fourier fusion to further enhance the image quality. Specifically, our approach integrates the primal-dual hybrid gradient (PDHG) algorithm with score-based diffusion models, thereby enabling us to reconstruct high-quality cardiac CT images from limited-angle data. The numerical simulations and real data experiments confirm the effectiveness of our proposed approach.

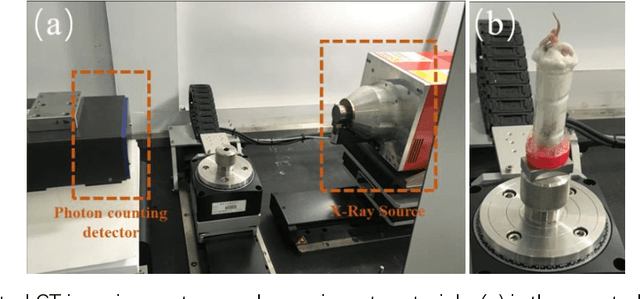

Deep Few-view High-resolution Photon-counting Extremity CT at Halved Dose for a Clinical Trial

Mar 19, 2024

Abstract:The latest X-ray photon-counting computed tomography (PCCT) for extremity allows multi-energy high-resolution (HR) imaging for tissue characterization and material decomposition. However, both radiation dose and imaging speed need improvement for contrast-enhanced and other studies. Despite the success of deep learning methods for 2D few-view reconstruction, applying them to HR volumetric reconstruction of extremity scans for clinical diagnosis has been limited due to GPU memory constraints, training data scarcity, and domain gap issues. In this paper, we propose a deep learning-based approach for PCCT image reconstruction at halved dose and doubled speed in a New Zealand clinical trial. In particular, we present a patch-based volumetric refinement network to alleviate the GPU memory limitation, train the network with synthetic data, and use model-based iterative refinement to bridge the gap between synthetic and real-world data. The simulation and phantom experiments demonstrate consistently improved results under different acquisition conditions on both in- and off-domain structures using a fixed network. The image quality for 8 patients from the clinical trial is evaluated by three radiologists in comparison with the standard image reconstruction with a full-view dataset. It is shown that our proposed approach is essentially identical to or better than the clinical benchmark in terms of diagnostic image quality scores. Our approach has great potential to improve the safety and efficiency of PCCT without compromising image quality.

LoMAE: Low-level Vision Masked Autoencoders for Low-dose CT Denoising

Oct 19, 2023

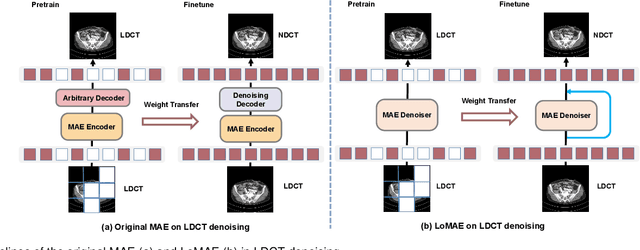

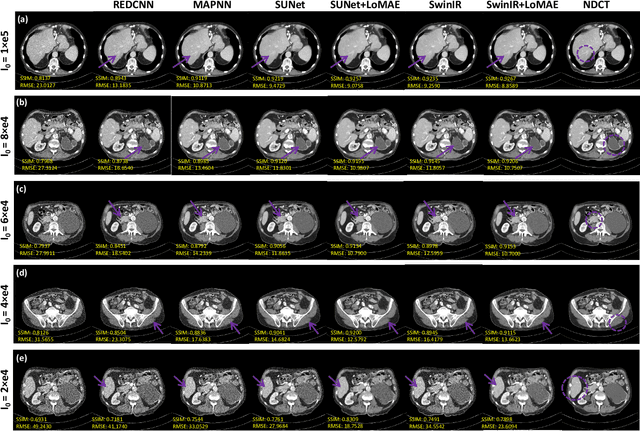

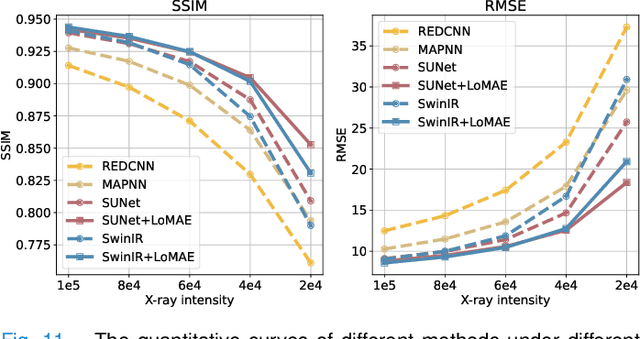

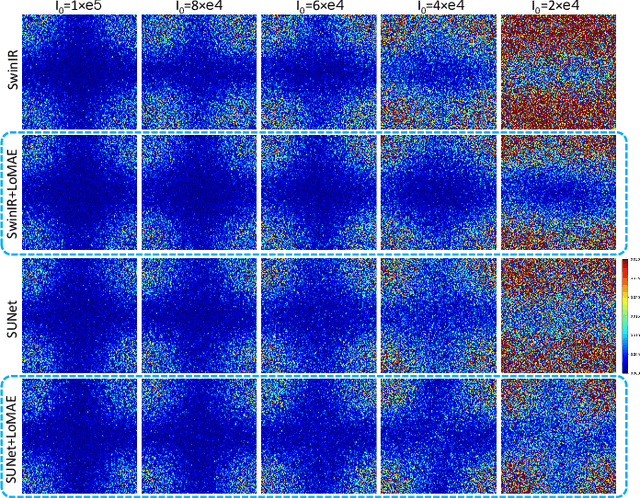

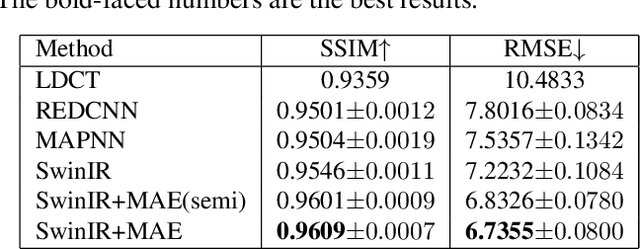

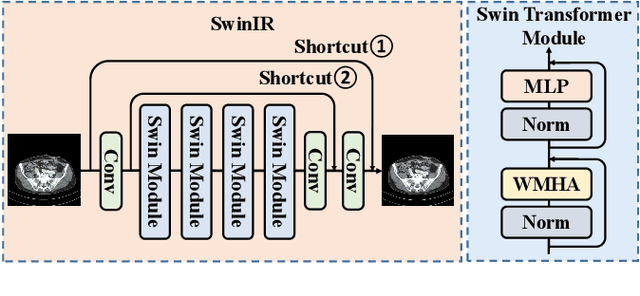

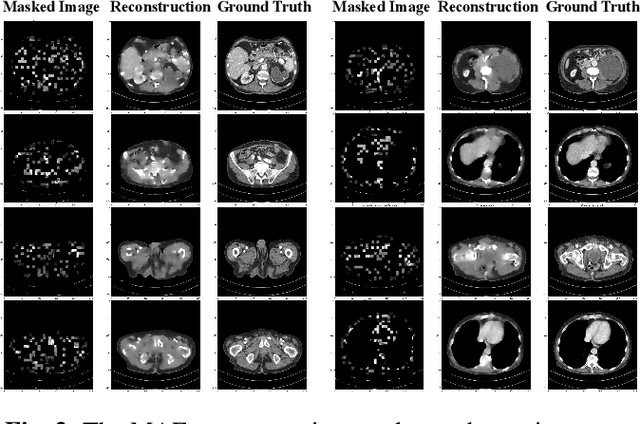

Abstract:Low-dose computed tomography (LDCT) offers reduced X-ray radiation exposure but at the cost of compromised image quality, characterized by increased noise and artifacts. Recently, transformer models emerged as a promising avenue to enhance LDCT image quality. However, the success of such models relies on a large amount of paired noisy and clean images, which are often scarce in clinical settings. In the fields of computer vision and natural language processing, masked autoencoders (MAE) have been recognized as an effective label-free self-pretraining method for transformers, due to their exceptional feature representation ability. However, the original pretraining and fine-tuning design fails to work in low-level vision tasks like denoising. In response to this challenge, we redesign the classical encoder-decoder learning model and facilitate a simple yet effective low-level vision MAE, referred to as LoMAE, tailored to address the LDCT denoising problem. Moreover, we introduce an MAE-GradCAM method to shed light on the latent learning mechanisms of the MAE/LoMAE. Additionally, we explore the LoMAE's robustness and generalizability across a variety of noise levels. Experimental results show that the proposed LoMAE can enhance the transformer's denoising performance and greatly relieve the dependence on the ground truth clean data. It also demonstrates remarkable robustness and generalizability over a spectrum of noise levels.
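
The label-free pretraining behind masked autoencoders starts by hiding a random subset of image patches and asking the model to reconstruct them. The sketch below shows only that masking step. The 75% ratio follows the original MAE work for natural images and is an assumption here, since the abstract does not state the ratio used by LoMAE; the function name and the seed are likewise illustrative.

```python
import random


def random_patch_mask(num_patches, mask_ratio=0.75, seed=0):
    """Split patch indices into visible and masked sets for MAE-style pretraining."""
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must lie in [0, 1)")
    rng = random.Random(seed)  # fixed seed keeps the example reproducible
    num_masked = int(num_patches * mask_ratio)
    order = list(range(num_patches))
    rng.shuffle(order)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked


# Toy usage: a 4 x 4 grid of patches, of which three quarters are hidden.
visible, masked = random_patch_mask(num_patches=16, mask_ratio=0.75)
```

Only the visible patches would be passed to the encoder; a decoder would then be trained to predict the content of the masked ones, which requires no clean reference image.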

Two-and-a-half Order Score-based Model for Solving 3D Ill-posed Inverse Problems

Aug 17, 2023

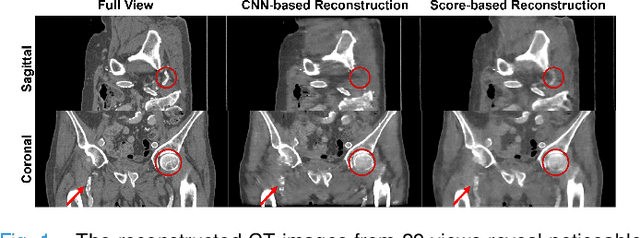

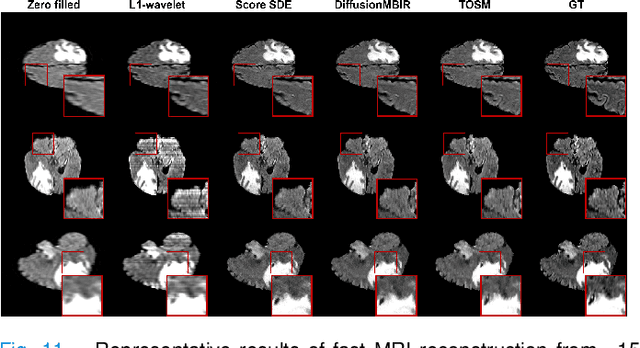

Abstract:Computed Tomography (CT) and Magnetic Resonance Imaging (MRI) are crucial technologies in the field of medical imaging. Score-based models have proven to be effective in addressing different inverse problems encountered in CT and MRI, such as sparse-view CT and fast MRI reconstruction. However, these models face challenges in achieving accurate three-dimensional (3D) volumetric reconstruction. The existing score-based models primarily focus on reconstructing two-dimensional (2D) data distributions, leading to inconsistencies between adjacent slices in the reconstructed 3D volumetric images. To overcome this limitation, we propose a novel two-and-a-half order score-based model (TOSM). During the training phase, our TOSM learns data distributions in 2D space, which reduces the complexity of training compared to directly working on 3D volumes. However, in the reconstruction phase, the TOSM updates the data distribution in 3D space, utilizing complementary scores along three directions (sagittal, coronal, and transaxial) to achieve a more precise reconstruction. The development of TOSM is built on robust theoretical principles, ensuring its reliability and efficacy. Through extensive experimentation on large-scale sparse-view CT and fast MRI datasets, our method demonstrates remarkable advancements and attains state-of-the-art results in solving 3D ill-posed inverse problems. Notably, the proposed TOSM effectively addresses the inter-slice inconsistency issue, resulting in high-quality 3D volumetric reconstruction.

Masked Autoencoders for Low dose CT denoising

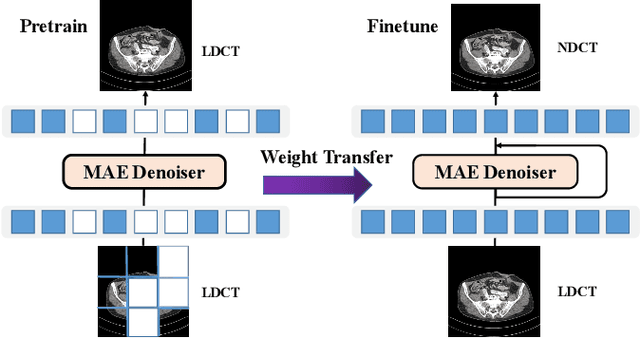

Oct 10, 2022

Abstract:Low-dose computed tomography (LDCT) reduces the X-ray radiation but compromises image quality with more noise and artifacts. A plethora of transformer models have been developed recently to improve LDCT image quality. However, the success of a transformer model relies on a large amount of paired noisy and clean data, which is often unavailable in clinical applications. In the computer vision and natural language processing fields, masked autoencoders (MAE) have been proposed as an effective label-free self-pretraining method for transformers, due to their excellent feature representation ability. Here, we redesign the classical encoder-decoder learning model to match the denoising task and apply it to the LDCT denoising problem. The MAE can leverage the unlabeled data and facilitate structural preservation for the LDCT denoising model when ground truth data are missing. Experiments on the Mayo dataset validate that the MAE can boost the transformer's denoising performance and relieve the dependence on the ground truth data.

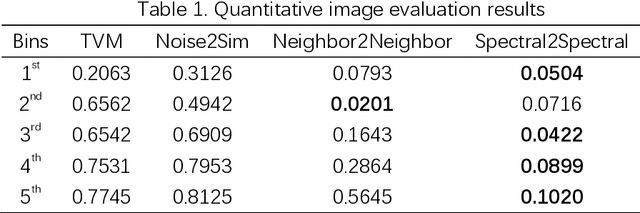

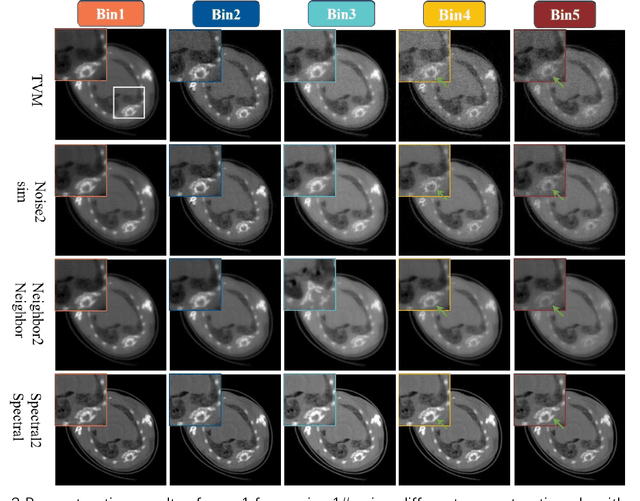

Spectral2Spectral: Image-spectral Similarity Assisted Spectral CT Deep Reconstruction without Reference

Oct 03, 2022

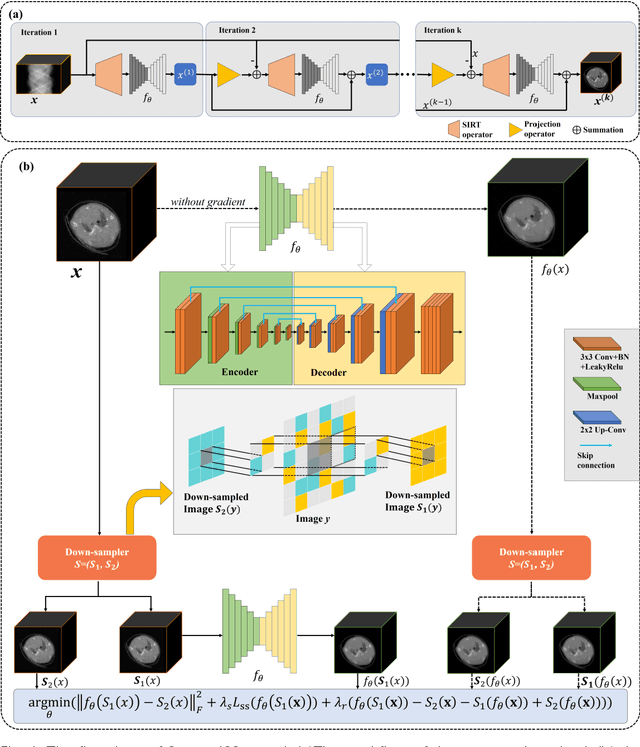

Abstract:Photon-counting detector (PCD) based spectral computed tomography has attracted growing attention because it can provide more accurate identification and quantitative analysis of biomedical materials. However, the limited number of photons within each narrow energy bin leads to data with a low signal-to-noise ratio. Existing supervised deep reconstruction networks for CT struggle to address these challenges. In this paper, we propose an iterative deep reconstruction network, named Spectral2Spectral, that synergizes model and data priors in a unified framework. Spectral2Spectral employs an unsupervised deep training strategy to obtain high-quality images from noisy data in an end-to-end fashion. The structural similarity prior within the image-spectral domain is refined as a regularization term to further constrain the network training. The weights of the neural network are automatically updated to capture image features and structures during the iterative process. Experiments on three large-scale preclinical datasets demonstrate that Spectral2Spectral achieves better image quality than other state-of-the-art methods.
