Jin Li

SoundAI Technology Co., Ltd

Efficient endometrial carcinoma screening via cross-modal synthesis and gradient distillation

Feb 23, 2026

Abstract: Early detection of myometrial invasion is critical for the staging and life-saving management of endometrial carcinoma (EC), a prevalent global malignancy. Transvaginal ultrasound serves as the primary, accessible screening modality in resource-constrained primary care settings; however, its diagnostic reliability is severely hindered by low tissue contrast, high operator dependence, and a pronounced scarcity of positive pathological samples. Existing artificial intelligence solutions struggle to overcome this severe class imbalance and the subtle imaging features of invasion, particularly under the strict computational limits of primary care clinics. Here we present an automated, highly efficient two-stage deep learning framework that resolves both data and computational bottlenecks in EC screening. To mitigate pathological data scarcity, we develop a structure-guided cross-modal generation network that synthesizes diverse, high-fidelity ultrasound images from unpaired magnetic resonance imaging (MRI) data, strictly preserving clinically essential anatomical junctions. Furthermore, we introduce a lightweight screening network utilizing gradient distillation, which transfers discriminative knowledge from a high-capacity teacher model to dynamically guide sparse attention towards task-critical regions. Evaluated on a large, multicenter cohort of 7,951 participants, our model achieves a sensitivity of 99.5%, a specificity of 97.2%, and an area under the curve of 0.987 at a minimal computational cost (0.289 GFLOPs), substantially outperforming the average diagnostic accuracy of expert sonographers. Our approach demonstrates that combining cross-modal synthetic augmentation with knowledge-driven efficient modeling can democratize expert-level, real-time cancer screening for resource-constrained primary care settings.

Drift-Aware Variational Autoencoder-based Anomaly Detection with Two-level Ensembling

Feb 13, 2026

Abstract: In today's digital world, the generation of vast amounts of streaming data in various domains has become ubiquitous. However, many of these data are unlabeled, making it challenging to identify events, particularly anomalies. This task becomes even more formidable in nonstationary environments where model performance can deteriorate over time due to concept drift. To address these challenges, this paper presents a novel method, VAE++ESDD, which employs incremental learning and two-level ensembling: an ensemble of Variational AutoEncoders (VAEs) for anomaly prediction, along with an ensemble of concept drift detectors. Each drift detector utilizes a statistical-based concept drift mechanism. To evaluate the effectiveness of VAE++ESDD, we conduct a comprehensive experimental study using real-world and synthetic datasets characterized by severely or extremely low anomalous rates and various drift characteristics. Our study reveals that the proposed method significantly outperforms both strong baselines and state-of-the-art methods.
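
To make the idea of an ensemble of statistical drift detectors concrete, here is a minimal Python sketch. It is not the VAE++ESDD implementation: the abstract does not specify the statistical test, the window sizes, or the voting rule, so the two-sample mean-shift test, the `window_sizes` values, and the majority vote below are all assumptions chosen for illustration. The input is assumed to be a stream of per-sample reconstruction losses from an autoencoder.

```python
import math
from statistics import mean, variance


def mean_shift_detected(reference, recent, z_threshold=3.0):
    """Flag drift when the recent mean differs from the reference mean
    by more than z_threshold standard errors (assumed test, not the paper's)."""
    se = math.sqrt(variance(reference) / len(reference)
                   + variance(recent) / len(recent))
    if se == 0.0:
        # Both windows are constant: any difference in level counts as a shift.
        return mean(reference) != mean(recent)
    return abs(mean(recent) - mean(reference)) / se > z_threshold


def ensemble_drift_vote(losses, window_sizes=(20, 40, 60)):
    """Majority vote over detectors that differ only in window size.

    Each detector compares the first w losses (reference) with the
    last w losses (recent). Window sizes are hypothetical values.
    """
    votes = 0
    for w in window_sizes:
        if len(losses) < 2 * w:
            continue  # not enough data for this detector yet
        votes += mean_shift_detected(losses[:w], losses[-w:])
    return votes > len(window_sizes) / 2


# Synthetic loss streams: one stationary, one with a level shift halfway.
stable = [1.0 + 0.01 * ((i % 5) - 2) for i in range(200)]
drifted = stable[:100] + [2.0 + 0.01 * ((i % 5) - 2) for i in range(100)]

stable_alarm = ensemble_drift_vote(stable)
drift_alarm = ensemble_drift_vote(drifted)
```

Using several window sizes trades detection delay against false alarms: short windows react quickly but are noisier, and the vote dampens the disagreement between them.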

Resilient Class-Incremental Learning: on the Interplay of Drifting, Unlabelled and Imbalanced Data Streams

Feb 10, 2026

Abstract: In today's connected world, the generation of massive streaming data across diverse domains has become commonplace. Concept drift, class imbalance, label scarcity, and new class emergence jointly degrade representation stability, bias learning toward outdated distributions, and reduce the resilience and reliability of detection in dynamic environments. This paper proposes SCIL (Streaming Class-Incremental Learning) to address these challenges. The SCIL framework integrates an autoencoder (AE) with a multi-layer perceptron for multi-class prediction, uses a dual-loss strategy (classification and reconstruction) for prediction and new class detection, employs corrected pseudo-labels for online training, manages classes with queues, and applies oversampling to handle imbalance. The rationale behind the method's structure is elucidated through ablation studies, and a comprehensive experimental evaluation is performed using both real-world and synthetic datasets that feature class imbalance, incremental classes, and concept drifts. Our results demonstrate that SCIL outperforms strong baselines and state-of-the-art methods. Based on our commitment to Open Science, we make our code and datasets available to the community.

Unraveling the Hidden Dynamical Structure in Recurrent Neural Policies

Feb 01, 2026

Abstract: Recurrent neural policies are widely used in partially observable control and meta-RL tasks. Their ability to maintain internal memory and adapt quickly to unseen scenarios has given them unparalleled performance compared to non-recurrent counterparts. However, to date, the underlying mechanisms for their superior generalization and robustness remain poorly understood. In this study, by analyzing the hidden state domain of recurrent policies learned over a diverse set of training methods, model architectures, and tasks, we find that stable cyclic structures consistently emerge during interaction with the environment. Such cyclic structures share a remarkable similarity with limit cycles in dynamical system analysis, if we consider the policy and the environment as a joint hybrid dynamical system. Moreover, we uncover that the geometry of such limit cycles also has a structured correspondence with the policies' behaviors. These findings offer new perspectives to explain many desirable properties of recurrent policies: the emergence of limit cycles stabilizes both the policies' internal memory and the task-relevant environmental states, while suppressing nuisance variability arising from environmental uncertainty; the geometry of limit cycles also encodes relational structures of behaviors, facilitating easier skill adaptation when facing non-stationary environments.

OpenVTON-Bench: A Large-Scale High-Resolution Benchmark for Controllable Virtual Try-On Evaluation

Jan 30, 2026

Abstract: Recent advances in diffusion models have significantly elevated the visual fidelity of Virtual Try-On (VTON) systems, yet reliable evaluation remains a persistent bottleneck. Traditional metrics struggle to quantify fine-grained texture details and semantic consistency, while existing datasets fail to meet commercial standards in scale and diversity. We present OpenVTON-Bench, a large-scale benchmark comprising approximately 100K high-resolution image pairs (up to $1536 \times 1536$). The dataset is constructed using DINOv3-based hierarchical clustering for semantically balanced sampling and Gemini-powered dense captioning, ensuring a uniform distribution across 20 fine-grained garment categories. To support reliable evaluation, we propose a multi-modal protocol that measures VTON quality along five interpretable dimensions: background consistency, identity fidelity, texture fidelity, shape plausibility, and overall realism. The protocol integrates VLM-based semantic reasoning with a novel Multi-Scale Representation Metric based on SAM3 segmentation and morphological erosion, enabling the separation of boundary alignment errors from internal texture artifacts. Experimental results show strong agreement with human judgments (Kendall's $τ$ of 0.833 vs. 0.611 for SSIM), establishing a robust benchmark for VTON evaluation.
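
For readers unfamiliar with the agreement statistic quoted above, the following Python sketch shows how Kendall's τ compares a metric's ranking with a human ranking. It implements the simple tau-a variant (no tie correction); which variant the benchmark uses is not stated in the abstract, and the `human` and `metric` scores are made-up illustrative values, not data from the paper.

```python
from itertools import combinations


def kendall_tau(x, y):
    """Kendall's tau-a: (concordant - discordant) / number of pairs.

    Tied pairs count as neither concordant nor discordant.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(x)), 2):
        sign = (x[i] - x[j]) * (y[i] - y[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical scores for five try-on results (illustrative only).
human = [1, 2, 3, 4, 5]
metric = [1, 3, 2, 4, 5]   # swaps one adjacent pair relative to the human order

tau = kendall_tau(human, metric)  # 9 concordant, 1 discordant pair -> 0.8
```

A value of 1 means the metric orders every pair of results the same way humans do, 0 means no association, and -1 means a fully reversed order.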

Signal-Adaptive Trust Regions for Gradient-Free Optimization of Recurrent Spiking Neural Networks

Jan 29, 2026

Abstract: Recurrent spiking neural networks (RSNNs) are a promising substrate for energy-efficient control policies, but training them for high-dimensional, long-horizon reinforcement learning remains challenging. Population-based, gradient-free optimization circumvents backpropagation through non-differentiable spike dynamics by estimating gradients. However, with finite populations, high variance of these estimates can induce harmful and overly aggressive update steps. Inspired by trust-region methods in reinforcement learning that constrain policy updates in distribution space, we propose Signal-Adaptive Trust Regions (SATR), a distributional update rule that constrains relative change by bounding KL divergence normalized by an estimated signal energy. SATR automatically expands the trust region under strong signals and contracts it when updates are noise-dominated. We instantiate SATR for Bernoulli connectivity distributions, which have shown strong empirical performance for RSNN optimization. Across a suite of high-dimensional continuous-control benchmarks, SATR improves stability under limited populations and reaches competitive returns against strong baselines including PPO-LSTM. In addition, to make SATR practical at scale, we introduce a bitset implementation for binary spiking and binary weights, substantially reducing wall-clock training time and enabling fast RSNN policy search.
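
The idea of bounding a KL divergence relative to a signal energy can be illustrated with a small Python sketch for Bernoulli parameters. This is not the SATR update rule from the paper: the direction of the KL divergence, the definition of signal energy (here the squared norm of the update direction), the `delta` constant, and the bisection search are all assumptions made for illustration.

```python
import math


def bernoulli_kl(p, q, eps=1e-12):
    """Sum over entries of KL(Bernoulli(p_i) || Bernoulli(q_i))."""
    total = 0.0
    for pi, qi in zip(p, q):
        pi = min(max(pi, eps), 1 - eps)
        qi = min(max(qi, eps), 1 - eps)
        total += (pi * math.log(pi / qi)
                  + (1 - pi) * math.log((1 - pi) / (1 - qi)))
    return total


def trust_region_step(p, direction, signal_energy, delta=0.01, iters=50):
    """Scale the update so that KL(old || new) <= delta * signal_energy.

    A larger signal_energy widens the allowed KL budget; a smaller one
    shrinks it. The step scale in [0, 1] is found by bisection, which
    works because the KL grows monotonically with the scale.
    """
    budget = delta * signal_energy

    def propose(scale):
        # Keep probabilities strictly inside (0, 1).
        return [min(max(pi + scale * di, 1e-6), 1 - 1e-6)
                for pi, di in zip(p, direction)]

    if bernoulli_kl(p, propose(1.0)) <= budget:
        return propose(1.0)  # the full step already fits the budget
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if bernoulli_kl(p, propose(mid)) <= budget:
            lo = mid
        else:
            hi = mid
    return propose(lo)


p_old = [0.5, 0.5, 0.5]                      # connection probabilities
direction = [0.3, -0.2, 0.1]                 # hypothetical estimated update
energy = sum(d * d for d in direction)       # assumed signal-energy proxy

p_new = trust_region_step(p_old, direction, energy)
p_full = trust_region_step(p_old, direction, signal_energy=1000.0)
```

With the small energy estimate the step is shortened to stay inside the KL budget, while the artificially large energy lets the full step through, mirroring the expand-and-contract behavior described in the abstract.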

Towards Fair Large Language Model-based Recommender Systems without Costly Retraining

Jan 24, 2026

Abstract: Large Language Models (LLMs) have revolutionized Recommender Systems (RS) through advanced generative user modeling. However, LLM-based RS (LLM-RS) often inadvertently perpetuates bias present in the training data, leading to severe fairness issues. Addressing these fairness problems in LLM-RS faces two significant challenges. 1) Existing debiasing methods, designed for specific bias types, lack the generality to handle diverse or emerging biases in real-world applications. 2) Debiasing methods relying on retraining are computationally infeasible given the massive parameter scale of LLMs. To overcome these challenges, we propose FUDLR (Fast Unified Debiasing for LLM-RS). The core idea is to reformulate the debiasing problem as an efficient machine unlearning task with two stages. First, FUDLR identifies bias-inducing samples to unlearn through a novel bias-agnostic mask, optimized to balance fairness improvement with accuracy preservation. Its bias-agnostic design allows adaptability to various or co-existing biases simply by incorporating different fairness metrics. Second, FUDLR performs efficient debiasing by estimating and removing the influence of identified samples on model parameters. Extensive experiments demonstrate that FUDLR effectively and efficiently improves fairness while preserving recommendation accuracy, offering a practical path toward socially responsible LLM-RS. The code and data are available at https://github.com/JinLi-i/FUDLR.

AnyECG: Evolved ECG Foundation Model for Holistic Health Profiling

Jan 12, 2026

Abstract: Background: Artificial intelligence enabled electrocardiography (AI-ECG) has demonstrated the ability to detect diverse pathologies, but most existing models focus on single disease identification, neglecting comorbidities and future risk prediction. Although ECGFounder expanded cardiac disease coverage, a holistic health profiling model remains needed. Methods: We constructed a large multicenter dataset comprising 13.3 million ECGs from 2.98 million patients. Using transfer learning, ECGFounder was fine-tuned to develop AnyECG, a foundation model for holistic health profiling. Performance was evaluated using external validation cohorts and a 10-year longitudinal cohort for current diagnosis, future risk prediction, and comorbidity identification. Results: AnyECG demonstrated systemic predictive capability across 1172 conditions, achieving an AUROC greater than 0.7 for 306 diseases. The model revealed novel disease associations, robust comorbidity patterns, and future disease risks. Representative examples included high diagnostic performance for hyperparathyroidism (AUROC 0.941), type 2 diabetes (0.803), Crohn disease (0.817), lymphoid leukemia (0.856), and chronic obstructive pulmonary disease (0.773). Conclusion: The AnyECG foundation model provides substantial evidence that AI-ECG can serve as a systemic tool for concurrent disease detection and long-term risk prediction.

From Evidence-Based Medicine to Knowledge Graph: Retrieval-Augmented Generation for Sports Rehabilitation and a Domain Benchmark

Jan 01, 2026

Abstract: In medicine, large language models (LLMs) increasingly rely on retrieval-augmented generation (RAG) to ground outputs in up-to-date external evidence. However, current RAG approaches focus primarily on performance improvements while overlooking evidence-based medicine (EBM) principles. This study addresses two key gaps: (1) the lack of PICO alignment between queries and retrieved evidence, and (2) the absence of evidence hierarchy considerations during reranking. We present a generalizable strategy for adapting EBM to graph-based RAG, integrating the PICO framework into knowledge graph construction and retrieval, and proposing a Bayesian-inspired reranking algorithm to calibrate ranking scores by evidence grade without introducing predefined weights. We validated this framework in sports rehabilitation, a literature-rich domain currently lacking RAG systems and benchmarks. We released a knowledge graph (357,844 nodes and 371,226 edges) and a reusable benchmark of 1,637 QA pairs. The system achieved 0.830 nugget coverage, 0.819 answer faithfulness, 0.882 semantic similarity, and 0.788 PICOT match accuracy. In a 5-point Likert evaluation, five expert clinicians rated the system 4.66-4.84 across factual accuracy, faithfulness, relevance, safety, and PICO alignment. These findings demonstrate that the proposed EBM adaptation strategy improves retrieval and answer quality and is transferable to other clinical domains. The released resources also help address the scarcity of RAG datasets in sports rehabilitation.

BUT Systems for WildSpoof Challenge: SASV in the Wild

Dec 14, 2025

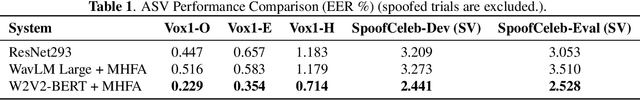

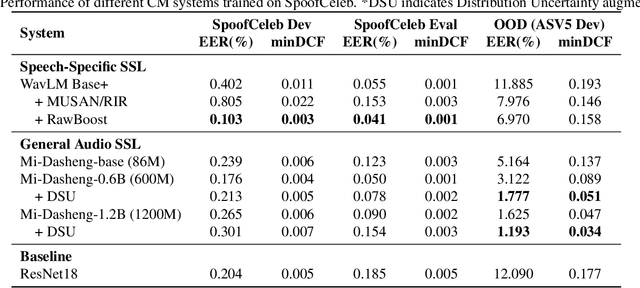

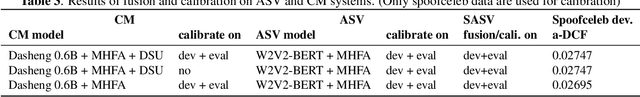

Abstract: This paper presents the BUT submission to the WildSpoof Challenge, focusing on the Spoofing-robust Automatic Speaker Verification (SASV) track. We propose a SASV framework designed to bridge the gap between general audio understanding and specialized speech analysis. Our subsystem integrates diverse Self-Supervised Learning front-ends ranging from general audio models (e.g., Dasheng) to speech-specific encoders (e.g., WavLM). These representations are aggregated via a lightweight Multi-Head Factorized Attention back-end for corresponding subtasks. Furthermore, we introduce a feature domain augmentation strategy based on Distribution Uncertainty to explicitly model and mitigate the domain shift caused by unseen neural vocoders and recording environments. By fusing these robust CM scores with state-of-the-art ASV systems, our approach achieves superior minimization of the a-DCFs and EERs.
