Jian-Fang Hu

Seg-ReSearch: Segmentation with Interleaved Reasoning and External Search

Feb 04, 2026

Abstract: Segmentation based on language has been a popular topic in computer vision. While recent advances in multimodal large language models (MLLMs) have endowed segmentation systems with reasoning capabilities, these efforts remain confined by the frozen internal knowledge of MLLMs, which limits their potential for real-world scenarios that involve up-to-date information or domain-specific concepts. In this work, we propose Seg-ReSearch, a novel segmentation paradigm that overcomes the knowledge bottleneck of existing approaches. By enabling interleaved reasoning and external search, Seg-ReSearch empowers segmentation systems to handle dynamic, open-world queries that extend beyond the frozen knowledge of MLLMs. To effectively train this capability, we introduce a hierarchical reward design that harmonizes initial guidance with progressive incentives, mitigating the dilemma between sparse outcome signals and rigid step-wise supervision. For evaluation, we construct OK-VOS, a challenging benchmark that explicitly requires outside knowledge for video object segmentation. Experiments on OK-VOS and two existing reasoning segmentation benchmarks demonstrate that Seg-ReSearch outperforms state-of-the-art approaches by a substantial margin. Code and data will be released at https://github.com/iSEE-Laboratory/Seg-ReSearch.

Refer-Agent: A Collaborative Multi-Agent System with Reasoning and Reflection for Referring Video Object Segmentation

Feb 03, 2026

Abstract: Referring Video Object Segmentation (RVOS) aims to segment objects in videos based on textual queries. Current methods mainly rely on large-scale supervised fine-tuning (SFT) of Multi-modal Large Language Models (MLLMs). However, this paradigm suffers from heavy data dependence and limited scalability against the rapid evolution of MLLMs. Although recent zero-shot approaches offer a flexible alternative, their performance remains significantly behind SFT-based methods, due to their straightforward workflow designs. To address these limitations, we propose Refer-Agent, a collaborative multi-agent system with alternating reasoning-reflection mechanisms. This system decomposes RVOS into a step-by-step reasoning process. During reasoning, we introduce a Coarse-to-Fine frame selection strategy to ensure frame diversity and textual relevance, along with a Dynamic Focus Layout that adaptively adjusts the agent's visual focus. Furthermore, we propose a Chain-of-Reflection mechanism, which employs a Questioner-Responder pair to generate a self-reflection chain, enabling the system to verify intermediate results and generate feedback for next-round reasoning refinement. Extensive experiments on five challenging benchmarks demonstrate that Refer-Agent significantly outperforms state-of-the-art methods, including both SFT-based models and zero-shot approaches. Moreover, Refer-Agent is flexible and enables fast integration of new MLLMs without any additional fine-tuning costs. Code will be released.

Null-LoRA: Low-Rank Adaptation on Null Space

Dec 18, 2025

Abstract: Parameter-efficient fine-tuning methods have gained considerable popularity for adapting large-scale models to downstream tasks, particularly LoRA and its variants. Existing methods perform low-rank adaptation over the full parameter space. However, fine-tuning within a subspace can achieve comparable effectiveness. Inspired by the observation that pre-trained models possess non-trivial null spaces, we propose Null-space based Low-Rank Adaptation (Null-LoRA). Null-LoRA effectively reduces redundancy and enhances effective rank by freezing portions of the low-rank matrices. To further improve parameter efficiency, Null-LoRA constrains the entire incremental update within the null space, maximizing the utilization of incremental updates to adapt to new task paradigms. Null-LoRA surpasses the state of the art with fewer parameters in extensive experiments across image-text retrieval and visual question answering tasks.
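As a rough illustration of what "constraining an update within the null space" can mean, the sketch below projects a generic low-rank update onto the null space of a rank-deficient weight matrix. It is not the Null-LoRA algorithm: the choice of the right null space, the SVD-based basis, and the names `null_space_basis` and `project_update` are all assumptions made for this example, and the abstract does not specify which parts of the low-rank matrices are frozen.

```python
import numpy as np

def null_space_basis(W, tol=1e-8):
    """Orthonormal basis of the (right) null space of W, taken from its SVD."""
    _, s, vt = np.linalg.svd(W, full_matrices=True)
    rank = int(np.sum(s > tol * s.max()))
    # Rows of vt beyond the numerical rank span the null space of W.
    return vt[rank:].T  # shape: (d_in, d_in - rank)

def project_update(B, A, N):
    """Restrict the low-rank update B @ A to act only on the null space spanned by N."""
    return (B @ A) @ (N @ N.T)

rng = np.random.default_rng(0)
d_out, d_in, true_rank, r = 6, 8, 3, 2

# Hypothetical rank-deficient "pre-trained" weight (assumption for the demo).
W = rng.standard_normal((d_out, true_rank)) @ rng.standard_normal((true_rank, d_in))
N = null_space_basis(W)

# Generic LoRA-style factors; the update is projected after multiplication.
B = rng.standard_normal((d_out, r))
A = rng.standard_normal((r, d_in))
delta = project_update(B, A, N)

# An input lying in the row space of W is left untouched by the projected update.
x = W.T @ rng.standard_normal(d_out)
print(np.allclose(delta @ x, 0.0, atol=1e-8))
```

Under these assumptions, the projected update changes the layer's output only for input directions that the original weight maps to zero, which is one way to read the abstract's claim about reducing redundancy with the pre-trained parameters.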

IRG-MotionLLM: Interleaving Motion Generation, Assessment and Refinement for Text-to-Motion Generation

Dec 11, 2025

Abstract: Recent advances in motion-aware large language models have shown remarkable promise for unifying motion understanding and generation tasks. However, these models typically treat understanding and generation separately, limiting the mutual benefits that could arise from interactive feedback between tasks. In this work, we reveal that motion assessment and refinement tasks act as crucial bridges to enable bidirectional knowledge flow between understanding and generation. Leveraging this insight, we propose Interleaved Reasoning for Motion Generation (IRMoGen), a novel paradigm that tightly couples motion generation with assessment and refinement through iterative text-motion dialogue. To realize this, we introduce IRG-MotionLLM, the first model that seamlessly interleaves motion generation, assessment, and refinement to improve generation performance. IRG-MotionLLM is developed progressively with a novel three-stage training scheme, initializing and subsequently enhancing native IRMoGen capabilities. To facilitate this development, we construct an automated data engine to synthesize interleaved reasoning annotations from existing text-motion datasets. Extensive experiments demonstrate that: (i) Assessment and refinement tasks significantly improve text-motion alignment; (ii) Interleaving motion generation, assessment, and refinement steps yields consistent performance gains across training stages; and (iii) IRG-MotionLLM clearly outperforms the baseline model and achieves advanced performance on standard text-to-motion generation benchmarks. Cross-evaluator testing further validates its effectiveness. Code & Data: https://github.com/HumanMLLM/IRG-MotionLLM/tree/main.

TubeRMC: Tube-conditioned Reconstruction with Mutual Constraints for Weakly-supervised Spatio-Temporal Video Grounding

Nov 13, 2025

Abstract: Spatio-Temporal Video Grounding (STVG) aims to localize a spatio-temporal tube that corresponds to a given language query in an untrimmed video. This is a challenging task since it involves complex vision-language understanding and spatiotemporal reasoning. Recent works have explored the weakly-supervised setting in STVG to eliminate reliance on fine-grained annotations like bounding boxes or temporal stamps. However, they typically follow a simple late-fusion scheme, which generates tubes independent of the text description, often resulting in failed target identification and inconsistent target tracking. To address this limitation, we propose a Tube-conditioned Reconstruction with Mutual Constraints (TubeRMC) framework that generates text-conditioned candidate tubes with pre-trained visual grounding models and further refines them via tube-conditioned reconstruction with spatio-temporal constraints. Specifically, we design three reconstruction strategies from temporal, spatial, and spatio-temporal perspectives to comprehensively capture rich tube-text correspondences. Each strategy is equipped with a Tube-conditioned Reconstructor, utilizing spatio-temporal tubes as the condition to reconstruct the key clues in the query. We further introduce mutual constraints between spatial and temporal proposals to enhance their quality for reconstruction. TubeRMC outperforms existing methods on two public benchmarks, VidSTG and HCSTVG. Further visualization shows that TubeRMC effectively mitigates both target identification errors and inconsistent tracking.

CoopDiff: Anticipating 3D Human-object Interactions via Contact-consistent Decoupled Diffusion

Aug 10, 2025

Abstract: 3D human-object interaction (HOI) anticipation aims to predict the future motion of humans and their manipulated objects, conditioned on the historical context. Generally, articulated humans and rigid objects exhibit different motion patterns, due to their distinct intrinsic physical properties. However, this distinction is ignored by most existing works, which attempt to capture the dynamics of both humans and objects within a single prediction model. In this work, we propose a novel contact-consistent decoupled diffusion framework, CoopDiff, which employs two distinct branches to decouple human and object motion modeling, with the human-object contact points as shared anchors to bridge the motion generation across branches. The human dynamics branch aims to predict highly structured human motion, while the object dynamics branch focuses on the object motion with rigid translations and rotations. These two branches are bridged by a series of shared contact points with a consistency constraint for coherent human-object motion prediction. To further enhance human-object consistency and prediction reliability, we propose a human-driven interaction module to guide object motion modeling. Extensive experiments on the BEHAVE and Human-object Interaction datasets demonstrate that CoopDiff outperforms state-of-the-art methods.

Long-RVOS: A Comprehensive Benchmark for Long-term Referring Video Object Segmentation

May 19, 2025

Abstract: Referring video object segmentation (RVOS) aims to identify, track and segment the objects in a video based on language descriptions, and has received great attention in recent years. However, existing datasets remain focused on short video clips of several seconds, with salient objects visible in most frames. To advance the task towards more practical scenarios, we introduce Long-RVOS, a large-scale benchmark for long-term referring video object segmentation. Long-RVOS contains 2,000+ videos with an average duration exceeding 60 seconds, covering a variety of objects that undergo occlusion, disappearance-reappearance and shot changes. The objects are manually annotated with three different types of descriptions to individually evaluate the understanding of static attributes, motion patterns and spatiotemporal relationships. Moreover, unlike previous benchmarks that rely solely on per-frame spatial evaluation, we introduce two new metrics to assess temporal and spatiotemporal consistency. We benchmark 6 state-of-the-art methods on Long-RVOS. The results show that current approaches struggle severely with the long-video challenges. To address this, we further propose ReferMo, a promising baseline method that integrates motion information to expand the temporal receptive field, and employs a local-to-global architecture to capture both short-term dynamics and long-term dependencies. Despite its simplicity, ReferMo achieves significant improvements over current methods in long-term scenarios. We hope that Long-RVOS and our baseline can drive future RVOS research towards tackling more realistic and long-form videos.

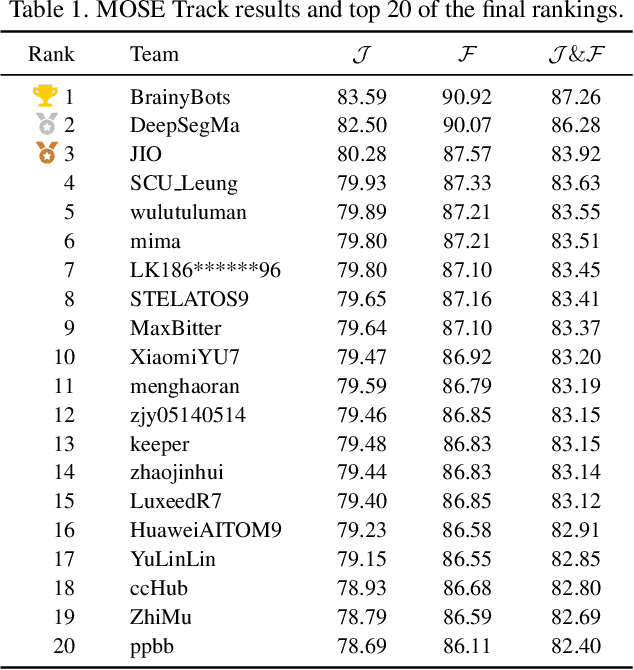

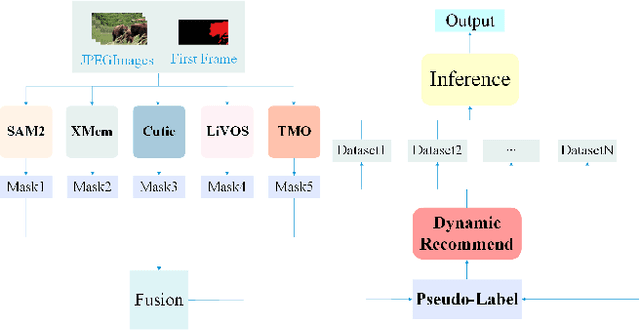

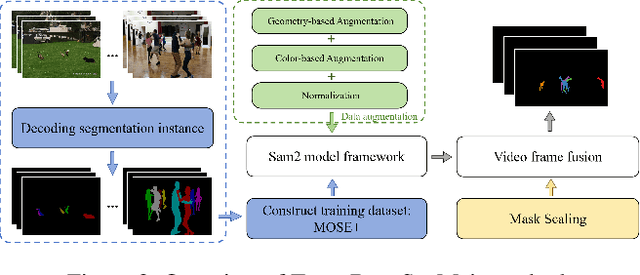

PVUW 2025 Challenge Report: Advances in Pixel-level Understanding of Complex Videos in the Wild

Apr 15, 2025

Abstract: This report provides a comprehensive overview of the 4th Pixel-level Video Understanding in the Wild (PVUW) Challenge, held in conjunction with CVPR 2025. It summarizes the challenge outcomes, participating methodologies, and future research directions. The challenge features two tracks: MOSE, which focuses on complex scene video object segmentation, and MeViS, which targets motion-guided, language-based video segmentation. Both tracks introduce new, more challenging datasets designed to better reflect real-world scenarios. Through detailed evaluation and analysis, the challenge offers valuable insights into the current state-of-the-art and emerging trends in complex video segmentation. More information can be found on the workshop website: https://pvuw.github.io/.

Modeling Multiple Normal Action Representations for Error Detection in Procedural Tasks

Apr 02, 2025

Abstract: Error detection in procedural activities is essential for consistent and correct outcomes in AR-assisted and robotic systems. Existing methods often focus on temporal ordering errors or rely on static prototypes to represent normal actions. However, these approaches typically overlook the common scenario where multiple, distinct actions are valid following a given sequence of executed actions. This leads to two issues: (1) the model cannot effectively detect errors using static prototypes when the inference environment or action execution distribution differs from training; and (2) the model may also use the wrong prototypes to detect errors if the ongoing action label is not the same as the predicted one. To address this problem, we propose an Adaptive Multiple Normal Action Representation (AMNAR) framework. AMNAR predicts all valid next actions and reconstructs their corresponding normal action representations, which are compared against the ongoing action to detect errors. Extensive experiments demonstrate that AMNAR achieves state-of-the-art performance, highlighting the effectiveness of AMNAR and the importance of modeling multiple valid next actions in error detection. The code is available at https://github.com/iSEE-Laboratory/AMNAR.
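The idea of comparing an ongoing action against several valid normal representations, rather than a single static prototype, can be sketched as follows. This is only a toy illustration: the Euclidean distance, the fixed threshold, and the name `detect_error` are assumptions for the example, and the abstract does not state how AMNAR reconstructs or compares representations.

```python
import numpy as np

def detect_error(ongoing, valid_reps, threshold):
    """Flag an error when the ongoing action is far from every valid normal representation.

    ongoing:    feature vector of the ongoing action, shape (d,)
    valid_reps: one representation per valid next action, shape (k, d)
    threshold:  hypothetical distance cutoff (an assumption of this sketch)
    """
    dists = np.linalg.norm(valid_reps - ongoing, axis=1)
    nearest = float(dists.min())
    return nearest > threshold, nearest

# Two distinct actions are both valid at this step (toy 2-D features).
valid_reps = np.array([[1.0, 0.0],
                       [0.0, 1.0]])

is_error_a, dist_a = detect_error(np.array([0.9, 0.1]), valid_reps, threshold=0.5)
is_error_b, dist_b = detect_error(np.array([-1.0, -1.0]), valid_reps, threshold=0.5)
print(is_error_a, is_error_b)
```

With a single prototype, an action matching the second valid option would be judged against the wrong reference; taking the minimum over all valid representations avoids that in this toy setting.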

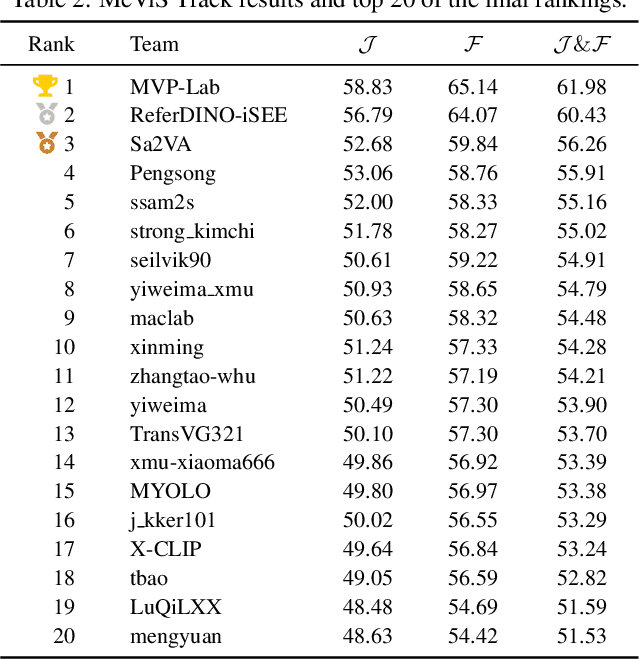

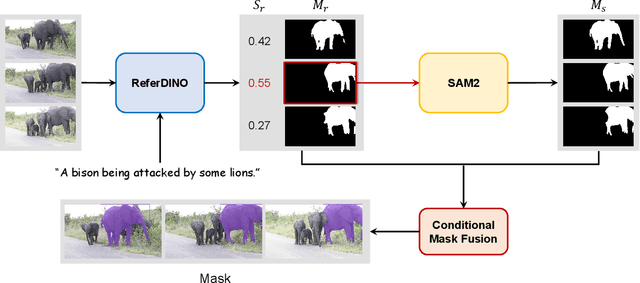

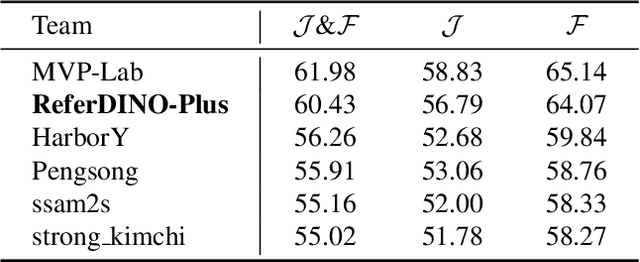

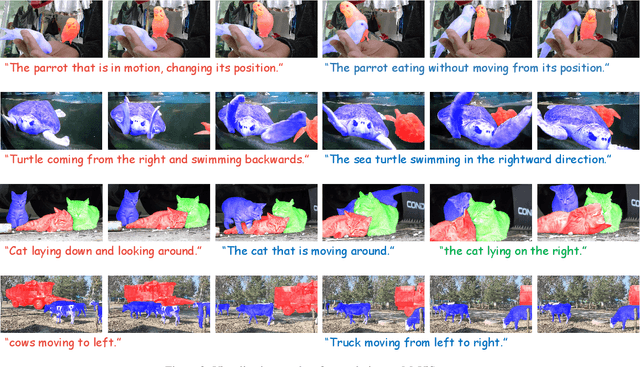

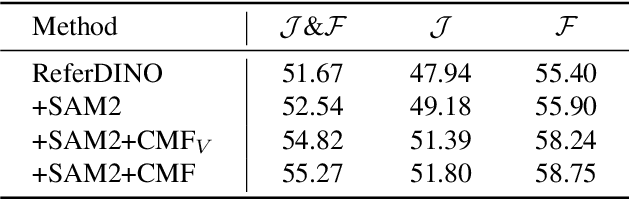

ReferDINO-Plus: 2nd Solution for 4th PVUW MeViS Challenge at CVPR 2025

Mar 30, 2025

Abstract: Referring Video Object Segmentation (RVOS) aims to segment target objects throughout a video based on a text description. This task has attracted increasing attention in the field of computer vision due to its promising applications in video editing and human-agent interaction. Recently, ReferDINO has demonstrated promising performance in this task by adapting object-level vision-language knowledge from pretrained foundational image models. In this report, we further enhance its capabilities by incorporating the advantages of SAM2 in mask quality and object consistency. In addition, to effectively balance performance between single-object and multi-object scenarios, we introduce a conditional mask fusion strategy that adaptively fuses the masks from ReferDINO and SAM2. Our solution, termed ReferDINO-Plus, achieves 60.43 \(\mathcal{J}\&\mathcal{F}\) on the MeViS test set, securing 2nd place in the MeViS PVUW challenge at CVPR 2025. The code is available at: https://github.com/iSEE-Laboratory/ReferDINO-Plus.
