Xiao Li

Deep Learning-based Low-Overhead Beam Alignment for mmWave Massive MIMO Systems

Feb 25, 2026

Abstract: Millimeter-wave massive multiple-input multiple-output systems employ highly directional beamforming to overcome severe path loss, and their performance critically depends on accurate beam alignment. Conventional codebook-based methods offer low training overhead but suffer from limited angular resolution and sensitivity to hardware impairments. To address these challenges, we propose a deep learning-enhanced super-resolution beam alignment framework with three key components. First, we design the Quaternary Search-based Super-Resolution (QSSR) algorithm, which leverages the monotonic power ratio property between two discrete Fourier transform (DFT) codebook beams to achieve super-resolution angle estimation without increasing measurement complexity relative to binary search. Second, we develop QSSR-Net, a gated recurrent unit-based neural network that exploits sequential multi-layer beam measurements to capture angular dependencies, thereby improving estimation accuracy, robustness to noise, and generalization across diverse propagation environments. Third, to mitigate the adverse effects of hardware impairments such as antenna position and phase errors, we propose a parametric self-calibration method that requires no additional hardware overhead and adapts compensation parameters in real time. Simulation results show that the proposed framework consistently outperforms binary search and even exhaustive search at high signal-to-noise ratios, achieving substantial performance gains while maintaining low overhead.

Learning Query-Aware Budget-Tier Routing for Runtime Agent Memory

Feb 05, 2026

Abstract: Memory is increasingly central to Large Language Model (LLM) agents operating beyond a single context window, yet most existing systems rely on offline, query-agnostic memory construction that can be inefficient and may discard query-critical information. Although runtime memory utilization is a natural alternative, prior work often incurs substantial overhead and offers limited explicit control over the performance-cost trade-off. In this work, we present BudgetMem, a runtime agent memory framework for explicit, query-aware performance-cost control. BudgetMem structures memory processing as a set of memory modules, each offered in three budget tiers (i.e., Low/Mid/High). A lightweight router, implemented as a compact neural policy trained with reinforcement learning, performs budget-tier routing across modules to balance task performance and memory construction cost. Using BudgetMem as a unified testbed, we study three complementary strategies for realizing budget tiers: implementation (method complexity), reasoning (inference behavior), and capacity (module model size). Across LoCoMo, LongMemEval, and HotpotQA, BudgetMem surpasses strong baselines when performance is prioritized (i.e., high-budget setting), and delivers better accuracy-cost frontiers under tighter budgets. Moreover, our analysis disentangles the strengths and weaknesses of different tiering strategies, clarifying when each axis delivers the most favorable trade-offs under varying budget regimes.

A Unified View of Attention and Residual Sinks: Outlier-Driven Rescaling is Essential for Transformer Training

Jan 30, 2026

Abstract: We investigate the functional role of emergent outliers in large language models, specifically attention sinks (a few tokens that consistently receive large attention logits) and residual sinks (a few fixed dimensions with persistently large activations across most tokens). We hypothesize that these outliers, in conjunction with the corresponding normalizations (e.g., softmax attention and RMSNorm), effectively rescale other non-outlier components. We term this phenomenon outlier-driven rescaling and validate this hypothesis across different model architectures and training token counts. This view unifies the origin and mitigation of both sink types. Our main conclusions and observations include: (1) Outliers function jointly with normalization: removing normalization eliminates the corresponding outliers but degrades training stability and performance; directly clipping outliers while retaining normalization leads to degradation, indicating that outlier-driven rescaling contributes to training stability. (2) Outliers serve as rescale factors rather than contributors, as the final contributions of attention and residual sinks are significantly smaller than those of non-outliers. (3) Outliers can be absorbed into learnable parameters or mitigated via explicit gated rescaling, leading to improved training performance (average gain of 2 points) and enhanced quantization robustness (1.2 points degradation under W4A4 quantization).
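The rescaling effect described in this abstract can be illustrated with a toy softmax. The sketch below is not the authors' code; the logit values and variable names are made up for illustration. It adds one large "sink" logit to a softmax and checks that every non-sink weight shrinks by the same factor, so their relative proportions are unchanged.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits, chosen only for illustration.
content_logits = [1.0, 2.0, 0.5]   # ordinary (non-sink) tokens
sink_logit = 6.0                   # a token that receives a very large logit

without_sink = softmax(content_logits)
# Prepend the sink, then drop the sink's own weight from the result.
with_sink = softmax([sink_logit] + content_logits)[1:]

# Ratio of each non-sink weight with vs. without the sink present.
scale = [w / wo for w, wo in zip(with_sink, without_sink)]
print(scale)  # all entries are equal and smaller than 1
```

In this toy setting the sink contributes nothing but a shared scaling factor to the other weights, which is the sense in which a sink plus softmax normalization acts as a rescaler.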

AoI-Driven Queue Management and Power Control in V2V Networks: A GNN-Enhanced MARL Approach

Jan 27, 2026

Abstract: Queue management and resource allocation play a critical role in enabling cooperative status awareness in vehicular networks. This paper investigates the problem of age of information (AoI)-aware status updates in vehicle-to-vehicle (V2V) communication, where each vehicle's status is represented by multiple interdependent packets. To enable fine-grained queue management at the packet level under resource constraints, we formulate a joint optimization problem that simultaneously learns active packet dropping and transmit power control strategies. A hybrid action space is designed to support both discrete dropping decisions and continuous power control. To exploit the graph-structured interference inherent in V2V topology, a graph neural network (GNN) is introduced to aggregate slowly varying large-scale fading, allowing agents to capture topological dependencies implicitly without frequent message exchange. The overall framework is built upon multi-agent proximal policy optimization (MAPPO), with centralized training and decentralized execution (CTDE). Simulations demonstrate that the proposed method significantly reduces average AoI across a wide range of network densities, channel conditions, and traffic loads, consistently outperforming several baselines.

AMGFormer: Adaptive Multi-Granular Transformer for Brain Tumor Segmentation with Missing Modalities

Jan 27, 2026

Abstract: Multimodal MRI is essential for brain tumor segmentation, yet missing modalities in clinical practice cause existing methods to exhibit >40% performance variance across modality combinations, rendering them clinically unreliable. We propose AMGFormer, which achieves significantly improved stability through three synergistic modules: (1) QuadIntegrator Bridge (QIB), which enables spatially adaptive fusion that maintains consistent predictions regardless of available modalities, (2) Multi-Granular Attention Orchestrator (MGAO), which focuses on pathological regions to reduce background sensitivity, and (3) Modality Quality-Aware Enhancement (MQAE), which prevents error propagation from corrupted sequences. On BraTS 2018, our method achieves 89.33% WT, 82.70% TC, 67.23% ET Dice scores with <0.5% variance across 15 modality combinations, solving the stability crisis. Single-modality ET segmentation shows 40-81% relative improvements over state-of-the-art methods. The method generalizes to BraTS 2020/2021, achieving up to 92.44% WT, 89.91% TC, 84.57% ET. The model demonstrates potential for clinical deployment with 1.2s inference. Code: https://github.com/guochengxiangives/AMGFormer.

Hierarchical Long Video Understanding with Audiovisual Entity Cohesion and Agentic Search

Jan 20, 2026

Abstract: Long video understanding presents significant challenges for vision-language models due to extremely long context windows. Existing solutions that rely on naive chunking strategies with retrieval-augmented generation typically suffer from information fragmentation and a loss of global coherence. We present HAVEN, a unified framework for long-video understanding that enables coherent and comprehensive reasoning by integrating audiovisual entity cohesion and hierarchical video indexing with agentic search. First, we preserve semantic consistency by integrating entity-level representations across visual and auditory streams, while organizing content into a structured hierarchy spanning global summary, scene, segment, and entity levels. Then we employ an agentic search mechanism to enable dynamic retrieval and reasoning across these layers, facilitating coherent narrative reconstruction and fine-grained entity tracking. Extensive experiments demonstrate that our method achieves good temporal coherence, entity consistency, and retrieval efficiency, establishing a new state-of-the-art with an overall accuracy of 84.1% on LVBench. Notably, it achieves outstanding performance in the challenging reasoning category, reaching 80.1%. These results highlight the effectiveness of structured, multimodal reasoning for comprehensive and context-consistent understanding of long-form videos.

PartImageNet++ Dataset: Enhancing Visual Models with High-Quality Part Annotations

Jan 04, 2026

Abstract: To address the scarcity of high-quality part annotations in existing datasets, we introduce PartImageNet++ (PIN++), a dataset that provides detailed part annotations for all categories in ImageNet-1K. With 100 annotated images per category, totaling 100K images, PIN++ represents the most comprehensive dataset covering a diverse range of object categories. Leveraging PIN++, we propose a Multi-scale Part-supervised recognition Model (MPM) for robust classification on ImageNet-1K. We first trained a part segmentation network using PIN++ and used it to generate pseudo part labels for the remaining unannotated images. MPM then integrated a conventional recognition architecture with auxiliary bypass layers, jointly supervised by both pseudo part labels and the original part annotations. Furthermore, we conducted extensive experiments on PIN++, including part segmentation, object segmentation, and few-shot learning, exploring various ways to leverage part annotations in downstream tasks. Experimental results demonstrated that our approach not only enhanced part-based models for robust object recognition but also established strong baselines for multiple downstream tasks, highlighting the potential of part annotations in improving model performance. The dataset and the code are available at https://github.com/LixiaoTHU/PartImageNetPP.

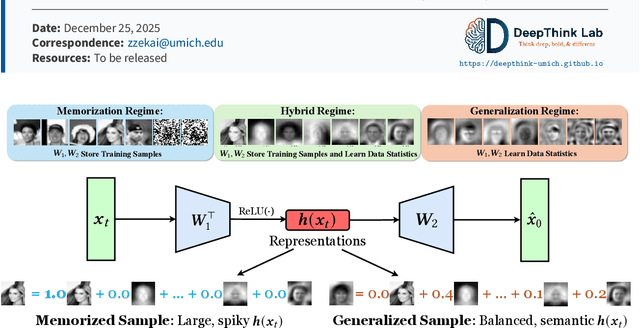

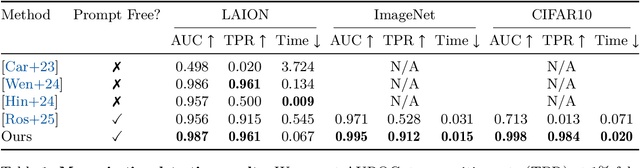

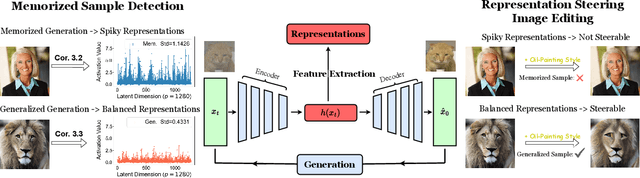

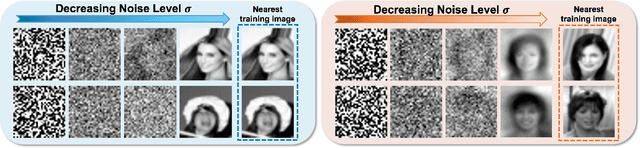

Generalization of Diffusion Models Arises with a Balanced Representation Space

Dec 24, 2025

Abstract: Diffusion models excel at generating high-quality, diverse samples, yet they risk memorizing training data when overfit to the training objective. We analyze the distinctions between memorization and generalization in diffusion models through the lens of representation learning. By investigating a two-layer ReLU denoising autoencoder (DAE), we prove that (i) memorization corresponds to the model storing raw training samples in the learned weights for encoding and decoding, yielding localized "spiky" representations, whereas (ii) generalization arises when the model captures local data statistics, producing "balanced" representations. Furthermore, we validate these theoretical findings on real-world unconditional and text-to-image diffusion models, demonstrating that the same representation structures emerge in deep generative models with significant practical implications. Building on these insights, we propose a representation-based method for detecting memorization and a training-free editing technique that allows precise control via representation steering. Together, our results highlight that learning good representations is central to novel and meaningful generative modeling.

OMP: One-step Meanflow Policy with Directional Alignment

Dec 22, 2025

Abstract: Robot manipulation, a key capability of embodied AI, has turned to data-driven generative policy frameworks, but mainstream approaches like Diffusion Models suffer from high inference latency and Flow-based Methods from increased architectural complexity. While simply applying MeanFlow to robotic tasks achieves single-step inference and outperforms FlowPolicy, it lacks few-shot generalization due to fixed temperature hyperparameters in its Dispersive Loss and misaligned predicted-true mean velocities. To solve these issues, this study proposes an improved MeanFlow-based policy: we introduce a lightweight Cosine Loss to align velocity directions and use the Differential Derivation Equation (DDE) to optimize the Jacobian-Vector Product (JVP) operator. Experiments on Adroit and Meta-World tasks show that the proposed method outperforms MP1 and FlowPolicy in average success rate, especially on challenging Meta-World tasks. It enhances few-shot generalization and trajectory accuracy of robot manipulation policies while maintaining real-time performance, offering a more robust solution for high-precision robotic manipulation.
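The abstract does not give the exact form of the Cosine Loss, so the following is only a minimal sketch of a common direction-alignment loss, `1 - cos(pred, target)`, written in plain Python. The function name `cosine_direction_loss` and the `eps` parameter are assumptions made for illustration, not the paper's definitions.

```python
import math

def cosine_direction_loss(pred, target, eps=1e-8):
    """Return 1 - cosine similarity between two velocity vectors.

    The loss is near 0 when the vectors point the same way (regardless of
    magnitude), 1 when they are orthogonal, and near 2 when opposite.
    `eps` guards against division by zero for zero-length vectors.
    """
    dot = sum(p * t for p, t in zip(pred, target))
    norm_p = math.sqrt(sum(p * p for p in pred))
    norm_t = math.sqrt(sum(t * t for t in target))
    return 1.0 - dot / (norm_p * norm_t + eps)

# Hypothetical predicted and true mean velocities.
print(cosine_direction_loss([1.0, 0.0], [3.0, 0.0]))   # same direction
print(cosine_direction_loss([1.0, 0.0], [0.0, 2.0]))   # orthogonal
print(cosine_direction_loss([1.0, 0.0], [-3.0, 0.0]))  # opposite direction
```

Because the loss ignores magnitude, it would normally be paired with a separate term that matches velocity norms; how the paper combines the two is not stated in the abstract.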

Timely Parameter Updating in Over-the-Air Federated Learning

Dec 22, 2025

Abstract: Incorporating over-the-air computations (OAC) into the model training process of federated learning (FL) is an effective approach to alleviating the communication bottleneck in FL systems. Under OAC-FL, every client modulates its intermediate parameters, such as gradient, onto the same set of orthogonal waveforms and simultaneously transmits the radio signal to the edge server. By exploiting the superposition property of multiple-access channels, the edge server can obtain an automatically aggregated global gradient from the received signal. However, the limited number of orthogonal waveforms available in practical systems is fundamentally mismatched with the high dimensionality of modern deep learning models. To address this issue, we propose Freshness-mAgnItude awaRe top-k (FAIR-k), an algorithm that selects, in each communication round, the most impactful subset of gradients to be updated over the air. In essence, FAIR-k combines the complementary strengths of the Round-Robin and Top-k algorithms, striking a delicate balance between timeliness (freshness of parameter updates) and importance (gradient magnitude). Leveraging tools from Markov analysis, we characterize the distribution of parameter staleness under FAIR-k. Building on this, we establish the convergence rate of OAC-FL with FAIR-k, which discloses the joint effect of data heterogeneity, channel noise, and parameter staleness on the training efficiency. Notably, as opposed to conventional analyses that assume a universal Lipschitz constant across all the clients, our framework adopts a finer-grained model of the data heterogeneity. The analysis demonstrates that since FAIR-k promotes fresh (and fair) parameter updates, it not only accelerates convergence but also enhances communication efficiency by enabling an extended period of local training without significantly affecting overall training efficiency.
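The abstract does not specify how FAIR-k scores coordinates, so the sketch below is not the paper's algorithm. It only illustrates the general idea of trading off gradient magnitude against staleness, using a hypothetical weighted score; the function `select_fresh_topk` and the `freshness_weight` parameter are assumptions introduced here. Setting the weight to 0 gives plain Top-k, and setting it to 1 picks the stalest coordinates, which resembles Round-Robin behavior.

```python
def select_fresh_topk(grad, staleness, k, freshness_weight=0.5):
    """Pick k coordinates by a hypothetical magnitude/staleness score.

    grad      : list of gradient entries
    staleness : rounds since each coordinate was last updated
    Returns (sorted chosen indices, updated staleness list).
    """
    # Normalize both terms to [0, 1]; fall back to 1 if the maximum is 0.
    max_mag = max(abs(g) for g in grad) or 1.0
    max_age = max(staleness) or 1
    scores = [
        (1 - freshness_weight) * abs(g) / max_mag
        + freshness_weight * age / max_age
        for g, age in zip(grad, staleness)
    ]
    order = sorted(range(len(grad)), key=lambda i: scores[i], reverse=True)
    chosen = order[:k]
    # Selected coordinates become fresh; all others age by one round.
    new_staleness = [0 if i in chosen else age + 1
                     for i, age in enumerate(staleness)]
    return sorted(chosen), new_staleness

# Hypothetical gradient and staleness values for four coordinates.
grad = [0.9, 0.1, 0.8, 0.05]
staleness = [0, 5, 0, 1]
print(select_fresh_topk(grad, staleness, k=2, freshness_weight=0.0))
print(select_fresh_topk(grad, staleness, k=2, freshness_weight=1.0))
print(select_fresh_topk(grad, staleness, k=2, freshness_weight=0.5))
```

With the intermediate weight, the small but long-neglected coordinate 1 is selected alongside the largest-magnitude coordinate 0, which is the kind of balance between timeliness and importance the abstract describes.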
