Yixuan Huang

Composable Visual Tokenizers with Generator-Free Diagnostics of Learnability

Feb 03, 2026

Abstract:We introduce CompTok, a training framework for learning visual tokenizers whose tokens are enhanced for compositionality. CompTok uses a token-conditioned diffusion decoder. By employing an InfoGAN-style objective, in which a recognition model is trained to predict, from the decoded images, the tokens used to condition the diffusion decoder, we force the decoder not to ignore any of the tokens. To promote compositional control, CompTok trains not only on the original images but also on tokens formed by swapping token subsets between images, giving the tokens more compositional control over the decoder. As the swapped tokens do not have ground-truth image targets, we apply a manifold constraint via an adversarial flow regularizer to keep unpaired swap generations on the natural-image distribution. The resulting tokenizer not only achieves state-of-the-art performance on class-conditioned image generation, but also supports high-level semantic editing of an image by swapping tokens between images. Additionally, we propose two metrics that measure the landscape of the token space and are useful for describing not only the compositionality of the tokens but also how easy the landscape is for a generator trained on this space to learn. Our experiments show that CompTok improves on both metrics and supports state-of-the-art generators for class-conditioned generation.
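The token-swapping step described in the CompTok abstract can be illustrated with a minimal sketch. It assumes each image is encoded as a flat, equal-length list of integer token ids; the function name `swap_token_subsets` and the choice of positions are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence, Tuple


def swap_token_subsets(
    tokens_a: Sequence[int],
    tokens_b: Sequence[int],
    positions: Sequence[int],
) -> Tuple[List[int], List[int]]:
    """Exchange the tokens at `positions` between two equal-length sequences."""
    if len(tokens_a) != len(tokens_b):
        raise ValueError("token sequences must have the same length")
    swapped_a, swapped_b = list(tokens_a), list(tokens_b)
    for p in positions:
        # Always read from the untouched inputs so repeated positions are harmless.
        swapped_a[p], swapped_b[p] = tokens_b[p], tokens_a[p]
    return swapped_a, swapped_b


# Hypothetical token ids for two images (illustrative values only).
tokens_a = [11, 12, 13, 14]
tokens_b = [21, 22, 23, 24]
mixed_a, mixed_b = swap_token_subsets(tokens_a, tokens_b, positions=[1, 3])
print(mixed_a)  # [11, 22, 13, 24]
print(mixed_b)  # [21, 12, 23, 14]
```

The mixed sequences have no paired target image, which is why the abstract pairs this step with a regularizer that keeps the decoded results on the natural-image distribution.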

Hierarchical DLO Routing with Reinforcement Learning and In-Context Vision-language Models

Oct 22, 2025

Abstract:Long-horizon routing tasks of deformable linear objects (DLOs), such as cables and ropes, are common in industrial assembly lines and everyday life. These tasks are particularly challenging because they require robots to manipulate DLOs with long-horizon planning and reliable skill execution. Successfully completing such tasks demands adapting to their nonlinear dynamics, decomposing abstract routing goals, and generating multi-step plans composed of multiple skills, all of which require accurate high-level reasoning during execution. In this paper, we propose a fully autonomous hierarchical framework for solving challenging DLO routing tasks. Given an implicit or explicit routing goal expressed in language, our framework leverages vision-language models (VLMs) for in-context high-level reasoning to synthesize feasible plans, which are then executed by low-level skills trained via reinforcement learning. To improve robustness over long horizons, we further introduce a failure recovery mechanism that reorients the DLO into insertion-feasible states. Our approach generalizes to diverse scenes involving object attributes, spatial descriptions, and implicit language commands. It outperforms the next best baseline method by nearly 50% and achieves an overall success rate of 92.5% across long-horizon routing scenarios.

Learned Intelligent Recognizer with Adaptively Customized RIS Phases in Communication Systems

May 05, 2025

Abstract:This study presents an advanced wireless system that embeds target recognition within reconfigurable intelligent surface (RIS)-aided communication systems, powered by cutting-edge deep learning innovations. Such a system faces the challenge of fine-tuning both the RIS phase shifts and neural network (NN) parameters, since they depend intricately on each other to accomplish the recognition task. To address this challenge, we propose an intelligent recognizer that strategically harnesses all prior action responses, multiplexing downlink signals to facilitate environment sensing. Specifically, we design a novel NN based on the long short-term memory (LSTM) architecture and the physical channel model. The NN iteratively captures and fuses information from previous measurements and adaptively customizes RIS configurations to acquire the most relevant information for the recognition task in subsequent moments. These dynamically tailored configurations adapt to the scene, task, and target specifics. Simulation results reveal that our proposed method significantly outperforms the state-of-the-art method, with minimal impact on communication performance, even as sensing is performed simultaneously.

Cooperative ISAC Network for Off-Grid Imaging-based Low-Altitude Surveillance

May 05, 2025

Abstract:The low-altitude economy has emerged as a critical focus for future economic development, emphasizing the urgent need for flight activity surveillance utilizing the existing sensing capabilities of mobile cellular networks. Traditional monostatic or localization-based sensing methods, however, encounter challenges in fusing sensing results and matching channel parameters. To address these challenges, we propose an innovative approach that directly draws the radio images of the low-altitude space, leveraging its inherent sparsity with compressed sensing (CS)-based algorithms and the cooperation of multiple base stations. Furthermore, recognizing that unmanned aerial vehicles (UAVs) are randomly distributed in space, we introduce a physics-embedded learning method to overcome off-grid issues inherent in CS-based models. Additionally, an online hard example mining method is incorporated into the design of the loss function, enabling the network to adaptively concentrate on the samples bearing significant discrepancy with the ground truth, thereby enhancing its ability to detect the rare UAVs within the expansive low-altitude space. Simulation results demonstrate the effectiveness of the imaging-based low-altitude surveillance approach, with the proposed physics-embedded learning algorithm significantly outperforming traditional CS-based methods under off-grid conditions.
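The idea of online hard example mining in a loss function can be sketched as follows. This is a generic top-k variant under stated assumptions, not the loss from the paper: it uses squared error per image cell, and the function name `ohem_mse` and the `keep_ratio` parameter are hypothetical.

```python
from typing import Sequence


def ohem_mse(
    pred: Sequence[float],
    target: Sequence[float],
    keep_ratio: float = 0.25,
) -> float:
    """Mean squared error over only the hardest `keep_ratio` fraction of cells."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and equally long")
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    # Rank cells by squared error, largest (hardest) first.
    errors = sorted(((p - t) ** 2 for p, t in zip(pred, target)), reverse=True)
    k = max(1, int(len(errors) * keep_ratio))
    return sum(errors[:k]) / k


# A sparse "radio image": one occupied cell out of eight (illustrative values).
target = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
pred = [0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0]

print(ohem_mse(pred, target, keep_ratio=1.0))   # 0.03125 (plain MSE)
print(ohem_mse(pred, target, keep_ratio=0.25))  # 0.125
```

With a sparse target, the plain average is dominated by the many easy empty cells, while the mined loss keeps the poorly reconstructed occupied cell prominent.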

MMKB-RAG: A Multi-Modal Knowledge-Based Retrieval-Augmented Generation Framework

Apr 15, 2025

Abstract:Recent advancements in large language models (LLMs) and multi-modal LLMs have been remarkable. However, these models still rely solely on their parametric knowledge, which limits their ability to generate up-to-date information and increases the risk of producing erroneous content. Retrieval-Augmented Generation (RAG) partially mitigates these challenges by incorporating external data sources, yet the reliance on databases and retrieval systems can introduce irrelevant or inaccurate documents, ultimately undermining both performance and reasoning quality. In this paper, we propose Multi-Modal Knowledge-Based Retrieval-Augmented Generation (MMKB-RAG), a novel multi-modal RAG framework that leverages the inherent knowledge boundaries of models to dynamically generate semantic tags for the retrieval process. This strategy enables the joint filtering of retrieved documents, retaining only the most relevant and accurate references. Extensive experiments on knowledge-based visual question-answering tasks demonstrate the efficacy of our approach: on the E-VQA dataset, our method improves performance by +4.2% on the Single-Hop subset and +0.4% on the full dataset, while on the InfoSeek dataset, it achieves gains of +7.8% on the Unseen-Q subset, +8.2% on the Unseen-E subset, and +8.1% on the full dataset. These results highlight significant enhancements in both accuracy and robustness over the current state-of-the-art MLLM and RAG frameworks.

Integrated Communication and Learned Recognizer with Customized RIS Phases and Sensing Durations

Mar 04, 2025

Abstract:Future wireless communication networks are expected to be smarter and more aware of their surroundings, enabling a wide range of context-aware applications. Reconfigurable intelligent surfaces (RISs) are set to play a critical role in supporting various sensing tasks, such as target recognition. However, current methods typically use RIS configurations optimized once and applied over fixed sensing durations, limiting their ability to adapt to different targets and reducing sensing accuracy. To overcome these limitations, this study proposes an advanced wireless communication system that multiplexes downlink signals for environmental sensing and introduces an intelligent recognizer powered by deep learning techniques. Specifically, we design a novel neural network based on the long short-term memory architecture and the physical channel model. This network iteratively captures and fuses information from previous measurements, adaptively customizing RIS phases to gather the most relevant information for the recognition task at subsequent moments. These configurations are dynamically adjusted according to scene, task, target, and quantization priors. Furthermore, the recognizer includes a decision-making module that dynamically allocates different sensing durations, determining whether to continue or terminate the sensing process based on the collected measurements. This approach maximizes resource utilization efficiency. Simulation results demonstrate that the proposed method significantly outperforms state-of-the-art techniques while minimizing the impact on communication performance, even when sensing and communication occur simultaneously. Part of the source code for this paper can be accessed at https://github.com/kiwi1944/CRISense.
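The continue-or-terminate decision described above can be illustrated with a simple confidence-threshold rule. This is only one plausible stopping rule, assumed here for illustration; the abstract does not specify how the decision-making module works, and the name `sense_until_confident` and its parameters are hypothetical.

```python
from typing import Iterable, List, Tuple


def sense_until_confident(
    posteriors: Iterable[List[float]],
    threshold: float = 0.9,
    max_steps: int = 10,
) -> Tuple[int, int]:
    """Return (predicted_class, steps_used), stopping at the first confident step."""
    steps_used = 0
    best_class = -1  # stays -1 if no measurement is ever provided
    for probs in posteriors:
        steps_used += 1
        best_class = max(range(len(probs)), key=probs.__getitem__)
        # Terminate once the top class is confident enough or the budget is spent.
        if probs[best_class] >= threshold or steps_used >= max_steps:
            break
    return best_class, steps_used


# Hypothetical class posteriors after each successive measurement.
posteriors = [[0.40, 0.60], [0.30, 0.70], [0.05, 0.95], [0.01, 0.99]]
print(sense_until_confident(posteriors))  # (1, 3)
```

Easy targets reach the threshold after few measurements and release sensing resources early, while ambiguous ones use more of the budget.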

RainGaugeNet: CSI-Based Sub-6 GHz Rainfall Attenuation Measurement and Classification for ISAC Applications

Jan 04, 2025

Abstract:Rainfall impacts daily activities and can lead to severe hazards such as flooding. Traditional rainfall measurement systems often lack granularity or require extensive infrastructure. While the attenuation of electromagnetic waves due to rainfall is well-documented for frequencies above 10 GHz, sub-6 GHz bands are typically assumed to experience negligible effects. However, recent studies suggest measurable attenuation even at these lower frequencies. This study presents the first channel state information (CSI)-based measurement and analysis of rainfall attenuation at 2.8 GHz. The results confirm the presence of rain-induced attenuation at this frequency, although classification remains challenging. The attenuation follows a power-law decay model, with the rate of attenuation decreasing as rainfall intensity increases. Additionally, rainfall onset significantly increases the delay spread. Building on these insights, we propose RainGaugeNet, the first CSI-based rainfall classification model that leverages multipath and temporal features. Using only 20 seconds of CSI data, RainGaugeNet achieved over 90% classification accuracy in line-of-sight scenarios and over 85% in non-line-of-sight scenarios, significantly outperforming state-of-the-art methods.

Advancing Myopia To Holism: Fully Contrastive Language-Image Pre-training

Nov 30, 2024

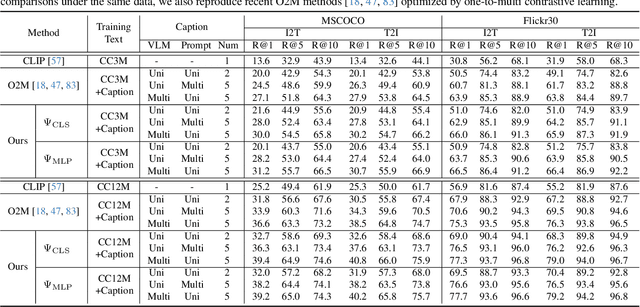

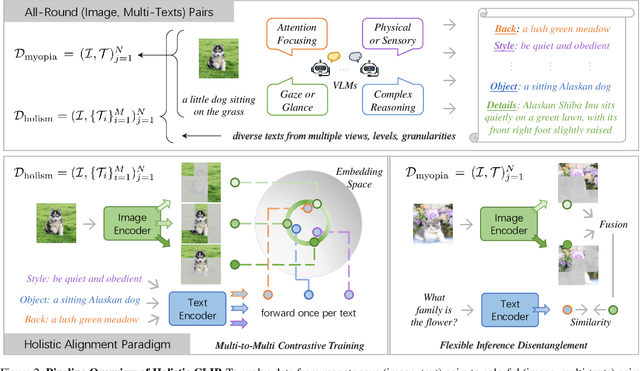

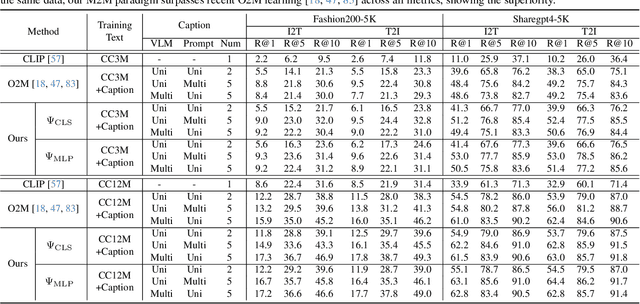

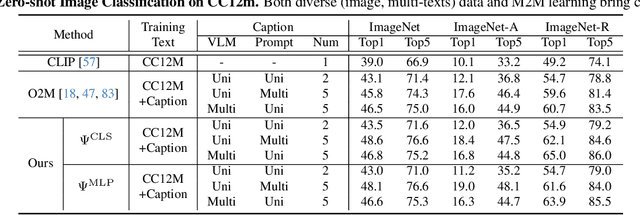

Abstract:In the rapidly evolving field of vision-language models (VLMs), contrastive language-image pre-training (CLIP) has made significant strides, becoming a foundation for various downstream tasks. However, by relying on a one-to-one (image, text) contrastive paradigm to learn alignment from large-scale messy web data, CLIP faces a serious myopic dilemma, resulting in biases towards monotonous short texts and shallow visual expressivity. To overcome these issues, this paper advances CLIP into a novel holistic paradigm by updating both the data diversity and the alignment optimization. To obtain colorful data at low cost, we use image-to-text captioning to generate multiple texts for each image, from multiple perspectives, granularities, and hierarchies. Two gadgets are proposed to encourage textual diversity. To match such (image, multi-texts) pairs, we modify the CLIP image encoder into a multi-branch design and propose multi-to-multi contrastive optimization for image-text part-to-part matching. As a result, diverse visual embeddings are learned for each image, bringing good interpretability and generalization. Extensive experiments and ablations across over ten benchmarks indicate that our holistic CLIP significantly outperforms the existing myopic CLIP on tasks including image-text retrieval, open-vocabulary classification, and dense visual tasks.

Points2Plans: From Point Clouds to Long-Horizon Plans with Composable Relational Dynamics

Aug 27, 2024

Abstract:We present Points2Plans, a framework for composable planning with a relational dynamics model that enables robots to solve long-horizon manipulation tasks from partial-view point clouds. Given a language instruction and a point cloud of the scene, our framework initiates a hierarchical planning procedure, whereby a language model generates a high-level plan and a sampling-based planner produces constraint-satisfying continuous parameters for manipulation primitives sequenced according to the high-level plan. Key to our approach is the use of a relational dynamics model as a unifying interface between the continuous and symbolic representations of states and actions, thus facilitating language-driven planning from high-dimensional perceptual input such as point clouds. Whereas previous relational dynamics models require training on datasets of multi-step manipulation scenarios that align with the intended test scenarios, Points2Plans uses only single-step simulated training data while generalizing zero-shot to a variable number of steps during real-world evaluations. We evaluate our approach on tasks involving geometric reasoning, multi-object interactions, and occluded object reasoning in both simulated and real-world settings. Results demonstrate that Points2Plans offers strong generalization to unseen long-horizon tasks in the real world, where it solves over 85% of evaluated tasks while the next best baseline solves only 50%. Qualitative demonstrations of our approach operating on a mobile manipulator platform are made available at sites.google.com/stanford.edu/points2plans.

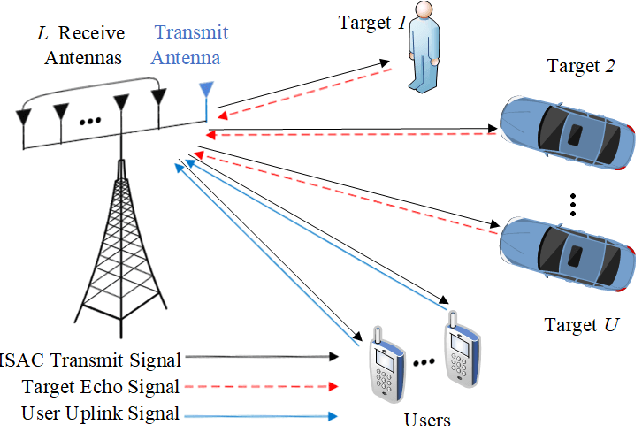

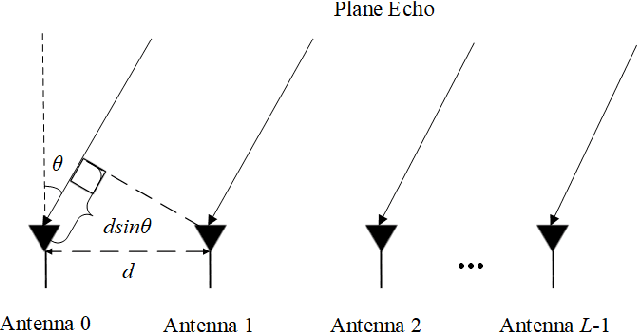

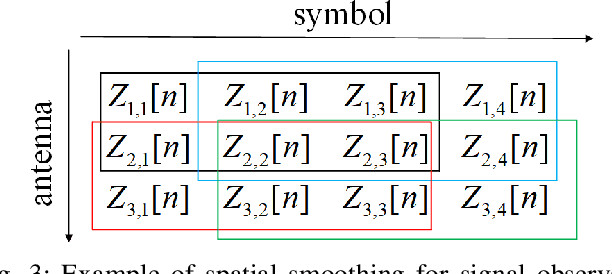

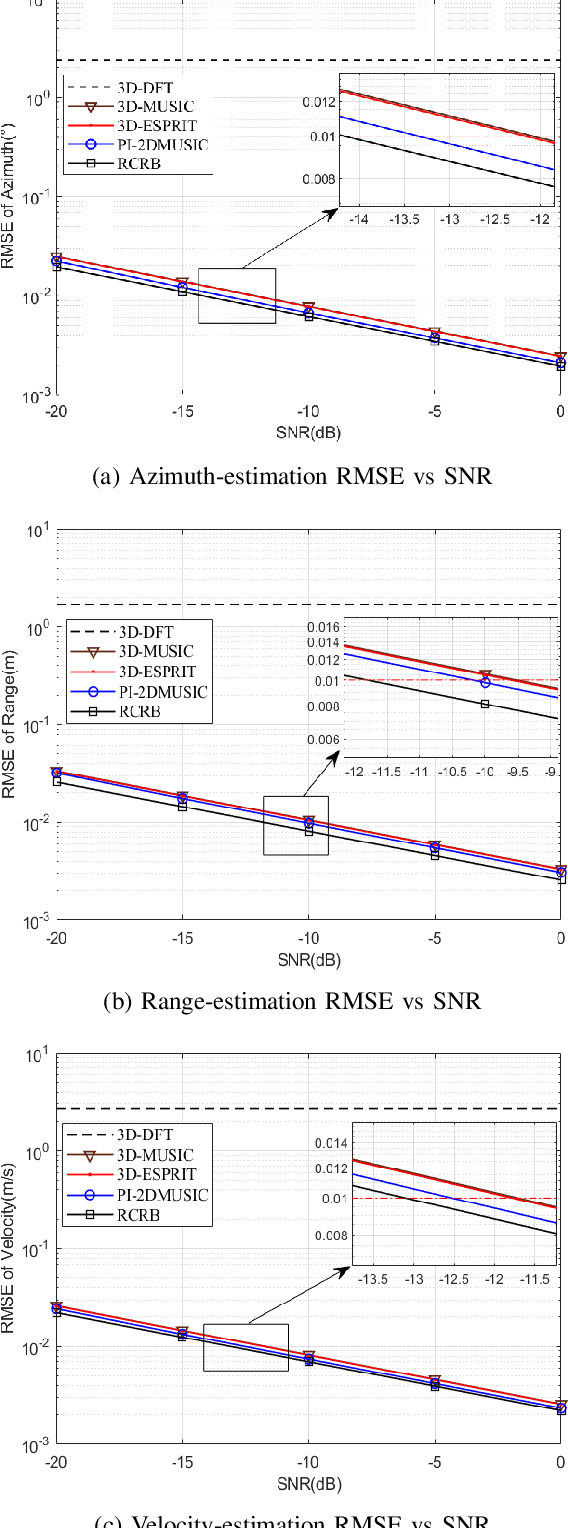

Low-Complexity Joint Azimuth-Range-Velocity Estimation for Integrated Sensing and Communication with OFDM Waveform

May 15, 2024

Abstract:Integrated sensing and communication (ISAC) is a main application scenario of the sixth-generation mobile communication systems. Due to the fast-growing number of antennas and subcarriers in cellular systems, the computational complexity of joint azimuth-range-velocity estimation (JARVE) in ISAC systems is extremely high. This paper studies the JARVE problem for a monostatic ISAC system with an orthogonal frequency division multiplexing (OFDM) waveform, in which a base station receives the echoes of its transmitted cellular OFDM signals to sense multiple targets. The Cramér-Rao bounds are first derived for JARVE. A low-complexity algorithm is further designed for super-resolution JARVE, which utilizes the proposed iterative subspace update scheme and the Levenberg-Marquardt optimization method to replace the exhaustive search of the spatial spectrum in the multiple-signal-classification (MUSIC) algorithm. Finally, with the practical parameters of 5G New Radio, simulation results verify that the proposed algorithm reduces the computational complexity by three orders of magnitude compared to the existing three-dimensional MUSIC algorithm and by two orders of magnitude compared to the estimation-of-signal-parameters-using-rotational-invariance-techniques (ESPRIT) algorithm, while also improving the estimation performance.
