Hao Ye

Reducing Pilots in Channel Estimation With Predictive Foundation Models

Dec 17, 2025

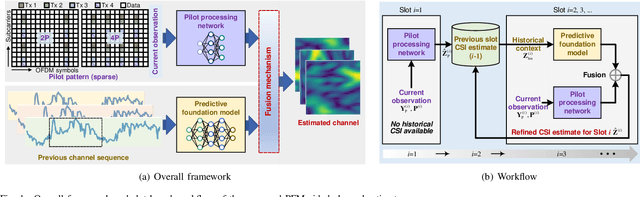

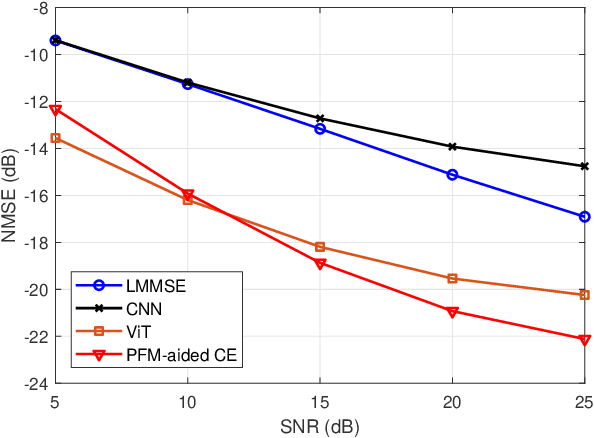

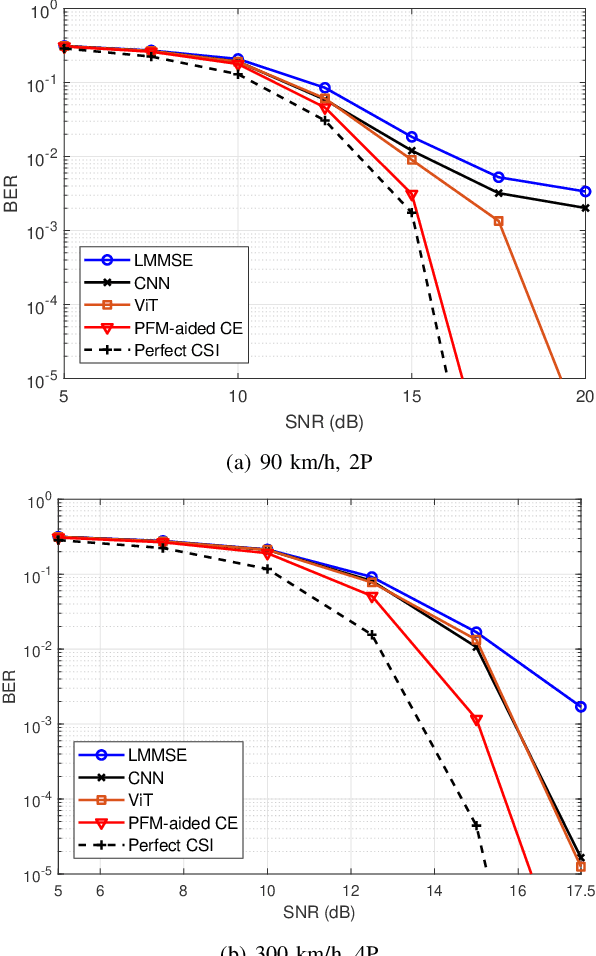

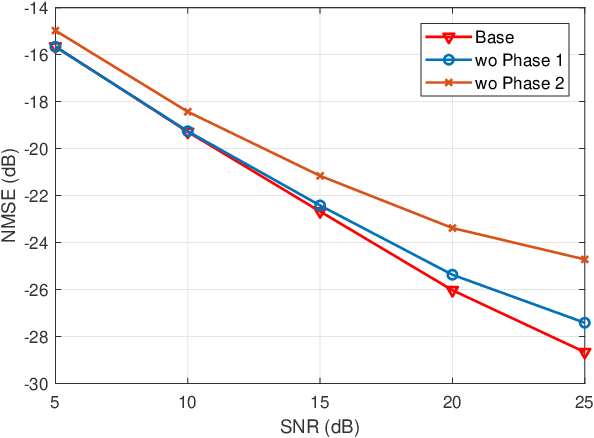

Abstract: Accurate channel state information (CSI) acquisition is essential for modern wireless systems, but it becomes increasingly difficult under large antenna arrays, strict pilot overhead constraints, and diverse deployment environments. Existing artificial intelligence-based solutions often lack robustness and fail to generalize across scenarios. To address this limitation, this paper introduces a predictive-foundation-model-based channel estimation framework that enables accurate, low-overhead, and generalizable CSI acquisition. The proposed framework employs a predictive foundation model trained on large-scale cross-domain CSI data to extract universal channel representations and provide predictive priors with strong cross-scenario transferability. A pilot processing network based on a vision transformer architecture is further designed to capture spatial, temporal, and frequency correlations from pilot observations. An efficient fusion mechanism integrates predictive priors with real-time measurements, enabling reliable CSI reconstruction even under sparse or noisy conditions. Extensive evaluations across diverse configurations demonstrate that the proposed estimator significantly outperforms both classical and data-driven baselines in accuracy, robustness, and generalization capability.

SoccerNet 2025 Challenges Results

Aug 26, 2025

Abstract: The SoccerNet 2025 Challenges mark the fifth annual edition of the SoccerNet open benchmarking effort, dedicated to advancing computer vision research in football video understanding. This year's challenges span four vision-based tasks: (1) Team Ball Action Spotting, focused on detecting ball-related actions in football broadcasts and assigning actions to teams; (2) Monocular Depth Estimation, targeting the recovery of scene geometry from single-camera broadcast clips through relative depth estimation for each pixel; (3) Multi-View Foul Recognition, requiring the analysis of multiple synchronized camera views to classify fouls and their severity; and (4) Game State Reconstruction, aimed at localizing and identifying all players from a broadcast video to reconstruct the game state on a 2D top-view of the field. Across all tasks, participants were provided with large-scale annotated datasets, unified evaluation protocols, and strong baselines as starting points. This report presents the results of each challenge, highlights the top-performing solutions, and provides insights into the progress made by the community. The SoccerNet Challenges continue to serve as a driving force for reproducible, open research at the intersection of computer vision, artificial intelligence, and sports. Detailed information about the tasks, challenges, and leaderboards can be found at https://www.soccer-net.org, with baselines and development kits available at https://github.com/SoccerNet.

SComCP: Task-Oriented Semantic Communication for Collaborative Perception

Jul 01, 2025

Abstract: Reliable detection of surrounding objects is critical for the safe operation of connected automated vehicles (CAVs). However, inherent limitations such as the restricted perception range and occlusion effects compromise the reliability of single-vehicle perception systems in complex traffic environments. Collaborative perception has emerged as a promising approach by fusing sensor data from surrounding CAVs with diverse viewpoints, thereby improving environmental awareness. Although collaborative perception holds great promise, its performance is bottlenecked by wireless communication constraints, as unreliable and bandwidth-limited channels hinder the transmission of sensor data necessary for real-time perception. To address these challenges, this paper proposes SComCP, a novel task-oriented semantic communication framework for collaborative perception. Specifically, SComCP integrates an importance-aware feature selection network that selects and transmits semantic features most relevant to the perception task, significantly reducing communication overhead without sacrificing accuracy. Furthermore, we design a semantic codec network based on a joint source and channel coding (JSCC) architecture, which enables bidirectional transformation between semantic features and noise-tolerant channel symbols, thereby ensuring stable perception under adverse wireless conditions. Extensive experiments demonstrate the effectiveness of the proposed framework. In particular, compared to existing approaches, SComCP can maintain superior perception performance across various channel conditions, especially in low signal-to-noise ratio (SNR) scenarios. In addition, SComCP exhibits strong generalization capability, enabling the framework to maintain high performance across diverse channel conditions, even when trained with a specific channel model.

AgentThink: A Unified Framework for Tool-Augmented Chain-of-Thought Reasoning in Vision-Language Models for Autonomous Driving

May 21, 2025

Abstract: Vision-Language Models (VLMs) show promise for autonomous driving, yet their struggle with hallucinations, inefficient reasoning, and limited real-world validation hinders accurate perception and robust step-by-step reasoning. To overcome this, we introduce AgentThink, a pioneering unified framework that, for the first time, integrates Chain-of-Thought (CoT) reasoning with dynamic, agent-style tool invocation for autonomous driving tasks. AgentThink's core innovations include: (i) Structured Data Generation, by establishing an autonomous driving tool library to automatically construct structured, self-verified reasoning data explicitly incorporating tool usage for diverse driving scenarios; (ii) A Two-stage Training Pipeline, employing Supervised Fine-Tuning (SFT) with Group Relative Policy Optimization (GRPO) to equip VLMs with the capability for autonomous tool invocation; and (iii) Agent-style Tool-Usage Evaluation, introducing a novel multi-tool assessment protocol to rigorously evaluate the model's tool invocation and utilization. Experiments on the DriveLMM-o1 benchmark demonstrate AgentThink significantly boosts overall reasoning scores by 53.91% and enhances answer accuracy by 33.54%, while markedly improving reasoning quality and consistency. Furthermore, ablation studies and robust zero-shot/few-shot generalization experiments across various benchmarks underscore its powerful capabilities. These findings highlight a promising trajectory for developing trustworthy and tool-aware autonomous driving models.

Power Allocation for Delay Optimization in Device-to-Device Networks: A Graph Reinforcement Learning Approach

May 19, 2025

Abstract: The pursuit of rate maximization in wireless communication frequently encounters substantial challenges associated with user fairness. This paper addresses these challenges by exploring a novel power allocation approach for delay optimization, utilizing graph neural network (GNN)-based reinforcement learning (RL) in device-to-device (D2D) communication. The proposed approach incorporates not only channel state information but also factors such as packet delay, the number of backlogged packets, and the number of transmitted packets into the components of the state information. We adopt a centralized RL method, where a central controller collects and processes the state information. The central controller functions as an agent trained using the proximal policy optimization (PPO) algorithm. To better utilize topology information in the communication network and enhance the generalization of the proposed method, we embed GNN layers into both the actor and critic networks of the PPO algorithm. This integration allows for efficient parameter updates of GNNs and enables the state information to be parameterized as a low-dimensional embedding, which is leveraged by the agent to optimize power allocation strategies. Simulation results demonstrate that the proposed method effectively reduces average delay while ensuring user fairness, outperforms baseline methods, and exhibits scalability and generalization capability.

DD-Ranking: Rethinking the Evaluation of Dataset Distillation

May 19, 2025

Abstract: In recent years, dataset distillation (DD) has provided a reliable solution for data compression, where models trained on the resulting smaller synthetic datasets achieve performance comparable to those trained on the original datasets. To further improve the performance of synthetic datasets, various training pipelines and optimization objectives have been proposed, greatly advancing the field of dataset distillation. Recent decoupled dataset distillation methods introduce soft labels and stronger data augmentation during the post-evaluation phase and scale dataset distillation up to larger datasets (e.g., ImageNet-1K). However, this raises a question: Is accuracy still a reliable metric to fairly evaluate dataset distillation methods? Our empirical findings suggest that the performance improvements of these methods often stem from additional techniques rather than the inherent quality of the images themselves, with even randomly sampled images achieving superior results. Such misaligned evaluation settings severely hinder the development of DD. Therefore, we propose DD-Ranking, a unified evaluation framework, along with new general evaluation metrics to uncover the true performance improvements achieved by different methods. By refocusing on the actual information enhancement of distilled datasets, DD-Ranking provides a more comprehensive and fair evaluation standard for future research advancements.

Small-Scale-Fading-Aware Resource Allocation in Wireless Federated Learning

May 06, 2025

Abstract: Judicious resource allocation can effectively enhance federated learning (FL) training performance in wireless networks by addressing both system and statistical heterogeneity. However, existing strategies typically rely on block fading assumptions, which overlook rapid channel fluctuations within each round of FL gradient uploading, leading to a degradation in FL training performance. Therefore, this paper proposes a small-scale-fading-aware resource allocation strategy using a multi-agent reinforcement learning (MARL) framework. Specifically, we establish a one-step convergence bound of the FL algorithm and formulate the resource allocation problem as a decentralized partially observable Markov decision process (Dec-POMDP), which is subsequently solved using the QMIX algorithm. In our framework, each client serves as an agent that dynamically determines spectrum and power allocations within each coherence time slot, based on local observations and a reward derived from the convergence analysis. The MARL setting reduces the dimensionality of the action space and facilitates decentralized decision-making, enhancing the scalability and practicality of the solution. Experimental results demonstrate that our QMIX-based resource allocation strategy significantly outperforms baseline methods across various degrees of statistical heterogeneity. Additionally, ablation studies validate the critical importance of incorporating small-scale fading dynamics, highlighting its role in optimizing FL performance.

Clinically Ready Magnetic Microrobots for Targeted Therapies

Jan 20, 2025

Abstract: Systemic drug administration often causes off-target effects, limiting the efficacy of advanced therapies. Targeted drug delivery approaches increase local drug concentrations at the diseased site while minimizing systemic drug exposure. We present a magnetically guided microrobotic drug delivery system capable of precise navigation under physiological conditions. This platform integrates a clinical electromagnetic navigation system, a custom-designed release catheter, and a dissolvable capsule for accurate therapeutic delivery. In vitro tests showed precise navigation in human vasculature models, and in vivo experiments confirmed tracking under fluoroscopy and successful navigation in large animal models. The microrobot balances magnetic material concentration, contrast agent loading, and therapeutic drug capacity, enabling effective hosting of therapeutics despite the integration complexity of its components, offering a promising solution for precise targeted drug delivery.

Action Quality Assessment via Hierarchical Pose-guided Multi-stage Contrastive Regression

Jan 07, 2025

Abstract: Action Quality Assessment (AQA), which aims at automatic and fair evaluation of athletic performance, has gained increasing attention in recent years. However, athletes are often in rapid movement and the corresponding visual appearance variances are subtle, making it challenging to capture fine-grained pose differences and leading to poor estimation performance. Furthermore, most common AQA tasks, such as diving, are usually divided into multiple sub-actions, each with a different duration. However, existing methods focus on segmenting the video into fixed frames, which disrupts the temporal continuity of sub-actions, resulting in unavoidable prediction errors. To address these challenges, we propose a novel action quality assessment method through hierarchically pose-guided multi-stage contrastive regression. Firstly, we introduce a multi-scale dynamic visual-skeleton encoder to capture fine-grained spatio-temporal visual and skeletal features. Then, a procedure segmentation network is introduced to separate different sub-actions and obtain segmented features. Afterwards, the segmented visual and skeletal features are both fed into a multi-modal fusion module as physics structural priors, to guide the model in learning refined activity similarities and variances. Finally, a multi-stage contrastive learning regression approach is employed to learn discriminative representations and output prediction results. In addition, we introduce a newly-annotated FineDiving-Pose Dataset to improve the current low-quality human pose labels. In experiments, the results on FineDiving and MTL-AQA datasets demonstrate the effectiveness and superiority of our proposed approach. Our source code and dataset are available at https://github.com/Lumos0507/HP-MCoRe.

Meta-Learning Empowered Graph Neural Networks for Radio Resource Management

Aug 29, 2024

Abstract: In this paper, we consider a radio resource management (RRM) problem in dynamic wireless networks, comprising multiple communication links that share the same spectrum resource. To achieve high network throughput while ensuring fairness across all links, we formulate a resilient power optimization problem with per-user minimum-rate constraints. We obtain the corresponding Lagrangian dual problem and parameterize all variables with neural networks, which can be trained in an unsupervised manner due to the provably acceptable duality gap. We develop a meta-learning approach with graph neural networks (GNNs) as parameterization that exhibits fast adaptation and scalability to varying network configurations. We formulate the objective of meta-learning by amalgamating the Lagrangian functions of different network configurations and utilize a first-order meta-learning algorithm, called Reptile, to obtain the meta-parameters. Numerical results verify that our method can efficiently improve the overall throughput and ensure the minimum rate performance. We further demonstrate that using the meta-parameters as initialization, our method can achieve fast adaptation to new wireless network configurations and reduce the number of required training data samples.
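
For readers unfamiliar with Reptile, the first-order meta-learning algorithm named in the abstract above, the following is a minimal sketch of its generic update rule: run a few gradient steps on one sampled task, then move the meta-parameters part of the way toward the adapted parameters. It is not the paper's implementation. The toy quadratic tasks stand in for the per-configuration Lagrangian objectives, plain lists stand in for GNN weights, and all names and hyperparameters (`sgd_steps`, `reptile`, `make_quadratic_task`, the learning rates) are hypothetical choices made for illustration.

```python
import random


def sgd_steps(theta, grad_fn, lr, k):
    """Run k plain gradient-descent steps on one task, starting from theta."""
    phi = list(theta)
    for _ in range(k):
        g = grad_fn(phi)
        phi = [p - lr * gi for p, gi in zip(phi, g)]
    return phi


def reptile(theta, tasks, inner_lr=0.1, meta_lr=0.5,
            inner_steps=5, meta_iters=200, seed=0):
    """Generic Reptile loop: theta <- theta + meta_lr * (phi - theta)."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(meta_iters):
        grad_fn = rng.choice(tasks)  # sample one task (hypothetical stand-in for a network configuration)
        phi = sgd_steps(theta, grad_fn, inner_lr, inner_steps)
        # Interpolate the meta-parameters toward the task-adapted parameters.
        theta = [t + meta_lr * (p - t) for t, p in zip(theta, phi)]
    return theta


def make_quadratic_task(center):
    """Toy task (assumption): gradient of 0.5 * ||x - center||^2."""
    return lambda x: [xi - ci for xi, ci in zip(x, center)]


tasks = [make_quadratic_task([1.0, 0.0]), make_quadratic_task([3.0, 0.0])]
meta_theta = reptile([10.0, 10.0], tasks)
print(meta_theta)
```

In this toy setting the meta-parameters settle between the two task optima, so a few inner steps from `meta_theta` reach either optimum faster than from the original starting point; this is the "fast adaptation" property the abstract refers to.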
