Mengshi Qi

Explainable Action Form Assessment by Exploiting Multimodal Chain-of-Thoughts Reasoning

Dec 17, 2025

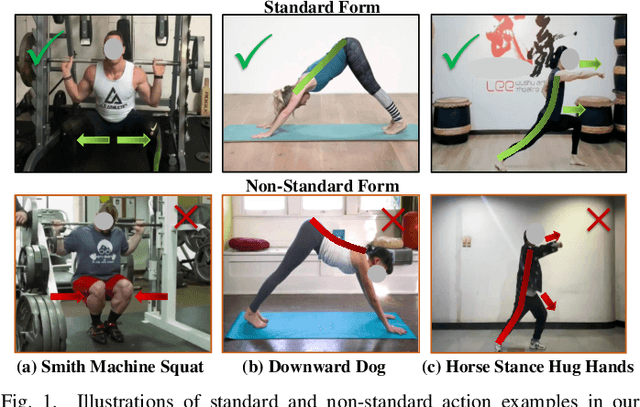

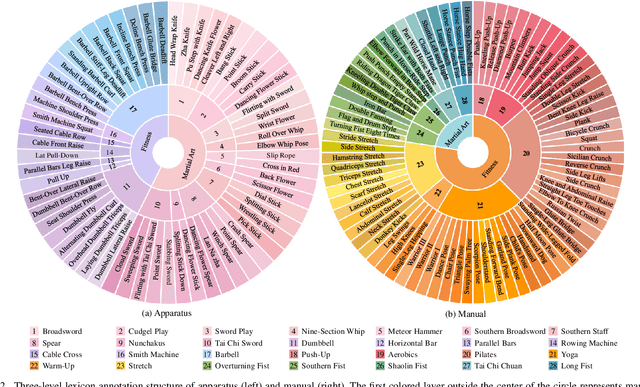

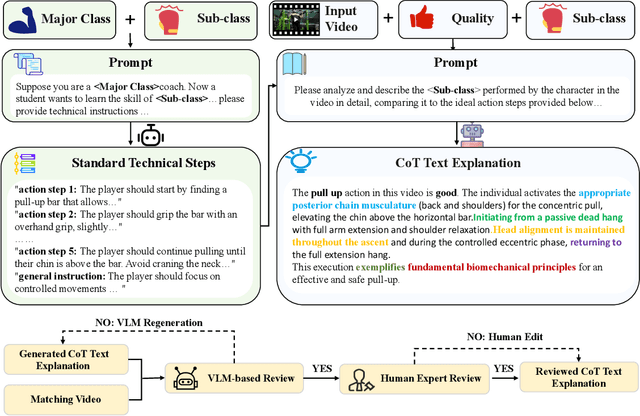

Abstract: Evaluating whether a human action is performed in standard form, and providing reasonable feedback to improve it, is crucial but challenging in real-world scenarios. However, current video understanding methods are mainly concerned with what the action is and where it occurs, which does not meet these requirements. Meanwhile, most existing datasets lack labels indicating the degree of action standardization, and action quality assessment datasets lack explainability and detailed feedback. Therefore, we define a new Human Action Form Assessment (AFA) task and introduce a new, diverse dataset, CoT-AFA, which contains a large collection of fitness and martial arts videos with multi-level annotations for comprehensive video analysis. We enrich the CoT-AFA dataset with a novel Chain-of-Thought explanation paradigm. Instead of offering isolated feedback, our explanations provide a complete reasoning process, from identifying an action step to analyzing its outcome and proposing a concrete solution. Furthermore, we propose a framework named Explainable Fitness Assessor, which can not only judge an action but also explain why and provide a solution. This framework employs two parallel processing streams and a dynamic gating mechanism to fuse visual and semantic information, thereby boosting its analytical capabilities. The experimental results demonstrate that our method achieves improvements in explanation generation (e.g., +16.0% in CIDEr), action classification (+2.7% in accuracy) and quality assessment (+2.1% in accuracy), revealing the great potential of CoT-AFA for future studies. Our dataset and source code are available at https://github.com/MICLAB-BUPT/EFA.
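
The abstract above mentions a dynamic gating mechanism that fuses visual and semantic information. The abstract does not give the exact formulation, so the following is only a minimal sketch of one common form of gated fusion, assuming a per-dimension sigmoid gate. The function and parameter names (`gated_fusion`, `w_visual`, `w_semantic`, `bias`) are hypothetical and are not taken from the authors' code.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(visual, semantic, w_visual, w_semantic, bias):
    """Fuse two equal-length feature vectors with a per-dimension gate.

    Illustrative assumption, not the paper's formulation. For each dimension i:
        gate_i  = sigmoid(w_visual[i] * visual[i] + w_semantic[i] * semantic[i] + bias[i])
        fused_i = gate_i * visual[i] + (1 - gate_i) * semantic[i]
    """
    fused = []
    for v, s, wv, ws, b in zip(visual, semantic, w_visual, w_semantic, bias):
        gate = sigmoid(wv * v + ws * s + b)
        # A gate near 1 trusts the visual stream; near 0, the semantic stream.
        fused.append(gate * v + (1.0 - gate) * s)
    return fused
```

With all weights and biases at zero, every gate equals 0.5 and the output is the plain average of the two streams. In a trained model the gate parameters would be learned, so the mix varies with the input.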

SoccerNet 2025 Challenges Results

Aug 26, 2025

Abstract: The SoccerNet 2025 Challenges mark the fifth annual edition of the SoccerNet open benchmarking effort, dedicated to advancing computer vision research in football video understanding. This year's challenges span four vision-based tasks: (1) Team Ball Action Spotting, focused on detecting ball-related actions in football broadcasts and assigning actions to teams; (2) Monocular Depth Estimation, targeting the recovery of scene geometry from single-camera broadcast clips through relative depth estimation for each pixel; (3) Multi-View Foul Recognition, requiring the analysis of multiple synchronized camera views to classify fouls and their severity; and (4) Game State Reconstruction, aimed at localizing and identifying all players from a broadcast video to reconstruct the game state on a 2D top-view of the field. Across all tasks, participants were provided with large-scale annotated datasets, unified evaluation protocols, and strong baselines as starting points. This report presents the results of each challenge, highlights the top-performing solutions, and provides insights into the progress made by the community. The SoccerNet Challenges continue to serve as a driving force for reproducible, open research at the intersection of computer vision, artificial intelligence, and sports. Detailed information about the tasks, challenges, and leaderboards can be found at https://www.soccer-net.org, with baselines and development kits available at https://github.com/SoccerNet.

Chain-of-Thought Textual Reasoning for Few-shot Temporal Action Localization

Apr 18, 2025

Abstract: Traditional temporal action localization (TAL) methods rely on large amounts of detailed annotated data, whereas few-shot TAL reduces this dependence by using only a few training samples to identify unseen action categories. However, existing few-shot TAL methods typically focus solely on video-level information, neglecting textual information, which can provide valuable semantic support for the localization task. Therefore, we propose a new few-shot temporal action localization method based on Chain-of-Thought textual reasoning to improve localization performance. Specifically, we design a novel few-shot learning framework that leverages textual semantic information to enhance the model's ability to capture action commonalities and variations, which includes a semantic-aware text-visual alignment module designed to align the query and support videos at different levels. Meanwhile, to better express the temporal dependencies and causal relationships between actions at the textual level to assist action localization, we design a Chain of Thought (CoT)-like reasoning method that progressively guides the Vision Language Model (VLM) and Large Language Model (LLM) to generate CoT-like text descriptions for videos. The generated texts can capture more variance of action than visual features. We conduct extensive experiments on the publicly available ActivityNet1.3 and THUMOS14 datasets. We also introduce the first dataset of its kind, named Human-related Anomaly Localization, and explore the application of the TAL task to human anomaly detection. The experimental results demonstrate that our proposed method significantly outperforms existing methods in single-instance and multi-instance scenarios. We will release our code, data and benchmark.

Robo-SGG: Exploiting Layout-Oriented Normalization and Restitution for Robust Scene Graph Generation

Apr 17, 2025

Abstract: In this paper, we introduce a novel method named Robo-SGG, i.e., Layout-Oriented Normalization and Restitution for Robust Scene Graph Generation. Compared to the existing SGG setting, robust scene graph generation aims to perform inference on a diverse range of corrupted images, with the core challenge being the domain shift between the clean and corrupted images. Existing SGG methods suffer from degraded performance due to compromised visual features, e.g., corruption interference or occlusions. To obtain robust visual features, we exploit the layout information, which is domain-invariant, to enhance the efficacy of existing SGG methods on corrupted images. Specifically, we employ Instance Normalization (IN) to filter out the domain-specific features and recover the unchangeable structural features, i.e., the positional and semantic relationships among objects, through the proposed Layout-Oriented Restitution. Additionally, we propose a Layout-Embedded Encoder (LEE) that augments the existing object and predicate encoders within the SGG framework, enriching the robust positional and semantic features of objects and predicates. Note that our proposed Robo-SGG module is designed as a plug-and-play component, which can be easily integrated into any baseline SGG model. Extensive experiments demonstrate that by integrating the state-of-the-art method into our proposed Robo-SGG, we achieve relative improvements of 5.6%, 8.0%, and 6.5% in mR@50 for PredCls, SGCls, and SGDet tasks on the VG-C dataset, respectively, and achieve new state-of-the-art performance on the corrupted scene graph generation benchmarks (VG-C and GQA-C). We will release our source code and model.
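
The abstract above relies on Instance Normalization to strip domain-specific statistics from features. As a rough illustration of that standard operation only (the Layout-Oriented Restitution step is not described in enough detail here to sketch), the snippet below normalizes each channel of a single sample to zero mean and unit variance. The data layout, a list of channels each flattened over spatial positions, is an assumption made for brevity.

```python
import math


def instance_norm(feature_map, eps=1e-5):
    """Normalize each channel of one sample to zero mean and unit variance.

    feature_map: list of channels, each a flat list of spatial activations
    (an illustrative layout, not the one used in the paper).
    """
    normalized = []
    for channel in feature_map:
        mean = sum(channel) / len(channel)
        var = sum((x - mean) ** 2 for x in channel) / len(channel)
        # eps keeps the division stable for constant channels.
        scale = 1.0 / math.sqrt(var + eps)
        normalized.append([(x - mean) * scale for x in channel])
    return normalized
```

Because the statistics are computed per sample and per channel, global shifts in brightness or contrast that differ between clean and corrupted images are largely removed, while the relative spatial pattern inside each channel is kept.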

DC-SAM: In-Context Segment Anything in Images and Videos via Dual Consistency

Apr 16, 2025

Abstract: Given a single labeled example, in-context segmentation aims to segment corresponding objects. This setting, known as one-shot segmentation in few-shot learning, explores the segmentation model's generalization ability and has been applied to various vision tasks, including scene understanding and image/video editing. While recent Segment Anything Models have achieved state-of-the-art results in interactive segmentation, these approaches are not directly applicable to in-context segmentation. In this work, we propose the Dual Consistency SAM (DC-SAM) method based on prompt-tuning to adapt SAM and SAM2 for in-context segmentation of both images and videos. Our key insight is to enhance the features of SAM's prompt encoder in segmentation by providing high-quality visual prompts. When generating a mask prior, we fuse the SAM features to better align the prompt encoder. Then, we design a cycle-consistent cross-attention on fused features and initial visual prompts. Next, a dual-branch design is provided by using the discriminative positive and negative prompts in the prompt encoder. Furthermore, we design a simple mask-tube training strategy to adopt our proposed dual consistency method into the mask tube. Although the proposed DC-SAM is primarily designed for images, it can be seamlessly extended to the video domain with the support of SAM2. Given the absence of in-context segmentation in the video domain, we manually curate and construct the first benchmark from existing video segmentation datasets, named In-Context Video Object Segmentation (IC-VOS), to better assess the in-context capability of the model. Extensive experiments demonstrate that our method achieves 55.5 (+1.4) mIoU on COCO-20i, 73.0 (+1.1) mIoU on PASCAL-5i, and a J&F score of 71.52 on the proposed IC-VOS benchmark. Our source code and benchmark are available at https://github.com/zaplm/DC-SAM.

Robust Disentangled Counterfactual Learning for Physical Audiovisual Commonsense Reasoning

Feb 18, 2025

Abstract: In this paper, we propose a new Robust Disentangled Counterfactual Learning (RDCL) approach for physical audiovisual commonsense reasoning. The task aims to infer objects' physics commonsense based on both video and audio input, with the main challenge being how to imitate the reasoning ability of humans, even under the scenario of missing modalities. Most current methods fail to take full advantage of the different characteristics of multi-modal data, and the lack of causal reasoning ability in models impedes progress in inferring implicit physical knowledge. To address these issues, our proposed RDCL method decouples videos into static (time-invariant) and dynamic (time-varying) factors in the latent space by the disentangled sequential encoder, which adopts a variational autoencoder (VAE) to maximize the mutual information with a contrastive loss function. Furthermore, we introduce a counterfactual learning module to augment the model's reasoning ability by modeling physical knowledge relationships among different objects under counterfactual intervention. To alleviate the incomplete modality data issue, we introduce a robust multimodal learning method to recover the missing data by decomposing the shared features and model-specific features. Our proposed method is a plug-and-play module that can be incorporated into any baseline including VLMs. In experiments, we show that our proposed method improves the reasoning accuracy and robustness of baseline methods and achieves state-of-the-art performance.

VLM-Assisted Continual learning for Visual Question Answering in Self-Driving

Feb 02, 2025

Abstract: In this paper, we propose a novel approach for solving the Visual Question Answering (VQA) task in autonomous driving by integrating Vision-Language Models (VLMs) with continual learning. In autonomous driving, VQA plays a vital role in enabling the system to understand and reason about its surroundings. However, traditional models often struggle with catastrophic forgetting when sequentially exposed to new driving tasks, such as perception, prediction, and planning, each requiring different forms of knowledge. To address this challenge, we present a novel continual learning framework that combines VLMs with selective memory replay and knowledge distillation, reinforced by task-specific projection layer regularization. The knowledge distillation allows a previously trained model to act as a "teacher" to guide the model through subsequent tasks, minimizing forgetting. Meanwhile, task-specific projection layers calculate the loss based on the divergence of feature representations, ensuring continuity in learning and reducing the shift between tasks. Evaluated on the DriveLM dataset, our framework shows substantial performance improvements, with gains ranging from 21.40% to 32.28% across various metrics. These results highlight the effectiveness of combining continual learning with VLMs in enhancing the resilience and reliability of VQA systems in autonomous driving. We will release our source code.
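
The abstract above uses knowledge distillation, with a previously trained model acting as a teacher. The paper's exact loss is not given here, so the following sketch shows only the widely used temperature-scaled distillation loss: the KL divergence between softened teacher and student distributions. The function names and the default temperature of 2.0 are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    A generic distillation objective, not necessarily the one in the paper.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2
```

The loss is zero when the student reproduces the teacher's logits and grows as the two distributions diverge, which is what discourages the student from drifting away from earlier tasks.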

Target-driven Self-Distillation for Partial Observed Trajectories Forecasting

Jan 28, 2025

Abstract: Accurate prediction of future trajectories of traffic agents is essential for ensuring safe autonomous driving. However, partially observed trajectories can significantly degrade the performance of even state-of-the-art models. Previous approaches often rely on knowledge distillation to transfer features from fully observed trajectories to partially observed ones. This involves first training a fully observed model and then using a distillation process to create the final model. While effective, these approaches require multi-stage training, making the training process very expensive. Moreover, knowledge distillation can degrade the model's performance. In this paper, we introduce a Target-driven Self-Distillation method (TSD) for motion forecasting. Our method leverages accurately predicted targets to guide the model in making predictions under partial observation conditions. By employing self-distillation, the model learns from the feature distributions of both fully observed and partially observed trajectories during a single end-to-end training process. This enhances the model's ability to predict motion accurately in both fully observed and partially observed scenarios. We evaluate our method on multiple datasets and state-of-the-art motion forecasting models. Extensive experimental results demonstrate that our approach achieves significant performance improvements in both settings. To facilitate further research, we will release our code and model checkpoints.

Towards Robust Unsupervised Attention Prediction in Autonomous Driving

Jan 25, 2025

Abstract: Robustly predicting attention regions of interest for self-driving systems is crucial for driving safety but presents significant challenges due to the labor-intensive nature of obtaining large-scale attention labels and the domain gap between self-driving scenarios and natural scenes. These challenges are further exacerbated by complex traffic environments, including camera corruption under adverse weather, noise interferences, and central bias from long-tail distributions. To address these issues, we propose a robust unsupervised attention prediction method. An Uncertainty Mining Branch refines predictions by analyzing commonalities and differences across multiple pre-trained models on natural scenes, while a Knowledge Embedding Block bridges the domain gap by incorporating driving knowledge to adaptively enhance pseudo-labels. Additionally, we introduce RoboMixup, a novel data augmentation method that improves robustness against corruption through soft attention and dynamic augmentation, and mitigates central bias by integrating random cropping into Mixup as a regularizer. To systematically evaluate robustness in self-driving attention prediction, we introduce the DriverAttention-C benchmark, comprising over 100k frames across three subsets: BDD-A-C, DR(eye)VE-C, and DADA-2000-C. Our method achieves performance equivalent to or surpassing fully supervised state-of-the-art approaches on three public datasets and the proposed robustness benchmark, reducing relative corruption degradation by 58.8% and 52.8%, and improving central bias robustness by 12.4% and 11.4% in KLD and CC metrics, respectively. Code and data are available at https://github.com/zaplm/DriverAttention.
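
RoboMixup, described in the abstract above, builds on Mixup. The snippet below sketches only the standard Mixup operation that it extends: a convex combination of two samples with a mixing weight drawn from a Beta distribution. The soft-attention, dynamic-augmentation, and random-cropping components of RoboMixup are not reproduced, and the default `alpha=0.4` is an assumption for illustration.

```python
import random


def sample_lambda(alpha=0.4, rng=random):
    """Draw a mixing weight in [0, 1] from Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


def mixup(sample_a, sample_b, lam):
    """Blend two equal-length flattened samples: lam * a + (1 - lam) * b.

    The same lam would normally be applied to the inputs and to their
    targets (here, attention maps) so that they stay consistent.
    """
    return [lam * a + (1.0 - lam) * b for a, b in zip(sample_a, sample_b)]
```

A typical use draws one `lam` per pair, then calls `mixup` once on the two images and once on their pseudo-label attention maps.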

A New Teacher-Reviewer-Student Framework for Semi-supervised 2D Human Pose Estimation

Jan 16, 2025

Abstract: Conventional 2D human pose estimation methods typically require extensive labeled annotations, which are both labor-intensive and expensive. In contrast, semi-supervised 2D human pose estimation can alleviate the above problems by leveraging a large amount of unlabeled data along with a small portion of labeled data. Existing semi-supervised 2D human pose estimation methods update the network through backpropagation, ignoring crucial historical information from the previous training process. Therefore, we propose a novel semi-supervised 2D human pose estimation method by utilizing a newly designed Teacher-Reviewer-Student framework. Specifically, we first mimic the phenomenon that human beings constantly review previous knowledge for consolidation to design our framework, in which the teacher predicts results to guide the student's learning and the reviewer stores important historical parameters to provide additional supervision signals. Secondly, we introduce a Multi-level Feature Learning strategy, which utilizes the outputs from different stages of the backbone to estimate the heatmap to guide network training, enriching the supervisory information while effectively capturing keypoint relationships. Finally, we design a data augmentation strategy, i.e., Keypoint-Mix, to perturb pose information by mixing different keypoints, thus enhancing the network's ability to discern keypoints. Extensive experiments on publicly available datasets demonstrate that our method achieves significant improvements compared to existing methods.
