Le Liang

AoI-Driven Queue Management and Power Control in V2V Networks: A GNN-Enhanced MARL Approach

Jan 27, 2026

Abstract:Queue management and resource allocation play a critical role in enabling cooperative status awareness in vehicular networks. This paper investigates the problem of age of information (AoI)-aware status updates in vehicle-to-vehicle (V2V) communication, where each vehicle's status is represented by multiple interdependent packets. To enable fine-grained queue management at the packet level under resource constraints, we formulate a joint optimization problem that simultaneously learns active packet dropping and transmit power control strategies. A hybrid action space is designed to support both discrete dropping decisions and continuous power control. To exploit the graph-structured interference inherent in V2V topology, a graph neural network (GNN) is introduced to aggregate slowly varying large-scale fading, allowing agents to capture topological dependencies implicitly without frequent message exchange. The overall framework is built upon multi-agent proximal policy optimization (MAPPO), with centralized training and decentralized execution (CTDE). Simulations demonstrate that the proposed method significantly reduces average AoI across a wide range of network densities, channel conditions, and traffic loads, consistently outperforming several baselines.
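As a rough illustration of the AoI metric this abstract optimizes, the sketch below traces the age at a receiver over discrete slots using the common textbook rule: the age grows by one per slot and resets to the delivered update's own age when an update arrives. The function name `aoi_trace`, the slot model, and the `deliveries` mapping are assumptions made for illustration; the paper's multi-packet status model is not reproduced here.

```python
def aoi_trace(deliveries, horizon, initial_aoi=1):
    """Trace age of information over `horizon` slots (illustrative model).

    deliveries: dict mapping a slot index t to the generation time of the
                status update delivered in that slot (hypothetical input).
    Returns the AoI observed at the start of slots 0..horizon.
    """
    aoi = initial_aoi
    trace = [aoi]
    for t in range(horizon):
        gen_time = deliveries.get(t)
        if gen_time is None:
            # No update received: the information simply gets one slot older.
            aoi = aoi + 1
        else:
            # Update received: age resets to the time elapsed since generation.
            aoi = t + 1 - gen_time
        trace.append(aoi)
    return trace


if __name__ == "__main__":
    # An update generated at slot 1 arrives in slot 2; a fresh one arrives in slot 4.
    print(aoi_trace({2: 1, 4: 4}, horizon=6))
```

Under this model, dropping a stale packet from the queue matters because delivering it would reset the age to a large value while still consuming a transmission slot.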

CoDS: Collaborative Perception via Digital Semantic Communication

Dec 27, 2025

Abstract:Semantic communication has been introduced into collaborative perception systems for autonomous driving, offering a promising approach to enhancing data transmission efficiency and robustness. Despite its potential, existing semantic communication approaches predominantly rely on analog transmission models, rendering these systems fundamentally incompatible with the digital architecture of modern vehicle-to-everything (V2X) networks and posing a significant barrier to real-world deployment. To bridge this critical gap, we propose CoDS, a novel collaborative perception framework based on digital semantic communication, designed to realize semantic-level transmission efficiency within practical digital communication systems. Specifically, we develop a semantic compression codec that extracts and compresses task-oriented semantic features while preserving downstream perception accuracy. Building on this, we propose a novel semantic analog-to-digital converter that converts these continuous semantic features into a discrete bitstream, ensuring integration with existing digital communication pipelines. Furthermore, we develop an uncertainty-aware network (UAN) that assesses the reliability of each received feature and discards those corrupted by decoding failures, thereby mitigating the cliff effect of conventional channel coding schemes under low signal-to-noise ratio (SNR) conditions. Extensive experiments demonstrate that CoDS significantly outperforms existing semantic communication and traditional digital communication schemes, achieving state-of-the-art perception performance while ensuring compatibility with practical digital V2X systems.
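To make the idea of turning continuous features into a bitstream concrete, here is a minimal sketch using a plain uniform scalar quantizer. This is a stand-in chosen for illustration, not the semantic analog-to-digital converter proposed in CoDS, whose design the abstract does not detail; the function names, the feature range `[lo, hi]`, and the bit width are all assumptions.

```python
import numpy as np


def quantize(features, n_bits, lo=-1.0, hi=1.0):
    """Map a 1-D float array to a flat bit array (MSB first per feature)."""
    levels = 2 ** n_bits
    clipped = np.clip(features, lo, hi)
    # Index of the nearest of `levels` evenly spaced reconstruction points.
    idx = np.round((clipped - lo) / (hi - lo) * (levels - 1)).astype(np.int64)
    shifts = np.arange(n_bits - 1, -1, -1)
    bits = (idx[:, None] >> shifts) & 1
    return bits.astype(np.uint8).ravel()


def dequantize(bits, n_bits, lo=-1.0, hi=1.0):
    """Invert `quantize`: rebuild approximate feature values from bits."""
    levels = 2 ** n_bits
    weights = 1 << np.arange(n_bits - 1, -1, -1)
    idx = bits.reshape(-1, n_bits).astype(np.int64) @ weights
    return lo + idx / (levels - 1) * (hi - lo)


if __name__ == "__main__":
    feats = np.array([-1.0, -0.2, 0.0, 0.7, 1.0])
    bits = quantize(feats, n_bits=4)
    print(bits)
    print(dequantize(bits, n_bits=4))
```

With a uniform quantizer the reconstruction error per feature is at most half a quantization step, so the bit width trades bitstream length against feature fidelity.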

Reducing Pilots in Channel Estimation With Predictive Foundation Models

Dec 17, 2025

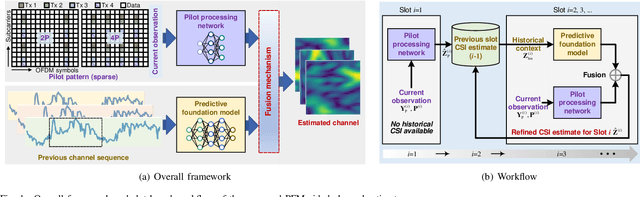

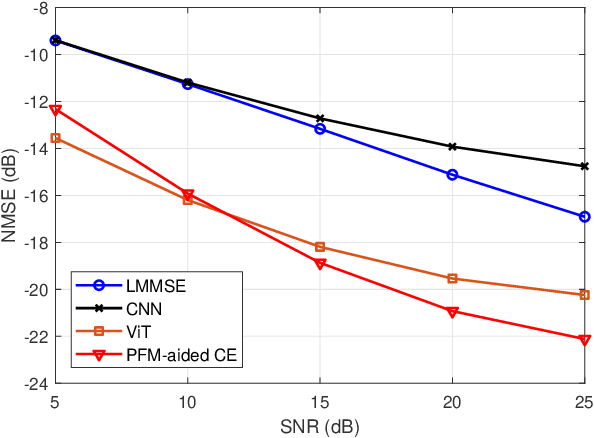

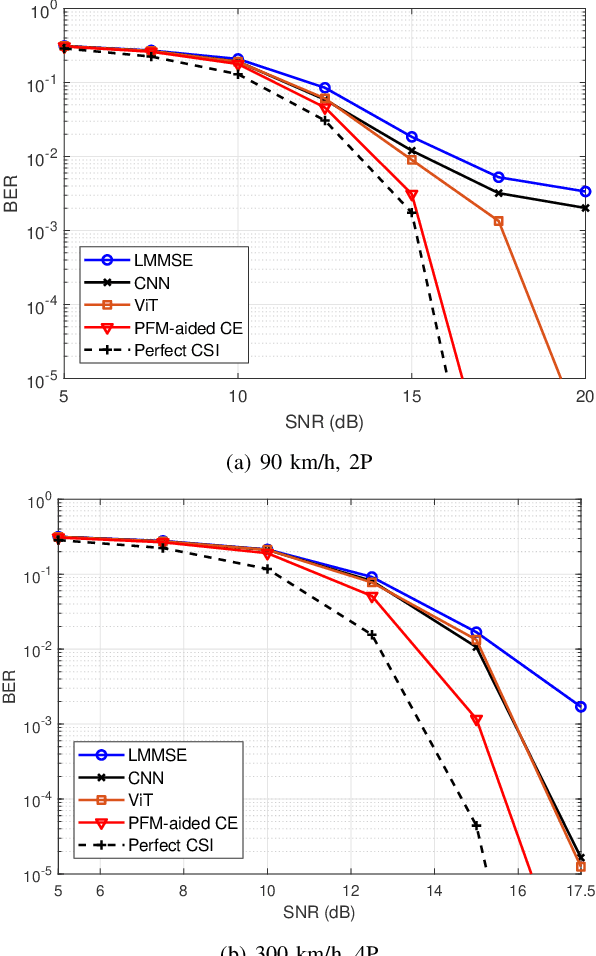

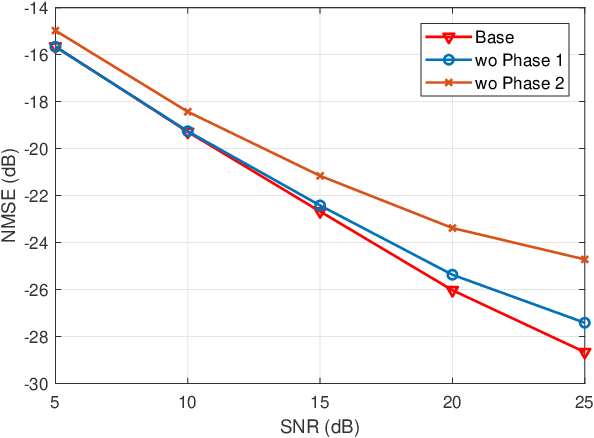

Abstract:Accurate channel state information (CSI) acquisition is essential for modern wireless systems, which becomes increasingly difficult under large antenna arrays, strict pilot overhead constraints, and diverse deployment environments. Existing artificial intelligence-based solutions often lack robustness and fail to generalize across scenarios. To address this limitation, this paper introduces a predictive-foundation-model-based channel estimation framework that enables accurate, low-overhead, and generalizable CSI acquisition. The proposed framework employs a predictive foundation model trained on large-scale cross-domain CSI data to extract universal channel representations and provide predictive priors with strong cross-scenario transferability. A pilot processing network based on a vision transformer architecture is further designed to capture spatial, temporal, and frequency correlations from pilot observations. An efficient fusion mechanism integrates predictive priors with real-time measurements, enabling reliable CSI reconstruction even under sparse or noisy conditions. Extensive evaluations across diverse configurations demonstrate that the proposed estimator significantly outperforms both classical and data-driven baselines in accuracy, robustness, and generalization capability.

Next-Generation AI-Native Wireless Communications: MCMC-Based Receiver Architectures for Unified Processing

Oct 02, 2025

Abstract:Multiple-input multiple-output (MIMO) receiver processing is a key technology for current and next-generation wireless communications. However, it faces significant challenges related to complexity and scalability as the number of antennas increases. Artificial intelligence (AI), a cornerstone of next-generation wireless networks, offers considerable potential for addressing these challenges. This paper proposes an AI-driven, universal MIMO receiver architecture based on Markov chain Monte Carlo (MCMC) techniques. Unlike existing AI-based methods that treat receiver processing as a black box, our MCMC-based approach functions as a generic Bayesian computing engine applicable to various processing tasks, including channel estimation, symbol detection, and channel decoding. This method enhances the interpretability, scalability, and flexibility of receivers in diverse scenarios. Furthermore, the proposed approach integrates these tasks into a unified probabilistic framework, thereby enabling overall performance optimization. This unified framework can also be seamlessly combined with data-driven learning methods to facilitate the development of fully intelligent communication receivers.
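For readers unfamiliar with MCMC-based detection, the following is a minimal sketch of a textbook Gibbs sampler for symbol detection, assuming a real-valued model y = Hx + n with BPSK symbols and Gaussian noise. It is not the receiver architecture proposed in the paper; the function name `gibbs_bpsk_detect`, the real-valued BPSK simplification, and the best-sample selection rule are assumptions for illustration.

```python
import numpy as np


def gibbs_bpsk_detect(y, H, sigma2, n_sweeps=50, rng=None):
    """Gibbs sampling over x in {-1, +1}^n for y = H x + noise (illustrative)."""
    rng = np.random.default_rng() if rng is None else rng
    n_tx = H.shape[1]
    x = rng.choice([-1.0, 1.0], size=n_tx)
    residual = y - H @ x
    best_x, best_cost = x.copy(), float(residual @ residual)
    for _ in range(n_sweeps):
        for i in range(n_tx):
            h = H[:, i]
            # Residual with the contribution of symbol i removed.
            r = residual + h * x[i]
            # Conditional log-odds of x_i = +1 versus x_i = -1.
            llr = np.clip(2.0 * (h @ r) / sigma2, -50.0, 50.0)
            p_plus = 1.0 / (1.0 + np.exp(-llr))
            x[i] = 1.0 if rng.random() < p_plus else -1.0
            residual = r - h * x[i]
        # Keep the visited sample with the smallest residual energy.
        cost = float(residual @ residual)
        if cost < best_cost:
            best_x, best_cost = x.copy(), cost
    return best_x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H = rng.standard_normal((8, 4))
    x_true = np.array([1.0, -1.0, -1.0, 1.0])
    y = H @ x_true + 0.1 * rng.standard_normal(8)
    print(gibbs_bpsk_detect(y, H, sigma2=0.01, rng=rng))
```

The same sampling loop applies to any discrete posterior whose conditionals are cheap to evaluate, which is one way to read the abstract's description of MCMC as a generic Bayesian computing engine.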

SComCP: Task-Oriented Semantic Communication for Collaborative Perception

Jul 01, 2025

Abstract:Reliable detection of surrounding objects is critical for the safe operation of connected automated vehicles (CAVs). However, inherent limitations such as the restricted perception range and occlusion effects compromise the reliability of single-vehicle perception systems in complex traffic environments. Collaborative perception has emerged as a promising approach by fusing sensor data from surrounding CAVs with diverse viewpoints, thereby improving environmental awareness. Although collaborative perception holds great promise, its performance is bottlenecked by wireless communication constraints, as unreliable and bandwidth-limited channels hinder the transmission of sensor data necessary for real-time perception. To address these challenges, this paper proposes SComCP, a novel task-oriented semantic communication framework for collaborative perception. Specifically, SComCP integrates an importance-aware feature selection network that selects and transmits semantic features most relevant to the perception task, significantly reducing communication overhead without sacrificing accuracy. Furthermore, we design a semantic codec network based on a joint source and channel coding (JSCC) architecture, which enables bidirectional transformation between semantic features and noise-tolerant channel symbols, thereby ensuring stable perception under adverse wireless conditions. Extensive experiments demonstrate the effectiveness of the proposed framework. In particular, compared to existing approaches, SComCP can maintain superior perception performance across various channel conditions, especially in low signal-to-noise ratio (SNR) scenarios. In addition, SComCP exhibits strong generalization capability, enabling the framework to maintain high performance across diverse channel conditions, even when trained with a specific channel model.

Power Allocation for Delay Optimization in Device-to-Device Networks: A Graph Reinforcement Learning Approach

May 19, 2025

Abstract:The pursuit of rate maximization in wireless communication frequently encounters substantial challenges associated with user fairness. This paper addresses these challenges by exploring a novel power allocation approach for delay optimization, utilizing graph neural networks (GNNs)-based reinforcement learning (RL) in device-to-device (D2D) communication. The proposed approach incorporates not only channel state information but also factors such as packet delay, the number of backlogged packets, and the number of transmitted packets into the components of the state information. We adopt a centralized RL method, where a central controller collects and processes the state information. The central controller functions as an agent trained using the proximal policy optimization (PPO) algorithm. To better utilize topology information in the communication network and enhance the generalization of the proposed method, we embed GNN layers into both the actor and critic networks of the PPO algorithm. This integration allows for efficient parameter updates of GNNs and enables the state information to be parameterized as a low-dimensional embedding, which is leveraged by the agent to optimize power allocation strategies. Simulation results demonstrate that the proposed method effectively reduces average delay while ensuring user fairness, outperforms baseline methods, and exhibits scalability and generalization capability.
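Since the agent here is trained with PPO, a short sketch of the standard clipped surrogate objective may help. This is the generic PPO objective only, not the paper's GNN actor-critic; the function name `ppo_clip_objective`, the default clip range of 0.2, and the use of precomputed advantages are assumptions.

```python
import numpy as np


def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Mean clipped surrogate objective of PPO (to be maximized)."""
    # Probability ratio between the updated policy and the data-collecting policy.
    ratio = np.exp(logp_new - logp_old)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantages
    # Taking the elementwise minimum removes the incentive to move the ratio
    # outside [1 - eps, 1 + eps] in the direction favored by the advantage.
    return float(np.mean(np.minimum(unclipped, clipped)))


if __name__ == "__main__":
    logp_old = np.zeros(2)
    logp_new = np.log(np.array([1.5, 0.5]))
    print(ppo_clip_objective(logp_new, logp_old, np.array([1.0, -1.0])))
```

In an actor-critic setup such as the one the abstract describes, the log-probabilities would come from the actor network and the advantages from the critic's value estimates.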

Small-Scale-Fading-Aware Resource Allocation in Wireless Federated Learning

May 06, 2025

Abstract:Judicious resource allocation can effectively enhance federated learning (FL) training performance in wireless networks by addressing both system and statistical heterogeneity. However, existing strategies typically rely on block fading assumptions, which overlook rapid channel fluctuations within each round of FL gradient uploading, leading to a degradation in FL training performance. Therefore, this paper proposes a small-scale-fading-aware resource allocation strategy using a multi-agent reinforcement learning (MARL) framework. Specifically, we establish a one-step convergence bound of the FL algorithm and formulate the resource allocation problem as a decentralized partially observable Markov decision process (Dec-POMDP), which is subsequently solved using the QMIX algorithm. In our framework, each client serves as an agent that dynamically determines spectrum and power allocations within each coherence time slot, based on local observations and a reward derived from the convergence analysis. The MARL setting reduces the dimensionality of the action space and facilitates decentralized decision-making, enhancing the scalability and practicality of the solution. Experimental results demonstrate that our QMIX-based resource allocation strategy significantly outperforms baseline methods across various degrees of statistical heterogeneity. Additionally, ablation studies validate the critical importance of incorporating small-scale fading dynamics, highlighting its role in optimizing FL performance.

Task-Oriented Semantic Communication for Stereo-Vision 3D Object Detection

Feb 18, 2025

Abstract:With the development of computer vision, 3D object detection has become increasingly important in many real-world applications. Limited by the computing power of sensor-side hardware, the detection task is sometimes deployed on remote computing devices or the cloud to execute complex algorithms, which brings massive data transmission overhead. In response, this paper proposes an optical flow-driven semantic communication framework for the stereo-vision 3D object detection task. The proposed framework fully exploits the dependence of stereo-vision 3D detection on semantic information in images and prioritizes the transmission of this semantic information to reduce total transmission data sizes while ensuring the detection accuracy. Specifically, we develop an optical flow-driven module to jointly extract and recover semantics from the left and right images to reduce the loss of the left-right photometric alignment semantic information and improve the accuracy of depth inference. Then, we design a 2D semantic extraction module to identify and extract semantic meaning around the objects to enhance the transmission of semantic information in the key areas. Finally, a fusion network is used to fuse the recovered semantics, and reconstruct the stereo-vision images for 3D detection. Simulation results show that the proposed method improves the detection accuracy by nearly 70% and outperforms the traditional method, especially for the low signal-to-noise ratio regime.

Hybrid Beamforming Design for Bistatic Integrated Sensing and Communication Systems

Feb 17, 2025

Abstract:Integrated sensing and communication (ISAC) in millimeter wave is a key enabler for next-generation networks, which leverages large bandwidth and extensive antenna arrays, benefiting both communication and sensing functionalities. The associated high costs can be mitigated by adopting a hybrid beamforming structure. However, the well-studied monostatic ISAC systems face challenges related to full-duplex operation. To address this issue, this paper focuses on a three-dimensional bistatic configuration that requires only half-duplex base stations. To intuitively evaluate the error bound of bistatic sensing using orthogonal frequency division multiplexing waveforms, we propose a positioning scheme that combines angle-of-arrival and time-of-arrival estimation, deriving the closed-form expression of the position error bound (PEB). Using this PEB, we develop two hybrid beamforming algorithms for joint waveform design, aimed at maximizing achievable spectral efficiency (SE) while ensuring a predefined PEB threshold. The first algorithm leverages a Riemannian trust-region approach, achieving superior performance in terms of global optima and convergence speed compared to conventional gradient-based methods, but with higher complexity. In contrast, the second algorithm, which employs orthogonal matching pursuit, offers a more computationally efficient solution, delivering reasonable SE while maintaining the PEB constraint. Numerical results are provided to validate the effectiveness of the proposed designs.
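The second algorithm builds on orthogonal matching pursuit (OMP). Below is a minimal sketch of generic OMP for sparse recovery, assuming a dictionary matrix `A` and a target vector `y`; how the paper maps hybrid beamforming and the PEB constraint onto OMP is not described in the abstract and is not reproduced here. The function name `omp` and its interface are assumptions.

```python
import numpy as np


def omp(A, y, k):
    """Greedy k-sparse approximation of y using the columns of A (generic OMP)."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(k):
        # Pick the column most correlated with the current residual.
        corr = np.abs(A.conj().T @ residual)
        if support:
            corr[support] = 0.0  # never pick the same column twice
        support.append(int(np.argmax(corr)))
        # Re-fit all selected coefficients jointly by least squares.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x = np.zeros(A.shape[1], dtype=coef.dtype)
    x[support] = coef
    return x, sorted(support)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    A = rng.standard_normal((20, 10))
    x_true = np.zeros(10)
    x_true[[3, 7]] = [2.0, -1.5]
    x_hat, support = omp(A, A @ x_true, k=2)
    print(support, np.round(x_hat, 3))
```

Each iteration costs one matrix-vector product and one small least-squares solve, which is consistent with the abstract's characterization of the OMP-based design as the more computationally efficient of the two.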

On Privacy, Security, and Trustworthiness in Distributed Wireless Large AI Models (WLAM)

Dec 04, 2024

Abstract:Combining wireless communication with large artificial intelligence (AI) models can open up a myriad of novel application scenarios. In sixth generation (6G) networks, ubiquitous communication and computing resources allow large AI models to provide democratized large-AI-model services that enable real-time applications such as autonomous vehicles, smart cities, and Internet of Things (IoT) ecosystems. However, security considerations and sustainable communication resources limit the deployment of large AI models over distributed wireless networks. This paper provides a comprehensive overview of privacy, security, and trustworthiness for distributed wireless large AI models (WLAM). In particular, a detailed privacy and security analysis for distributed WLAM is first presented. The classifications and theoretical findings about privacy and security in distributed WLAM are discussed. Then the trustworthiness and ethics of implementing distributed WLAM are described. Finally, the comprehensive applications of distributed WLAM are presented in the context of electromagnetic signal processing.
