Xuemin Shen

HALO: Semantic-Aware Distributed LLM Inference in Lossy Edge Network

Jan 16, 2026

Abstract: Deploying large language model (LLM) inference at the edge can improve service responsiveness while protecting user privacy. However, it is critically challenged by the resource constraints of a single edge node. Distributed inference has emerged to aggregate and leverage computational resources across multiple devices. Yet, existing methods typically require strict synchronization, which is often infeasible under unreliable network conditions. In this paper, we propose HALO, a novel framework that accelerates distributed LLM inference in lossy edge networks. The core idea is to enable a relaxed yet effective synchronization by strategically allocating less critical neuron groups to unstable devices, thus avoiding the excessive waiting time incurred by delayed packets. HALO introduces three key mechanisms: (1) a semantic-aware predictor that assesses the significance of neuron groups prior to activation; (2) a parallel execution scheme for neuron group loading during model inference; and (3) a load-balancing scheduler that efficiently orchestrates multiple devices with heterogeneous resources. Experimental results from a Raspberry Pi cluster demonstrate that HALO achieves a 3.41x end-to-end speedup for LLaMA-series LLMs under unreliable network conditions. It maintains performance comparable to optimal conditions and significantly outperforms the state-of-the-art in various scenarios.
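The core allocation idea in the abstract (less critical neuron groups go to less stable devices) can be illustrated with a minimal greedy sketch. This is not HALO's scheduler: the `Device` fields, the importance scores, and the `allocate` function are all hypothetical names introduced here, and the sketch assumes importance scores are already available from some predictor.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    reliability: float  # assumed: fraction of packets delivered on time
    capacity: int       # assumed: number of neuron groups the device can hold


def allocate(importance, devices):
    """Greedy sketch: the most important groups go to the most reliable devices.

    importance: dict mapping a group id to a (hypothetical) predicted importance.
    Returns a dict mapping each device name to its list of group ids.
    """
    if sum(d.capacity for d in devices) < len(importance):
        raise ValueError("total device capacity is smaller than the number of groups")
    ranked_groups = sorted(importance, key=importance.get, reverse=True)
    ranked_devices = sorted(devices, key=lambda d: d.reliability, reverse=True)
    placement = {}
    start = 0
    for dev in ranked_devices:
        # Each device takes the next slice of the importance-ordered groups.
        placement[dev.name] = ranked_groups[start:start + dev.capacity]
        start += dev.capacity
    return placement


if __name__ == "__main__":
    scores = {"g0": 0.9, "g1": 0.1, "g2": 0.5, "g3": 0.3}
    cluster = [Device("pi-a", 0.60, 2), Device("pi-b", 0.99, 2)]
    print(allocate(scores, cluster))
```

In this toy setup the unstable device `pi-a` ends up holding only the two lowest-scoring groups, so a late packet from it would delay the least critical part of the computation.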

RadioDiff-Flux: Efficient Radio Map Construction via Generative Denoise Diffusion Model Trajectory Midpoint Reuse

Jan 06, 2026

Abstract: Accurate radio map (RM) construction is essential to enabling environment-aware and adaptive wireless communication. However, in future 6G scenarios characterized by high-speed network entities and fast-changing environments, it is very challenging to meet real-time requirements. Although generative diffusion models (DMs) can achieve state-of-the-art accuracy with second-level delay, their iterative nature leads to prohibitive inference latency in delay-sensitive scenarios. In this paper, we uncover a key structural property of diffusion processes: the latent midpoints remain highly consistent across semantically similar scenes. Building on this property, we propose RadioDiff-Flux, a novel two-stage latent diffusion framework that decouples static environmental modeling from dynamic refinement, enabling the reuse of precomputed midpoints to bypass redundant denoising. In particular, the first stage generates a coarse latent representation using only static scene features, which can be cached and shared across similar scenarios. The second stage adapts this representation to dynamic conditions and transmitter locations using a pre-trained model, thereby avoiding repeated early-stage computation. The proposed RadioDiff-Flux significantly reduces inference time while preserving fidelity. Experimental results show that RadioDiff-Flux can achieve up to 50$\times$ acceleration with less than 0.15% accuracy loss, demonstrating its practical utility for fast, scalable RM generation in future 6G networks.
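The two-stage reuse pattern described above can be sketched as a cache in front of an expensive first stage. This is only a toy illustration under stated assumptions: `coarse_stage` and `refine_stage` are hypothetical stand-ins for the diffusion stages, and scenes are matched by an exact key, whereas the abstract speaks of sharing midpoints across semantically similar scenes.

```python
class MidpointCache:
    """Caches the (expensive) coarse-stage output per static scene key."""

    def __init__(self, coarse_fn):
        self._coarse_fn = coarse_fn
        self._store = {}
        self.misses = 0  # how many times the coarse stage actually ran

    def get(self, scene_key, static_features):
        if scene_key not in self._store:
            self.misses += 1
            self._store[scene_key] = self._coarse_fn(static_features)
        return self._store[scene_key]


def coarse_stage(static_features):
    # Toy stand-in for early denoising driven only by static scene features.
    return [0.5 * x for x in static_features]


def refine_stage(midpoint, tx_offset):
    # Toy stand-in for the second stage that adapts to a transmitter setting.
    return [m + tx_offset for m in midpoint]


if __name__ == "__main__":
    cache = MidpointCache(coarse_stage)
    scene = [2.0, 4.0]
    first = refine_stage(cache.get("block-7", scene), 1.0)
    second = refine_stage(cache.get("block-7", scene), 3.0)
    print(first, second, "coarse runs:", cache.misses)
```

Both requests share one coarse-stage run; only the cheap refinement is repeated per transmitter setting.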

QoE-Aware Service Provision for Mobile AR Rendering: An Agent-Driven Approach

Aug 12, 2025

Abstract: Mobile augmented reality (MAR) is envisioned as a key immersive application in 6G, enabling virtual content rendering aligned with the physical environment through device pose estimation. In this paper, we propose a novel agent-driven communication service provisioning approach for edge-assisted MAR, aiming to reduce communication overhead between MAR devices and the edge server while ensuring the quality of experience (QoE). First, to address the inaccessibility of MAR application-specific information to the network controller, we establish a digital agent powered by large language models (LLMs) on behalf of the MAR service provider, bridging the data and function gap between the MAR service and network domains. Second, to cope with the user-dependent and dynamic nature of data traffic patterns for individual devices, we develop a user-level QoE modeling method that captures the relationship between communication resource demands and perceived user QoE, enabling personalized, agent-driven communication resource management. Trace-driven simulation results demonstrate that the proposed approach outperforms conventional LLM-based QoE-aware service provisioning methods in both user-level QoE modeling accuracy and communication resource efficiency.

RadioDiff-3D: A 3D$\times$3D Radio Map Dataset and Generative Diffusion Based Benchmark for 6G Environment-Aware Communication

Jul 16, 2025

Abstract: Radio maps (RMs) serve as a critical foundation for enabling environment-aware wireless communication, as they provide the spatial distribution of wireless channel characteristics. Despite recent progress in RM construction using data-driven approaches, most existing methods focus solely on pathloss prediction in a fixed 2D plane, neglecting key parameters such as direction of arrival (DoA), time of arrival (ToA), and vertical spatial variations. Such a limitation is primarily due to the reliance on static learning paradigms, which hinder generalization beyond the training data distribution. To address these challenges, we propose UrbanRadio3D, a large-scale, high-resolution 3D RM dataset constructed via ray tracing in realistic urban environments. UrbanRadio3D is over 37$\times$3 larger than previous datasets across a 3D space with three metrics (pathloss, DoA, and ToA), forming a novel 3D$\times$3D dataset with 7$\times$3 more height layers than the prior state-of-the-art (SOTA) dataset. To benchmark 3D RM construction, a UNet with 3D convolutional operators is proposed. Moreover, we further introduce RadioDiff-3D, a diffusion-model-based generative framework utilizing the 3D convolutional architecture. RadioDiff-3D supports both radiation-aware scenarios with known transmitter locations and radiation-unaware settings based on sparse spatial observations. Extensive evaluations on UrbanRadio3D validate that RadioDiff-3D achieves superior performance in constructing rich, high-dimensional radio maps under diverse environmental dynamics. This work provides a foundational dataset and benchmark for future research in 3D environment-aware communication. The dataset is available at https://github.com/UNIC-Lab/UrbanRadio3D.

Drift-Adaptive Slicing-Based Resource Management for Cooperative ISAC Networks

Jun 25, 2025

Abstract: In this paper, we propose a novel drift-adaptive slicing-based resource management scheme for cooperative integrated sensing and communication (ISAC) networks. Particularly, we establish two network slices to provide sensing and communication services, respectively. In the large-timescale planning for the slices, we partition the sensing region of interest (RoI) of each mobile device and reserve network resources accordingly, facilitating low-complexity distance-based sensing target assignment in small timescales. To cope with the non-stationary spatial distributions of mobile devices and sensing targets, which can result in the drift in modeling the distributions and ineffective planning decisions, we construct digital twins (DTs) of the slices. In each DT, a drift-adaptive statistical model and an emulation function are developed for the spatial distributions in the corresponding slice, which facilitates closed-form decision-making and efficient validation of a planning decision, respectively. Numerical results show that the proposed drift-adaptive slicing-based resource management scheme can increase the service satisfaction ratio by up to 18% and reduce resource consumption by up to 13.1% when compared with benchmark schemes.

From Ground to Sky: Architectures, Applications, and Challenges Shaping Low-Altitude Wireless Networks

Jun 14, 2025

Abstract: In this article, we introduce a novel low-altitude wireless network (LAWN), which is a reconfigurable, three-dimensional (3D) layered architecture. In particular, the LAWN integrates connectivity, sensing, control, and computing across aerial and terrestrial nodes, enabling seamless operation in complex, dynamic, and mission-critical environments. Different from conventional aerial communication systems, LAWN's distinctive feature is its tight integration of functional planes in which multiple functionalities continually reshape themselves to operate safely and efficiently in the low-altitude sky. With the LAWN, we discuss several enabling technologies, such as integrated sensing and communication (ISAC), semantic communication, and fully-actuated control systems. Finally, we identify potential applications and key cross-layer challenges. This article offers a comprehensive roadmap for future research and development in the low-altitude airspace.

Directional Sparsity Based Statistical Channel Estimation for 6D Movable Antenna Communications

May 21, 2025

Abstract: Six-dimensional movable antenna (6DMA) is an innovative and transformative technology to improve wireless network capacity by adjusting the 3D positions and 3D rotations of antennas/surfaces (sub-arrays) based on the channel spatial distribution. For optimization of the antenna positions and rotations, the acquisition of statistical channel state information (CSI) is essential for 6DMA systems. In this paper, we unveil for the first time a new directional sparsity property of the 6DMA channels between the base station (BS) and the distributed users, where each user has significant channel gains only with a (small) subset of 6DMA position-rotation pairs, which can receive direct/reflected signals from the user. By exploiting this property, a covariance-based algorithm is proposed for estimating the statistical CSI in terms of the average channel power at a small number of 6DMA positions and rotations. Based on such limited channel power estimation, the average channel powers for all possible 6DMA positions and rotations in the BS movement region are reconstructed by further estimating the multi-path average power and direction-of-arrival (DOA) vectors of all users. Simulation results show that the proposed directional sparsity-based algorithm can achieve higher channel power estimation accuracy than existing benchmark schemes, while requiring a lower pilot overhead.
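The directional sparsity property itself (a user sees significant channel gain at only a small subset of candidate position-rotation pairs) can be made concrete with a toy thresholding sketch. This is not the covariance-based estimator from the abstract: the function name, the relative threshold, and the power values are assumptions chosen purely for illustration.

```python
def significant_pairs(avg_power, rel_threshold=0.1):
    """Return indices of candidate pairs whose average power is significant.

    avg_power: average channel power per candidate position-rotation pair.
    rel_threshold: assumed cutoff, expressed as a fraction of the peak power.
    """
    if not avg_power:
        return []
    peak = max(avg_power)
    if peak <= 0:
        return []
    return [i for i, p in enumerate(avg_power) if p >= rel_threshold * peak]


if __name__ == "__main__":
    # Hypothetical per-pair average powers for one user (arbitrary units).
    user_power = [0.01, 2.0, 0.05, 1.2, 0.0]
    print(significant_pairs(user_power))
```

With these made-up values only two of the five candidate pairs pass the cutoff, which is the kind of small support a sparsity-exploiting estimator would aim to recover from few pilots.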

Intelligent Polarforming Antenna Enhanced Sensing and Communication: Modeling and Optimization

May 12, 2025

Abstract: In this paper, we propose a novel intelligent polarforming antenna (IPA) to achieve cost-effective wireless sensing and communication. Specifically, the IPA can enable polarforming by adaptively controlling the antenna's polarization electrically as well as its position/rotation mechanically, so as to effectively exploit polarization and spatial diversity to reconfigure wireless channels for improving sensing and communication performance. We study an IPA-enhanced integrated sensing and communication (ISAC) system that utilizes user location sensing to facilitate communication between an IPA-equipped base station (BS) and IPA-equipped users. First, we model the IPA channel in terms of transceiver antenna polarforming vectors and antenna positions/rotations. We then propose a two-timescale ISAC protocol, where in the slow timescale, user localization is first performed, followed by the optimization of the BS antennas' positions and rotations based on the sensed user locations; subsequently, in the fast timescale, transceiver polarforming is adapted to cater to the instantaneous channel state information (CSI), with the optimized BS antennas' positions and rotations. We propose a new polarforming-based user localization method that uses a structured time-domain pattern of pilot-polarforming vectors to extract the common stable components in the IPA channel across different polarizations based on the parallel factor (PARAFAC) tensor model. Moreover, we maximize the achievable average sum-rate of users by jointly optimizing the fast-timescale transceiver polarforming, including phase shifts and amplitude variations, along with the slow-timescale antenna rotations and positions at the BS. Simulation results validate the effectiveness of the polarforming-based localization algorithm and demonstrate the performance advantages of polarforming, antenna placement, and their joint design.

Hybrid-Field 6D Movable Antenna for Terahertz Communications: Channel Modeling and Estimation

May 07, 2025

Abstract: In this work, we study a six-dimensional movable antenna (6DMA)-enhanced Terahertz (THz) network that supports a large number of users with a few antennas by controlling the three-dimensional (3D) positions and 3D rotations of antenna surfaces/subarrays at the base station (BS). However, the short wavelength of THz signals combined with a large 6DMA movement range extends the near-field region. As a result, a user can be in the far-field region relative to the antennas on one 6DMA surface, while simultaneously residing in the near-field region relative to other 6DMA surfaces. Moreover, 6DMA THz channel estimation suffers from increased computational complexity and pilot overhead due to uneven power distribution across the large number of candidate position-rotation pairs, as well as the limited number of radio frequency (RF) chains in THz bands. To address these issues, we propose an efficient hybrid-field generalized 6DMA THz channel model, which accounts for planar wave propagation within individual 6DMA surfaces and spherical waves among different 6DMA surfaces. Furthermore, we propose a low-overhead channel estimation algorithm that leverages directional sparsity to construct a complete channel map for all potential antenna position-rotation pairs. Numerical results show that the proposed hybrid-field channel model achieves a sum rate close to that of the ground-truth near-field channel model and confirm that the channel estimation method yields accurate results with low complexity.

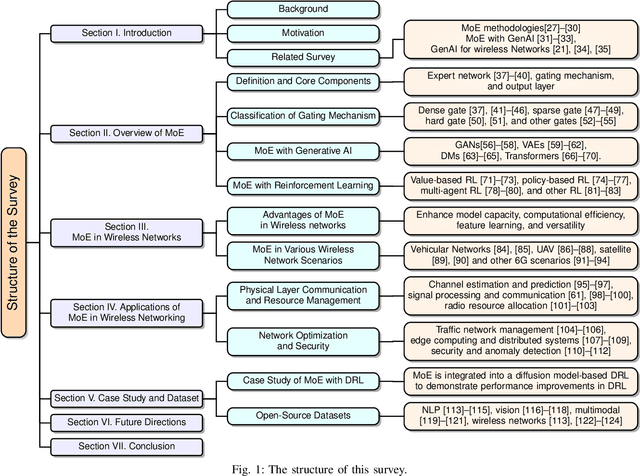

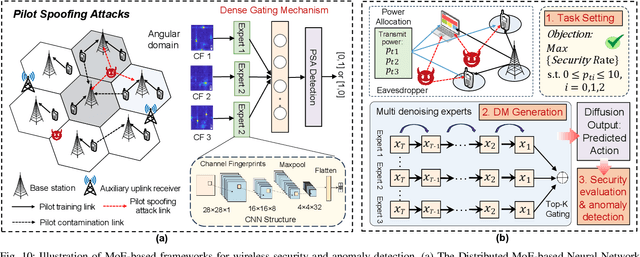

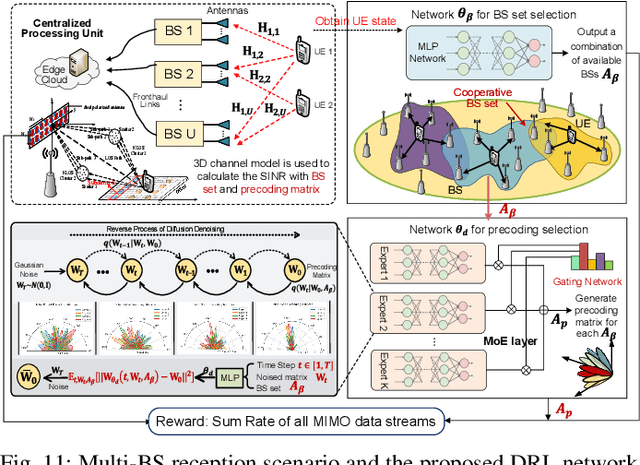

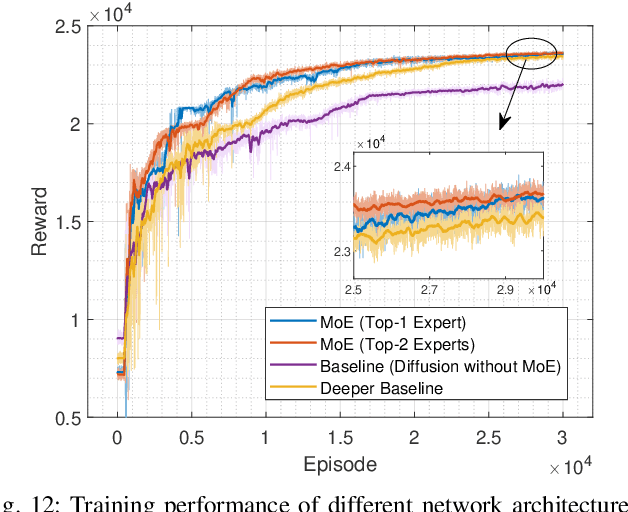

Decentralization of Generative AI via Mixture of Experts for Wireless Networks: A Comprehensive Survey

Apr 28, 2025

Abstract: Mixture of Experts (MoE) has emerged as a promising paradigm for scaling model capacity while preserving computational efficiency, particularly in large-scale machine learning architectures such as large language models (LLMs). Recent advances in MoE have facilitated its adoption in wireless networks to address the increasing complexity and heterogeneity of modern communication systems. This paper presents a comprehensive survey of the MoE framework in wireless networks, highlighting its potential in optimizing resource efficiency, improving scalability, and enhancing adaptability across diverse network tasks. We first introduce the fundamental concepts of MoE, including various gating mechanisms and the integration with generative AI (GenAI) and reinforcement learning (RL). Subsequently, we discuss the extensive applications of MoE across critical wireless communication scenarios, such as vehicular networks, unmanned aerial vehicles (UAVs), satellite communications, heterogeneous networks, integrated sensing and communication (ISAC), and mobile edge networks. Furthermore, key applications in channel prediction, physical layer signal processing, radio resource management, network optimization, and security are thoroughly examined. Additionally, we present a detailed overview of open-source datasets that are widely used in MoE-based models to support diverse machine learning tasks. Finally, this survey identifies crucial future research directions for MoE, emphasizing the importance of advanced training techniques, resource-aware gating strategies, and deeper integration with emerging 6G technologies.
