Yinqiu Liu

Agentic AI for Integrated Sensing and Communication: Analysis, Framework, and Case Study

Dec 17, 2025

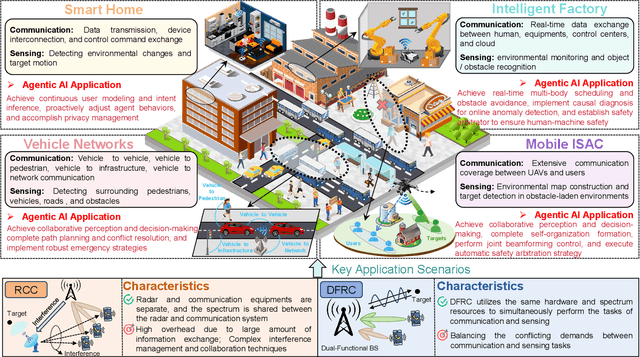

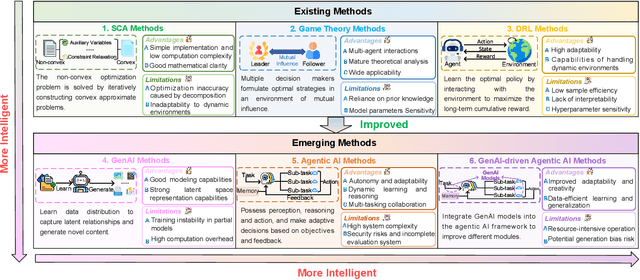

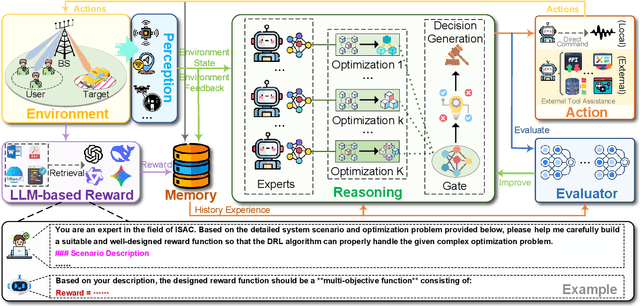

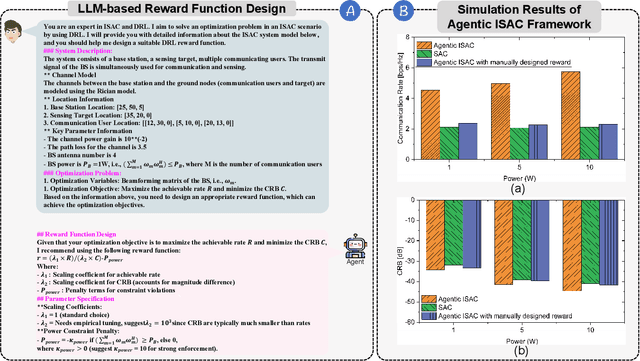

Abstract: Integrated sensing and communication (ISAC) has emerged as a key development direction in the sixth-generation (6G) era, providing essential support for the collaborative sensing and communication of future intelligent networks. However, as wireless environments become increasingly dynamic and complex, ISAC systems require more intelligent processing and more autonomous operation to maintain efficiency and adaptability. Agentic artificial intelligence (AI) offers a feasible solution to these challenges by enabling continuous perception-reasoning-action loops in dynamic environments, supporting intelligent, autonomous, and efficient operation of ISAC systems. As such, we delve into the application value and prospects of agentic AI in ISAC systems in this work. Firstly, we provide a comprehensive review of agentic AI and ISAC systems to demonstrate their key characteristics. Secondly, we show several common optimization approaches for ISAC systems and highlight the significant advantages of generative artificial intelligence (GenAI)-based agentic AI. Thirdly, we propose a novel agentic ISAC framework and present a case study to verify its superiority in optimizing ISAC performance. Finally, we clarify future research directions for agentic AI-based ISAC systems.

Context-Aware Semantic Communication for the Wireless Networks

May 29, 2025

Abstract: In next-generation wireless networks, supporting real-time applications such as augmented reality, autonomous driving, and immersive Metaverse services demands stringent constraints on bandwidth, latency, and reliability. Existing semantic communication (SemCom) approaches typically rely on static models, overlooking dynamic conditions and contextual cues vital for efficient transmission. To address these challenges, we propose CaSemCom, a context-aware SemCom framework that leverages a Large Language Model (LLM)-based gating mechanism and a Mixture of Experts (MoE) architecture to adaptively select and encode only high-impact semantic features across multiple data modalities. Our multimodal, multi-user case study demonstrates that CaSemCom significantly improves reconstructed image fidelity while reducing bandwidth usage, outperforming single-agent deep reinforcement learning (DRL) methods and traditional baselines in convergence speed, semantic accuracy, and retransmission overhead.

LAMeTA: Intent-Aware Agentic Network Optimization via a Large AI Model-Empowered Two-Stage Approach

May 18, 2025

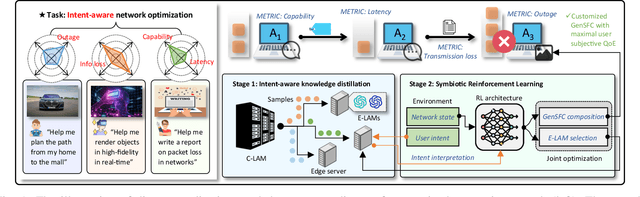

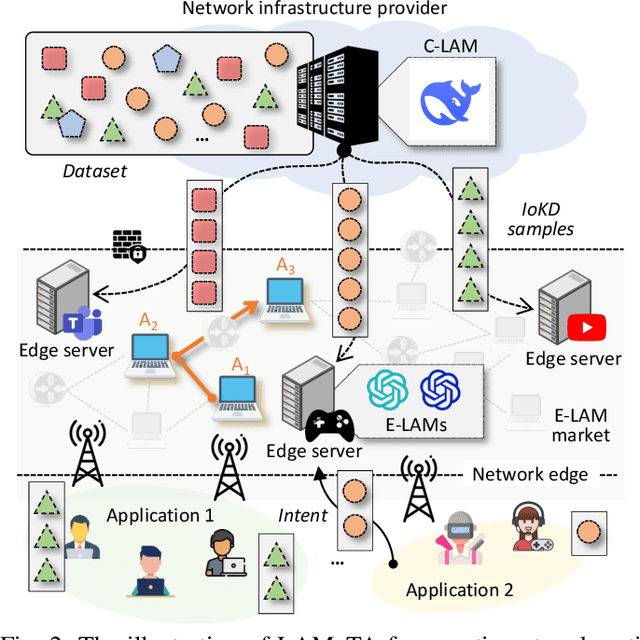

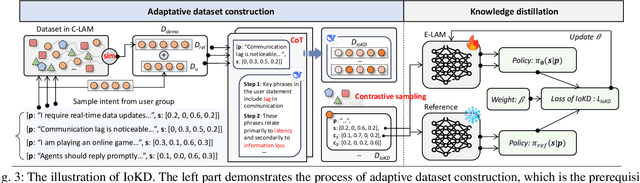

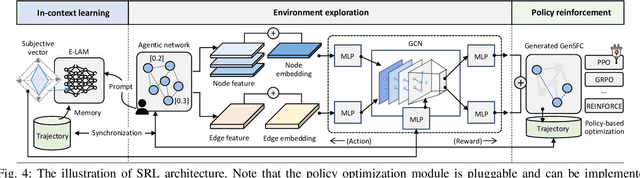

Abstract: Nowadays, Generative AI (GenAI) reshapes numerous domains by enabling machines to create content across modalities. As GenAI evolves into autonomous agents capable of reasoning, collaboration, and interaction, they are increasingly deployed on network infrastructures to serve humans automatically. This emerging paradigm, known as the agentic network, presents new optimization challenges due to the demand to incorporate subjective intents of human users expressed in natural language. Traditional generic Deep Reinforcement Learning (DRL) struggles to capture intent semantics and adjust policies dynamically, thus leading to suboptimality. In this paper, we present LAMeTA, a Large AI Model (LAM)-empowered Two-stage Approach for intent-aware agentic network optimization. First, we propose Intent-oriented Knowledge Distillation (IoKD), which efficiently distills intent-understanding capabilities from resource-intensive LAMs to lightweight edge LAMs (E-LAMs) to serve end users. Second, we develop Symbiotic Reinforcement Learning (SRL), integrating E-LAMs with a policy-based DRL framework. In SRL, E-LAMs translate natural language user intents into structured preference vectors that guide both state representation and reward design. The DRL, in turn, optimizes the generative service function chain composition and E-LAM selection based on real-time network conditions, thus optimizing the subjective Quality-of-Experience (QoE). Extensive experiments conducted in an agentic network with 81 agents demonstrate that IoKD reduces mean squared error in intent prediction by up to 22.5%, while SRL outperforms conventional generic DRL by up to 23.5% in maximizing intent-aware QoE.
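
As a rough illustration of how a structured preference vector could guide reward design, the sketch below scores a network state as a preference-weighted sum of normalized service metrics. This is only one possible reading of the abstract, not the SRL reward used in the paper; the metric names, the normalization step, and the function `intent_aware_reward` are all hypothetical.

```python
def normalize(preferences):
    """Scale non-negative preference weights so that they sum to 1."""
    total = sum(preferences.values())
    if total <= 0:
        raise ValueError("preferences must contain at least one positive weight")
    return {name: weight / total for name, weight in preferences.items()}


def intent_aware_reward(preferences, metrics):
    """Preference-weighted sum of metric scores (hypothetical reward shape).

    `preferences` stands in for the vector an E-LAM might emit for a user
    intent; `metrics` holds per-step scores in [0, 1], higher is better.
    """
    weights = normalize(preferences)
    return sum(weight * metrics[name] for name, weight in weights.items())


# Assumed example: a user who cares three times more about latency than quality.
prefs = {"latency": 3.0, "quality": 1.0}
metrics = {"latency": 0.8, "quality": 0.4}
reward = intent_aware_reward(prefs, metrics)
```

Under these assumptions, changing the preference vector changes the reward landscape the DRL agent sees without retraining the component that interprets the intent.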

LLM-guided DRL for Multi-tier LEO Satellite Networks with Hybrid FSO/RF Links

May 17, 2025

Abstract: Despite significant advancements in terrestrial networks, inherent limitations persist in providing reliable coverage to remote areas and maintaining resilience during natural disasters. Multi-tier networks with low Earth orbit (LEO) satellites and high-altitude platforms (HAPs) offer promising solutions, but face challenges from high mobility and dynamic channel conditions that cause unstable connections and frequent handovers. In this paper, we design a three-tier network architecture that integrates LEO satellites, HAPs, and ground terminals with hybrid free-space optical (FSO) and radio frequency (RF) links to maximize coverage while maintaining connectivity reliability. This hybrid approach leverages the high bandwidth of FSO for satellite-to-HAP links and the weather resilience of RF for HAP-to-ground links. We formulate a joint optimization problem to simultaneously balance downlink transmission rate and handover frequency by optimizing network configuration and satellite handover decisions. The problem is highly dynamic and non-convex with time-coupled constraints. To address these challenges, we propose a novel large language model (LLM)-guided truncated quantile critics algorithm with dynamic action masking (LTQC-DAM) that utilizes dynamic action masking to eliminate unnecessary exploration and employs LLMs to adaptively tune hyperparameters. Simulation results demonstrate that the proposed LTQC-DAM algorithm outperforms baseline algorithms in terms of convergence, downlink transmission rate, and handover frequency. We also reveal that, compared to other state-of-the-art LLMs, DeepSeek delivers the best performance through gradual, contextually aware parameter adjustments.
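
The general idea behind dynamic action masking can be sketched in a few lines: infeasible actions are given zero probability before the policy samples, so the agent never spends exploration on them. The code below is a generic illustration, not the LTQC-DAM implementation; the elevation-angle visibility rule and the names `visible_satellites` and `masked_softmax` are assumptions made for the example.

```python
import math


def visible_satellites(elevations_deg, min_elevation_deg=10.0):
    """Hypothetical masking rule: a satellite is selectable only above a minimum elevation."""
    return [elevation >= min_elevation_deg for elevation in elevations_deg]


def masked_softmax(logits, mask):
    """Return a distribution over actions that puts zero mass on masked-out actions."""
    if len(logits) != len(mask):
        raise ValueError("logits and mask must have the same length")
    if not any(mask):
        raise ValueError("at least one action must be allowed")
    # Subtract the largest allowed logit for numerical stability.
    peak = max(logit for logit, allowed in zip(logits, mask) if allowed)
    weights = [math.exp(logit - peak) if allowed else 0.0
               for logit, allowed in zip(logits, mask)]
    total = sum(weights)
    return [weight / total for weight in weights]


# Assumed example: four candidate satellites, the second is below the horizon threshold.
logits = [2.0, 0.5, 1.0, -1.0]
mask = visible_satellites([35.0, 4.0, 12.0, 60.0])
probs = masked_softmax(logits, mask)
```

Because the mask is recomputed at every step from the current geometry, the set of admissible handover targets changes over time, which is what makes the masking "dynamic" in this sketch.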

A Trustworthy Multi-LLM Network: Challenges, Solutions, and A Use Case

May 06, 2025

Abstract: Large Language Models (LLMs) demonstrate strong potential across a variety of tasks in communications and networking due to their advanced reasoning capabilities. However, because different LLMs have different model structures and are trained using distinct corpora and methods, they may offer varying optimization strategies for the same network issues. Moreover, the limitations of an individual LLM's training data, aggravated by the potential maliciousness of its hosting device, can result in responses with low confidence or even bias. To address these challenges, we propose a blockchain-enabled collaborative framework that connects multiple LLMs into a Trustworthy Multi-LLM Network (MultiLLMN). This architecture enables the cooperative evaluation and selection of the most reliable and high-quality responses to complex network optimization problems. Specifically, we begin by reviewing related work and highlighting the limitations of existing LLMs in collaboration and trust, emphasizing the need for trustworthiness in LLM-based systems. We then introduce the workflow and design of the proposed Trustworthy MultiLLMN framework. Given the severity of False Base Station (FBS) attacks in B5G and 6G communication systems and the difficulty of addressing such threats through traditional modeling techniques, we present FBS defense as a case study to empirically validate the effectiveness of our approach. Finally, we outline promising future research directions in this emerging area.

Toward Agentic AI: Generative Information Retrieval Inspired Intelligent Communications and Networking

Feb 24, 2025

Abstract: The increasing complexity and scale of modern telecommunications networks demand intelligent automation to enhance efficiency, adaptability, and resilience. Agentic AI has emerged as a key paradigm for intelligent communications and networking, enabling AI-driven agents to perceive, reason, decide, and act within dynamic networking environments. However, effective decision-making in telecom applications, such as network planning, management, and resource allocation, requires integrating retrieval mechanisms that support multi-hop reasoning, historical cross-referencing, and compliance with evolving 3GPP standards. This article presents a forward-looking perspective on generative information retrieval-inspired intelligent communications and networking, emphasizing the role of knowledge acquisition, processing, and retrieval in agentic AI for telecom systems. We first provide a comprehensive review of generative information retrieval strategies, including traditional retrieval, hybrid retrieval, semantic retrieval, knowledge-based retrieval, and agentic contextual retrieval. We then analyze their advantages, limitations, and suitability for various networking scenarios. Next, we survey their applications in communications and networking. Additionally, we introduce an agentic contextual retrieval framework to enhance telecom-specific planning by integrating multi-source retrieval, structured reasoning, and self-reflective validation. Experimental results demonstrate that our framework significantly improves answer accuracy, explanation consistency, and retrieval efficiency compared to traditional and semantic retrieval methods. Finally, we outline future research directions.

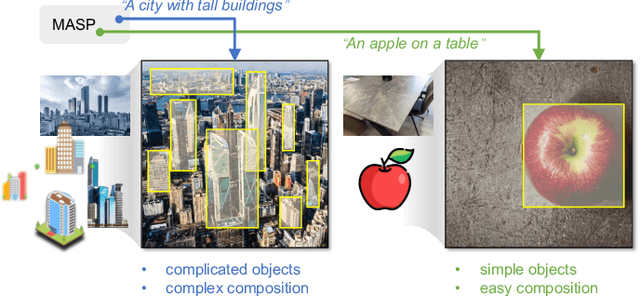

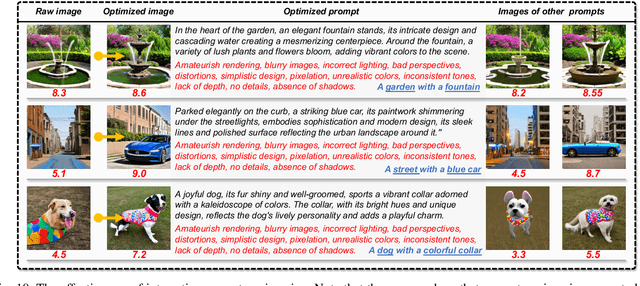

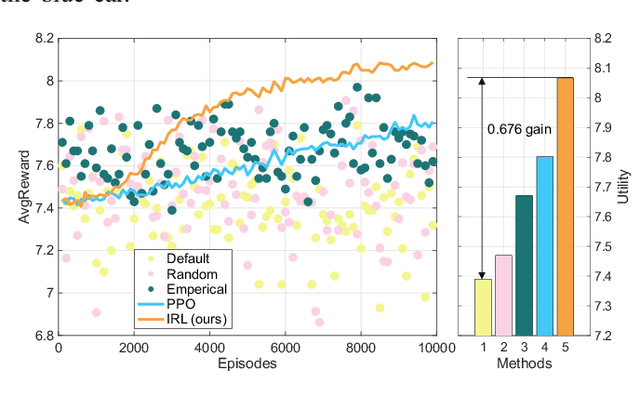

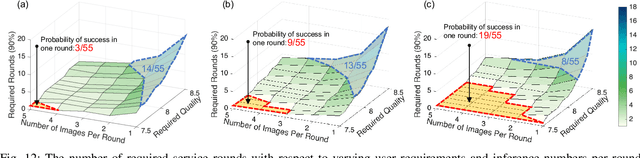

Intelligent Mobile AI-Generated Content Services via Interactive Prompt Engineering and Dynamic Service Provisioning

Feb 17, 2025

Abstract: Due to the massive computational demands of large generative models, AI-Generated Content (AIGC) can organize collaborative Mobile AIGC Service Providers (MASPs) at network edges to provide ubiquitous and customized content generation for resource-constrained users. However, such a paradigm faces two significant challenges: 1) raw prompts (i.e., the task descriptions from users) often lead to poor generation quality due to users' lack of experience with specific AIGC models, and 2) static service provisioning fails to efficiently utilize computational and communication resources given the heterogeneity of AIGC tasks. To address these challenges, we propose an intelligent mobile AIGC service scheme. Firstly, we develop an interactive prompt engineering mechanism that leverages a Large Language Model (LLM) to generate customized prompt corpora and employs Inverse Reinforcement Learning (IRL) for policy imitation through small-scale expert demonstrations. Secondly, we formulate a dynamic mobile AIGC service provisioning problem that jointly optimizes the number of inference trials and transmission power allocation. Then, we propose the Diffusion-Enhanced Deep Deterministic Policy Gradient (D3PG) algorithm to solve the problem. By incorporating the diffusion process into the Deep Reinforcement Learning (DRL) architecture, the environment exploration capability can be improved, thus adapting to varying mobile AIGC scenarios. Extensive experimental results demonstrate that our prompt engineering approach improves single-round generation success probability by 6.3 times, while D3PG increases the user service experience by 67.8% compared to baseline DRL approaches.

Generative AI based Secure Wireless Sensing for ISAC Networks

Aug 21, 2024

Abstract: Integrated sensing and communications (ISAC) is expected to be a key technology for 6G, and channel state information (CSI) based sensing is a key component of ISAC. However, current research on ISAC focuses mainly on improving sensing performance, overlooking security issues, particularly the unauthorized sensing of users. In this paper, we propose a secure sensing system (DFSS) based on two distinct diffusion models. Specifically, we first propose a discrete conditional diffusion model to generate graphs with nodes and edges, guiding the ISAC system to appropriately activate wireless links and nodes, which ensures the sensing performance while minimizing the operation cost. Using the activated links and nodes, DFSS then employs the continuous conditional diffusion model to generate safeguarding signals, which are then modulated onto the pilot at the transmitter to mask fluctuations caused by user activities. As such, only ISAC devices authorized with the safeguarding signals can extract the true CSI for sensing, while unauthorized devices are unable to achieve the same sensing. Experimental results demonstrate that DFSS can reduce the activity recognition accuracy of unauthorized devices by approximately 70%, effectively shielding users from unauthorized surveillance.

ICST-DNET: An Interpretable Causal Spatio-Temporal Diffusion Network for Traffic Speed Prediction

Apr 22, 2024

Abstract: Traffic speed prediction is significant for intelligent navigation and congestion alleviation. However, making accurate predictions is challenging due to three factors: 1) traffic diffusion, i.e., the spatial and temporal causality existing between the traffic conditions of multiple neighboring roads, 2) the poor interpretability of traffic data with complicated spatio-temporal correlations, and 3) the latent pattern of traffic speed fluctuations over time, such as morning and evening rush hours. Jointly considering these factors, in this paper, we present a novel architecture for traffic speed prediction, called Interpretable Causal Spatio-Temporal Diffusion Network (ICST-DNET). Specifically, ICST-DNET consists of three parts, namely the Spatio-Temporal Causality Learning (STCL), Causal Graph Generation (CGG), and Speed Fluctuation Pattern Recognition (SFPR) modules. First, to model the traffic diffusion within road networks, an STCL module is proposed to capture both the temporal causality on each individual road and the spatial causality in each road pair. The CGG module is then developed based on STCL to enhance the interpretability of the traffic diffusion procedure from the temporal and spatial perspectives. Specifically, a time causality matrix is generated to explain the temporal causality between each road's historical and future traffic conditions. For spatial causality, we utilize causal graphs to visualize the diffusion process in road pairs. Finally, to adapt to traffic speed fluctuations in different scenarios, we design a personalized SFPR module to select the historical timesteps with strong influences for learning the pattern of traffic speed fluctuations. Extensive experimental results prove that ICST-DNET can outperform all existing baselines, as evidenced by the higher prediction accuracy, ability to explain causality, and adaptability to different scenarios.

Interactive Generative AI Agents for Satellite Networks through a Mixture of Experts Transmission

Apr 14, 2024

Abstract: In response to the needs of 6G global communications, satellite communication networks have emerged as a key solution. However, the large-scale development of satellite communication networks is constrained by complex system models, which are challenging to formulate for massive numbers of users. Moreover, transmission interference between satellites and users seriously affects communication performance. To solve these problems, this paper develops generative artificial intelligence (AI) agents for model formulation and then applies a mixture of experts (MoE) approach to design transmission strategies. Specifically, we leverage large language models (LLMs) to build an interactive modeling paradigm and utilize retrieval-augmented generation (RAG) to extract satellite expert knowledge that supports mathematical modeling. Afterward, by integrating the expertise of multiple specialized components, we propose an MoE-proximal policy optimization (PPO) approach to solve the formulated problem. Each expert optimizes the variables at which it excels through specialized training of its own network, and the gating network then aggregates the experts' outputs to perform joint optimization. The simulation results validate the accuracy and effectiveness of employing a generative agent for problem formulation. Furthermore, the superiority of the proposed MoE-PPO approach over other benchmarks is confirmed in solving the formulated problem. The adaptability of MoE-PPO to various customized modeling problems has also been demonstrated.
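
The aggregation step of a mixture of experts can be illustrated with a minimal sketch: a gating network produces one score per expert, the scores are turned into weights with a softmax, and the expert outputs are combined as a weighted sum. This shows the generic MoE pattern only and makes no claim about the MoE-PPO architecture in the paper; the function names and the toy two-expert example are assumptions.

```python
import math


def softmax(scores):
    """Convert raw gate scores into non-negative weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def moe_aggregate(expert_outputs, gate_scores):
    """Combine per-expert action vectors using softmax gate weights."""
    if len(expert_outputs) != len(gate_scores):
        raise ValueError("need exactly one gate score per expert")
    weights = softmax(gate_scores)
    dim = len(expert_outputs[0])
    return [sum(weight * output[i] for weight, output in zip(weights, expert_outputs))
            for i in range(dim)]


# Assumed example: two experts, each proposing a 2-dimensional action.
expert_outputs = [[1.0, 0.0], [0.0, 1.0]]
balanced = moe_aggregate(expert_outputs, [0.0, 0.0])    # equal trust in both experts
dominated = moe_aggregate(expert_outputs, [100.0, 0.0])  # gate strongly prefers expert 0
```

With equal gate scores the result is the plain average of the experts, while a strongly skewed gate effectively selects a single expert.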
