Dinh Thai Hoang

Energy-Efficient Learning-Based Beamforming for ISAC-Enabled V2X Networks

Aug 27, 2025

Abstract:This work proposes an energy-efficient, learning-based beamforming scheme for integrated sensing and communication (ISAC)-enabled V2X networks. Specifically, we first model the dynamic and uncertain nature of V2X environments as a Markov Decision Process. This formulation allows the roadside unit to generate beamforming decisions based solely on current sensing information, thereby eliminating the need for frequent pilot transmissions and extensive channel state information acquisition. We then develop a deep reinforcement learning (DRL) algorithm to jointly optimize beamforming and power allocation, ensuring both communication throughput and sensing accuracy in highly dynamic scenarios. To address the high energy demands of conventional learning-based schemes, we embed spiking neural networks (SNNs) into the DRL framework. Leveraging their event-driven and sparsely activated architecture, SNNs significantly enhance energy efficiency while maintaining robust performance. Simulation results confirm that the proposed method achieves substantial energy savings and superior communication performance, demonstrating its potential to support green and sustainable connectivity in future V2X systems.

Privacy-Preserving Driver Drowsiness Detection with Spatial Self-Attention and Federated Learning

Aug 01, 2025

Abstract:Driver drowsiness is one of the main causes of road accidents and is recognized as a leading contributor to traffic-related fatalities. However, detecting drowsiness accurately remains a challenging task, especially in real-world settings where facial data from different individuals is decentralized and highly diverse. In this paper, we propose a novel framework for drowsiness detection that is designed to work effectively with heterogeneous and decentralized data. Our approach develops a new Spatial Self-Attention (SSA) mechanism integrated with a Long Short-Term Memory (LSTM) network to better extract key facial features and improve detection performance. To support federated learning, we employ a Gradient Similarity Comparison (GSC) that selects the most relevant trained models from different operators before aggregation. This improves the accuracy and robustness of the global model while preserving user privacy. We also develop a customized tool that automatically processes video data by extracting frames, detecting and cropping faces, and applying data augmentation techniques such as rotation, flipping, brightness adjustment, and zooming. Experimental results show that our framework achieves a detection accuracy of 89.9% in federated learning settings, outperforming existing methods under various deployment scenarios. The results demonstrate the effectiveness of our approach in handling real-world data variability and highlight its potential for deployment in intelligent transportation systems to enhance road safety through early and reliable drowsiness detection.
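
The abstract does not say how the Gradient Similarity Comparison scores client models. The sketch below is only one plausible reading, assuming cosine similarity between each client's update and the mean update, with a hypothetical `threshold` parameter; the function names are illustrative and not taken from the paper.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def select_and_aggregate(updates, threshold=0.0):
    """Average only the updates whose similarity to the mean update exceeds `threshold`.

    Assumption: similarity is measured against the plain mean of all updates.
    Returns the aggregated update and the number of updates that were kept.
    """
    dim = len(updates[0])
    mean = [sum(u[i] for u in updates) / len(updates) for i in range(dim)]
    kept = [u for u in updates if cosine_similarity(u, mean) > threshold]
    if not kept:  # fall back to plain averaging if nothing passes the filter
        kept = updates
    aggregated = [sum(u[i] for u in kept) / len(kept) for i in range(dim)]
    return aggregated, len(kept)


# Two clients roughly agree; the third points the opposite way and is dropped.
client_updates = [[1.0, 0.0], [0.9, 0.1], [-1.0, 0.0]]
aggregated_update, num_kept = select_and_aggregate(client_updates)
```

Filtering before averaging keeps a single divergent update from pulling the global model away from the direction most clients agree on, which is the effect the abstract attributes to GSC.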

Towards Autonomous Riding: A Review of Perception, Planning, and Control in Intelligent Two-Wheelers

Jul 16, 2025

Abstract:The rapid adoption of micromobility solutions, particularly two-wheeled vehicles like e-scooters and e-bikes, has created an urgent need for reliable autonomous riding (AR) technologies. While autonomous driving (AD) systems have matured significantly, AR presents unique challenges due to the inherent instability of two-wheeled platforms, limited size, limited power, and unpredictable environments, which pose very serious concerns about road users' safety. This review provides a comprehensive analysis of AR systems by systematically examining their core components, perception, planning, and control, through the lens of AD technologies. We identify critical gaps in current AR research, including a lack of comprehensive perception systems for various AR tasks, limited industry and government support for such developments, and insufficient attention from the research community. The review analyses the gaps of AR from the perspective of AD to highlight promising research directions, such as multimodal sensor techniques for lightweight platforms and edge deep learning architectures. By synthesising insights from AD research with the specific requirements of AR, this review aims to accelerate the development of safe, efficient, and scalable autonomous riding systems for future urban mobility.

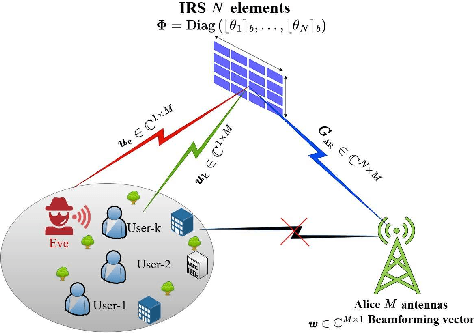

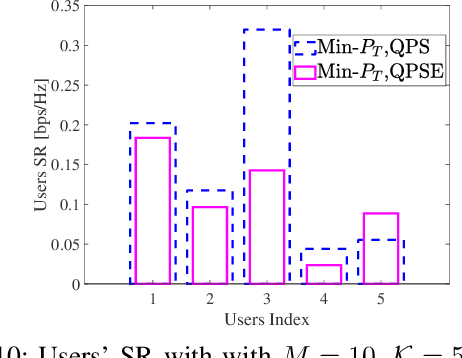

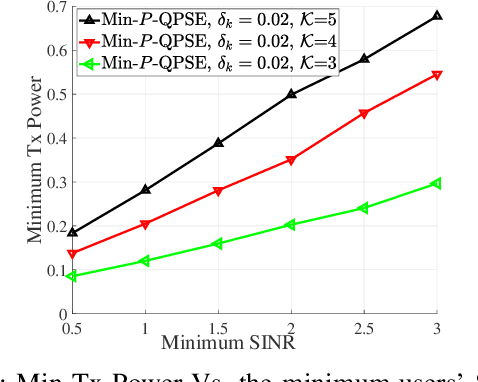

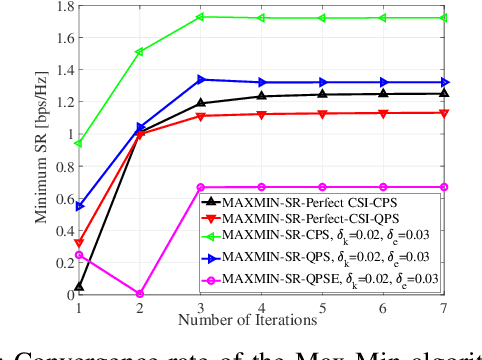

"Security for Everyone" in Finite Blocklength IRS-aided Systems With Perfect and Imperfect CSI

Apr 07, 2025

Abstract:Provisioning secrecy for all users, given the heterogeneity in their channel conditions, locations, and the unknown location of the attacker/eavesdropper, is challenging and not always feasible. The problem is even more difficult under finite blocklength constraints that are popular in ultra-reliable low-latency communication (URLLC) and massive machine-type communications (mMTC). This work takes the first step to guarantee secrecy for all URLLC/mMTC users in the finite blocklength regime (FBR) where intelligent reflecting surfaces (IRS) are used to enhance legitimate users' reception and thwart the potential eavesdropper (Eve) from intercepting. To that end, we aim to maximize the minimum secrecy rate (SR) among all users by jointly optimizing the transmitter's beamforming and IRS's passive reflective elements (PREs) under the FBR latency constraints. The resulting optimization problem is non-convex and even more complicated under imperfect channel state information (CSI). To tackle it, we linearize the objective function, and decompose the problem into sequential subproblems. When perfect CSI is not available, we use the successive convex approximation (SCA) approach to transform imperfect CSI-related semi-infinite constraints into finite linear matrix inequalities (LMI).

Secure Communications for All Users in Low-Resolution IRS-aided Systems Under Imperfect and Unknown CSI

Apr 07, 2025

Abstract:Provisioning secrecy for all users, given the heterogeneity and uncertainty of their channel conditions, locations, and the unknown location of the attacker/eavesdropper, is challenging and not always feasible. This work takes the first step to guarantee secrecy for all users where a low-resolution intelligent reflecting surface (IRS) is used to enhance legitimate users' reception and thwart the potential eavesdropper (Eve) from intercepting. In real-life scenarios, due to hardware limitations of the IRS's passive reflective elements (PREs), the use of a full-resolution (continuous) phase shift (CPS) is impractical. In this paper, we thus consider a more practical case where the phase shift (PS) is modeled by a low-resolution (quantized) phase shift (QPS) while addressing the phase shift error (PSE) induced by the imperfect channel state information (CSI). To that end, we aim to maximize the minimum secrecy rate (SR) among all users by jointly optimizing the transmitter's beamforming vector and the IRS's PREs under perfect/imperfect/unknown CSI. The resulting optimization problem is non-convex and even more complicated under imperfect/unknown CSI.
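
To illustrate what a low-resolution (quantized) phase shift means in practice, the sketch below maps a continuous phase to the nearest of `2**bits` uniformly spaced levels on the unit circle. The uniform level spacing and the function names are assumptions made for illustration; the abstract does not give the paper's quantization rule. The rounding error computed here is also distinct from the CSI-induced phase shift error (PSE) the abstract refers to.

```python
import math


def quantize_phase(theta, bits):
    """Map a phase in radians to the nearest of 2**bits uniform levels in [0, 2*pi)."""
    levels = 2 ** bits
    step = 2 * math.pi / levels
    index = round((theta % (2 * math.pi)) / step) % levels  # wrap 2*pi back to level 0
    return index * step


def quantization_error(theta, bits):
    """Wrapped difference between the continuous and quantized phase, in (-pi, pi]."""
    diff = (theta - quantize_phase(theta, bits)) % (2 * math.pi)
    if diff > math.pi:
        diff -= 2 * math.pi
    return diff


# With 1 bit the element can only apply a phase of 0 or pi.
one_bit_low = quantize_phase(0.1, bits=1)
one_bit_high = quantize_phase(3.0, bits=1)
```

Under this uniform scheme the rounding error never exceeds half a step, that is `pi / 2**bits`, so each extra bit of resolution halves the worst-case error.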

Right Reward Right Time for Federated Learning

Mar 10, 2025

Abstract:Critical learning periods (CLPs) in federated learning (FL) refer to early stages during which low-quality contributions (e.g., sparse training data availability) can permanently impair the learning performance of the global model owned by the model owner (i.e., the cloud server). However, strategies to motivate clients with high-quality contributions to join the FL training process and share trained model updates during CLPs remain underexplored. Additionally, existing incentive mechanisms in FL treat all training periods equally, which consequently fails to motivate clients to participate early. Compounding this challenge is the cloud's limited knowledge of client training capabilities due to privacy regulations, leading to information asymmetry. Therefore, in this article, we propose a time-aware incentive mechanism, called Right Reward Right Time (R3T), to encourage client involvement, especially during CLPs, to maximize the utility of the cloud in FL. Specifically, the cloud utility function captures the trade-off between the achieved model performance and payments allocated for clients' contributions, while accounting for clients' time and system capabilities, efforts, joining time, and rewards. Then, we analytically derive the optimal contract for the cloud and devise a CLP-aware mechanism to incentivize early participation and efforts while maximizing cloud utility, even under information asymmetry. By providing the right reward at the right time, our approach can attract the highest-quality contributions during CLPs. Simulation and proof-of-concept studies show that R3T increases cloud utility and is more economically effective than benchmarks. Notably, our proof-of-concept results show up to a 47.6% reduction in the total number of clients and up to a 300% improvement in convergence time while reaching competitive test accuracies compared with incentive mechanism benchmarks.

End-to-End Human Pose Reconstruction from Wearable Sensors for 6G Extended Reality Systems

Mar 06, 2025

Abstract:Full 3D human pose reconstruction is a critical enabler for extended reality (XR) applications in future sixth generation (6G) networks, supporting immersive interactions in gaming, virtual meetings, and remote collaboration. However, achieving accurate pose reconstruction over wireless networks remains challenging due to channel impairments, bit errors, and quantization effects. Existing approaches often assume error-free transmission in indoor settings, limiting their applicability to real-world scenarios. To address these challenges, we propose a novel deep learning-based framework for human pose reconstruction over orthogonal frequency-division multiplexing (OFDM) systems. The framework introduces a two-stage deep learning receiver: the first stage jointly estimates the wireless channel and decodes OFDM symbols, and the second stage maps the received sensor signals to full 3D body poses. Simulation results demonstrate that the proposed neural receiver reduces the bit error rate (BER), achieving a 5 dB gain at $10^{-4}$ BER compared to the baseline method that employs separate signal detection steps, i.e., least squares channel estimation and linear minimum mean square error equalization. Additionally, our empirical findings show that 8-bit quantization is sufficient for accurate pose reconstruction, achieving a mean squared error of $5\times10^{-4}$ for reconstructed sensor signals, and reducing joint angular error by 37% for the reconstructed human poses compared to the baseline.
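
As a rough illustration of the quantization step mentioned in the abstract, the sketch below applies uniform 8-bit quantization to a synthetic signal and measures the mean squared error introduced by quantization alone. The signal range of [-1, 1], the uniform quantizer, and the sine test signal are all assumptions; the paper's reported MSE also reflects channel effects and the neural receiver, so this is not expected to reproduce that figure.

```python
import math


def quantize(values, bits=8, lo=-1.0, hi=1.0):
    """Uniformly quantize each value to one of 2**bits levels spanning [lo, hi]."""
    levels = 2 ** bits
    step = (hi - lo) / (levels - 1)
    reconstructed = []
    for v in values:
        clipped = min(max(v, lo), hi)          # saturate out-of-range samples
        code = round((clipped - lo) / step)    # integer code in [0, levels - 1]
        reconstructed.append(lo + code * step)
    return reconstructed


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Synthetic stand-in for a normalized sensor reading (hypothetical data).
signal = [math.sin(0.1 * k) for k in range(100)]
quantization_mse = mse(signal, quantize(signal, bits=8))
```

For in-range samples the per-sample error is at most half a quantization step, so the quantization-only MSE is bounded by `(step / 2) ** 2`, which for 8 bits over [-1, 1] is about `1.5e-5`.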

Multiple-Input Variational Auto-Encoder for Anomaly Detection in Heterogeneous Data

Jan 14, 2025

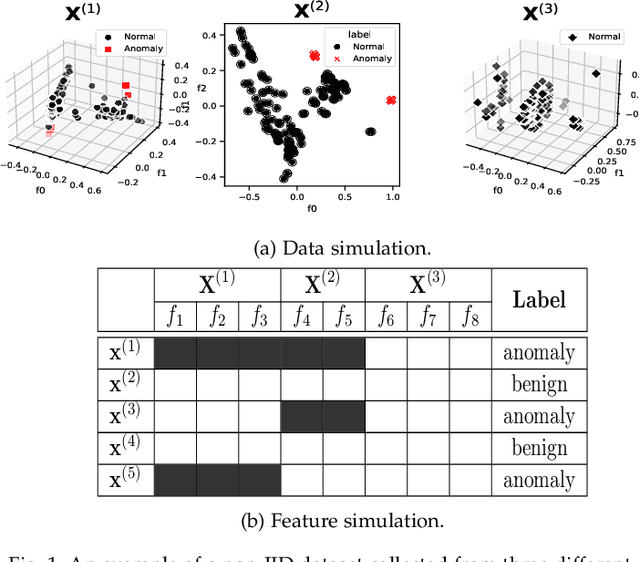

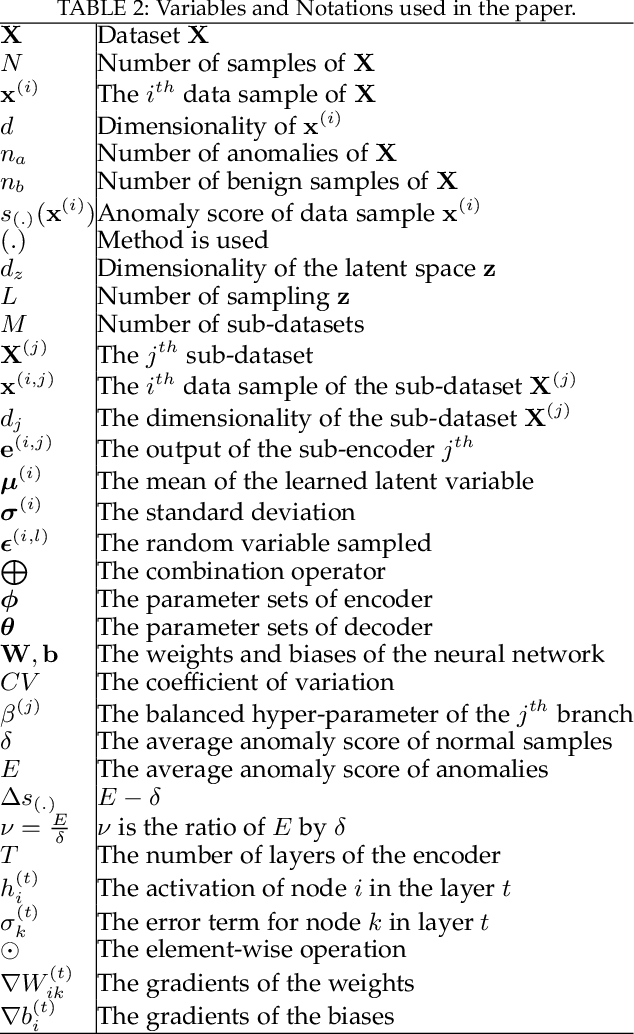

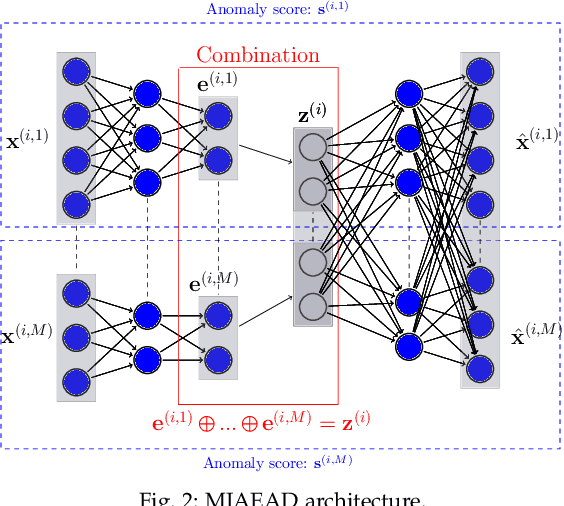

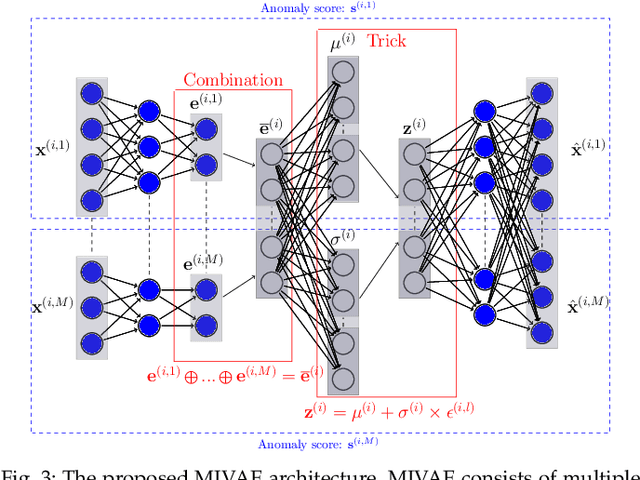

Abstract:Anomaly detection (AD) plays a pivotal role in AI applications, e.g., in classification, and intrusion/threat detection in cybersecurity. However, most existing methods face challenges of heterogeneity amongst feature subsets posed by non-independent and identically distributed (non-IID) data. We propose a novel neural network model called Multiple-Input Auto-Encoder for AD (MIAEAD) to address this. MIAEAD assigns an anomaly score to each feature subset of a data sample to indicate its likelihood of being an anomaly. This is done by using the reconstruction error of its sub-encoder as the anomaly score. All sub-encoders are then simultaneously trained using unsupervised learning to determine the anomaly scores of feature subsets. The final AUC of MIAEAD is calculated for each sub-dataset, and the maximum AUC obtained among the sub-datasets is selected. To leverage generative models' ability to model the distribution of normal data for identifying anomalies, we develop a novel neural network architecture/model called Multiple-Input Variational Auto-Encoder (MIVAE). MIVAE can process feature subsets through its sub-encoders before learning the distribution of normal data in the latent space. This allows MIVAE to identify anomalies that deviate from the learned distribution. We theoretically prove that the difference in the average anomaly score between normal samples and anomalies obtained by the proposed MIVAE is greater than that of the Variational Auto-Encoder (VAEAD), resulting in a higher AUC for MIVAE. Extensive experiments on eight real-world anomaly datasets demonstrate the superior performance of MIAEAD and MIVAE over conventional methods and the state-of-the-art unsupervised models, by up to 6% in terms of AUC score. Alternatively, MIAEAD and MIVAE have a high AUC when applied to feature subsets with low heterogeneity based on the coefficient of variation (CV) score.
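
The scoring idea described above (reconstruction error as the anomaly score, an AUC per sub-dataset, then the maximum AUC) can be sketched without any neural network. In the sketch below the reconstructions are taken as given, mean squared error is assumed as the error measure, and the scores are hypothetical numbers chosen for illustration; none of the function names come from the paper.

```python
def reconstruction_error(x, x_hat):
    """Mean squared error between a feature subset and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def auc(scores, labels):
    """Probability that a random anomaly (label 1) outscores a random normal (label 0).

    Ties count as half a win, which matches the area under the ROC curve.
    """
    anomalies = [s for s, l in zip(scores, labels) if l == 1]
    normals = [s for s, l in zip(scores, labels) if l == 0]
    wins = 0.0
    for a in anomalies:
        for n in normals:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(anomalies) * len(normals))


# Hypothetical anomaly scores for the same four samples on two feature subsets.
labels = [0, 0, 1, 1]
scores_per_subset = [
    [0.10, 0.20, 0.90, 0.15],  # subset A: one anomaly is scored below a normal sample
    [0.10, 0.20, 0.80, 0.70],  # subset B: both anomalies are scored above all normals
]
best_auc = max(auc(scores, labels) for scores in scores_per_subset)
```

Here subset A gives an AUC of 0.75 and subset B gives 1.0, so the maximum reported across sub-datasets is 1.0.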

Energy-Efficient and Intelligent ISAC in V2X Networks with Spiking Neural Networks-Driven DRL

Jan 02, 2025

Abstract:Integrated sensing and communication (ISAC) has emerged as a pivotal technology for enabling vehicle-to-everything (V2X) connectivity, mobility, and security. However, designing efficient beamforming schemes to achieve accurate sensing and enhance communication performance in the dynamic and uncertain environments of V2X networks presents significant challenges. While AI technologies offer promising solutions, the energy-intensive nature of neural networks (NNs) imposes substantial burdens on communication infrastructures. This work proposes an energy-efficient and intelligent ISAC system for V2X networks. Specifically, we first leverage a Markov Decision Process framework to model the dynamic and uncertain nature of V2X networks. This framework allows the roadside unit (RSU) to develop beamforming schemes relying solely on its current sensing state information, eliminating the need for numerous pilot signals and extensive channel state information acquisition. To endow the system with intelligence and enhance its performance, we then introduce an advanced deep reinforcement learning (DRL) algorithm based on the Actor-Critic framework with a policy-clipping technique, enabling the joint optimization of beamforming and power allocation strategies to guarantee both communication rate and sensing accuracy. Furthermore, to alleviate the energy demands of NNs, we integrate Spiking Neural Networks (SNNs) into the DRL algorithm. By leveraging discrete spikes and their temporal characteristics for information transmission, SNNs not only significantly reduce the energy consumption of deploying AI models in ISAC-assisted V2X networks but also further enhance algorithm performance. Extensive simulation results validate the effectiveness of the proposed scheme with lower energy consumption, superior communication performance, and improved sensing accuracy.
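
The abstract does not spell out its policy-clipping technique. One widely used form is the clipped surrogate objective from proximal policy optimization (PPO), sketched below for a single sample; treating the paper's technique as PPO-style clipping is an assumption, as is the default `eps=0.2`.

```python
def clipped_surrogate(ratio, advantage, eps=0.2):
    """PPO-style clipped objective for one sample.

    ratio: new-policy probability of the action divided by the old-policy probability.
    advantage: estimated advantage of the action (typically supplied by the critic).
    eps: clipping range; the ratio is limited to [1 - eps, 1 + eps].
    """
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    # Taking the minimum makes the objective a pessimistic bound on the unclipped value.
    return min(ratio * advantage, clipped_ratio * advantage)


# A large ratio with positive advantage earns no extra credit beyond the clip.
capped_gain = clipped_surrogate(ratio=1.5, advantage=1.0)
# A small ratio with negative advantage is likewise held at the clip boundary.
capped_loss = clipped_surrogate(ratio=0.5, advantage=-1.0)
```

The clip removes the incentive to move the policy far from the one that collected the data in a single update, which tends to stabilize actor-critic training in fast-changing environments such as the V2X setting described here.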

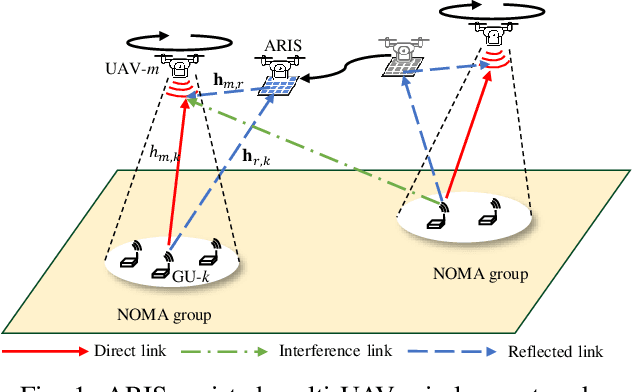

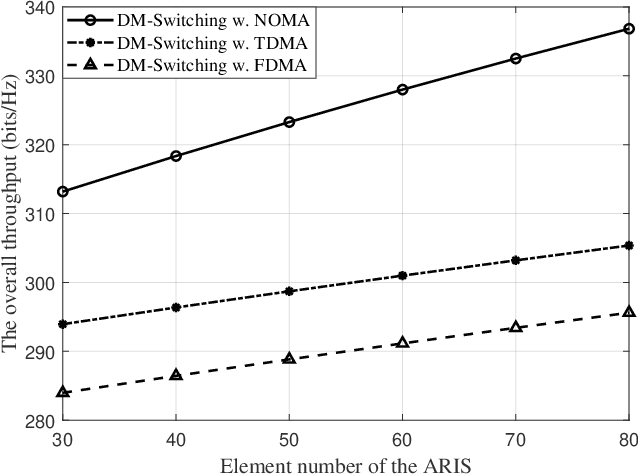

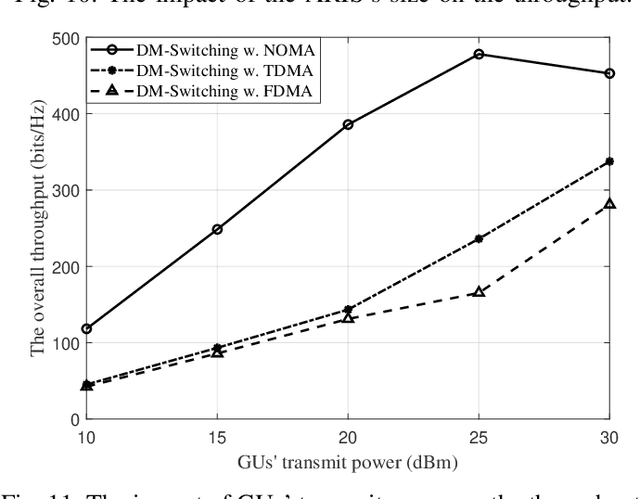

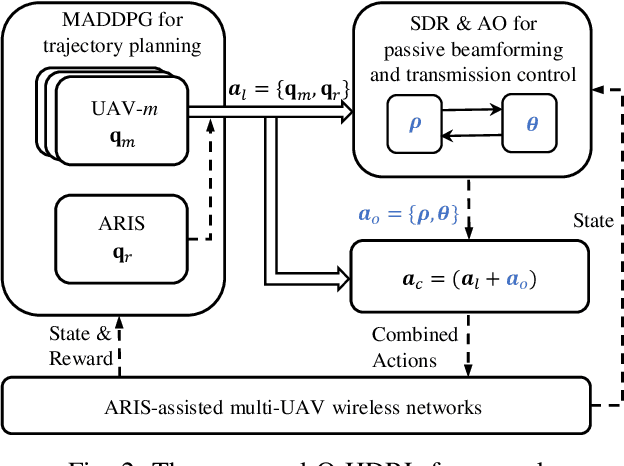

Exploiting NOMA Transmissions in Multi-UAV-assisted Wireless Networks: From Aerial-RIS to Mode-switching UAVs

Dec 29, 2024

Abstract:In this paper, we consider an aerial reconfigurable intelligent surface (ARIS)-assisted wireless network, where multiple unmanned aerial vehicles (UAVs) collect data from ground users (GUs) by using the non-orthogonal multiple access (NOMA) method. The ARIS provides enhanced channel controllability to improve the NOMA transmissions and reduce the co-channel interference among UAVs. We also propose a novel dual-mode switching scheme, where each UAV equipped with both an ARIS and a radio frequency (RF) transceiver can adaptively perform passive reflection or active transmission. We aim to maximize the overall network throughput by jointly optimizing the UAVs' trajectory planning and operating modes, the ARIS's passive beamforming, and the GUs' transmission control strategies. We propose an optimization-driven hierarchical deep reinforcement learning (O-HDRL) method to decompose it into a series of subproblems. Specifically, the multi-agent deep deterministic policy gradient (MADDPG) adjusts the UAVs' trajectory planning and mode switching strategies, while the passive beamforming and transmission control strategies are tackled by the optimization methods. Numerical results reveal that the O-HDRL efficiently improves the learning stability and reward performance compared to the benchmark methods. Meanwhile, the dual-mode switching scheme is verified to achieve a higher throughput performance compared to the fixed ARIS scheme.
