Jiadong Yu

Energy-Efficient Learning-Based Beamforming for ISAC-Enabled V2X Networks

Aug 27, 2025

Abstract:This work proposes an energy-efficient, learning-based beamforming scheme for integrated sensing and communication (ISAC)-enabled V2X networks. Specifically, we first model the dynamic and uncertain nature of V2X environments as a Markov Decision Process. This formulation allows the roadside unit to generate beamforming decisions based solely on current sensing information, thereby eliminating the need for frequent pilot transmissions and extensive channel state information acquisition. We then develop a deep reinforcement learning (DRL) algorithm to jointly optimize beamforming and power allocation, ensuring both communication throughput and sensing accuracy in highly dynamic scenarios. To address the high energy demands of conventional learning-based schemes, we embed spiking neural networks (SNNs) into the DRL framework. Leveraging their event-driven and sparsely activated architecture, SNNs significantly enhance energy efficiency while maintaining robust performance. Simulation results confirm that the proposed method achieves substantial energy savings and superior communication performance, demonstrating its potential to support green and sustainable connectivity in future V2X systems.

On Path to Multimodal Historical Reasoning: HistBench and HistAgent

May 26, 2025

Abstract:Recent advances in large language models (LLMs) have led to remarkable progress across domains, yet their capabilities in the humanities, particularly history, remain underexplored. Historical reasoning poses unique challenges for AI, involving multimodal source interpretation, temporal inference, and cross-linguistic analysis. While general-purpose agents perform well on many existing benchmarks, they lack the domain-specific expertise required to engage with historical materials and questions. To address this gap, we introduce HistBench, a new benchmark of 414 high-quality questions designed to evaluate AI's capacity for historical reasoning and authored by more than 40 expert contributors. The tasks span a wide range of historical problems, from factual retrieval based on primary sources to interpretive analysis of manuscripts and images, to interdisciplinary challenges involving archaeology, linguistics, or cultural history. Furthermore, the benchmark dataset spans 29 ancient and modern languages and covers a wide range of historical periods and world regions. Given the poor performance of LLMs and other agents on HistBench, we further present HistAgent, a history-specific agent equipped with carefully designed tools for OCR, translation, archival search, and image understanding in history. On HistBench, HistAgent based on GPT-4o achieves an accuracy of 27.54% pass@1 and 36.47% pass@2, significantly outperforming LLMs with online search and generalist agents, including GPT-4o (18.60%), DeepSeek-R1 (14.49%), and Open Deep Research-smolagents (20.29% pass@1 and 25.12% pass@2). These results highlight the limitations of existing LLMs and generalist agents and demonstrate the advantages of HistAgent for historical reasoning.

Improve the Training Efficiency of DRL for Wireless Communication Resource Allocation: The Role of Generative Diffusion Models

Feb 11, 2025

Abstract:Dynamic resource allocation in mobile wireless networks involves complex, time-varying optimization problems, motivating the adoption of deep reinforcement learning (DRL). However, most existing works rely on pre-trained policies, overlooking dynamic environmental changes that rapidly invalidate the policies. Periodic retraining becomes inevitable but incurs prohibitive computational costs and energy consumption, both critical concerns for resource-constrained wireless systems. We identify three root causes of inefficient retraining: high-dimensional state spaces, suboptimal exploration-exploitation trade-offs in the action space, and reward design limitations. To overcome these limitations, we propose Diffusion-based Deep Reinforcement Learning (D2RL), which leverages generative diffusion models (GDMs) to holistically enhance all three DRL components. The iterative refinement process and distribution modelling of GDMs enable (1) the generation of diverse state samples to improve environmental understanding, (2) balanced action space exploration to escape local optima, and (3) the design of discriminative reward functions that better evaluate action quality. Our framework operates in two modes: Mode I leverages GDMs to explore reward spaces and design discriminative reward functions that rigorously evaluate action quality, while Mode II synthesizes diverse state samples to enhance environmental understanding and generalization. Extensive experiments demonstrate that D2RL achieves faster convergence and reduced computational costs over conventional DRL methods for resource allocation in wireless communications while maintaining competitive policy performance. This work underscores the transformative potential of GDMs in overcoming fundamental DRL training bottlenecks for wireless networks, paving the way for practical, real-time deployments.

Energy-Efficient and Intelligent ISAC in V2X Networks with Spiking Neural Networks-Driven DRL

Jan 02, 2025

Abstract:Integrated sensing and communication (ISAC) has emerged as a pivotal technology for enabling vehicle-to-everything (V2X) connectivity, mobility, and security. However, designing efficient beamforming schemes to achieve accurate sensing and enhance communication performance in the dynamic and uncertain environments of V2X networks presents significant challenges. While AI technologies offer promising solutions, the energy-intensive nature of neural networks (NNs) imposes substantial burdens on communication infrastructures. This work proposes an energy-efficient and intelligent ISAC system for V2X networks. Specifically, we first leverage a Markov Decision Process framework to model the dynamic and uncertain nature of V2X networks. This framework allows the roadside unit (RSU) to develop beamforming schemes relying solely on its current sensing state information, eliminating the need for numerous pilot signals and extensive channel state information acquisition. To endow the system with intelligence and enhance its performance, we then introduce an advanced deep reinforcement learning (DRL) algorithm based on the Actor-Critic framework with a policy-clipping technique, enabling the joint optimization of beamforming and power allocation strategies to guarantee both communication rate and sensing accuracy. Furthermore, to alleviate the energy demands of NNs, we integrate Spiking Neural Networks (SNNs) into the DRL algorithm. By leveraging discrete spikes and their temporal characteristics for information transmission, SNNs not only significantly reduce the energy consumption of deploying AI models in ISAC-assisted V2X networks but also further enhance algorithm performance. Extensive simulation results validate the effectiveness of the proposed scheme with lower energy consumption, superior communication performance, and improved sensing accuracy.
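The abstract does not name the exact policy-clipping rule. As a rough illustration only, the sketch below assumes it resembles the clipped surrogate objective used in PPO-style Actor-Critic methods; the function name, the `eps` default, and the plain-list inputs are hypothetical and not taken from the paper.

```python
import math

def clipped_surrogate(logp_new, logp_old, advantages, eps=0.2):
    """Mean PPO-style clipped surrogate objective (a quantity to maximise).

    Assumption: this mirrors the generic PPO clipping rule, not necessarily
    the exact objective used in the paper.
    """
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        # Probability ratio between the updated policy and the old policy.
        ratio = math.exp(ln - lo)
        # Keep the ratio within [1 - eps, 1 + eps].
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        # Pessimistic choice: take the smaller of the two surrogate terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

When the new and old policies agree (ratio of 1), the objective reduces to the mean advantage; clipping only takes effect once the policy moves far enough from the old one, which is what limits the size of each update.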

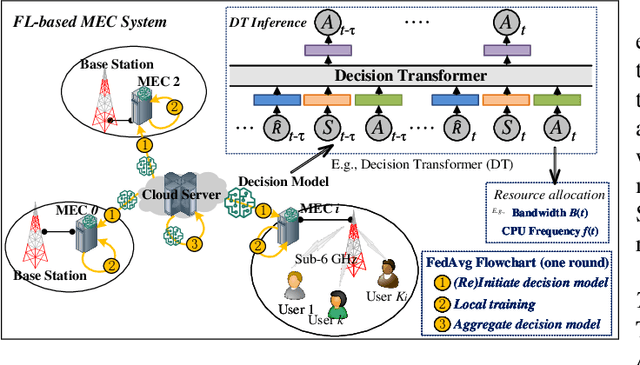

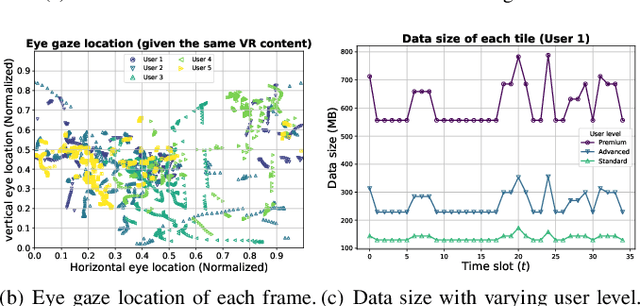

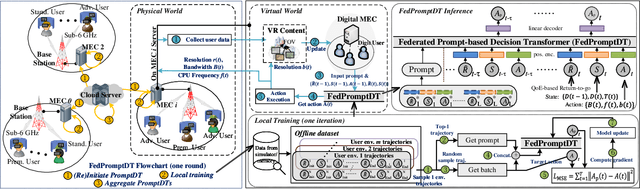

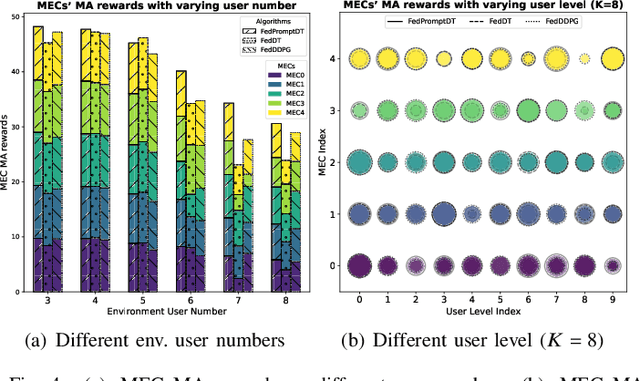

Federated Prompt-based Decision Transformer for Customized VR Services in Mobile Edge Computing System

Feb 15, 2024

Abstract:This paper investigates resource allocation to provide heterogeneous users with customized virtual reality (VR) services in a mobile edge computing (MEC) system. We first introduce a quality of experience (QoE) metric to measure user experience, which considers the MEC system's latency, user attention levels, and preferred resolutions. Then, a QoE maximization problem is formulated for resource allocation to ensure the highest possible user experience, which is cast as a reinforcement learning problem, aiming to learn a generalized policy applicable across diverse user environments for all MEC servers. To learn the generalized policy, we propose a framework that employs federated learning (FL) and prompt-based sequence modeling to pre-train a common decision model across MEC servers, which is named FedPromptDT. Using FL solves the problem of insufficient local MEC data while protecting user privacy during offline training. The design of prompts integrating user-environment cues and user-preferred allocation improves the model's adaptability to various user environments during online execution.

Bi-directional Digital Twin and Edge Computing in the Metaverse

Nov 16, 2022

Abstract:The Metaverse has emerged to extend our lifestyle beyond physical limitations. As essential components in the Metaverse, digital twins (DTs) are the digital replicas of physical items. End users access the Metaverse using a variety of devices (e.g., head-mounted devices (HMDs)), mostly lightweight. Multi-access edge computing (MEC) and edge networks provide responsive services to the end users, leading to an immersive Metaverse experience. Although physical objects, end users, and edge computing systems are expected to be represented as DTs in the Metaverse, the construction of these DTs and the interplay between them have not been investigated. In this paper, we discuss the bidirectional reliance between the DT and the MEC system and investigate the creation of DTs of objects and users on the MEC servers and DT-assisted edge computing (DTEC). We also study the interplay between the DTs and DTECs to allocate the resources fairly and adequately and provide an immersive experience in the Metaverse. Owing to the dynamic network states (e.g., channel states) and mobility of the users, we discuss the interplay between local DTECs (on local MEC servers) and the global DTEC (on the cloud server) to cope with the handover among MEC servers and avoid intermittent Metaverse services.

IRS Assisted NOMA Aided Mobile Edge Computing with Queue Stability: Heterogeneous Multi-Agent Reinforcement Learning

Sep 21, 2022

Abstract:By employing powerful edge servers for data processing, mobile edge computing (MEC) has been recognized as a promising technology to support emerging computation-intensive applications. In addition, a non-orthogonal multiple access (NOMA)-aided MEC system can further enhance spectral efficiency with massive task offloading. However, with more dynamic devices brought online and an uncontrollable stochastic channel environment, it is desirable to deploy an appealing technique, namely intelligent reflecting surfaces (IRS), in the MEC system to flexibly tune the communication environment and improve the system energy efficiency. In this paper, we investigate the joint offloading, communication, and computation resource allocation for an IRS-assisted NOMA MEC system. We first formulate a mixed-integer energy efficiency maximization problem with a system queue stability constraint. We then propose the Lyapunov-function-based Mixed Integer Deep Deterministic Policy Gradient (LMIDDPG) algorithm, which is based on the centralized reinforcement learning (RL) framework. Specifically, we design a mixed-integer action space mapping that contains both continuous mapping and integer mapping. Moreover, the reward function is defined as the upper bound of the Lyapunov drift-plus-penalty function. To enable end devices (EDs) to choose actions independently at the execution stage, we further propose the Heterogeneous Multi-agent LMIDDPG (HMA-LMIDDPG) algorithm based on a distributed RL framework, with homogeneous EDs and a heterogeneous base station (BS) acting as heterogeneous agents. Numerical results show that our proposed algorithms can achieve superior energy efficiency performance to the benchmark algorithms while maintaining queue stability. In particular, the distributed HMA-LMIDDPG structure achieves a larger energy efficiency gain than the centralized LMIDDPG structure.
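The abstract does not give the bound itself. For orientation, the textbook form of a Lyapunov drift-plus-penalty upper bound is sketched below. All symbols are assumptions introduced here, not the paper's notation: $Q_i(t)$ is the queue backlog of device $i$, $A_i(t)$ its arrivals, $b_i(t)$ its service, $\eta(t)$ the energy efficiency, $V \ge 0$ a trade-off weight, and $B$ a finite constant.

```latex
% Assumed queue dynamics and quadratic Lyapunov function
Q_i(t+1) = \max\{Q_i(t) - b_i(t),\, 0\} + A_i(t), \qquad
L(\boldsymbol{\Theta}(t)) = \tfrac{1}{2} \sum_i Q_i(t)^2

% One-slot conditional drift
\Delta(\boldsymbol{\Theta}(t)) =
  \mathbb{E}\big[ L(\boldsymbol{\Theta}(t+1)) - L(\boldsymbol{\Theta}(t)) \,\big|\, \boldsymbol{\Theta}(t) \big]

% Drift-plus-penalty upper bound (penalty = negative energy efficiency)
\Delta(\boldsymbol{\Theta}(t)) - V\, \mathbb{E}\big[ \eta(t) \,\big|\, \boldsymbol{\Theta}(t) \big]
  \le B + \sum_i Q_i(t)\, \mathbb{E}\big[ A_i(t) - b_i(t) \,\big|\, \boldsymbol{\Theta}(t) \big]
      - V\, \mathbb{E}\big[ \eta(t) \,\big|\, \boldsymbol{\Theta}(t) \big],
\qquad
B \ge \tfrac{1}{2} \sum_i \mathbb{E}\big[ A_i(t)^2 + b_i(t)^2 \,\big|\, \boldsymbol{\Theta}(t) \big]
```

Minimizing the right-hand side in each slot trades queue stability against energy efficiency through $V$; how the paper maps this bound to the RL reward (for example, its sign and scaling) is not stated in the abstract.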

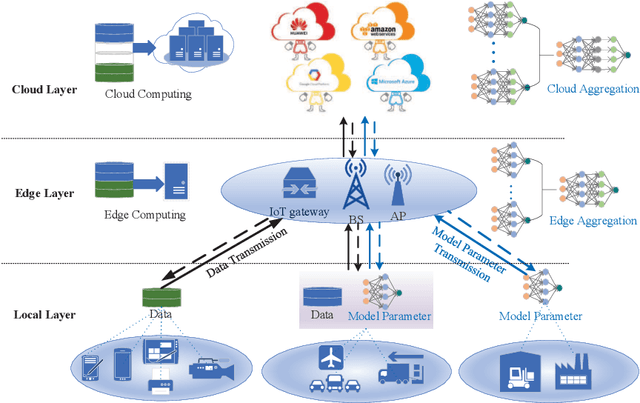

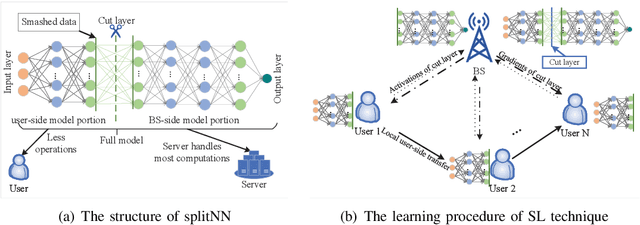

Distributed Intelligence in Wireless Networks

Aug 01, 2022

Abstract:Cloud-based solutions are becoming inefficient due to considerably large time delays, high power consumption, and security and privacy concerns caused by billions of connected wireless devices and the zillions of bytes of data they typically produce at the network edge. A blend of edge computing and Artificial Intelligence (AI) techniques could optimally shift the resourceful computation servers closer to the network edge, which provides the support for advanced AI applications (e.g., video/audio surveillance and personal recommendation systems) by enabling intelligent decision making on computing at the point of data generation as and when it is needed, and distributed Machine Learning (ML) with its potential to avoid the transmission of large datasets and possible compromise of privacy that may exist in cloud-based centralized learning. Therefore, AI is envisioned to become native and ubiquitous in future communication and networking systems. In this paper, we conduct a comprehensive overview of recent advances in distributed intelligence in wireless networks under the umbrella of native-AI wireless networks, with a focus on the basic concepts of native-AI wireless networks, on AI-enabled edge computing, on the design of distributed learning architectures for heterogeneous networks, on communication-efficient technologies to support distributed learning, and on AI-empowered end-to-end communications. We highlight the advantages of hybrid distributed learning architectures compared to state-of-the-art distributed learning techniques. We summarize the challenges of existing research contributions in distributed intelligence in wireless networks and identify potential future opportunities.

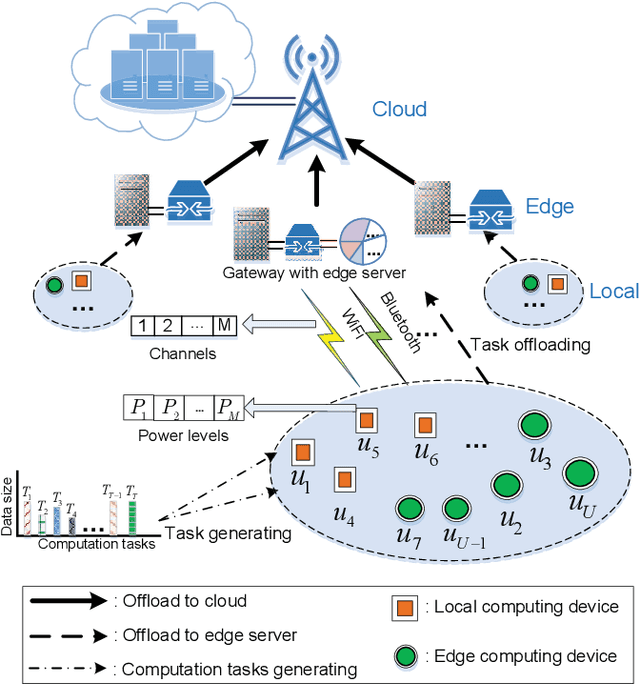

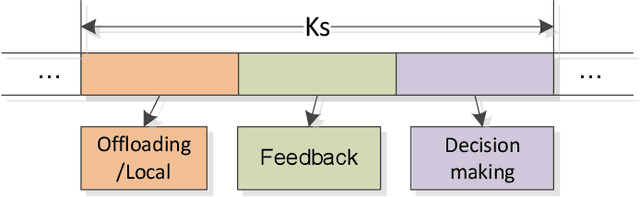

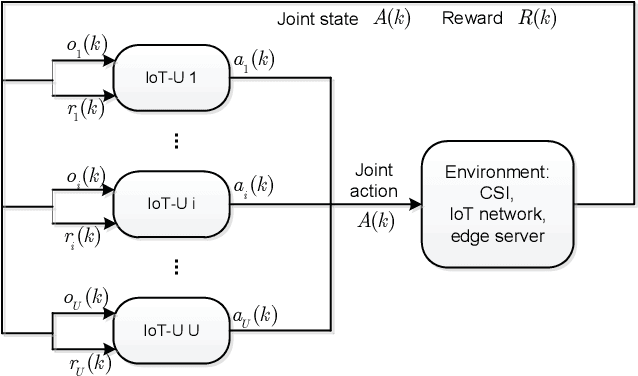

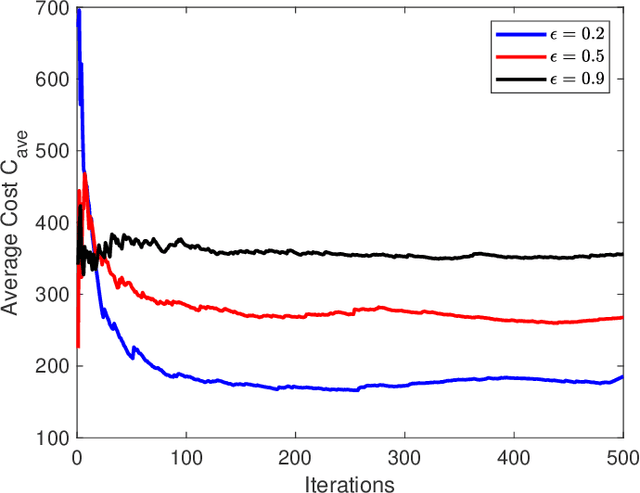

Multi-agent Reinforcement Learning for Resource Allocation in IoT networks with Edge Computing

Apr 05, 2020

Abstract:To support popular Internet of Things (IoT) applications such as virtual reality, mobile games and wearable devices, edge computing provides a front-end distributed computing archetype of centralized cloud computing with low latency. However, it is challenging for end users to offload computation due to their massive requirements for spectrum and computation resources and frequent requests for Radio Access Technology (RAT). In this paper, we investigate a computation offloading mechanism with resource allocation in IoT edge computing networks by formulating it as a stochastic game. Here, each end user is a learning agent observing its local environment to learn optimal decisions on either local computing or edge computing, with the goal of minimizing long-term system cost by choosing its transmit power level, RAT and sub-channel without knowing any information about the other end users. Therefore, a multi-agent reinforcement learning framework is developed to solve the stochastic game with a proposed independent-learners-based multi-agent Q-learning (IL-based MA-Q) algorithm. Simulations demonstrate that the proposed IL-based MA-Q algorithm can feasibly solve the formulated problem and is more energy efficient, without extra cost for channel estimation at the centralized gateway, compared to the other two benchmark algorithms.
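The independent-learners idea can be sketched as follows: each end user keeps its own Q-table and updates it from its local observation and its own reward only, with no knowledge of the other users. This is a minimal tabular sketch under assumptions, not the paper's IL-based MA-Q algorithm. The class name, hyperparameters, and state encoding are hypothetical. An action index is assumed to encode one (power level, RAT, sub-channel) choice, and the reward is assumed to be the negative of the user's cost.

```python
import random
from collections import defaultdict

class IndependentQLearner:
    """One end user's tabular Q-learner; agents never share tables."""

    def __init__(self, n_actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=None):
        self.n_actions = n_actions
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.rng = random.Random(seed)
        # Q-values default to zero for states not yet visited.
        self.q = defaultdict(lambda: [0.0] * n_actions)

    def act(self, state):
        # Epsilon-greedy selection over this agent's own Q-values.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = self.q[state]
        return values.index(max(values))

    def update(self, state, action, reward, next_state):
        # Standard Q-learning update using only local information.
        target = reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])
```

In a multi-user simulation, one such learner would be instantiated per end user, for example `agents = [IndependentQLearner(n_actions=12) for _ in range(num_users)]`, where `num_users` and the action count are placeholders.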
