Eryk Dutkiewicz

Secure Communications for All Users in Low-Resolution IRS-aided Systems Under Imperfect and Unknown CSI

Apr 07, 2025

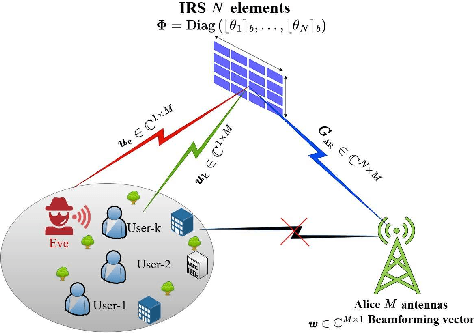

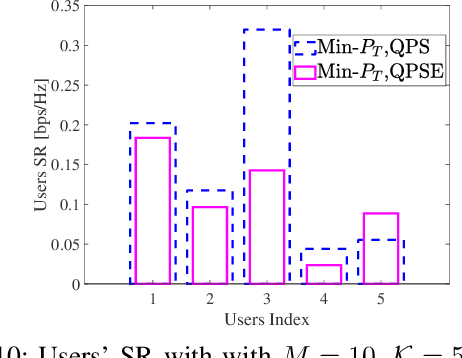

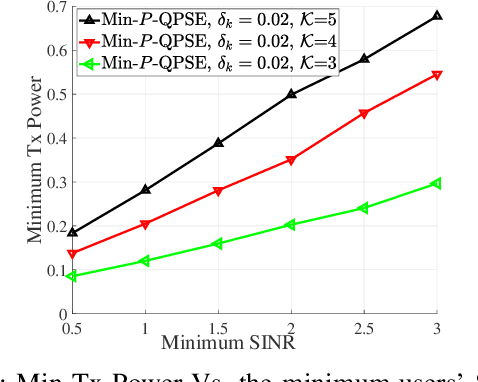

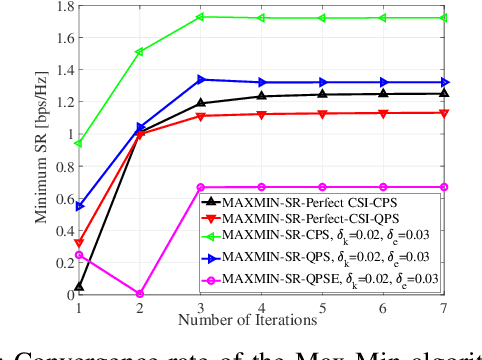

Abstract: Provisioning secrecy for all users, given the heterogeneity and uncertainty of their channel conditions, locations, and the unknown location of the attacker/eavesdropper, is challenging and not always feasible. This work takes the first step to guarantee secrecy for all users where a low-resolution intelligent reflecting surface (IRS) is used to enhance legitimate users' reception and thwart the potential eavesdropper (Eve) from intercepting. In real-life scenarios, due to hardware limitations of the IRS's passive reflective elements (PREs), the use of a full-resolution (continuous) phase shift (CPS) is impractical. In this paper, we thus consider a more practical case where the phase shift (PS) is modeled by a low-resolution (quantized) phase shift (QPS) while addressing the phase shift error (PSE) induced by the imperfect channel state information (CSI). To that end, we aim to maximize the minimum secrecy rate (SR) among all users by jointly optimizing the transmitter's beamforming vector and the IRS's PREs under perfect/imperfect/unknown CSI. The resulting optimization problem is non-convex and even more complicated under imperfect/unknown CSI.
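As a rough illustration of two ingredients named in the abstract above, the sketch below quantizes continuous phase shifts to a small set of uniform levels and evaluates a worst-case secrecy rate. It is a minimal sketch under stated assumptions, not the paper's formulation: the function names `quantize_phase` and `min_secrecy_rate` are hypothetical, the quantizer is a plain nearest-level uniform quantizer, and the secrecy rate uses the textbook definition (legitimate rate minus eavesdropper rate, floored at zero).

```python
import numpy as np

def quantize_phase(theta, bits):
    """Map continuous phases (radians) to the nearest of 2**bits uniform levels.

    Assumption: uniform levels k * 2*pi / 2**bits; the paper may use another codebook.
    """
    levels = 2 ** bits
    step = 2 * np.pi / levels
    # Wrap into [0, 2*pi), round to the nearest level index, and wrap the index
    # so that a phase just below 2*pi maps back to level 0.
    idx = np.round(np.mod(theta, 2 * np.pi) / step).astype(int) % levels
    return idx * step

def min_secrecy_rate(snr_users, snr_eve):
    """Worst-case secrecy rate in bits/s/Hz over all users.

    Per user k: max(log2(1 + snr_users[k]) - log2(1 + snr_eve[k]), 0).
    """
    rates = np.log2(1 + np.asarray(snr_users)) - np.log2(1 + np.asarray(snr_eve))
    return float(np.min(np.maximum(rates, 0.0)))
```

With `bits=1` each element can only apply a shift of 0 or π, which is the coarsest case of the low-resolution setting; the max-min objective then corresponds to choosing the beamformer and quantized phases that make `min_secrecy_rate` as large as possible.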

"Security for Everyone" in Finite Blocklength IRS-aided Systems With Perfect and Imperfect CSI

Apr 07, 2025

Abstract: Provisioning secrecy for all users, given the heterogeneity in their channel conditions, locations, and the unknown location of the attacker/eavesdropper, is challenging and not always feasible. The problem is even more difficult under finite blocklength constraints that are popular in ultra-reliable low-latency communication (URLLC) and massive machine-type communications (mMTC). This work takes the first step to guarantee secrecy for all URLLC/mMTC users in the finite blocklength regime (FBR) where intelligent reflecting surfaces (IRS) are used to enhance legitimate users' reception and thwart the potential eavesdropper (Eve) from intercepting. To that end, we aim to maximize the minimum secrecy rate (SR) among all users by jointly optimizing the transmitter's beamforming and IRS's passive reflective elements (PREs) under the FBR latency constraints. The resulting optimization problem is non-convex and even more complicated under imperfect channel state information (CSI). To tackle it, we linearize the objective function and decompose the problem into sequential subproblems. When perfect CSI is not available, we use the successive convex approximation (SCA) approach to transform imperfect CSI-related semi-infinite constraints into finite linear matrix inequalities (LMI).

Multiple-Input Variational Auto-Encoder for Anomaly Detection in Heterogeneous Data

Jan 14, 2025

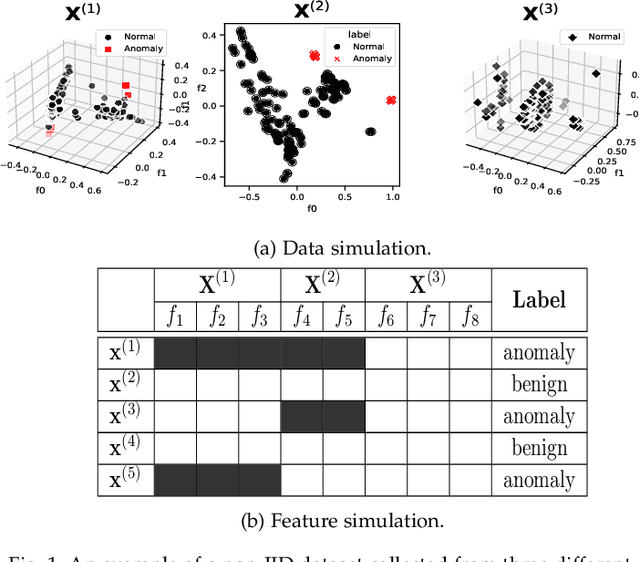

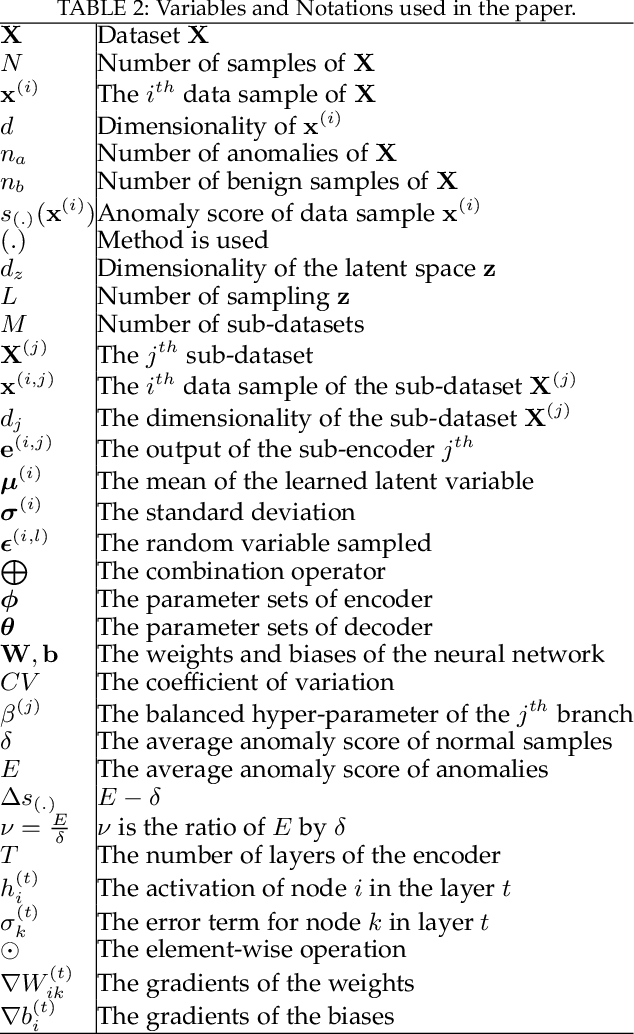

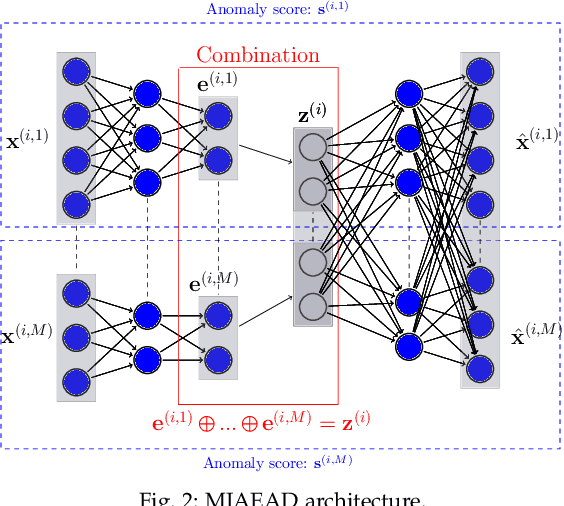

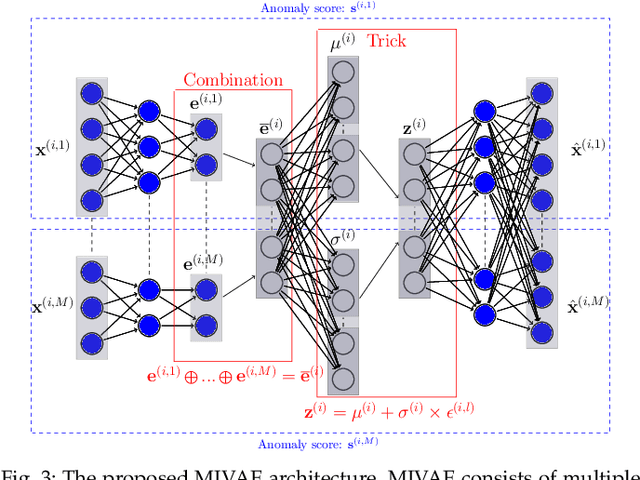

Abstract: Anomaly detection (AD) plays a pivotal role in AI applications, e.g., in classification, and intrusion/threat detection in cybersecurity. However, most existing methods face challenges of heterogeneity amongst feature subsets posed by non-independent and identically distributed (non-IID) data. We propose a novel neural network model called Multiple-Input Auto-Encoder for AD (MIAEAD) to address this. MIAEAD assigns an anomaly score to each feature subset of a data sample to indicate its likelihood of being an anomaly. This is done by using the reconstruction error of its sub-encoder as the anomaly score. All sub-encoders are then simultaneously trained using unsupervised learning to determine the anomaly scores of feature subsets. The final AUC of MIAEAD is calculated for each sub-dataset, and the maximum AUC obtained among the sub-datasets is selected. To leverage the ability of generative models to model the distribution of normal data for identifying anomalies, we develop a novel neural network architecture/model called Multiple-Input Variational Auto-Encoder (MIVAE). MIVAE can process feature subsets through its sub-encoders before learning the distribution of normal data in the latent space. This allows MIVAE to identify anomalies that deviate from the learned distribution. We theoretically prove that the difference in the average anomaly score between normal samples and anomalies obtained by the proposed MIVAE is greater than that of the Variational Auto-Encoder (VAEAD), resulting in a higher AUC for MIVAE. Extensive experiments on eight real-world anomaly datasets demonstrate the superior performance of MIAEAD and MIVAE over conventional methods and the state-of-the-art unsupervised models, by up to 6% in terms of AUC score. In addition, MIAEAD and MIVAE have a high AUC when applied to feature subsets with low heterogeneity based on the coefficient of variation (CV) score.
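The scoring idea in the abstract above (reconstruction error per feature subset as the anomaly score, one AUC per sub-dataset, then the maximum) can be sketched as follows. This is only an illustration under stated assumptions: a PCA projection stands in for a trained sub-encoder/decoder pair, and all function names (`fit_linear_autoencoder`, `anomaly_scores`, `auc`, `best_subset_auc`) are hypothetical rather than taken from MIAEAD.

```python
import numpy as np

def fit_linear_autoencoder(x_train, k):
    """PCA stand-in for one trained sub-encoder/decoder (an assumption, not MIAEAD)."""
    mean = x_train.mean(axis=0)
    _, _, vt = np.linalg.svd(x_train - mean, full_matrices=False)
    components = vt[:k]                    # (k, d): top-k principal directions

    def reconstruct(x):
        z = (x - mean) @ components.T      # encode to k dimensions
        return z @ components + mean       # decode back to d dimensions

    return reconstruct

def anomaly_scores(x, reconstruct):
    """Per-sample mean squared reconstruction error, used as the anomaly score."""
    return np.mean((x - reconstruct(x)) ** 2, axis=1)

def auc(scores, labels):
    """AUC: probability that a random anomaly outscores a random normal sample.

    labels: 1 for anomaly, 0 for normal. Ties count as 0.5.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (len(pos) * len(neg)))

def best_subset_auc(scores_per_subset, labels):
    """Compute one AUC per feature subset and keep the maximum."""
    return max(auc(s, labels) for s in scores_per_subset)
```

In use, one reconstructor would be fitted per feature subset on unlabeled training data, `anomaly_scores` evaluated on the matching columns of the test data, and the resulting list of score vectors passed to `best_subset_auc`.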

Twin Auto-Encoder Model for Learning Separable Representation in Cyberattack Detection

Mar 22, 2024

Abstract: Representation Learning (RL) plays a pivotal role in the success of many problems including cyberattack detection. Most of the RL methods for cyberattack detection are based on the latent vector of Auto-Encoder (AE) models. An AE transforms raw data into a new latent representation that better exposes the underlying characteristics of the input data. Thus, it is very useful for identifying cyberattacks. However, due to the heterogeneity and sophistication of cyberattacks, the representation of AEs is often entangled/mixed, which makes downstream attack detection difficult. To tackle this problem, we propose a novel model called Twin Auto-Encoder (TAE). TAE deterministically transforms the latent representation into a more distinguishable representation, namely the separable representation, and then reconstructs the separable representation at the output. The output of TAE, called the reconstruction representation, is input to downstream models to detect cyberattacks. We extensively evaluate the effectiveness of TAE using a wide range of benchmarking datasets. Experiment results show the superior accuracy of TAE over state-of-the-art RL models and well-known machine learning algorithms. Moreover, TAE also outperforms state-of-the-art models on some sophisticated and challenging attacks. We then investigate various characteristics of TAE to further demonstrate its superiority.

Multiple-Input Auto-Encoder Guided Feature Selection for IoT Intrusion Detection Systems

Mar 22, 2024

Abstract: While intrusion detection systems (IDSs) benefit from the diversity and generalization of IoT data features, the data diversity (e.g., the heterogeneity and high dimensions of data) also makes it difficult to train effective machine learning models in IoT IDSs. This also leads to potentially redundant/noisy features that may decrease the accuracy of the detection engine in IDSs. This paper first introduces a novel neural network architecture called Multiple-Input Auto-Encoder (MIAE). MIAE consists of multiple sub-encoders that can process inputs from different sources with different characteristics. The MIAE model is trained in an unsupervised learning mode to transform the heterogeneous inputs into a lower-dimensional representation, which helps classifiers distinguish between normal behaviour and different types of attacks. To distil and retain more relevant features but remove less important/redundant ones during the training process, we further design and embed a feature selection layer right after the representation layer of MIAE, resulting in a new model called MIAEFS. This layer learns the importance of features in the representation vector, facilitating the selection of informative features from the representation vector. The results on three IDS datasets, i.e., NSLKDD, UNSW-NB15, and IDS2017, show the superior performance of MIAE and MIAEFS compared to other methods, e.g., conventional classifiers, dimensionality reduction models, unsupervised representation learning methods with different input dimensions, and unsupervised feature selection models. Moreover, MIAE and MIAEFS combined with the Random Forest (RF) classifier achieve an accuracy of 96.5% in detecting sophisticated attacks, e.g., Slowloris. The average running time for detecting an attack sample using RF with the representation of MIAE and MIAEFS is approximately 1.7E-6 seconds, whilst the model size is lower than 1 MB.

Securing MIMO Wiretap Channel with Learning-Based Friendly Jamming under Imperfect CSI

Dec 12, 2023

Abstract: Wireless communications are particularly vulnerable to eavesdropping attacks due to their broadcast nature. To effectively deal with eavesdroppers, existing security techniques usually require accurate channel state information (CSI), e.g., for friendly jamming (FJ), and/or additional computing resources at transceivers, e.g., cryptography-based solutions, which unfortunately may not be feasible in practice. This challenge is even more acute in low-end IoT devices. We thus introduce a novel deep learning-based FJ framework that can effectively defeat eavesdropping attacks with imperfect CSI and even without CSI of legitimate channels. In particular, we first develop an autoencoder-based communication architecture with FJ, namely AEFJ, to jointly maximize the secrecy rate and minimize the block error rate at the receiver without requiring perfect CSI of the legitimate channels. In addition, to deal with the case without CSI, we leverage the mutual information neural estimation (MINE) concept and design a MINE-based FJ scheme that can achieve comparable security performance to the conventional FJ methods that require perfect CSI. Extensive simulations in a multiple-input multiple-output (MIMO) system demonstrate that our proposed solution can effectively deal with eavesdropping attacks in various settings. Moreover, the proposed framework can seamlessly integrate MIMO security and detection tasks into a unified end-to-end learning process. This integrated approach can significantly maximize the throughput and minimize the block error rate, offering a good solution for enhancing communication security in wireless communication systems.

Constrained Twin Variational Auto-Encoder for Intrusion Detection in IoT Systems

Dec 05, 2023

Abstract: Intrusion detection systems (IDSs) play a critical role in protecting billions of IoT devices from malicious attacks. However, the IDSs for IoT devices face inherent challenges of IoT systems, including the heterogeneity of IoT data/devices, the high dimensionality of training data, and imbalanced data. Moreover, the deployment of IDSs on IoT systems is challenging, and sometimes impossible, due to the limited resources such as memory/storage and computing capability of typical IoT devices. To tackle these challenges, this article proposes a novel deep neural network/architecture called Constrained Twin Variational Auto-Encoder (CTVAE) that can feed classifiers of IDSs with more separable/distinguishable and lower-dimensional representation data. Additionally, in comparison to the state-of-the-art neural networks used in IDSs, CTVAE requires less memory/storage and computing power, hence making it more suitable for IoT IDS systems. Extensive experiments with the 11 most popular IoT botnet datasets show that CTVAE can improve accuracy and F-score in attack detection by around 1% compared to the state-of-the-art machine learning and representation learning methods, whilst the running time for attack detection is lower than 2E-6 seconds and the model size is lower than 1 MB. We also further investigate various characteristics of CTVAE in the latent space and in the reconstruction representation to demonstrate its efficacy compared with current well-known methods.

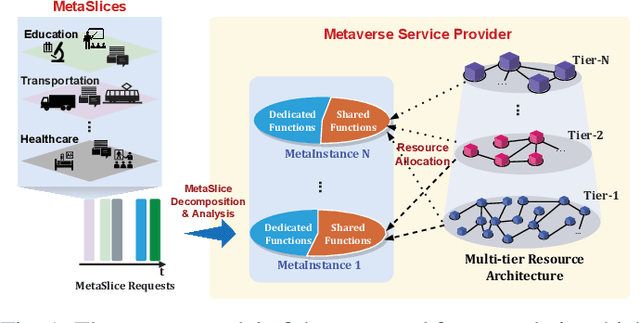

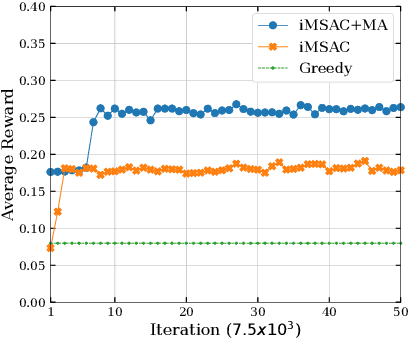

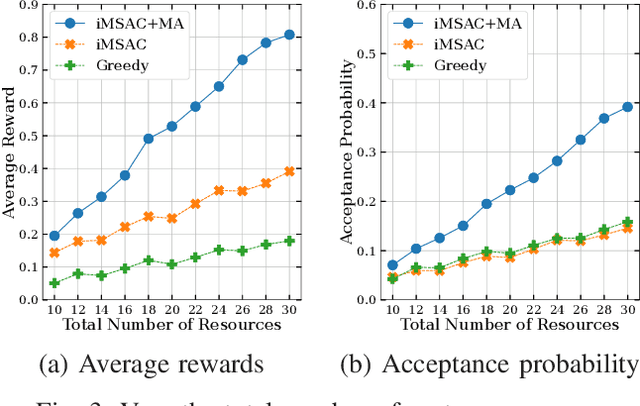

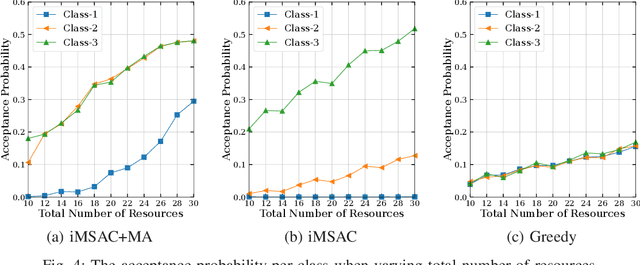

Dynamic Resource Allocation for Metaverse Applications with Deep Reinforcement Learning

Feb 27, 2023

Abstract: This work proposes a novel framework to dynamically and effectively manage and allocate different types of resources for Metaverse applications, which are forecasted to demand massive resources of various types that have never been seen before. Specifically, by studying functions of Metaverse applications, we first propose an effective solution to divide applications into groups, namely MetaInstances, where common functions can be shared among applications to enhance resource usage efficiency. Then, to capture the real-time, dynamic, and uncertain characteristics of request arrival and application departure processes, we develop a semi-Markov decision process-based framework and propose an intelligent algorithm that can gradually learn the optimal admission policy to maximize the revenue and resource usage efficiency for the Metaverse service provider and at the same time enhance the Quality-of-Service for Metaverse users. Extensive simulation results show that our proposed approach can achieve up to 120% greater revenue for the Metaverse service providers and up to 178.9% higher acceptance probability for Metaverse application requests than those of other baselines.

Toward BCI-enabled Metaverse: A Joint Radio and Computing Resource Allocation Approach

Dec 17, 2022

Abstract: Toward user-driven Metaverse applications with fast wireless connectivity and tremendous computing demand through future 6G infrastructures, we propose a Brain-Computer Interface (BCI) enabled framework that paves the way for the creation of intelligent human-like avatars. Our approach takes a first step toward the Metaverse systems in which the digital avatars are envisioned to be more intelligent by collecting and analyzing brain signals through cellular networks. In our proposed system, Metaverse users experience Metaverse applications while sending their brain signals via uplink wireless channels in order to create intelligent human-like avatars at the base station. As such, the digital avatars can not only give useful recommendations for the users but also enable the system to create user-driven applications. Our proposed framework involves a mixed decision-making and classification problem in which the base station has to allocate its computing and radio resources to the users and classify the brain signals of users in an efficient manner. To this end, we propose a hybrid training algorithm that utilizes recent advances in deep reinforcement learning to address the problem. Specifically, our hybrid training algorithm contains three deep neural networks cooperating with each other to enable better realization of the mixed decision-making and classification problem. Simulation results show that our proposed framework can jointly address resource allocation for the system and classify brain signals of the users with highly accurate predictions.

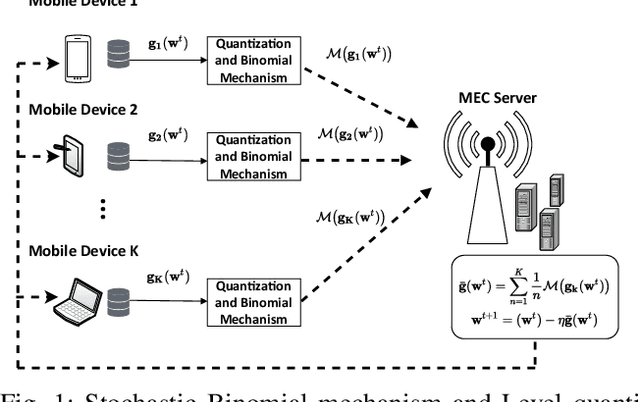

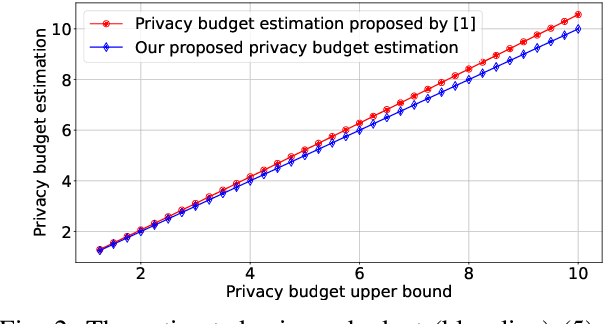

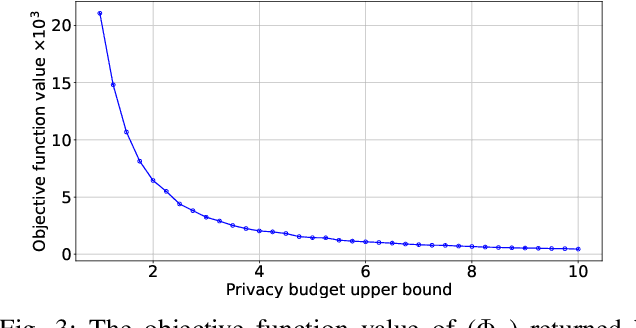

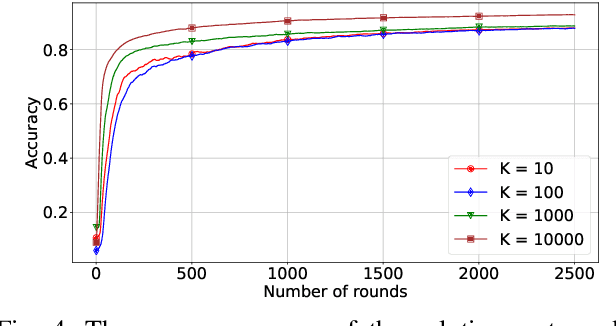

Optimal Privacy Preserving in Wireless Federated Learning System over Mobile Edge Computing

Nov 14, 2022

Abstract: Federated Learning (FL) with quantization and deliberately added noise over wireless networks is a promising approach to preserve users' differential privacy while reducing wireless resource usage. Specifically, an FL learning process can be fused with quantized Binomial mechanism-based updates contributed by multiple users to reduce the communication overhead/cost as well as to protect the privacy of participating users. However, the optimization of wireless transmission and quantization parameters (e.g., transmit power, bandwidth, and quantization bits) as well as the added noise while guaranteeing the privacy requirement and the performance of the learned FL model remains an open and challenging problem. In this paper, we aim to jointly optimize the level of quantization, parameters of the Binomial mechanism, and devices' transmit powers to minimize the training time under the constraints of the wireless networks. The resulting optimization turns out to be a Mixed Integer Non-linear Programming (MINLP) problem, which is known to be NP-hard. To tackle it, we transform this MINLP problem into a new problem whose solutions are proved to be the optimal solutions of the original one. We then propose an approximate algorithm that can solve the transformed problem with an arbitrary relative error guarantee. Intensive simulations show that for the same wireless resources the proposed approach achieves the highest accuracy, close to that of the standard FL with no quantization and no noise added. This suggests the faster convergence/training time of the proposed wireless FL framework while optimally preserving users' privacy.
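To make the "quantized Binomial mechanism-based updates" in the abstract above more concrete, the sketch below quantizes a model update with unbiased stochastic rounding and then adds zero-mean Binomial noise to the resulting integers. It is a generic illustration of the idea, not the paper's scheme: the function names, the clipping range `[lo, hi]`, and the parameters `levels`, `n_trials`, and `p` are all assumptions chosen for the example, and no privacy accounting is performed.

```python
import numpy as np

def quantize_stochastic(x, levels, lo, hi, rng):
    """Unbiased stochastic rounding of x (clipped to [lo, hi]) to indices 0..levels-1."""
    scaled = (np.clip(x, lo, hi) - lo) / (hi - lo) * (levels - 1)
    floor = np.floor(scaled)
    # Round up with probability equal to the fractional part, so E[idx] = scaled.
    idx = floor + (rng.random(x.shape) < (scaled - floor))
    return idx.astype(int)

def binomial_mechanism(idx, n_trials, p, rng):
    """Add zero-mean Binomial noise Z - n_trials * p, with Z ~ Binomial(n_trials, p)."""
    noise = rng.binomial(n_trials, p, size=idx.shape) - n_trials * p
    return idx + noise

rng = np.random.default_rng(0)                 # fixed seed for reproducibility
update = rng.normal(scale=0.1, size=8)         # hypothetical local model update
q = quantize_stochastic(update, levels=16, lo=-0.5, hi=0.5, rng=rng)
noisy = binomial_mechanism(q, n_trials=20, p=0.5, rng=rng)
```

Fewer quantization levels shrink the payload each device transmits, while larger `n_trials` adds more noise (variance `n_trials * p * (1 - p)`); the abstract's optimization problem is about trading these quantities off against transmit power and training time.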
