Bo Gu

Learning Joint Source-Channel Encoding in IRS-assisted Multi-User Semantic Communications

Apr 10, 2025

Abstract:In this paper, we investigate a joint source-channel encoding (JSCE) scheme in an intelligent reflecting surface (IRS)-assisted multi-user semantic communication system. Semantic encoding not only compresses redundant information, but also enhances information orthogonality in a semantic feature space. Meanwhile, the IRS can adjust the spatial orthogonality, enabling concurrent multi-user semantic communication in densely deployed wireless networks to improve spectrum efficiency. We aim to maximize the users' semantic throughput by jointly optimizing the users' scheduling, the IRS's passive beamforming, and the semantic encoding strategies. To tackle this non-convex problem, we propose an explainable deep neural network-driven deep reinforcement learning (XD-DRL) framework. Specifically, we employ a deep neural network (DNN) to serve as a joint source-channel semantic encoder, enabling transmitters to extract semantic features from raw images. By leveraging structural similarity, we assign some DNN weight coefficients as the IRS's phase shifts, allowing simultaneous optimization of the IRS's passive beamforming and DNN training. Given the IRS's passive beamforming and semantic encoding strategies, user scheduling is optimized using the DRL method. Numerical results validate that our JSCE scheme achieves superior semantic throughput compared to the conventional schemes and efficiently reduces the semantic encoder's model size in multi-user scenarios.
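As a rough illustration of what passive beamforming means here, the sketch below models a single-antenna, single-user link in which each IRS element adds one phase-shifted reflected path to the direct path. This is a textbook toy model, not the XD-DRL scheme of the paper; the function names, the Rayleigh-like channel draw, and the element count are all assumptions made for the example.

```python
import cmath
import math
import random

def effective_channel(h_direct, cascaded, thetas):
    """Direct path plus one reflected path per IRS element.

    cascaded[n] is the product of the transmitter-to-element and
    element-to-receiver coefficients (toy single-antenna assumption).
    """
    return h_direct + sum(c * cmath.exp(1j * t) for c, t in zip(cascaded, thetas))

def aligned_phases(h_direct, cascaded):
    """Phase shifts that rotate every reflected path onto the direct path."""
    target = cmath.phase(h_direct)
    return [target - cmath.phase(c) for c in cascaded]

def random_channel(rng):
    """Hypothetical complex Gaussian channel coefficient with unit variance."""
    return complex(rng.gauss(0, 1), rng.gauss(0, 1)) / math.sqrt(2)

rng = random.Random(0)
N = 16  # number of IRS elements (hypothetical)
h_d = random_channel(rng)
casc = [random_channel(rng) * random_channel(rng) for _ in range(N)]

random_thetas = [rng.uniform(0, 2 * math.pi) for _ in range(N)]
gain_random = abs(effective_channel(h_d, casc, random_thetas))
gain_aligned = abs(effective_channel(h_d, casc, aligned_phases(h_d, casc)))
print(gain_random, gain_aligned)
```

With aligned phases all paths add coherently, so the gain equals the sum of the individual path magnitudes. In the multi-user setting of the abstract no such closed form is available, which is presumably why the phase shifts are learned jointly with the encoder.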

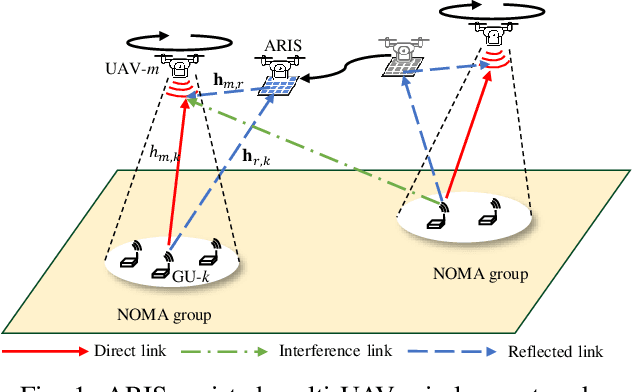

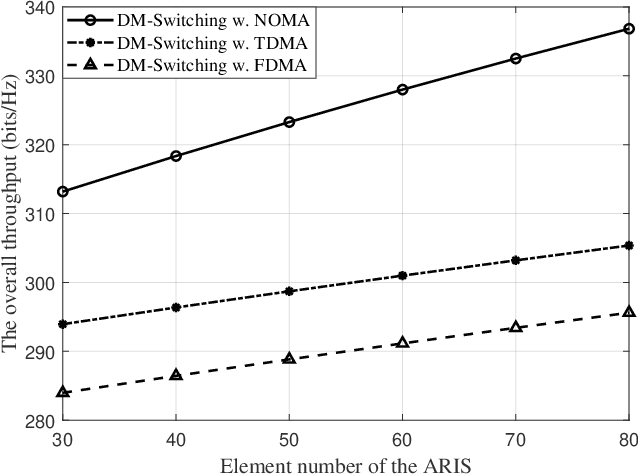

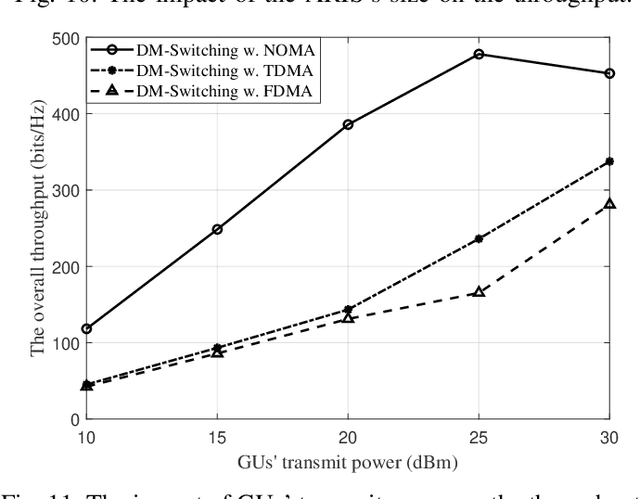

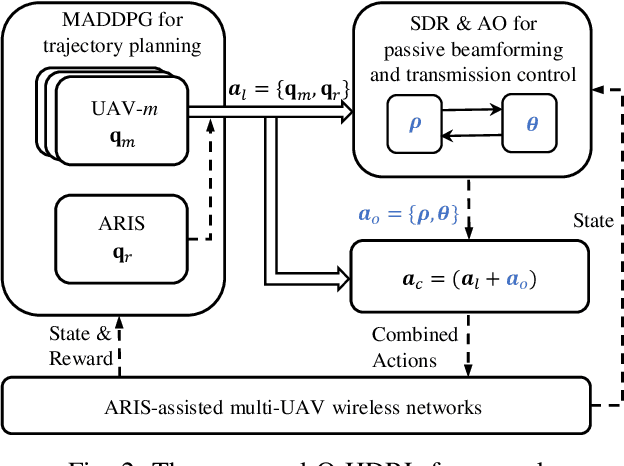

Exploiting NOMA Transmissions in Multi-UAV-assisted Wireless Networks: From Aerial-RIS to Mode-switching UAVs

Dec 29, 2024

Abstract:In this paper, we consider an aerial reconfigurable intelligent surface (ARIS)-assisted wireless network, where multiple unmanned aerial vehicles (UAVs) collect data from ground users (GUs) by using the non-orthogonal multiple access (NOMA) method. The ARIS provides enhanced channel controllability to improve the NOMA transmissions and reduce the co-channel interference among UAVs. We also propose a novel dual-mode switching scheme, where each UAV equipped with both an ARIS and a radio frequency (RF) transceiver can adaptively perform passive reflection or active transmission. We aim to maximize the overall network throughput by jointly optimizing the UAVs' trajectory planning and operating modes, the ARIS's passive beamforming, and the GUs' transmission control strategies. We propose an optimization-driven hierarchical deep reinforcement learning (O-HDRL) method that decomposes this joint problem into a series of subproblems. Specifically, the multi-agent deep deterministic policy gradient (MADDPG) adjusts the UAVs' trajectory planning and mode switching strategies, while the passive beamforming and transmission control strategies are tackled by optimization methods. Numerical results reveal that the O-HDRL efficiently improves the learning stability and reward performance compared to the benchmark methods. Meanwhile, the dual-mode switching scheme is verified to achieve a higher throughput performance compared to the fixed ARIS scheme.
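For readers unfamiliar with uplink NOMA, the following minimal sketch computes per-user rates under successive interference cancellation (SIC) at a single receiver. It is the standard textbook rate expression only and does not model the ARIS, the UAV trajectories, or the O-HDRL method; the powers, channel gains, and noise level are hypothetical values.

```python
import math

def uplink_noma_rates(p, g, noise):
    """Per-user rates (bit/s/Hz) for uplink NOMA with SIC.

    Users are decoded in list order; each user sees interference only
    from users that have not yet been decoded and removed.
    """
    rates = []
    for k in range(len(p)):
        interference = sum(p[j] * g[j] for j in range(k + 1, len(p)))
        sinr = p[k] * g[k] / (interference + noise)
        rates.append(math.log2(1 + sinr))
    return rates

powers = [0.2, 0.2]    # transmit powers in watts (hypothetical)
gains = [1e-6, 2e-7]   # channel power gains (hypothetical)
noise = 1e-9           # noise power in watts (hypothetical)

rates = uplink_noma_rates(powers, gains, noise)
sum_capacity = math.log2(1 + sum(pk * gk for pk, gk in zip(powers, gains)) / noise)
print(rates, sum(rates), sum_capacity)
```

The individual rates depend on the decoding order, but their sum always equals the multiple-access sum capacity, so channel control (for example via a reflecting surface) mainly changes how large the received powers are and how the rate is split among users.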

Matching-Driven Deep Reinforcement Learning for Energy-Efficient Transmission Parameter Allocation in Multi-Gateway LoRa Networks

Jul 18, 2024

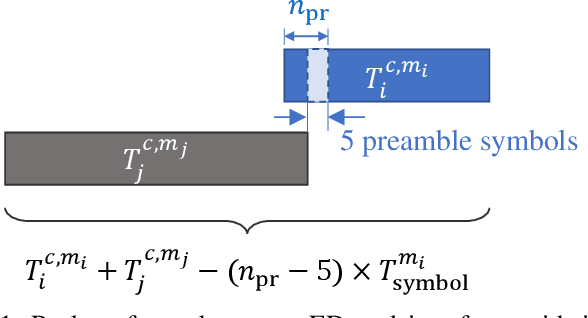

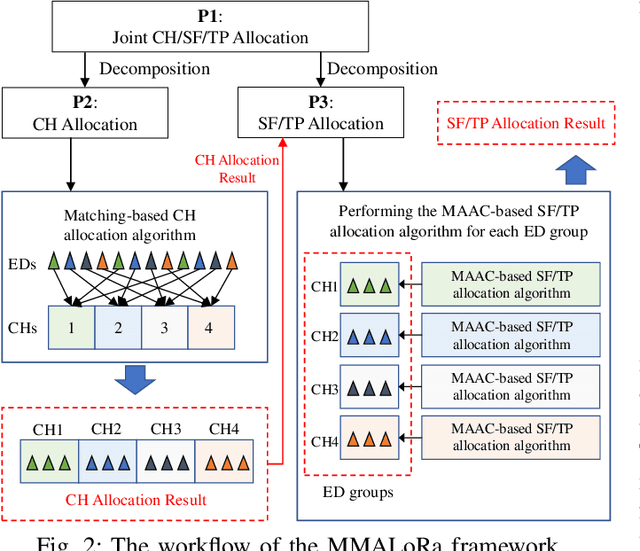

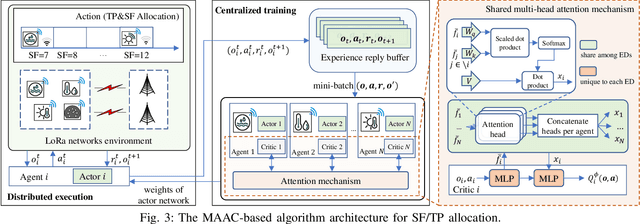

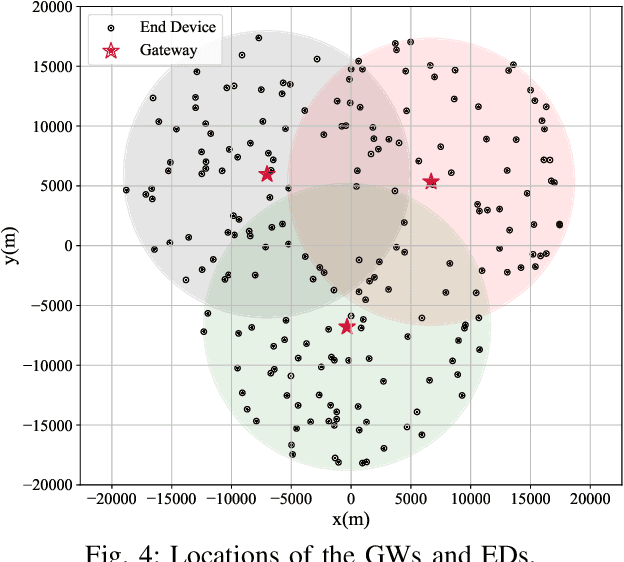

Abstract:Long-range (LoRa) communication technology, distinguished by its low power consumption and long communication range, is widely used in the Internet of Things. Nevertheless, the LoRa MAC layer adopts pure ALOHA for medium access control, which may suffer from severe packet collisions as the network scale expands, consequently reducing the system energy efficiency (EE). To address this issue, it is critical to carefully allocate transmission parameters such as the channel (CH), transmission power (TP) and spreading factor (SF) to each end device (ED). Owing to the low duty cycle and sporadic traffic of LoRa networks, evaluating the system EE under various parameter settings proves to be time-consuming. Consequently, we propose an analytical model aimed at calculating the system EE while fully considering the impact of multiple gateways, duty cycling, quasi-orthogonal SFs and capture effects. On this basis, we investigate a joint CH, SF and TP allocation problem, with the objective of optimizing the system EE for uplink transmissions. Due to the NP-hard complexity of the problem, the optimization problem is decomposed into two subproblems: CH assignment and SF/TP assignment. First, a matching-based algorithm is introduced to address the CH assignment subproblem. Then, an attention-based multiagent reinforcement learning technique is employed to address the SF/TP assignment subproblem for EDs allocated to the same CH, which reduces the number of learning agents to achieve fast convergence. The simulation outcomes indicate that the proposed approach converges quickly under various parameter settings and obtains significantly better system EE than baseline algorithms.
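To make the collision problem concrete, here is a small sketch of the classical pure-ALOHA model combined with the LoRa symbol-time relation. It is far simpler than the analytical model described in the abstract: it ignores multiple gateways, duty cycling, quasi-orthogonal SFs, and capture effects, and it approximates a packet as a fixed number of symbols. The packet length, bandwidth, and arrival rate are hypothetical.

```python
import math

def lora_symbol_time(sf, bandwidth_hz):
    """Duration of one LoRa symbol: 2**SF chips sent at bandwidth_hz chips/s."""
    return (2 ** sf) / bandwidth_hz

def aloha_success_probability(arrival_rate, airtime):
    """Probability that no other packet starts within the 2*airtime
    vulnerable window, assuming Poisson arrivals of equal-length packets
    on one channel and one SF."""
    return math.exp(-2 * arrival_rate * airtime)

def aloha_throughput(offered_load):
    """Normalised pure-ALOHA throughput S = G * exp(-2G)."""
    return offered_load * math.exp(-2 * offered_load)

SYMBOLS_PER_PACKET = 40   # crude packet-length approximation (hypothetical)
BANDWIDTH_HZ = 125_000    # bandwidth in Hz (hypothetical choice)
ARRIVAL_RATE = 1.0        # aggregate packets per second (hypothetical)

success_by_sf = {}
for sf in range(7, 13):
    airtime = SYMBOLS_PER_PACKET * lora_symbol_time(sf, BANDWIDTH_HZ)
    success_by_sf[sf] = aloha_success_probability(ARRIVAL_RATE, airtime)
    print(sf, round(airtime, 4), round(success_by_sf[sf], 4))
```

Each increment of the SF doubles the symbol time, so at a fixed arrival rate the success probability falls quickly for high SFs. This is one reason the SF assignment interacts so strongly with energy efficiency.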

Multi-Time Scale Service Caching and Pricing in MEC Systems with Dynamic Program Popularity

Jul 04, 2024

Abstract:In mobile edge computing systems, base stations (BSs) equipped with edge servers can provide computing services to users to reduce their task execution time. However, there is always a conflict of interest between the BS and users. The BS prices the service programs based on user demand to maximize its own profit, while the users determine their offloading strategies based on the prices to minimize their costs. Moreover, service programs need to be pre-cached to meet immediate computing needs. Due to the limited caching capacity and variations in service program popularity, the BS must dynamically select which service programs to cache. Since service caching and pricing have different needs for adjustment time granularities, we propose a two-time scale framework to jointly optimize service caching, pricing and task offloading. For the large time scale, we propose a game-nested deep reinforcement learning algorithm to dynamically adjust service caching according to the estimated popularity information. For the small time scale, by modeling the interaction between the BS and users as a two-stage game, we prove the existence of the equilibrium under incomplete information and then derive the optimal pricing and offloading strategies. Extensive simulations based on a real-world dataset demonstrate the efficiency of the proposed approach.
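The leader-follower structure of such a two-stage game can be sketched with a deliberately simple complete-information example. The logarithmic user benefit, the unit cost, and every name below are assumptions chosen so that the equilibrium has a closed form; they are not the utility functions or the incomplete-information setting of the paper.

```python
import math

def follower_best_response(price, a):
    """Stage 2: the user picks x >= 0 maximising a*ln(1 + x) - price*x."""
    return max(a / price - 1.0, 0.0)

def leader_profit(price, a, unit_cost):
    """BS profit when the user best-responds to the announced price."""
    return (price - unit_cost) * follower_best_response(price, a)

def leader_optimal_price(a, unit_cost):
    """Stage 1: solve d/dp[(p - c)(a/p - 1)] = a*c/p**2 - 1 = 0.

    Valid for 0 < unit_cost < a, so that demand is positive at the optimum.
    """
    return math.sqrt(a * unit_cost)

a, c = 4.0, 1.0   # benefit scale and unit service cost (hypothetical)
p_star = leader_optimal_price(a, c)
x_star = follower_best_response(p_star, a)
print(p_star, x_star, leader_profit(p_star, a, c))
```

The game is solved by backward induction: the follower's best response is derived first and then substituted into the leader's profit. With a = 4 and c = 1 the leader's price is 2, and no other price on a fine grid yields a higher profit.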

Can We Enhance the Quality of Mobile Crowdsensing Data Without Ground Truth?

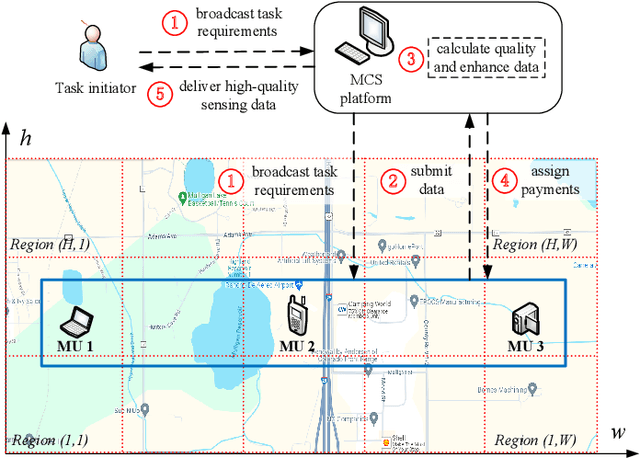

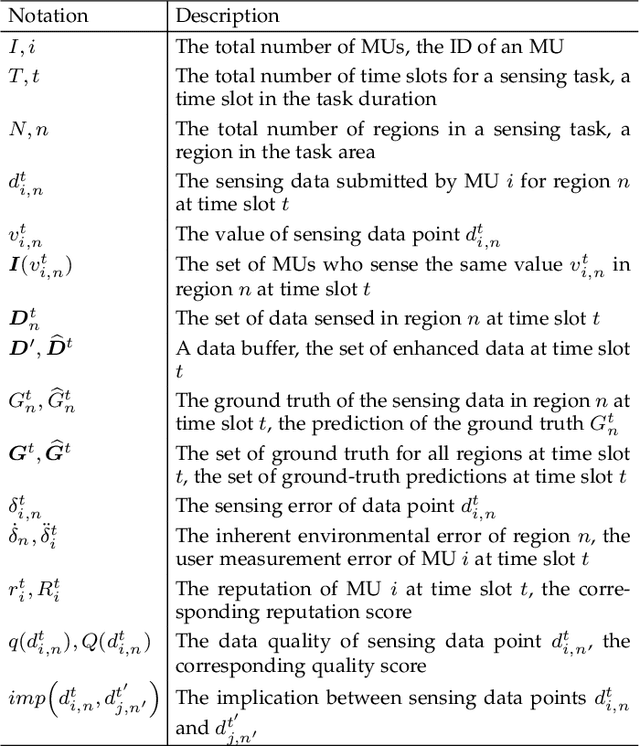

May 29, 2024

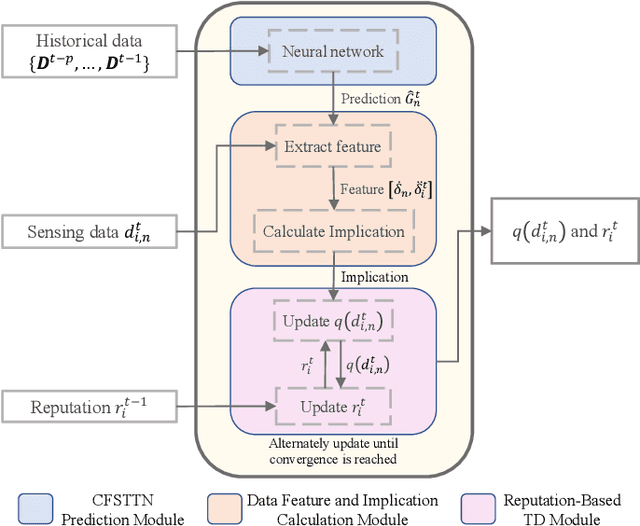

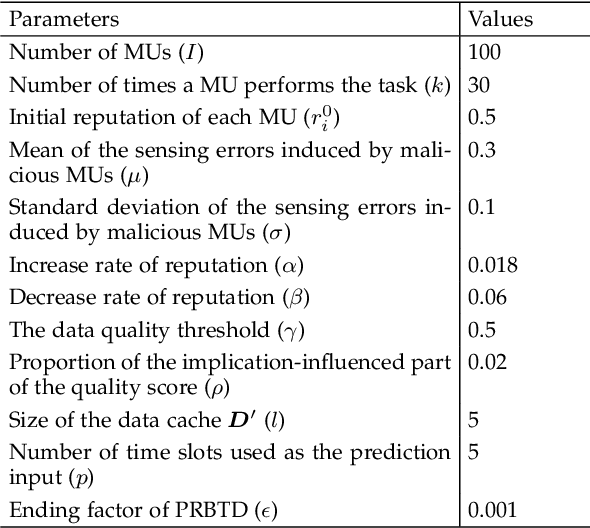

Abstract:Mobile crowdsensing (MCS) has emerged as a prominent trend across various domains. However, ensuring the quality of the sensing data submitted by mobile users (MUs) remains a complex and challenging problem. To address this challenge, an advanced method is required to detect low-quality sensing data and identify malicious MUs that may disrupt the normal operations of an MCS system. Therefore, this article proposes a prediction- and reputation-based truth discovery (PRBTD) framework, which can separate low-quality data from high-quality data in sensing tasks. First, we apply a correlation-focused spatial-temporal transformer network to predict the ground truth of the input sensing data. Then, we extract the sensing errors of the data as features based on the prediction results to calculate the implications among the data. Finally, we design a reputation-based truth discovery (TD) module for identifying low-quality data with their implications. Given sensing data submitted by MUs, PRBTD can eliminate the data with heavy noise and identify malicious MUs with high accuracy. Extensive experimental results demonstrate that PRBTD outperforms the existing methods in terms of identification accuracy and data quality enhancement.
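The general idea behind weight-based truth discovery can be sketched as an alternating update: estimate the truth as a weighted average of the reports, then lower the weight of users whose reports are far from that estimate. The code below is a generic iterative scheme under assumed data, not the PRBTD framework, which additionally relies on a spatial-temporal transformer for prediction. It assumes at least two users with differing reports.

```python
import math

def truth_discovery(reports, iterations=10, eps=1e-9):
    """reports[u][t] is user u's value for task t.

    Returns (truths, weights) after alternating truth and weight updates.
    """
    n_users = len(reports)
    n_tasks = len(reports[0])
    weights = [1.0] * n_users
    truths = [0.0] * n_tasks
    for _ in range(iterations):
        total_w = sum(weights)
        # Truth update: weighted average of all reports per task.
        truths = [sum(weights[u] * reports[u][t] for u in range(n_users)) / total_w
                  for t in range(n_tasks)]
        # Weight update: users with a larger squared error get a smaller weight.
        errors = [sum((reports[u][t] - truths[t]) ** 2 for t in range(n_tasks)) + eps
                  for u in range(n_users)]
        total_err = sum(errors)
        weights = [-math.log(e / total_err) for e in errors]
    return truths, weights

# Three roughly honest users and one user with a large bias (made-up data).
reports = [
    [20.1, 20.9, 22.0],
    [19.8, 21.2, 21.9],
    [20.0, 21.1, 22.2],
    [30.0, 31.0, 32.0],
]
truths, weights = truth_discovery(reports)
print(truths, weights)
```

In this made-up example the biased user ends up with the smallest weight, and the estimates stay close to the honest reports, whereas a plain average would be pulled upward by about 2.5 on every task.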

Multiagent Reinforcement Learning with an Attention Mechanism for Improving Energy Efficiency in LoRa Networks

Sep 16, 2023

Abstract:Long Range (LoRa) wireless technology, characterized by low power consumption and a long communication range, is regarded as one of the enabling technologies for the Industrial Internet of Things (IIoT). However, as the network scale increases, the energy efficiency (EE) of LoRa networks decreases sharply due to severe packet collisions. To address this issue, it is essential to appropriately assign transmission parameters such as the spreading factor and transmission power for each end device (ED). However, due to the sporadic traffic and low duty cycle of LoRa networks, evaluating the system EE performance under different parameter settings is time-consuming. Therefore, we first formulate an analytical model to calculate the system EE. On this basis, we propose a transmission parameter allocation algorithm based on multiagent reinforcement learning (MALoRa) with the aim of maximizing the system EE of LoRa networks. Notably, MALoRa employs an attention mechanism to guide each ED to better learn how much "attention" should be given to the parameter assignments for relevant EDs when seeking to improve the system EE. Simulation results demonstrate that MALoRa significantly improves the system EE compared with baseline algorithms with an acceptable degradation in packet delivery rate (PDR).
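As a minimal illustration of how an attention mechanism assigns relative importance, the sketch below implements scaled dot-product attention for a single query in plain Python. How MALoRa embeds each ED's parameter assignment and where attention sits in its architecture are not specified in the abstract, so the two-dimensional embeddings here are purely hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Returns the attention weights and the weighted sum of the values.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# One agent's query and three other agents' keys and values (hypothetical).
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0], [2.0], [3.0]]

attn_weights, context = attention(query, keys, values)
print(attn_weights, context)
```

The weights sum to one, and the key most similar to the query receives the largest share. This is the sense in which an agent can learn to attend more to the EDs whose settings matter most for its own decision.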
