Nan Cheng

Tony

LLM-Empowered Cooperative Content Caching in Vehicular Fog Caching-Assisted Platoon Networks

Feb 04, 2026

Abstract: This letter proposes a novel three-tier content caching architecture for Vehicular Fog Caching (VFC)-assisted platoons, where the VFC is formed by the vehicles driving near the platoon. The system strategically coordinates storage across local platoon vehicles, dynamic VFC clusters, and a cloud server (CS) to minimize content retrieval latency. To efficiently manage distributed storage, we integrate large language models (LLMs) for real-time and intelligent caching decisions. The proposed approach leverages LLMs' ability to process heterogeneous information, including user profiles, historical data, content characteristics, and dynamic system states. Through a designed prompting framework encoding task objectives and caching constraints, the LLMs formulate caching as a decision-making task, and our hierarchical deterministic caching mapping strategy enables adaptive request prediction and precise content placement across the three tiers without frequent retraining. Simulation results demonstrate the advantages of our proposed caching scheme.
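
The three-tier retrieval order described above (platoon, then VFC, then cloud server) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the latency values, the class and function names, and the rank-based placement rule are hypothetical and are not taken from the letter, where the ranking would come from the LLM-driven decision step.

```python
from dataclasses import dataclass, field

# Hypothetical per-tier retrieval latencies in milliseconds (illustrative only).
LATENCY_MS = {"platoon": 5.0, "vfc": 20.0, "cloud": 100.0}


@dataclass
class ThreeTierCache:
    platoon: set = field(default_factory=set)  # content cached on platoon vehicles
    vfc: set = field(default_factory=set)      # content cached on nearby VFC vehicles

    def retrieve(self, content_id):
        """Return (tier, latency_ms) for the nearest tier that holds the content."""
        if content_id in self.platoon:
            return "platoon", LATENCY_MS["platoon"]
        if content_id in self.vfc:
            return "vfc", LATENCY_MS["vfc"]
        # The cloud server is assumed to hold every content item.
        return "cloud", LATENCY_MS["cloud"]


def place_by_rank(ranked_ids, platoon_capacity, vfc_capacity):
    """Assumed placement rule: most popular items go to the platoon, the next to the VFC."""
    cache = ThreeTierCache()
    cache.platoon = set(ranked_ids[:platoon_capacity])
    cache.vfc = set(ranked_ids[platoon_capacity:platoon_capacity + vfc_capacity])
    return cache


def average_latency(cache, requests):
    """Mean retrieval latency over a list of requested content ids."""
    return sum(cache.retrieve(r)[1] for r in requests) / len(requests)


# Usage: a predicted popularity ranking (hypothetical) mapped onto the tiers.
cache = place_by_rank(["a", "b", "c", "d"], platoon_capacity=1, vfc_capacity=2)
mean_ms = average_latency(cache, ["a", "b", "d"])
```

A better ranking moves frequently requested items into the lower-latency tiers, which is the quantity the average latency in this sketch would reflect.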

Accurate Network Traffic Matrix Prediction via LEAD: an LLM-Enhanced Adapter-Based Conditional Diffusion Model

Jan 29, 2026

Abstract: Driven by the evolution toward 6G and AI-native edge intelligence, network operations increasingly require predictive and risk-aware adaptation under stringent computation and latency constraints. The network traffic matrix (TM), which characterizes flow volumes between nodes, is a fundamental signal for proactive traffic engineering. However, accurate TM forecasting remains challenging due to the stochastic, non-linear, and bursty nature of network dynamics. Existing discriminative models often suffer from over-smoothing and provide limited uncertainty awareness, leading to poor fidelity under extreme bursts. To address these limitations, we propose LEAD, a Large Language Model (LLM)-Enhanced Adapter-based conditional Diffusion model. First, LEAD adopts a "Traffic-to-Image" paradigm to transform traffic matrices into RGB images, enabling global dependency modeling via vision backbones. Then, we design a "Frozen LLM with Trainable Adapter" model, which efficiently captures temporal semantics with limited computational cost. Moreover, we propose a Dual-Conditioning Strategy to precisely guide a diffusion model to generate complex, dynamic network traffic matrices. Experiments on the Abilene and GEANT datasets demonstrate that LEAD outperforms all baselines. On the Abilene dataset, LEAD attains a 45.2% reduction in RMSE against the best baseline, with the error increasing only slightly from 0.1098 at one-step to 0.1134 at 20-step prediction. Meanwhile, on the GEANT dataset, LEAD achieves an RMSE of 0.0258 at the 20-step prediction horizon, which is 27.3% lower than the best baseline.

RadioDiff-Flux: Efficient Radio Map Construction via Generative Denoise Diffusion Model Trajectory Midpoint Reuse

Jan 06, 2026

Abstract: Accurate radio map (RM) construction is essential to enabling environment-aware and adaptive wireless communication. However, in future 6G scenarios characterized by high-speed network entities and fast-changing environments, it is very challenging to meet real-time requirements. Although generative diffusion models (DMs) can achieve state-of-the-art accuracy with second-level delay, their iterative nature leads to prohibitive inference latency in delay-sensitive scenarios. In this paper, we uncover a key structural property of diffusion processes, namely that the latent midpoints remain highly consistent across semantically similar scenes. Based on this property, we propose RadioDiff-Flux, a novel two-stage latent diffusion framework that decouples static environmental modeling from dynamic refinement, enabling the reuse of precomputed midpoints to bypass redundant denoising. In particular, the first stage generates a coarse latent representation using only static scene features, which can be cached and shared across similar scenarios. The second stage adapts this representation to dynamic conditions and transmitter locations using a pre-trained model, thereby avoiding repeated early-stage computation. The proposed RadioDiff-Flux significantly reduces inference time while preserving fidelity. Experimental results show that RadioDiff-Flux can achieve up to 50$\times$ acceleration with less than 0.15% accuracy loss, demonstrating its practical utility for fast, scalable RM generation in future 6G networks.

Phase-space entropy at acquisition reflects downstream learnability

Dec 22, 2025

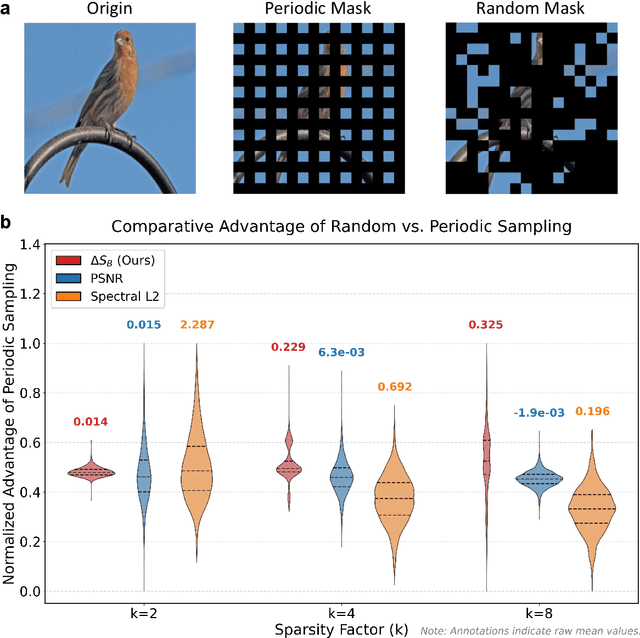

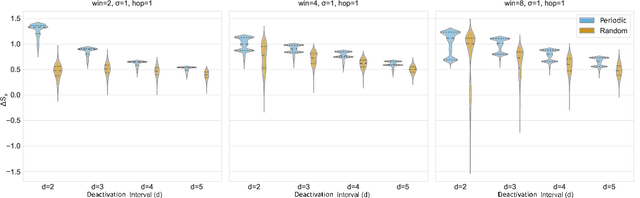

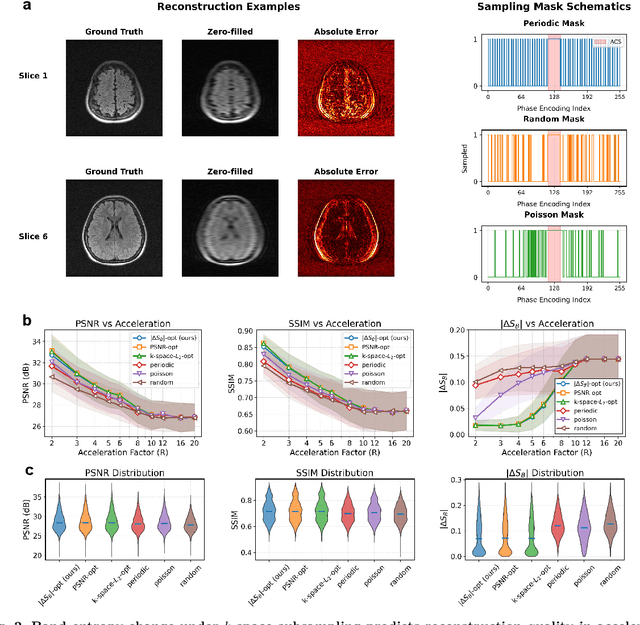

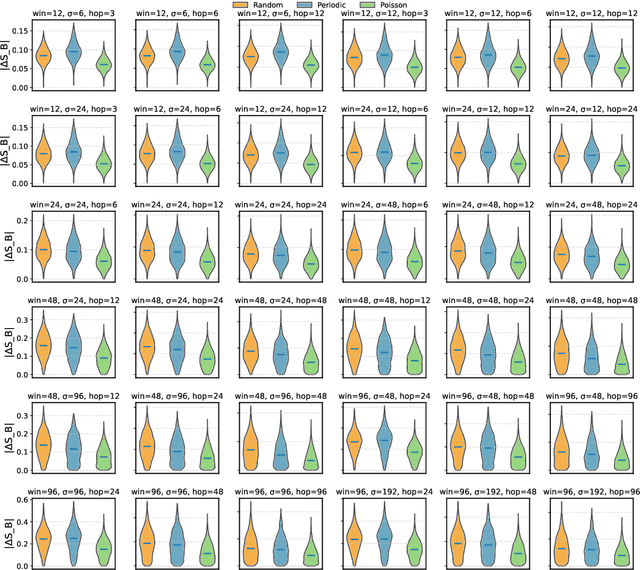

Abstract: Modern learning systems work with data that vary widely across domains, but they all ultimately depend on how much structure is already present in the measurements before any model is trained. This raises a basic question: is there a general, modality-agnostic way to quantify how acquisition itself preserves or destroys the information that downstream learners could use? Here we propose an acquisition-level scalar $\Delta S_{\mathcal B}$ based on instrument-resolved phase space. Unlike pixelwise distortion or purely spectral errors that often saturate under aggressive undersampling, $\Delta S_{\mathcal B}$ directly quantifies how acquisition mixes or removes joint space-frequency structure at the instrument scale. We show theoretically that $\Delta S_{\mathcal B}$ correctly identifies the phase-space coherence of periodic sampling as the physical source of aliasing, recovering classical sampling-theorem consequences. Empirically, across masked image classification, accelerated MRI, and massive MIMO (including over-the-air measurements), $|\Delta S_{\mathcal B}|$ consistently ranks sampling geometries and predicts downstream reconstruction/recognition difficulty without training. In particular, minimizing $|\Delta S_{\mathcal B}|$ enables zero-training selection of variable-density MRI mask parameters that matches designs tuned by conventional pre-reconstruction criteria. These results suggest that phase-space entropy at acquisition reflects downstream learnability, enabling pre-training selection of candidate sampling policies and serving as a shared notion of information preservation across modalities.

Performance Analysis of End-to-End LEO Satellite-Aided Shore-to-Ship Communications: A Stochastic Geometry Approach

Oct 23, 2025

Abstract: Low Earth orbit (LEO) satellite networks have shown strategic superiority in maritime communications, assisting in establishing signal transmissions from shore to ship through space-based links. Traditional performance modeling based on multiple circular orbits struggles to characterize large-scale LEO satellite constellations, so a tractable approach is required to accurately evaluate network performance. In this paper, we propose a theoretical framework for an LEO satellite-aided shore-to-ship communication network (LEO-SSCN), where LEO satellites are distributed as a binomial point process (BPP) on a specific spherical surface. The framework aims to obtain the end-to-end transmission performance by considering signal transmissions through either a marine link or a space link, subject to Rician or Shadowed Rician fading, respectively. Due to the indeterminate position of the serving satellite, accurately modeling the distance from the serving satellite to the destination ship becomes intractable. To address this issue, we propose a distance approximation approach. Then, using this approximation and incorporating a threshold-based communication scheme, we leverage stochastic geometry to derive analytical expressions for the end-to-end transmission success probability and the average transmission rate capacity. Extensive numerical results verify the accuracy of the analysis and demonstrate the effect of key parameters on the performance of LEO-SSCN.
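
As a rough illustration of the BPP model mentioned above, the sketch below places a fixed number of satellites independently and uniformly on a sphere and measures the distance from a ship to the closest one. The altitude, satellite count, ship position, and the nearest-satellite serving rule are all assumptions chosen for illustration; the paper's own serving rule and distance approximation are not reproduced here.

```python
import math
import random

EARTH_RADIUS_KM = 6371.0


def sample_bpp_on_sphere(num_points, radius_km, rng):
    """Draw num_points i.i.d. uniform points on a sphere of the given radius."""
    points = []
    for _ in range(num_points):
        # Uniform z and uniform azimuth give a uniform distribution on the sphere.
        z = rng.uniform(-1.0, 1.0)
        phi = rng.uniform(0.0, 2.0 * math.pi)
        r_xy = math.sqrt(1.0 - z * z)
        points.append((radius_km * r_xy * math.cos(phi),
                       radius_km * r_xy * math.sin(phi),
                       radius_km * z))
    return points


def nearest_satellite_distance(ship_xyz, satellites):
    """Euclidean distance from the ship to the closest satellite."""
    return min(math.dist(ship_xyz, s) for s in satellites)


rng = random.Random(0)
altitude_km = 550.0   # hypothetical constellation altitude
num_satellites = 720  # hypothetical constellation size
satellites = sample_bpp_on_sphere(num_satellites, EARTH_RADIUS_KM + altitude_km, rng)
ship = (0.0, 0.0, EARTH_RADIUS_KM)  # ship placed at the "north pole" for simplicity
distance_km = nearest_satellite_distance(ship, satellites)
```

Repeating the draw many times would give an empirical distribution of the serving distance against which an analytical approximation could be compared.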

Cross-Receiver Generalization for RF Fingerprint Identification via Feature Disentanglement and Adversarial Training

Oct 10, 2025

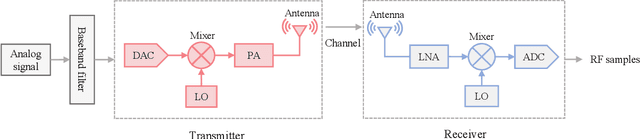

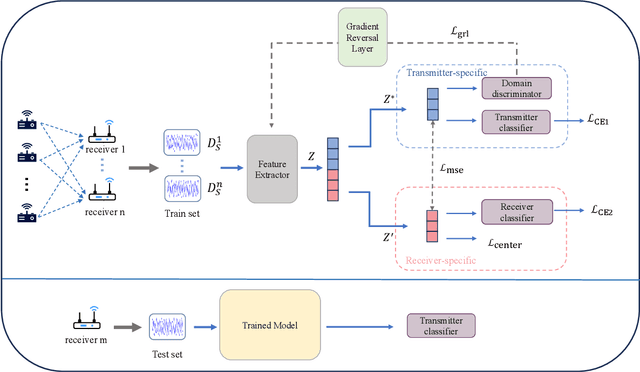

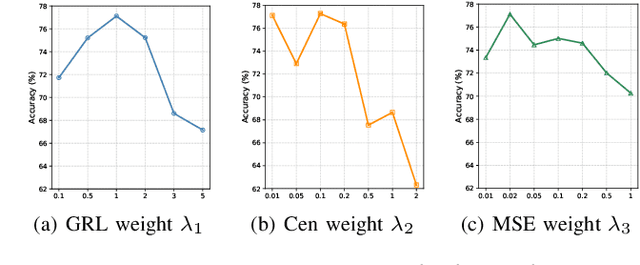

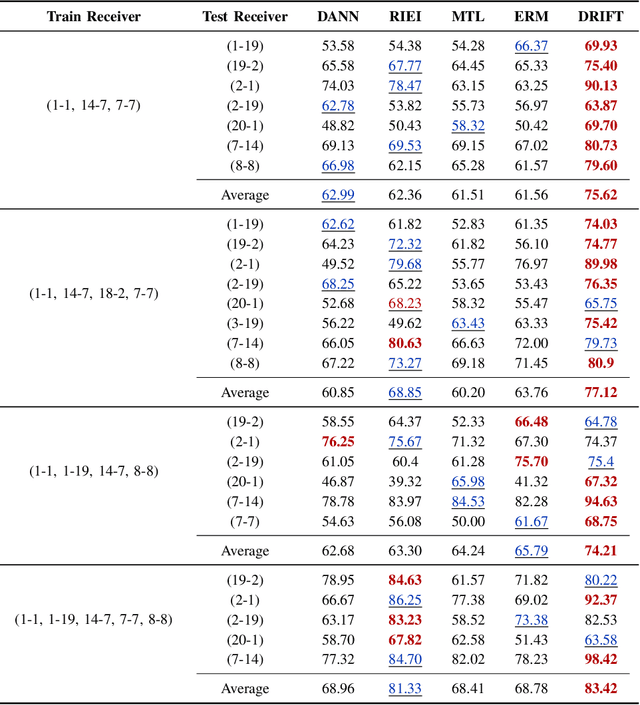

Abstract: Radio frequency fingerprint identification (RFFI) is a critical technique for wireless network security, leveraging intrinsic hardware-level imperfections introduced during device manufacturing to enable precise transmitter identification. While deep neural networks have shown remarkable capability in extracting discriminative features, their real-world deployment is hindered by receiver-induced variability. In practice, RF fingerprint signals comprise transmitter-specific features as well as channel distortions and receiver-induced biases. Although channel equalization can mitigate channel noise, receiver-induced feature shifts remain largely unaddressed, causing the RFFI models to overfit to receiver-specific patterns. This limitation is particularly problematic when training and evaluation share the same receiver, as replacing the receiver in deployment can cause substantial performance degradation. To tackle this challenge, we propose an RFFI framework robust to cross-receiver variability, integrating adversarial training and style transfer to explicitly disentangle transmitter and receiver features. By enforcing domain-invariant representation learning, our method isolates genuine hardware signatures from receiver artifacts, ensuring robustness against receiver changes. Extensive experiments on multi-receiver datasets demonstrate that our approach consistently outperforms state-of-the-art baselines, achieving up to a 10% improvement in average accuracy across diverse receiver settings.

Multibeam High Throughput Satellite: Hardware Foundation, Resource Allocation, and Precoding

Aug 01, 2025

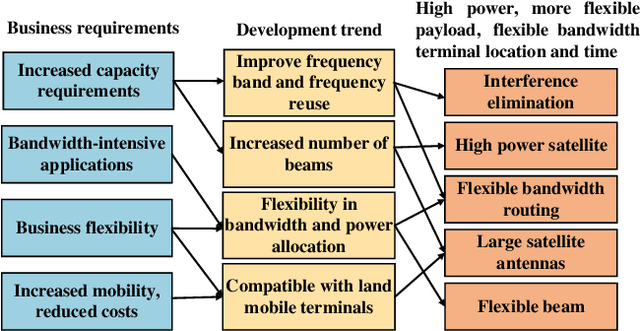

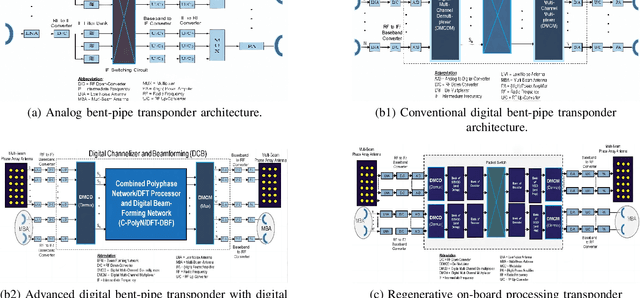

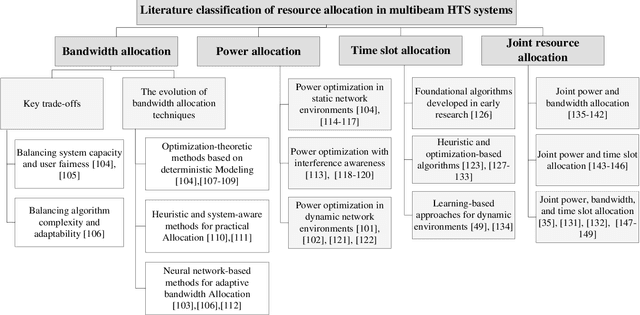

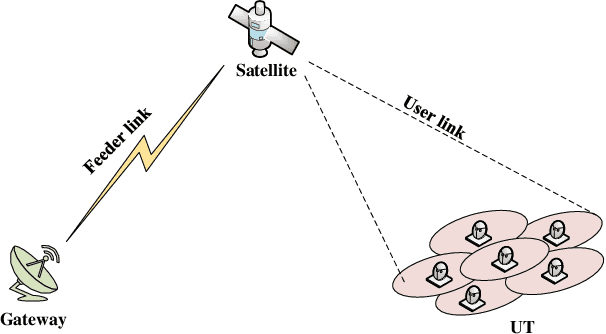

Abstract: With its wide coverage and uninterrupted service, satellite communication is a critical technology for next-generation 6G communications. High throughput satellite (HTS) systems, utilizing multipoint beam and frequency multiplexing techniques, enable satellite communication capacity of up to Tbps to meet the growing traffic demand. Therefore, it is imperative to review the state of the art of multibeam HTS systems and identify their associated challenges and perspectives. First, we summarize the multibeam HTS hardware foundations, including ground station systems, on-board payloads, and user terminals. Subsequently, we review the flexible on-board radio resource allocation approaches for bandwidth, power, time slot, and joint allocation schemes of HTS systems, which optimize resource utilization and cater to non-uniform service demand. Additionally, we survey multibeam precoding methods for the HTS system to achieve full-frequency reuse and interference cancellation, which are classified according to different deployments such as single gateway precoding, multiple gateway precoding, on-board precoding, and hybrid on-board/on-ground precoding. Finally, we discuss the challenges related to Q/V band link outage, time and frequency synchronization of gateways, the accuracy of channel state information (CSI), lightweight payload development, and the application of deep learning (DL). Research on these topics will contribute to enhancing the performance of HTS systems and ultimately delivering high-speed data to areas underserved by terrestrial networks.

RadioDiff-3D: A 3D$\times$3D Radio Map Dataset and Generative Diffusion Based Benchmark for 6G Environment-Aware Communication

Jul 16, 2025

Abstract: Radio maps (RMs) serve as a critical foundation for enabling environment-aware wireless communication, as they provide the spatial distribution of wireless channel characteristics. Despite recent progress in RM construction using data-driven approaches, most existing methods focus solely on pathloss prediction in a fixed 2D plane, neglecting key parameters such as direction of arrival (DoA), time of arrival (ToA), and vertical spatial variations. Such a limitation is primarily due to the reliance on static learning paradigms, which hinder generalization beyond the training data distribution. To address these challenges, we propose UrbanRadio3D, a large-scale, high-resolution 3D RM dataset constructed via ray tracing in realistic urban environments. UrbanRadio3D is over 37$\times$3 larger than previous datasets across a 3D space with three metrics (pathloss, DoA, and ToA), forming a novel 3D$\times$3D dataset with 7$\times$3 more height layers than the prior state-of-the-art (SOTA) dataset. To benchmark 3D RM construction, a UNet with 3D convolutional operators is proposed. We further introduce RadioDiff-3D, a diffusion-model-based generative framework utilizing the 3D convolutional architecture. RadioDiff-3D supports both radiation-aware scenarios with known transmitter locations and radiation-unaware settings based on sparse spatial observations. Extensive evaluations on UrbanRadio3D validate that RadioDiff-3D achieves superior performance in constructing rich, high-dimensional radio maps under diverse environmental dynamics. This work provides a foundational dataset and benchmark for future research in 3D environment-aware communication. The dataset is available at https://github.com/UNIC-Lab/UrbanRadio3D.

AI-based Environment-Aware XL-MIMO Channel Estimation with Location-Specific Prior Knowledge Enabled by CKM

Jul 08, 2025

Abstract: Accurate and efficient acquisition of wireless channel state information (CSI) is crucial to enhance the communication performance of wireless systems. However, with the continuous densification of wireless links, increased channel dimensions, and the use of higher-frequency bands, channel estimation in the sixth generation (6G) and beyond wireless networks faces new challenges, such as insufficient orthogonal pilot sequences, inadequate signal-to-noise ratio (SNR) for channel training, and more sophisticated channel statistical distributions in complex environments. These challenges pose significant difficulties for classical channel estimation algorithms like least squares (LS) and maximum a posteriori (MAP). To address this problem, we propose a novel environment-aware channel estimation framework with location-specific prior channel distribution enabled by the new concept of channel knowledge map (CKM). To this end, we propose a new type of CKM called channel score function map (CSFM), which learns the channel probability density function (PDF) using artificial intelligence (AI) techniques. To fully exploit the prior information in CSFM, we propose a plug-and-play (PnP) based algorithm to decouple the regularized MAP channel estimation problem, thereby reducing the complexity of the optimization process. In addition, we employ Tweedie's formula to establish a connection between the channel score function, defined as the logarithmic gradient of the channel PDF, and the channel denoiser. This allows the use of the high-precision, environment-aware channel denoiser from the CSFM to approximate the channel score function, thus enabling efficient processing of the decoupled channel statistical components. Simulation results show that the proposed CSFM-PnP based channel estimation technique significantly outperforms the conventional techniques in the aforementioned challenging scenarios.
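
The link between score function and denoiser mentioned above can be checked on a toy case. For an observation $y = x + n$ with $n \sim \mathcal{N}(0, \sigma^2)$, Tweedie's formula gives $\mathbb{E}[x \mid y] = y + \sigma^2 \nabla_y \log p(y)$. The sketch below is a real-valued scalar example with an assumed zero-mean Gaussian prior (real channels are complex-valued and far from this simple), where the result must coincide with the closed-form MMSE estimate; all function names are hypothetical.

```python
def gaussian_score(y, prior_var, noise_var):
    """Score d/dy log p(y) for y = x + n, x ~ N(0, prior_var), n ~ N(0, noise_var)."""
    return -y / (prior_var + noise_var)


def tweedie_denoise(y, noise_var, score_fn):
    """Posterior mean E[x | y] = y + noise_var * score(y) (Tweedie's formula)."""
    return y + noise_var * score_fn(y)


def score_from_denoiser(y, noise_var, denoiser):
    """Invert Tweedie's formula: recover the score from an MMSE denoiser."""
    return (denoiser(y) - y) / noise_var


prior_var, noise_var, y = 4.0, 1.0, 2.5

# Denoise using the analytic score of the noisy marginal.
estimate = tweedie_denoise(y, noise_var, lambda v: gaussian_score(v, prior_var, noise_var))

# Closed-form MMSE estimate for the Gaussian case, used as a reference.
mmse = y * prior_var / (prior_var + noise_var)

# Going the other way: the MMSE denoiser yields the same score.
recovered_score = score_from_denoiser(
    y, noise_var, lambda v: v * prior_var / (prior_var + noise_var)
)
```

The second direction, from denoiser to score, is the one the abstract relies on: a learned denoiser stands in for a score function that is not available in closed form.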

Latent Diffusion Model Based Denoising Receiver for 6G Semantic Communication: From Stochastic Differential Theory to Application

Jun 06, 2025

Abstract: In this paper, a novel semantic communication framework empowered by generative artificial intelligence (GAI) is proposed, specifically leveraging the capabilities of diffusion models (DMs). A rigorous theoretical foundation is established based on stochastic differential equations (SDEs), which elucidates the denoising properties of DMs in mitigating additive white Gaussian noise (AWGN) in latent semantic representations. Crucially, a closed-form analytical relationship between the signal-to-noise ratio (SNR) and the denoising timestep is derived, enabling the optimal selection of diffusion parameters for any given channel condition. To address the distribution mismatch between the received signal and the DM's training data, a mathematically principled scaling mechanism is introduced, ensuring robust performance across a wide range of SNRs without requiring model fine-tuning. Built upon this theoretical insight, we develop a latent diffusion model (LDM)-based semantic transceiver, wherein a variational autoencoder (VAE) is employed for efficient semantic compression, and a pretrained DM serves as a universal denoiser. Notably, the proposed architecture is fully training-free at inference time, offering high modularity and compatibility with large-scale pretrained LDMs. This design inherently supports zero-shot generalization and mitigates the challenges posed by out-of-distribution inputs. Extensive experimental evaluations demonstrate that the proposed framework significantly outperforms conventional neural-network-based semantic communication baselines, particularly under low SNR conditions and distributional shifts, thereby establishing a promising direction for GAI-driven robust semantic transmission in future 6G systems.
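
To give a feel for how an SNR can be tied to a denoising timestep, the sketch below uses the standard DDPM forward process $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$, whose per-step SNR is $\bar\alpha_t / (1-\bar\alpha_t)$. This is an assumed stand-in and not the closed-form relation derived in the paper: the linear beta schedule, the unit-power signal assumption, and the function names are all hypothetical.

```python
import math


def alpha_bar_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def match_timestep(snr_db, alpha_bars):
    """Pick the step whose diffusion SNR is closest to the channel SNR.

    Assumes the received latent is y = x + n with unit-power x, so the channel
    SNR is 1 / noise_var. Setting alpha_bar / (1 - alpha_bar) = SNR gives
    alpha_bar = SNR / (1 + SNR). Returns (step index, scale for y).
    """
    snr = 10.0 ** (snr_db / 10.0)
    target = snr / (1.0 + snr)
    t = min(range(len(alpha_bars)), key=lambda i: abs(alpha_bars[i] - target))
    # Scaling y by sqrt(alpha_bar_t) aligns its signal and noise levels with x_t.
    scale = math.sqrt(alpha_bars[t])
    return t, scale


alpha_bars = alpha_bar_schedule()
t_low_snr, scale_low = match_timestep(0.0, alpha_bars)     # noisy channel
t_high_snr, scale_high = match_timestep(20.0, alpha_bars)  # clean channel
```

Under these assumptions a noisier channel maps to a later timestep, so the denoiser starts from deeper in the reverse process and runs more steps.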
