Ruijin Sun

RadioDiff-Flux: Efficient Radio Map Construction via Generative Denoise Diffusion Model Trajectory Midpoint Reuse

Jan 06, 2026

Abstract: Accurate radio map (RM) construction is essential to enabling environment-aware and adaptive wireless communication. However, in future 6G scenarios characterized by high-speed network entities and fast-changing environments, it is very challenging to meet real-time requirements. Although generative diffusion models (DMs) can achieve state-of-the-art accuracy with second-level delay, their iterative nature leads to prohibitive inference latency in delay-sensitive scenarios. In this paper, we uncover a key structural property of diffusion processes: the latent midpoints remain highly consistent across semantically similar scenes. Building on this property, we propose RadioDiff-Flux, a novel two-stage latent diffusion framework that decouples static environmental modeling from dynamic refinement, enabling the reuse of precomputed midpoints to bypass redundant denoising. In particular, the first stage generates a coarse latent representation using only static scene features, which can be cached and shared across similar scenarios. The second stage adapts this representation to dynamic conditions and transmitter locations using a pre-trained model, thereby avoiding repeated early-stage computation. The proposed RadioDiff-Flux significantly reduces inference time while preserving fidelity. Experimental results show that RadioDiff-Flux can achieve up to 50× acceleration with less than 0.15% accuracy loss, demonstrating its practical utility for fast, scalable RM generation in future 6G networks.
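The two-stage reuse idea described in this abstract can be illustrated with a toy sketch. Everything below is hypothetical and is not the authors' implementation: `denoise` is a deterministic stand-in for a diffusion denoiser, `MidpointCache` and its parameters are invented names, and the starting latent is all zeros rather than random noise so that the example is reproducible. It only shows how caching a midpoint per static scene skips the early steps on later requests.

```python
from typing import Dict, Hashable, List

Latent = List[float]


def denoise(latent: Latent, target: Latent, steps: int, rate: float = 0.5) -> Latent:
    """Toy stand-in for a denoiser: pull the latent toward `target` for `steps` iterations."""
    for _ in range(steps):
        latent = [x + rate * (t - x) for x, t in zip(latent, target)]
    return latent


class MidpointCache:
    """Caches the stage-1 midpoint per static scene and counts denoising steps run."""

    def __init__(self, total_steps: int, mid_step: int) -> None:
        self.total_steps = total_steps
        self.mid_step = mid_step
        self._cache: Dict[Hashable, Latent] = {}
        self.steps_run = 0

    def generate(self, scene_key: Hashable, static_target: Latent,
                 dynamic_target: Latent) -> Latent:
        # Stage 1: compute the midpoint from static features only, once per scene.
        if scene_key not in self._cache:
            start = [0.0] * len(static_target)  # assumption: zeros instead of noise
            self._cache[scene_key] = denoise(start, static_target, self.mid_step)
            self.steps_run += self.mid_step
        # Stage 2: refine a copy of the cached midpoint under dynamic conditions.
        remaining = self.total_steps - self.mid_step
        self.steps_run += remaining
        return denoise(list(self._cache[scene_key]), dynamic_target, remaining)


cache = MidpointCache(total_steps=10, mid_step=8)
first = cache.generate("scene-a", [1.0, 1.0], [1.0, 2.0])   # runs 8 + 2 steps
second = cache.generate("scene-a", [1.0, 1.0], [2.0, 1.0])  # runs only 2 more steps
print(cache.steps_run)
```

In this toy setting the second request for the same static scene pays only for the remaining refinement steps, which is the source of the speed-up the abstract describes.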

RadioDiff-$k^2$: Helmholtz Equation Informed Generative Diffusion Model for Multi-Path Aware Radio Map Construction

Apr 22, 2025

Abstract: In this paper, we propose a novel physics-informed generative learning approach, termed RadioDiff-$\bm{k^2}$, for accurate and efficient multipath-aware radio map (RM) construction. As wireless communication evolves towards environment-aware paradigms, driven by the increasing demand for intelligent and proactive optimization in sixth-generation (6G) networks, accurate construction of RMs becomes crucial yet highly challenging. Conventional electromagnetic (EM)-based methods, such as full-wave solvers and ray-tracing approaches, exhibit substantial computational overhead and limited adaptability to dynamic scenarios. Although existing neural network (NN) approaches offer fast inference, they lack sufficient consideration of the underlying physics of EM wave propagation, limiting their effectiveness in accurately modeling critical EM singularities induced by complex multipath environments. To address these fundamental limitations, we propose a novel physics-inspired RM construction method guided explicitly by the Helmholtz equation, which inherently governs EM wave propagation. Specifically, we theoretically establish a direct correspondence between EM singularities, which correspond to the critical spatial features influencing wireless propagation, and regions defined by negative wave numbers in the Helmholtz equation. Based on this insight, we design an innovative dual generative diffusion model (DM) framework comprising one DM dedicated to accurately inferring EM singularities and another DM responsible for reconstructing the complete RM using these singularities along with environmental contextual information. Our physics-informed approach uniquely combines the efficiency advantages of data-driven methods with rigorous physics-based EM modeling, significantly enhancing RM accuracy, particularly in complex propagation environments dominated by multipath effects.
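For reference, the source-free Helmholtz equation mentioned in this abstract can be written as follows. This is the standard textbook form, not a formula taken from the paper. The symbols $u$ (a scalar field component) and $k(\mathbf{r})$ (a position-dependent wave number) are notation assumed here, and reading "negative wave numbers" as regions where $k^2 < 0$ is an interpretation suggested by the title.

```latex
\nabla^2 u(\mathbf{r}) + k^2(\mathbf{r})\, u(\mathbf{r}) = 0
\quad\Longrightarrow\quad
k^2(\mathbf{r}) = -\frac{\nabla^2 u(\mathbf{r})}{u(\mathbf{r})},
\qquad u(\mathbf{r}) \neq 0 .
```

Under this reading, the sign of $k^2$ at a point can be evaluated from the field and its Laplacian wherever the field is nonzero.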

Structural Knowledge-Driven Meta-Learning for Task Offloading in Vehicular Networks with Integrated Communications, Sensing and Computing

Feb 25, 2024

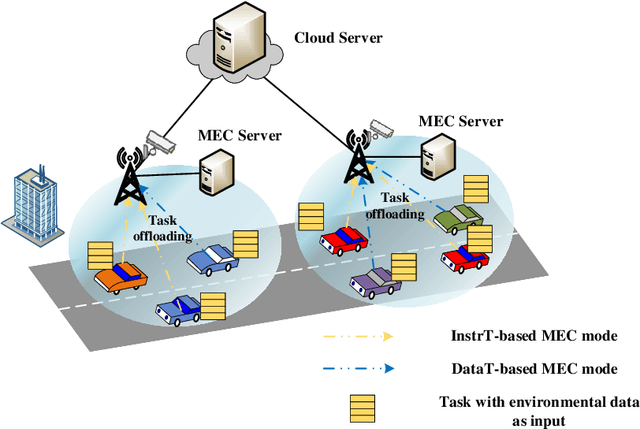

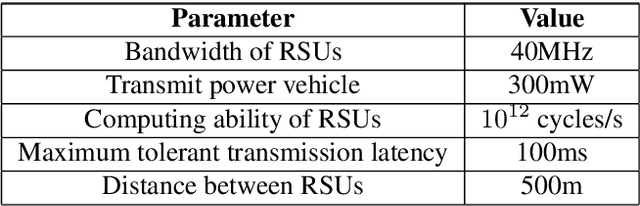

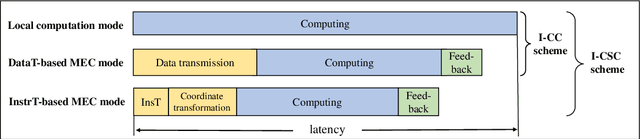

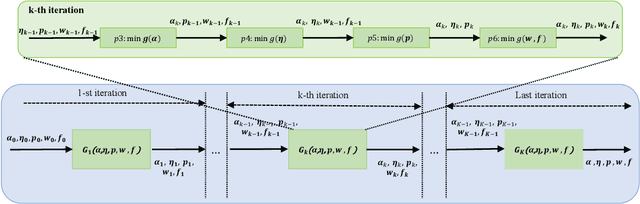

Abstract: Task offloading is a potential solution to satisfy the strict requirements of computation-intensive and latency-sensitive vehicular applications, given the limited onboard computing resources. However, the overwhelming upload traffic may lead to unacceptable uploading time. To tackle this issue, for tasks taking environmental data as input, the data perceived by roadside units (RSUs) equipped with several sensors can be directly exploited for computation, resulting in a novel task offloading paradigm with integrated communications, sensing and computing (I-CSC). With this paradigm, vehicles can choose to upload their sensed data to RSUs or transmit computing instructions to RSUs during the offloading. By optimizing the computation mode and network resources, in this paper, we investigate an I-CSC-based task offloading problem to reduce the cost caused by resource consumption while guaranteeing the latency of each task. Although this non-convex problem can be handled by the alternating minimization (AM) algorithm, which alternately minimizes the four divided sub-problems, this approach leads to high computational complexity and locally optimal solutions. To tackle this challenge, we propose a creative structural knowledge-driven meta-learning (SKDML) method, involving both the model-based AM algorithm and neural networks. Specifically, borrowing the iterative structure of the AM algorithm, also referred to as structural knowledge, the proposed SKDML adopts long short-term memory (LSTM) network-based meta-learning to learn an adaptive optimizer for updating variables in each sub-problem, instead of the handcrafted counterpart in the AM algorithm.
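The iterative structure of alternating minimization that this abstract builds on can be sketched on a toy problem. The objective below is an assumption chosen for illustration: it is convex and has two variable blocks with closed-form updates, whereas the paper's problem is non-convex with four sub-problems. The function name `alternating_minimization` is hypothetical.

```python
from typing import Tuple


def objective(x: float, y: float) -> float:
    """Toy objective f(x, y) = (x - 1)^2 + (y - 2)^2 + x * y (illustrative only)."""
    return (x - 1.0) ** 2 + (y - 2.0) ** 2 + x * y


def alternating_minimization(x: float, y: float, iterations: int = 50) -> Tuple[float, float]:
    """Minimize over one block at a time while holding the other block fixed."""
    for _ in range(iterations):
        # Sub-problem 1: df/dx = 2(x - 1) + y = 0  =>  x = 1 - y / 2
        x = 1.0 - y / 2.0
        # Sub-problem 2: df/dy = 2(y - 2) + x = 0  =>  y = 2 - x / 2
        y = 2.0 - x / 2.0
    return x, y


x_opt, y_opt = alternating_minimization(x=5.0, y=-3.0)
print(x_opt, y_opt, objective(x_opt, y_opt))
```

Each update here is a handcrafted closed-form step. The abstract's proposal is to replace such handcrafted per-block updates with a learned LSTM-based optimizer while keeping the same outer loop.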

Knowledge-Driven Deep Learning Paradigms for Wireless Network Optimization in 6G

Jan 15, 2024

Abstract: In sixth-generation (6G) networks, newly emerging diversified services for massive numbers of users in dynamic network environments must be satisfied by multi-dimensional heterogeneous resources. The resulting large-scale complicated network optimization problems are beyond the capability of model-based theoretical methods due to the overwhelming computational complexity and the long processing time. Although data-driven deep learning (DL) offers fast online inference and universal approximation ability, it relies heavily on abundant training data and lacks interpretability. To address these issues, a new paradigm called knowledge-driven DL has emerged, aiming to integrate proven domain knowledge into the construction of neural networks, thereby exploiting the strengths of both methods. This article provides a systematic review of knowledge-driven DL in wireless networks. Specifically, a holistic framework of knowledge-driven DL in wireless networks is proposed, where knowledge sources, knowledge representation, knowledge integration and knowledge application form a closed loop. Then, a detailed taxonomy of knowledge integration approaches, including knowledge-assisted, knowledge-fused, and knowledge-embedded DL, is presented. Several open issues for future research are also discussed. The insights offered in this article provide a basic principle for the design of network optimization that incorporates communication-specific domain knowledge and DL, facilitating the realization of intelligent 6G networks.

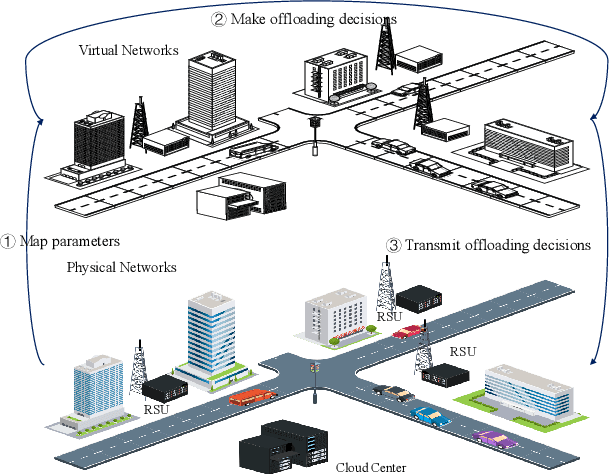

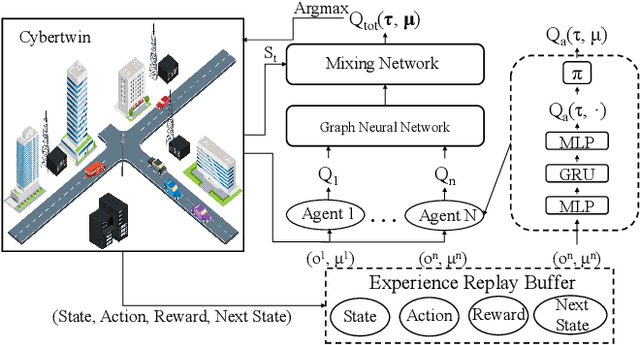

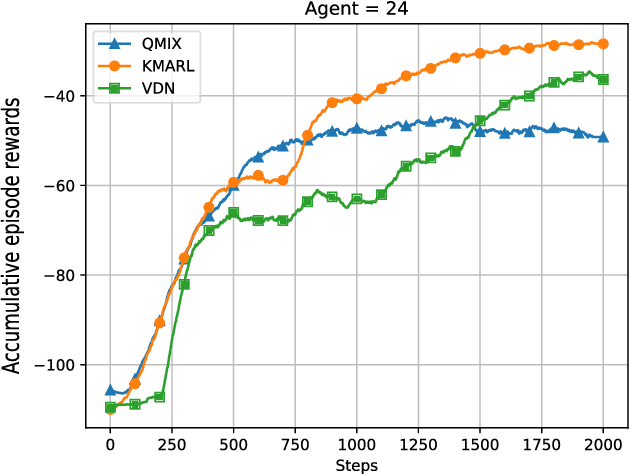

Knowledge-Driven Multi-Agent Reinforcement Learning for Computation Offloading in Cybertwin-Enabled Internet of Vehicles

Aug 04, 2023

Abstract: By offloading computation-intensive tasks of vehicles to roadside units (RSUs), mobile edge computing (MEC) in the Internet of Vehicles (IoV) can relieve the onboard computation burden. However, existing model-based task offloading methods suffer from heavy computational complexity as the number of vehicles increases, while data-driven methods lack interpretability. To address these challenges, in this paper, we propose a knowledge-driven multi-agent reinforcement learning (KMARL) approach to reduce the latency of task offloading in cybertwin-enabled IoV. Specifically, in the considered scenario, the cybertwin serves as a communication agent for each vehicle to exchange information and make offloading decisions in the virtual space. To reduce the latency of task offloading, a KMARL approach is proposed to select the optimal offloading option for each vehicle, where graph neural networks are employed to incorporate domain knowledge concerning the graph-structured communication topology and permutation invariance into neural networks. Numerical results show that our proposed KMARL yields higher rewards and demonstrates improved scalability compared with other methods, benefiting from the integration of domain knowledge.

Scalable Resource Management for Dynamic MEC: An Unsupervised Link-Output Graph Neural Network Approach

Jun 20, 2023

Abstract: Deep learning has been successfully adopted in mobile edge computing (MEC) to optimize task offloading and resource allocation. However, the dynamics of edge networks raise two challenges in neural network (NN)-based optimization methods: low scalability and high training costs. Although conventional node-output graph neural networks (GNNs) can extract features of edge nodes when the network scales, they fail to handle a new scalability issue in which the dimension of the decision space may change as the network scales. To address the issue, in this paper, a novel link-output GNN (LOGNN)-based resource management approach is proposed to flexibly optimize the resource allocation in MEC for an arbitrary number of edge nodes with extremely low algorithm inference delay. Moreover, a label-free unsupervised method is applied to train the LOGNN efficiently, where the gradient of the edge task processing delay with respect to the LOGNN parameters is derived explicitly. In addition, a theoretical analysis of the scalability of the node-output GNN and link-output GNN is performed. Simulation results show that the proposed LOGNN can efficiently optimize the MEC resource allocation problem in a scalable way, with an arbitrary number of servers and users. In addition, the proposed unsupervised training method has better convergence performance and speed than supervised learning and reinforcement learning-based training methods. The code is available at https://github.com/UNIC-Lab/LOGNN.

Digital Twin-Assisted Knowledge Distillation Framework for Heterogeneous Federated Learning

Mar 10, 2023

Abstract: In this paper, to deal with the heterogeneity in federated learning (FL) systems, a knowledge distillation (KD)-driven training framework for FL is proposed, where each user can select its neural network model on demand and distill knowledge from a big teacher model using its own private dataset. To overcome the challenge of training the big teacher model on resource-limited user devices, the digital twin (DT) is exploited so that the teacher model can be trained at the DT located in the server, which has enough computing resources. Then, during model distillation, each user can update the parameters of its model at either the physical entity or the digital agent. The joint problem of model selection, training offloading, and resource allocation for users is formulated as a mixed integer programming (MIP) problem. To solve the problem, Q-learning and optimization are jointly used, where Q-learning selects models for users and determines whether to train locally or on the server, and optimization is used to allocate resources for users based on the output of Q-learning. Simulation results show that the proposed DT-assisted KD framework and joint optimization method can significantly improve the average accuracy of users while reducing the total delay.
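The abstract does not state which distillation loss is used. As a point of reference, a minimal sketch of the widely used temperature-scaled distillation loss (soft-target KL divergence blended with hard-label cross-entropy) is shown below. The function names, the `temperature` and `alpha` defaults, and the example logits are all assumptions for illustration.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: List[float], teacher_logits: List[float], label: int,
            temperature: float = 2.0, alpha: float = 0.5) -> float:
    """alpha * T^2 * KL(teacher || student) + (1 - alpha) * cross-entropy(student, label)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL divergence between the softened teacher and student distributions.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0.0)
    # Standard cross-entropy of the student against the hard label (temperature 1).
    ce = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1.0 - alpha) * ce


teacher = [1.0, 2.0, 3.0]
print(kd_loss([1.0, 2.0, 3.0], teacher, label=2))  # student agrees with teacher
print(kd_loss([3.0, 2.0, 1.0], teacher, label=2))  # student disagrees with teacher
```

In a setup like the one described, each user's smaller student model would minimize such a loss on its private data, with the teacher's logits supplied by the server-side model.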

SigT: An Efficient End-to-End MIMO-OFDM Receiver Framework Based on Transformer

Nov 02, 2022

Abstract: Multiple-input multiple-output and orthogonal frequency-division multiplexing (MIMO-OFDM) are the key technologies in 4G and subsequent wireless communication systems. Conventionally, the MIMO-OFDM receiver is implemented as multiple cascaded blocks with different functions, and the algorithm in each block is designed based on ideal assumptions of wireless channel distributions. However, these assumptions may fail in practical complex wireless environments. The deep learning (DL) method has the ability to capture key features from complex and huge data. In this paper, a novel end-to-end MIMO-OFDM receiver framework based on the transformer, named SigT, is proposed. By regarding the signal received from each antenna as a token of the transformer, the spatial correlation of different antennas can be learned and the critical zero-shot problem can be mitigated. Furthermore, the proposed SigT framework can work well without the inserted pilots, which improves the useful data transmission efficiency. Experimental results show that SigT achieves much higher performance in terms of signal recovery accuracy than benchmark methods, even in a low SNR environment or with a small number of training samples. Code is available at https://github.com/SigTransformer/SigT.

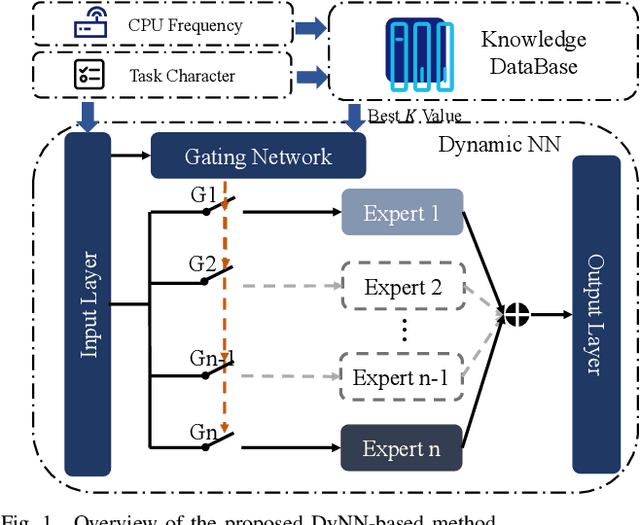

On-Demand Resource Management for 6G Wireless Networks Using Knowledge-Assisted Dynamic Neural Networks

Aug 02, 2022

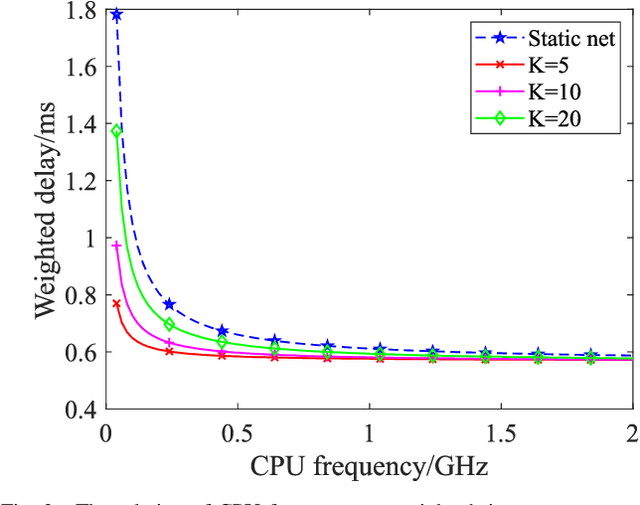

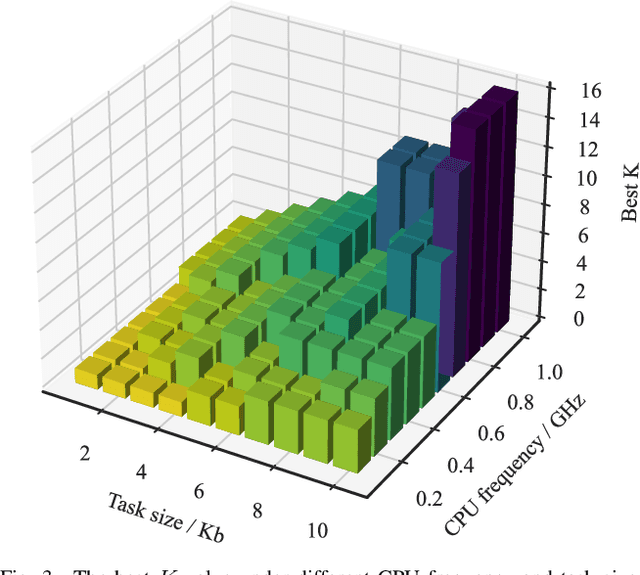

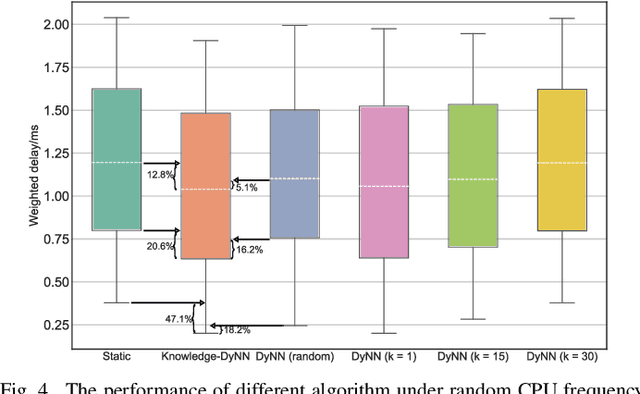

Abstract: On-demand service provisioning is a critical yet challenging issue in 6G wireless communication networks, since emerging services have significantly diverse requirements and the network resources become increasingly heterogeneous and dynamic. In this paper, we study the on-demand wireless resource orchestration problem with a focus on the computing delay in the orchestration decision-making process. Specifically, we incorporate the decision-making delay into the optimization problem. Then, a dynamic neural network (DyNN)-based method is proposed, where the model complexity can be adjusted according to the service requirements. We further build a knowledge base representing the relationship among the service requirements, available computing resources, and the resource allocation performance. By exploiting the knowledge, the width of the DyNN can be selected in a timely manner, further improving the performance of orchestration. Simulation results show that the proposed scheme significantly outperforms the traditional static neural network, and also shows sufficient flexibility in on-demand service provisioning.
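One way to picture knowledge-assisted width selection is a lookup over profiled (delay, performance) pairs. The sketch below is a hypothetical illustration and not the paper's method: the `Entry` fields, the example widths, and the rule "pick the best-performing width whose decision delay fits the budget" are all assumptions.

```python
from typing import Dict, NamedTuple, Optional


class Entry(NamedTuple):
    """Profiled behaviour of one network width (values here are made up)."""
    delay_ms: float
    performance: float


def select_width(knowledge_base: Dict[float, Entry],
                 delay_budget_ms: float) -> Optional[float]:
    """Return the width with the best performance among those meeting the delay budget."""
    feasible = {w: e for w, e in knowledge_base.items() if e.delay_ms <= delay_budget_ms}
    if not feasible:
        return None  # no width can decide within the budget
    return max(feasible, key=lambda w: feasible[w].performance)


# Hypothetical knowledge base: width multiplier -> (decision delay, allocation performance).
kb = {
    0.25: Entry(delay_ms=1.0, performance=0.80),
    0.50: Entry(delay_ms=2.0, performance=0.90),
    1.00: Entry(delay_ms=5.0, performance=0.95),
}
print(select_width(kb, delay_budget_ms=3.0))
```

A table lookup like this keeps the selection step itself cheap, which matters when the decision-making delay is part of the optimization objective.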
