Tingxiang Fan

SMAT: A Self-Reinforcing Framework for Simultaneous Mapping and Tracking in Unbounded Urban Environments

Apr 27, 2023

Abstract:With the increasing prevalence of robots in daily life, it is crucial to enable robots to construct a reliable map online to navigate in unbounded and changing environments. Although existing methods can individually achieve the goals of spatial mapping and dynamic object detection and tracking, limited research has been conducted on an effective combination of these two important abilities. The proposed framework, SMAT (Simultaneous Mapping and Tracking), integrates the front-end dynamic object detection and tracking module with the back-end static mapping module using a self-reinforcing mechanism, which promotes mutual improvement of mapping and tracking performance. The conducted experiments demonstrate the framework's effectiveness in real-world applications, achieving successful long-range navigation and mapping in multiple urban environments using only one LiDAR, a CPU-only onboard computer, and a consumer-level GPS receiver.

DynamicFilter: an Online Dynamic Objects Removal Framework for Highly Dynamic Environments

Jun 30, 2022

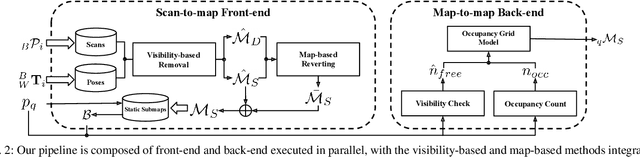

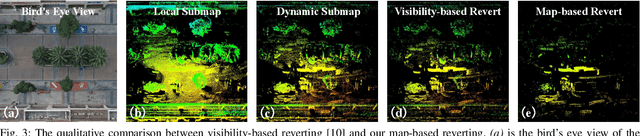

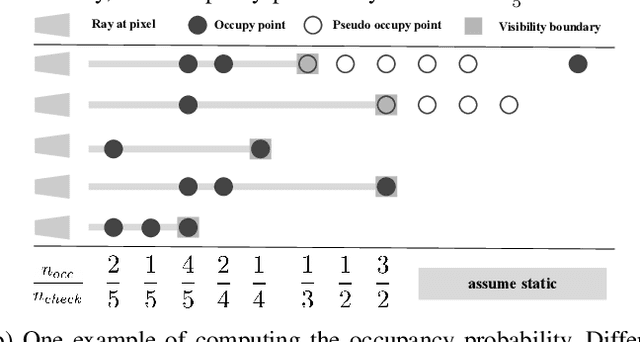

Abstract:The emergence of large numbers of dynamic objects diversifies the spatial structures that robots encounter when navigating urban environments. Therefore, the online removal of dynamic objects is critical. In this paper, we introduce a novel online removal framework for highly dynamic urban environments. The framework consists of a scan-to-map front-end module and a map-to-map back-end module. Both the front-end and the back-end deeply integrate the visibility-based approach and the map-based approach. The experiments validate the framework in highly dynamic simulation scenarios and on real-world datasets.

DiffSRL: Learning Dynamic-aware State Representation for Deformable Object Control with Differentiable Simulator

Oct 24, 2021

Abstract:Dynamic state representation learning is an important task in robot learning. A latent space that can capture dynamics-related information has wide application in areas such as accelerating model-free reinforcement learning, closing the simulation-to-reality gap, and reducing motion planning complexity. However, current dynamic state representation learning methods scale poorly on complex dynamic systems such as deformable objects, and cannot directly embed well-defined simulation functions into the training pipeline. We propose DiffSRL, a dynamic state representation learning pipeline utilizing differentiable simulation that can embed complex dynamics models as part of the end-to-end training. We also integrate differentiable dynamic constraints as part of the pipeline, which provides incentives for the latent state to be aware of dynamical constraints. We further establish a state representation learning benchmark on a soft-body simulation system, PlasticineLab, and our model demonstrates superior performance in terms of capturing long-term dynamics as well as reward prediction.

Crowd-Driven Mapping, Localization and Planning

Jan 03, 2021

Abstract:Navigation in dense crowds is a well-known open problem in robotics with many challenges in mapping, localization, and planning. Traditional solutions consider dense pedestrians as passive/active moving obstacles that are the cause of all troubles: they negatively affect the sensing of static scene landmarks and must be actively avoided for safety. In this paper, we provide a new perspective: the crowd flow locally observed can be treated as a sensory measurement about the surrounding scenario, encoding not only the scene's traversability but also its social navigation preference. We demonstrate that even using the crowd-flow measurement alone without any sensing about static obstacles, our method still accomplishes good results for mapping, localization, and social-aware planning in dense crowds. Videos of the experiments are available at https://sites.google.com/view/crowdmapping.

Autonomous Social Distancing in Urban Environments using a Quadruped Robot

Aug 20, 2020

Abstract:The COVID-19 pandemic has become a global challenge faced by people all over the world. Social distancing has proved to be an effective practice for reducing the spread of COVID-19. Against this backdrop, we propose that surveillance robots can not only monitor but also promote social distancing. Robots can be flexibly deployed, and they can take precautionary actions to remind people to practice social distancing. In this paper, we introduce a fully autonomous surveillance robot based on a quadruped platform that can promote social distancing in complex urban environments. Specifically, to achieve autonomy, we mount multiple cameras and a 3D LiDAR on the legged robot. The robot then uses an onboard real-time social distancing detection system to track nearby pedestrian groups. Next, the robot uses a crowd-aware navigation algorithm to move freely in highly dynamic scenarios. Finally, the robot uses a crowd-aware routing algorithm to effectively promote social distancing, sending suggestions to over-crowded pedestrians through human-friendly verbal cues. We demonstrate and validate that our robot can be operated autonomously by conducting several experiments in various urban scenarios.
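As a rough illustration of what a social distancing check might involve, the sketch below flags pairs of tracked pedestrians who stand closer than a distance threshold. The abstract does not describe the internals of the detection system, so the function name `find_violations`, the 2 m threshold, and the use of 2D ground-plane positions are all assumptions made for this example.

```python
import math
from itertools import combinations

def find_violations(positions, min_distance=2.0):
    """Return index pairs of pedestrians closer than min_distance.

    positions: list of (x, y) ground-plane coordinates in metres
    (hypothetical input format). min_distance is an assumed threshold.
    """
    violations = []
    # Compare every unordered pair of pedestrians exactly once.
    for (i, p), (j, q) in combinations(enumerate(positions), 2):
        if math.dist(p, q) < min_distance:
            violations.append((i, j))
    return violations

# Hypothetical tracked positions: two close pairs, far apart from each other.
pedestrians = [(0.0, 0.0), (1.0, 0.5), (5.0, 5.0), (5.5, 6.0)]
print(find_violations(pedestrians))  # [(0, 1), (2, 3)]
```

A pairwise scan like this is quadratic in the number of pedestrians, which is usually acceptable for the handful of people near a single robot; a spatial index would be the natural next step for larger crowds.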

Modeling 3D Shapes by Reinforcement Learning

Mar 27, 2020

Abstract:We explore how to enable machines to model 3D shapes like human modelers using reinforcement learning (RL). In 3D modeling software like Maya, a modeler usually creates a mesh model in two steps: (1) approximating the shape using a set of primitives; (2) editing the meshes of the primitives to create detailed geometry. Inspired by such artist-based modeling, we propose a two-step neural framework based on RL to learn 3D modeling policies. By taking actions and collecting rewards in an interactive environment, the agents first learn to parse a target shape into primitives and then to edit the geometry. To effectively train the modeling agents, we introduce a novel training algorithm that combines heuristic policy, imitation learning and reinforcement learning. Our experiments show that the agents can learn good policies to produce regular and structure-aware mesh models, which demonstrates the feasibility and effectiveness of the proposed RL framework.

DeepMNavigate: Deep Reinforced Multi-Robot Navigation Unifying Local & Global Collision Avoidance

Oct 22, 2019

Abstract:We present a novel algorithm (DeepMNavigate) for global multi-agent navigation in dense scenarios using deep reinforcement learning. Our approach uses local and global information for each robot based on motion information maps. We use a three-layer CNN that takes these maps as input and generates a suitable action to drive each robot to its goal position. Our approach is general, learns an optimal policy using a multi-scenario, multi-state training algorithm, and can directly handle raw sensor measurements for local observations. We demonstrate the performance on complex, dense benchmarks with narrow passages in environments with tens of agents. We highlight the algorithm's benefits over prior learning methods and geometric decentralized algorithms in complex scenarios.

Learning Resilient Behaviors for Navigation Under Uncertainty Environments

Oct 22, 2019

Abstract:Deep reinforcement learning has great potential to acquire complex, adaptive behaviors for autonomous agents automatically. However, the underlying neural network policies have not been widely deployed in real-world applications, especially in safety-critical tasks (e.g., autonomous driving). One of the reasons is that the learned policy cannot produce behaviors as flexible and resilient as those of traditional methods when adapting to diverse environments. In this paper, we consider the problem of a mobile robot learning adaptive and resilient behaviors for navigating in unseen, uncertain environments while avoiding collisions. We present a novel approach for uncertainty-aware navigation by introducing an uncertainty-aware predictor to model the environmental uncertainty, and we propose a novel uncertainty-aware navigation network to learn resilient behaviors in previously unknown environments. To train the proposed uncertainty-aware network more stably and efficiently, we present the temperature decay training paradigm, which balances exploration and exploitation during the training process. Our experimental evaluation demonstrates that our approach can learn resilient behaviors in diverse environments and generate adaptive trajectories according to environmental uncertainties.
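To make the exploration and exploitation trade-off concrete, here is a generic sketch of temperature-annealed softmax (Boltzmann) action selection. It is not the paper's training paradigm, whose details the abstract does not give; the exponential schedule, the constants, and the names `softmax`, `decayed_temperature`, and `sample_action` are assumptions chosen purely for illustration.

```python
import math
import random

def softmax(values, temperature):
    """Softmax over values; a higher temperature gives a flatter distribution."""
    scaled = [v / temperature for v in values]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def decayed_temperature(step, t_start=5.0, t_end=0.1, decay=0.99):
    """Assumed schedule: exponential decay from t_start, floored at t_end."""
    return max(t_end, t_start * decay ** step)

def sample_action(action_values, step, rng=random):
    """Sample an action index; early steps explore, later steps exploit."""
    probs = softmax(action_values, decayed_temperature(step))
    return rng.choices(range(len(action_values)), weights=probs, k=1)[0]

# Hypothetical action-value estimates for three actions.
values = [1.0, 2.0, 3.0]
for step in (0, 100, 1000):
    print(step, [round(p, 3) for p in softmax(values, decayed_temperature(step))])
```

Early in training the high temperature keeps the action distribution close to uniform, so the agent explores; as the temperature falls, probability mass concentrates on the highest-valued action.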

Intervention Aided Reinforcement Learning for Safe and Practical Policy Optimization in Navigation

Nov 15, 2018

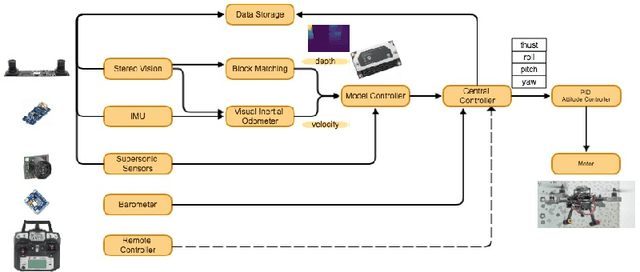

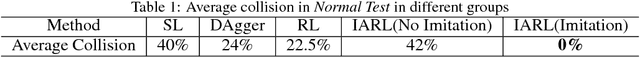

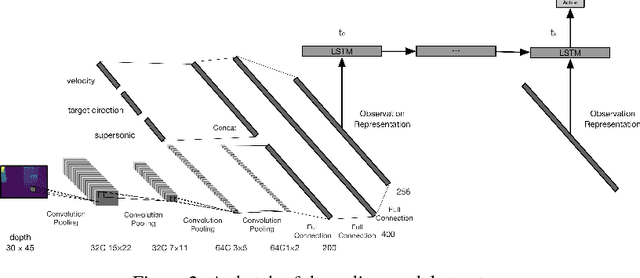

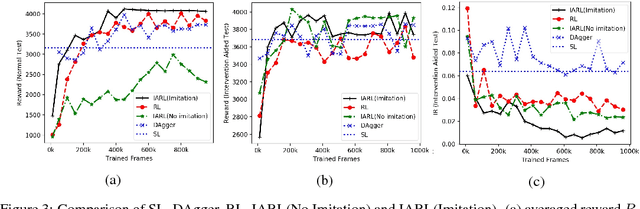

Abstract:Combining deep neural networks with reinforcement learning has shown great potential for next-generation intelligent control. However, there are challenges in terms of safety and cost in practical applications. In this paper, we propose the Intervention Aided Reinforcement Learning (IARL) framework, which utilizes human-intervened robot-environment interaction to improve the policy. We use an Unmanned Aerial Vehicle (UAV) as the test platform and build neural networks as our policy to map sensor readings to control signals on the UAV. Our experiment scenarios cover both simulation and reality. We show that our approach substantially reduces human intervention and improves performance in autonomous navigation, while ensuring safety and keeping the training cost acceptable.

Getting Robots Unfrozen and Unlost in Dense Pedestrian Crowds

Sep 30, 2018

Abstract:We aim to enable a mobile robot to navigate through environments with dense crowds, e.g., shopping malls, canteens, train stations, or airport terminals. In these challenging environments, existing approaches suffer from two common problems: the robot may get frozen and be unable to make any progress toward its goal, or it may get lost due to severe occlusions inside a crowd. Here we propose a navigation framework that handles the robot freezing problem and the navigation lost problem simultaneously. First, we enhance the robot's mobility and unfreeze the robot in the crowd using a reinforcement learning based local navigation policy developed in our previous work [long2017towards], which naturally takes into account the coordination between the robot and the humans. Second, the robot takes advantage of its excellent local mobility to recover from localization failures. In particular, it dynamically chooses to approach a set of recovery positions with rich features. To the best of our knowledge, our method is the first approach that simultaneously solves the freezing problem and the navigation lost problem in dense crowds. We evaluate our method in both simulated and real-world environments and demonstrate that it outperforms the state-of-the-art approaches. Videos are available at https://sites.google.com/view/rlslam.
