Pinxin Long

AES: Autonomous Excavator System for Real-World and Hazardous Environments

Nov 10, 2020

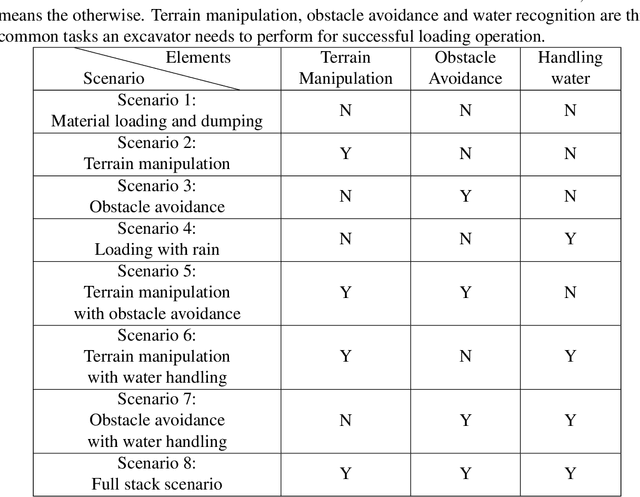

Abstract: Excavators are widely used for material-handling applications in unstructured environments, including mining and construction. The global excavator market was valued at 44.12 billion USD in 2018 and is predicted to grow to 63.14 billion USD by 2026. Operating excavators in a real-world environment can be challenging due to extreme conditions such as rock sliding, ground collapse, or excessive dust. Multiple fatalities and injuries occur each year during excavations. An autonomous excavator that can substitute for human operators in these hazardous environments would substantially lower the number of injuries and could improve overall productivity.

Optimization-Based Framework for Excavation Trajectory Generation

Oct 27, 2020

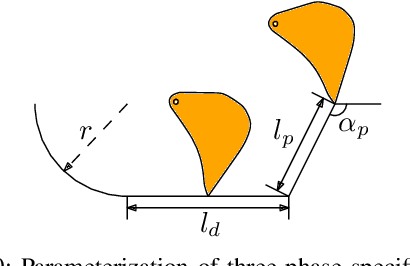

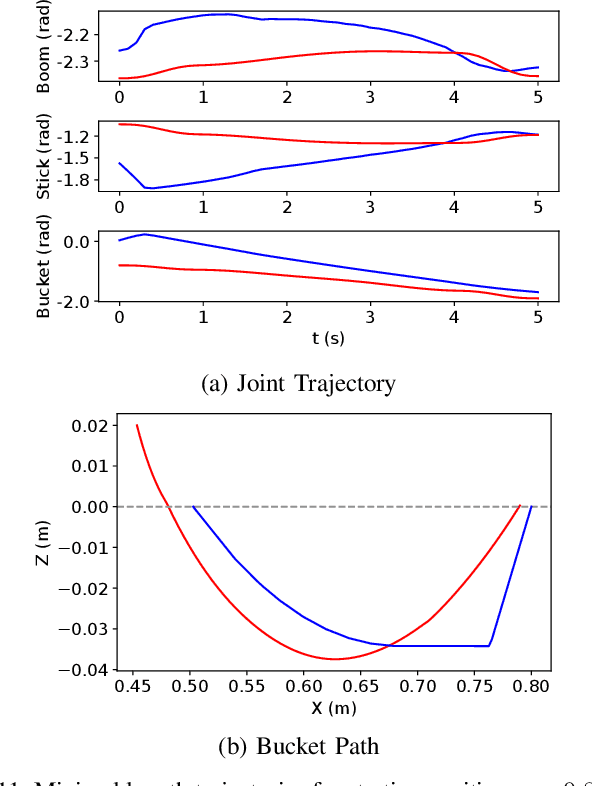

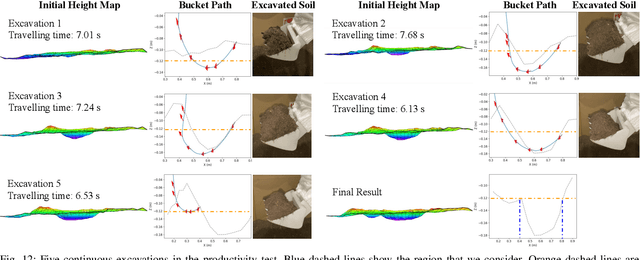

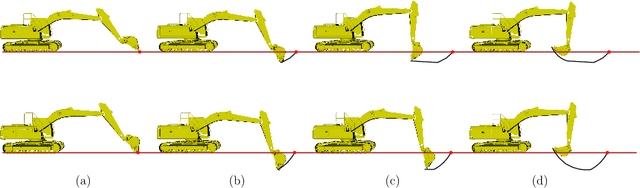

Abstract: In this paper, we present a novel optimization-based framework for autonomous excavator trajectory generation under various objectives, including minimum joint displacement and minimum time. Traditional methods for excavation trajectory generation usually separate the excavation motion into a sequence of fixed phases, resulting in a limited trajectory search space. Our framework explores the space of all possible excavation trajectories represented with waypoints interpolated by a polynomial spline, thereby enabling optimization over a larger search space. We formulate a generic task specification for excavation by constraining the instantaneous motion of the bucket and further add a target-oriented constraint, i.e., swept volume, which indicates the estimated amount of excavated material. To formulate time-related objectives and constraints, we introduce time intervals between waypoints as variables into the optimization framework. We implement the proposed framework and evaluate its performance on a UR5 robotic arm. The experimental results demonstrate that the generated trajectories are able to excavate a sufficient mass of soil for different terrain shapes and have a 60% shorter minimal length than traditional excavation methods. We further compare our one-stage time-optimal trajectory generation with the two-stage method. The result shows that trajectories generated by our one-stage method take 18% less time on average.
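The waypoint-plus-time-interval representation described in this abstract can be illustrated with a small sketch. This is not the paper's implementation: the cubic Hermite segment, the function names (`hermite`, `joint_displacement`, `total_time`), and the use of waypoint-to-waypoint differences as a proxy for joint displacement are all assumptions made for illustration. The point is only that each segment's duration `T` appears as an explicit variable, so a single optimizer could adjust waypoints and timing together.

```python
from typing import Sequence


def hermite(q0: float, q1: float, v0: float, v1: float, T: float, t: float) -> float:
    """Cubic Hermite interpolation of one joint angle on a segment of duration T.

    q0, q1 are the joint positions at the two waypoints; v0, v1 are the joint
    velocities there. T is the (optimizable) time interval of the segment.
    """
    s = t / T  # normalized time in [0, 1]
    h00 = 2 * s**3 - 3 * s**2 + 1
    h10 = s**3 - 2 * s**2 + s
    h01 = -2 * s**3 + 3 * s**2
    h11 = s**3 - s**2
    return h00 * q0 + h10 * T * v0 + h01 * q1 + h11 * T * v1


def joint_displacement(waypoints: Sequence[Sequence[float]]) -> float:
    """Sum of absolute joint changes between consecutive waypoints.

    Assumed proxy for a minimum-joint-displacement objective; it ignores any
    overshoot of the spline between waypoints.
    """
    return sum(
        abs(b - a)
        for p, q in zip(waypoints, waypoints[1:])
        for a, b in zip(p, q)
    )


def total_time(intervals: Sequence[float]) -> float:
    """Minimum-time objective: the sum of the per-segment durations."""
    return sum(intervals)
```

For example, `total_time([0.5, 1.5])` evaluates the time objective for two segments, and `hermite(...)` can be sampled along each segment to check bucket-motion constraints at intermediate points.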

Time Variable Minimum Torque Trajectory Optimization for Autonomous Excavator

Jun 01, 2020

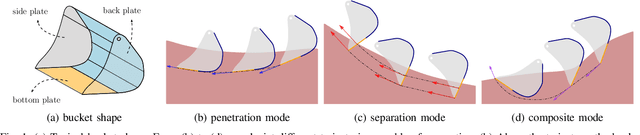

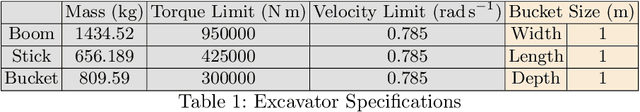

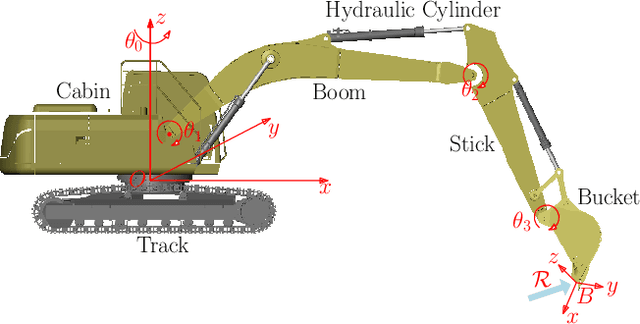

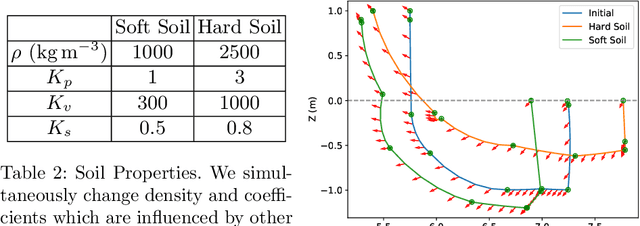

Abstract: In this paper, we present a minimal-torque, time-variable trajectory optimization method for autonomous excavators that considers the soil-tool interaction. The method formulates excavation motion generation as a trajectory optimization problem and takes into account geometric, kinematic, and dynamic constraints. To generate time-efficient trajectories and improve the overall optimization efficiency, we propose a time-variable trajectory optimization mechanism in which the time intervals between the keypoints along the trajectory are themselves subject to optimization. As a result, the method uses few keypoints and reduces the total number of optimization variables. We further introduce a soil-tool interaction force model, which considers the geometric shape of the bucket and the physical properties of the soil. The experimental result on a high-fidelity dynamic simulator shows that our method can generate feasible trajectories, which satisfy excavation task constraints and adapt to different soil conditions.
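One generic way to write down a problem of the kind this abstract describes is sketched below. It is not the paper's formulation: the squared-torque cost, the rigid-body dynamics form, and every symbol ($K$ keypoints $q_i$, intervals $\Delta t_i$, inertia matrix $M$, Coriolis term $C$, gravity term $g$, bucket Jacobian $J$, and $F_{\text{soil}}$ as the force the soil exerts on the bucket) are assumptions chosen for illustration.

$$
\begin{aligned}
\min_{q_1,\dots,q_K,\;\Delta t_1,\dots,\Delta t_{K-1}} \quad & \int_0^{T} \lVert \tau(t) \rVert^2 \, dt, \qquad T = \sum_{i=1}^{K-1} \Delta t_i \\
\text{s.t.} \quad & \tau = M(q)\,\ddot q + C(q,\dot q)\,\dot q + g(q) - J(q)^{\top} F_{\text{soil}}, \\
& q_{\min} \le q \le q_{\max}, \quad |\dot q| \le \dot q_{\max}, \quad |\tau| \le \tau_{\max}, \quad \Delta t_i > 0 .
\end{aligned}
$$

Under these assumptions, an arm with $n$ joints has $Kn + (K-1)$ decision variables, which stays small when $K$ is small. A fixed-time-step discretization would instead need one set of joint values per time step.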

Learning Resilient Behaviors for Navigation Under Uncertainty Environments

Oct 22, 2019

Abstract: Deep reinforcement learning has great potential to acquire complex, adaptive behaviors for autonomous agents automatically. However, the underlying neural network policies have not been widely deployed in real-world applications, especially in safety-critical tasks (e.g., autonomous driving). One of the reasons is that the learned policy cannot produce behaviors as flexible and resilient as those of traditional methods when adapting to diverse environments. In this paper, we consider the problem of a mobile robot learning adaptive and resilient behaviors for navigating in unseen uncertain environments while avoiding collisions. We present a novel approach for uncertainty-aware navigation by introducing an uncertainty-aware predictor to model the environmental uncertainty, and we propose a novel uncertainty-aware navigation network to learn resilient behaviors in previously unknown environments. To train the proposed uncertainty-aware network more stably and efficiently, we present the temperature decay training paradigm, which balances exploration and exploitation during the training process. Our experimental evaluation demonstrates that our approach can learn resilient behaviors in diverse environments and generate adaptive trajectories according to environmental uncertainties.
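The abstract does not say how the temperature is scheduled or where it enters the network, so the following is only a generic illustration of how a decaying temperature can trade exploration for exploitation. The exponential schedule, the softmax over action scores, and all names and default values (`temperature`, `softmax_with_temperature`, `t_start`, `t_end`, `decay`) are assumptions, not the paper's method.

```python
import math
from typing import List, Sequence


def temperature(step: int, t_start: float = 1.0, t_end: float = 0.1,
                decay: float = 0.99) -> float:
    """Assumed exponential decay schedule, floored at t_end."""
    return max(t_end, t_start * decay**step)


def softmax_with_temperature(scores: Sequence[float], temp: float) -> List[float]:
    """Softmax over scores; a high temp flattens the distribution (more
    exploration), a low temp sharpens it (more exploitation)."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - m) / temp) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]
```

Early in training (`step` small) the sampled actions are close to uniform; as `step` grows the distribution concentrates on the highest-scoring action.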

Getting Robots Unfrozen and Unlost in Dense Pedestrian Crowds

Sep 30, 2018

Abstract: We aim to enable a mobile robot to navigate through environments with dense crowds, e.g., shopping malls, canteens, train stations, or airport terminals. In these challenging environments, existing approaches suffer from two common problems: the robot may get frozen and cannot make any progress toward its goal, or it may get lost due to severe occlusions inside a crowd. Here we propose a navigation framework that handles the robot freezing and the navigation lost problems simultaneously. First, we enhance the robot's mobility and unfreeze the robot in the crowd using a reinforcement learning based local navigation policy developed in our previous work \cite{long2017towards}, which naturally takes into account the coordination between the robot and the human. Second, the robot takes advantage of its excellent local mobility to recover from its localization failure. In particular, it dynamically chooses to approach a set of recovery positions with rich features. To the best of our knowledge, our method is the first approach that simultaneously solves the freezing problem and the navigation lost problem in dense crowds. We evaluate our method in both simulated and real-world environments and demonstrate that it outperforms the state-of-the-art approaches. Videos are available at https://sites.google.com/view/rlslam.

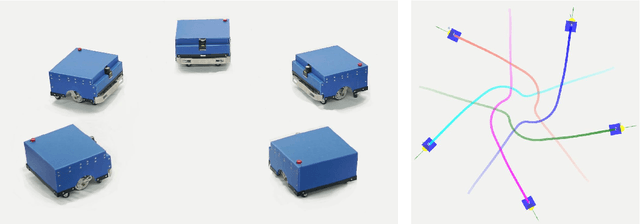

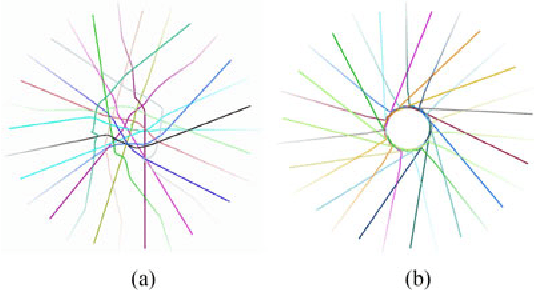

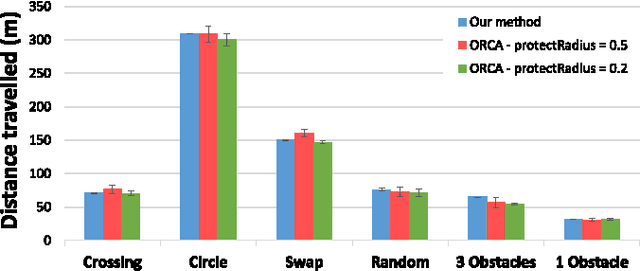

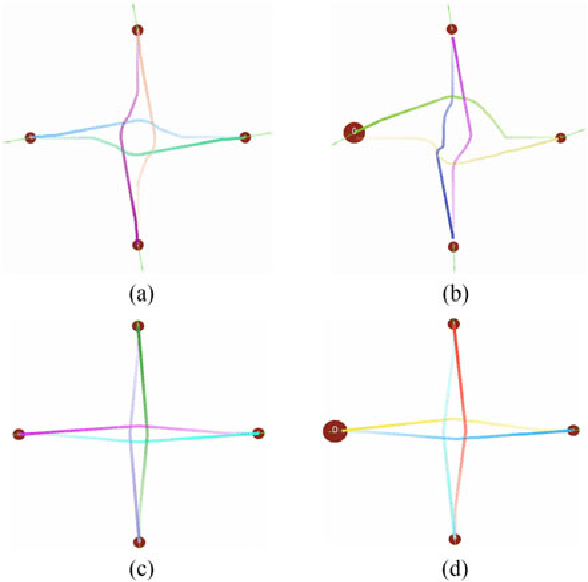

Fully Distributed Multi-Robot Collision Avoidance via Deep Reinforcement Learning for Safe and Efficient Navigation in Complex Scenarios

Aug 11, 2018

Abstract: In this paper, we present a decentralized sensor-level collision avoidance policy for multi-robot systems, which shows promising results in practical applications. In particular, our policy directly maps raw sensor measurements to an agent's steering commands in terms of the movement velocity. As a first step toward reducing the performance gap between decentralized and centralized methods, we present a multi-scenario multi-stage training framework to learn an optimal policy. The policy is trained over a large number of robots in rich, complex environments simultaneously using a policy gradient based reinforcement learning algorithm. The learning algorithm is also integrated into a hybrid control framework to further improve the policy's robustness and effectiveness. We validate the learned sensor-level collision avoidance policy in a variety of simulated and real-world scenarios with thorough performance evaluations for large-scale multi-robot systems. The generalization of the learned policy is verified in a set of unseen scenarios including the navigation of a group of heterogeneous robots and a large-scale scenario with 100 robots. Although the policy is trained using simulation data only, we have successfully deployed it on physical robots with shapes and dynamics characteristics that are different from the simulated agents, in order to demonstrate the controller's robustness against the sim-to-real modeling error. Finally, we show that the collision-avoidance policy learned from multi-robot navigation tasks provides an excellent solution to the safe and effective autonomous navigation for a single robot working in a dense real human crowd. Our learned policy enables a robot to make effective progress in a crowd without getting stuck. Videos are available at https://sites.google.com/view/hybridmrca

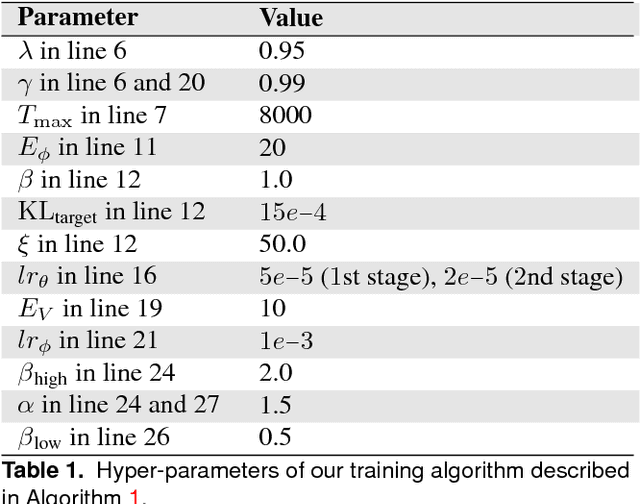

Towards Optimally Decentralized Multi-Robot Collision Avoidance via Deep Reinforcement Learning

May 20, 2018

Abstract: Developing a safe and efficient collision avoidance policy for multiple robots is challenging in decentralized scenarios where each robot generates its paths without observing other robots' states and intents. While other distributed multi-robot collision avoidance systems exist, they often require extracting agent-level features to plan a local collision-free action, which can be computationally prohibitive and not robust. More importantly, in practice the performance of these methods is much lower than that of their centralized counterparts. We present a decentralized sensor-level collision avoidance policy for multi-robot systems, which directly maps raw sensor measurements to an agent's steering commands in terms of movement velocity. As a first step toward reducing the performance gap between decentralized and centralized methods, we present a multi-scenario multi-stage training framework to find an optimal policy, which is trained over a large number of robots in rich, complex environments simultaneously using a policy gradient based reinforcement learning algorithm. We validate the learned sensor-level collision avoidance policy in a variety of simulated scenarios with thorough performance evaluations and show that the final learned policy is able to find time-efficient, collision-free paths for a large-scale robot system. We also demonstrate that the learned policy generalizes well to new scenarios that do not appear in the entire training period, including navigating a heterogeneous group of robots and a large-scale scenario with 100 robots. Videos are available at https://sites.google.com/view/drlmaca
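The idea of a sensor-level policy, one function from raw measurements to a velocity command with no agent-level feature extraction in between, can be shown with a toy interface. This is a hypothetical stand-in, not the network from the paper: the observation layout (range scan, relative goal, current velocity), the single linear layer, the output squashing, and the names `policy`, `weights`, `v_max`, and `w_max` are all assumptions.

```python
import math
from typing import Sequence, Tuple


def policy(scan: Sequence[float], goal: Sequence[float], velocity: Sequence[float],
           weights: Sequence[Sequence[float]],
           v_max: float = 1.0, w_max: float = 1.0) -> Tuple[float, float]:
    """Map raw observations directly to a (linear, angular) velocity command.

    weights holds two rows, one per output, each as long as the flattened
    observation. A trained deep network would replace this linear map.
    """
    obs = list(scan) + list(goal) + list(velocity)
    lin = sum(w * o for w, o in zip(weights[0], obs))
    ang = sum(w * o for w, o in zip(weights[1], obs))
    v = v_max / (1.0 + math.exp(-lin))  # sigmoid keeps v in (0, v_max)
    w = w_max * math.tanh(ang)          # tanh keeps w in (-w_max, w_max)
    return v, w
```

In a decentralized setting each robot would evaluate the same function on its own observations, which is what lets the approach scale to many robots without communication.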

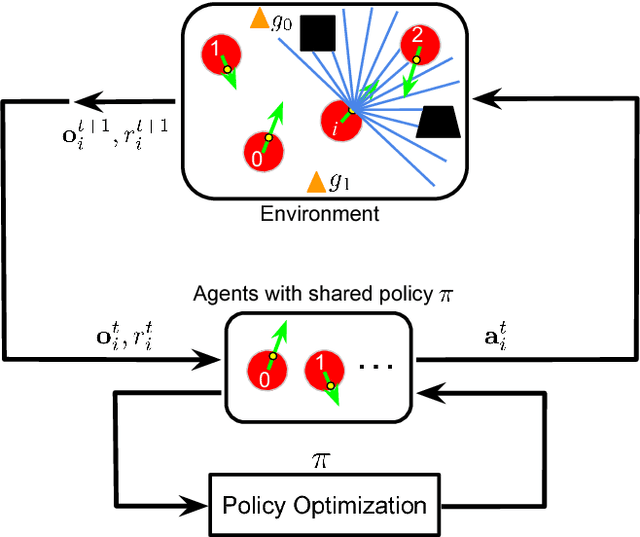

Deep-Learned Collision Avoidance Policy for Distributed Multi-Agent Navigation

Jul 06, 2017

Abstract: High-speed, low-latency obstacle avoidance that is insensitive to sensor noise is essential for enabling multiple decentralized robots to function reliably in cluttered and dynamic environments. While other distributed multi-agent collision avoidance systems exist, these systems require online geometric optimization where tedious parameter tuning and perfect sensing are necessary. We present a novel end-to-end framework to generate a reactive collision avoidance policy for efficient distributed multi-agent navigation. Our method formulates an agent's navigation strategy as a deep neural network mapping from the observed noisy sensor measurements to the agent's steering commands in terms of movement velocity. We train the network on a large number of frames of collision avoidance data collected by repeatedly running a multi-agent simulator with different parameter settings. We validate the learned deep neural network policy in a set of simulated and real scenarios with noisy measurements and demonstrate that our method is able to generate a robust navigation strategy that is insensitive to imperfect sensing and works reliably in all situations. We also show that our method generalizes well to scenarios that do not appear in our training data, including scenes with static obstacles and agents with different sizes. Videos are available at https://sites.google.com/view/deepmaca.

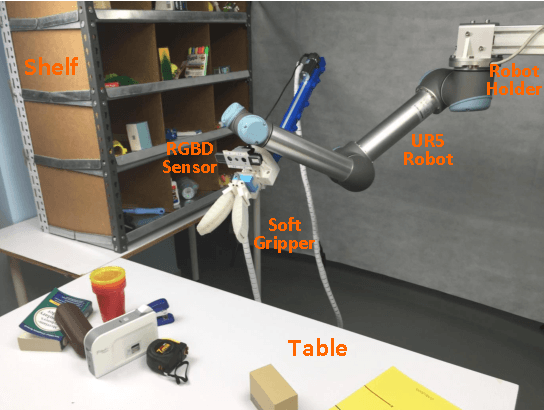

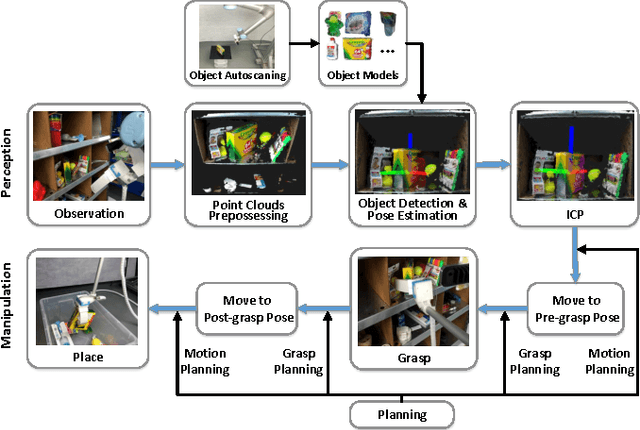

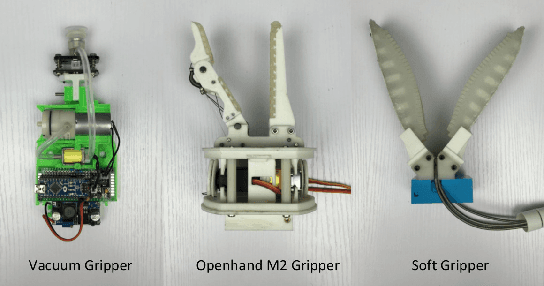

DoraPicker: An Autonomous Picking System for General Objects

Mar 21, 2016

Abstract: Robots that autonomously manipulate objects within warehouses have the potential to shorten the package delivery time and improve the efficiency of the e-commerce industry. In this paper, we present a robotic system that is capable of both picking and placing general objects in warehouse scenarios. Given a target object, the robot autonomously detects it from a shelf or a table and estimates its full 6D pose. With this pose information, the robot picks the object using its gripper, and then places it into a container or at a specified location. We describe our pick-and-place system in detail while highlighting our design principles for the warehouse settings, including the perception method that leverages knowledge about its workspace, three grippers designed to handle a large variety of different objects in terms of shape, weight and material, and grasp planning in cluttered scenarios. We also present extensive experiments to evaluate the performance of our picking system and demonstrate that the robot is competent to accomplish various tasks in warehouse settings, such as picking a target item from a tight space, grasping different objects from the shelf, and performing pick-and-place tasks on the table.
