Intervention Aided Reinforcement Learning for Safe and Practical Policy Optimization in Navigation

Nov 15, 2018

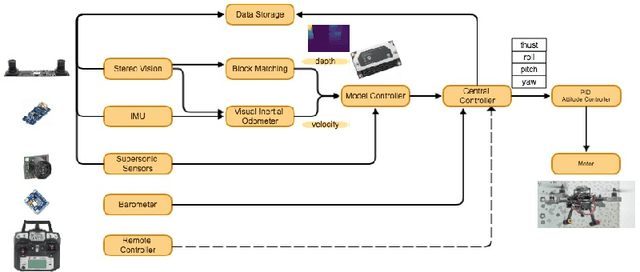

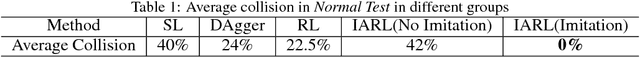

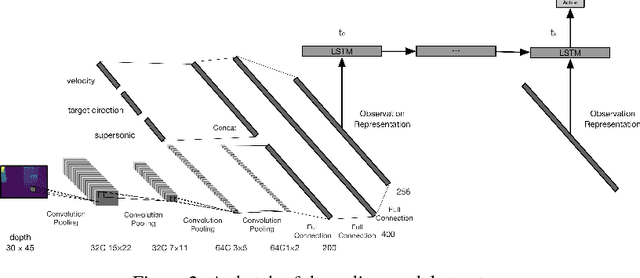

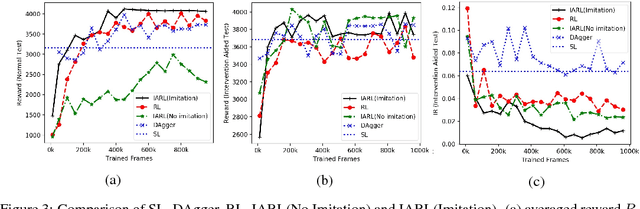

Combining deep neural networks with reinforcement learning has shown great potential for next-generation intelligent control. However, practical applications face challenges in terms of safety and cost. In this paper, we propose the Intervention Aided Reinforcement Learning (IARL) framework, which utilizes human-intervened robot-environment interaction to improve the policy. We use an Unmanned Aerial Vehicle (UAV) as the test platform and build neural networks as the policy that maps sensor readings to control signals on the UAV. Our experiment scenarios cover both simulation and reality. We show that our approach substantially reduces human intervention and improves performance in autonomous navigation, while ensuring safety and keeping the training cost acceptable.
