Xuan Zhao

Pardon? Evaluating Conversational Repair in Large Audio-Language Models

Jan 19, 2026

Abstract:Large Audio-Language Models (LALMs) have demonstrated strong performance in spoken question answering (QA), with existing evaluations primarily focusing on answer accuracy and robustness to acoustic perturbations. However, such evaluations implicitly assume that spoken inputs remain semantically answerable, an assumption that often fails in real-world interaction when essential information is missing. In this work, we introduce a repair-aware evaluation setting that explicitly distinguishes between answerable and unanswerable audio inputs. We define answerability as a property of the input itself and construct paired evaluation conditions using a semantic-acoustic masking protocol. Based on this setting, we propose the Evaluability Awareness and Repair (EAR) score, a non-compensatory metric that jointly evaluates task competence under answerable conditions and repair behavior under unanswerable conditions. Experiments on two spoken QA benchmarks across diverse LALMs reveal a consistent gap between answer accuracy and conversational reliability: while many models perform well when inputs are answerable, most fail to recognize semantic unanswerability and initiate appropriate conversational repair. These findings expose a limitation of prevailing accuracy-centric evaluation practices and motivate reliability assessments that treat unanswerable inputs as cues for repair and continued interaction.

Classifier Reconstruction Through Counterfactual-Aware Wasserstein Prototypes

Dec 11, 2025

Abstract:Counterfactual explanations provide actionable insights by identifying minimal input changes required to achieve a desired model prediction. Beyond their interpretability benefits, counterfactuals can also be leveraged for model reconstruction, where a surrogate model is trained to replicate the behavior of a target model. In this work, we demonstrate that model reconstruction can be significantly improved by recognizing that counterfactuals, which typically lie close to the decision boundary, can serve as informative though less representative samples for both classes. This is particularly beneficial in settings with limited access to labeled data. We propose a method that integrates original data samples with counterfactuals to approximate class prototypes using the Wasserstein barycenter, thereby preserving the underlying distributional structure of each class. This approach enhances the quality of the surrogate model and mitigates the issue of decision boundary shift, which commonly arises when counterfactuals are naively treated as ordinary training instances. Empirical results across multiple datasets show that our method improves fidelity between the surrogate and target models, validating its effectiveness.

GloTok: Global Perspective Tokenizer for Image Reconstruction and Generation

Nov 19, 2025

Abstract:Existing state-of-the-art image tokenization methods leverage diverse semantic features from pre-trained vision models for additional supervision, to expand the distribution of latent representations and thereby improve the quality of image reconstruction and generation. These methods employ a locally supervised approach for semantic supervision, which limits the uniformity of semantic distribution. However, VA-VAE proves that a more uniform feature distribution yields better generation performance. In this work, we introduce a Global Perspective Tokenizer (GloTok), which utilizes global relational information to model a more uniform semantic distribution of tokenized features. Specifically, a codebook-wise histogram relation learning method is proposed to transfer the semantics, which are modeled by pre-trained models on the entire dataset, to the semantic codebook. Then, we design a residual learning module that recovers the fine-grained details to minimize the reconstruction error caused by quantization. Through the above design, GloTok delivers more uniformly distributed semantic latent representations, which facilitates the training of autoregressive (AR) models for generating high-quality images without requiring direct access to pre-trained models during the training process. Experiments on the standard ImageNet-1k benchmark clearly show that our proposed method achieves state-of-the-art reconstruction performance and generation quality.

FlowTIE: Flow-based Transport of Intensity Equation for Phase Gradient Estimation from 4D-STEM Data

Nov 10, 2025

Abstract:We introduce FlowTIE, a neural-network-based framework for phase reconstruction from 4D Scanning Transmission Electron Microscopy (4D-STEM) data, which integrates the Transport of Intensity Equation (TIE) with a flow-based representation of the phase gradient. This formulation allows the model to bridge data-driven learning with physics-based priors, improving robustness under dynamical scattering conditions for thick specimens. We validate the framework on simulated datasets of crystalline materials and benchmark it against classical TIE and gradient-based optimization methods. The results demonstrate that FlowTIE improves phase reconstruction accuracy, is fast, and can be integrated with a thick-specimen model, namely the multislice method.

Data Synthesis with Diverse Styles for Face Recognition via 3DMM-Guided Diffusion

Apr 01, 2025

Abstract:Identity-preserving face synthesis aims to generate synthetic face images of virtual subjects that can substitute for real-world data when training face recognition models. While prior methods strive to create images with consistent identities and diverse styles, they face a trade-off between the two. We attribute this limitation to treating style variation as subject-agnostic, whereas real-world persons have distinct, subject-specific styles. To address it, this paper introduces MorphFace, a diffusion-based face generator. The generator learns fine-grained facial styles, e.g., shape, pose and expression, from the renderings of a 3D morphable model (3DMM). It also learns identities from an off-the-shelf recognition model. To create virtual faces, the generator is conditioned on novel identities of unlabeled synthetic faces, and novel styles that are statistically sampled from a real-world prior distribution. The sampling accounts for both intra-subject variation and subject distinctiveness. A context blending strategy is employed to enhance the generator's responsiveness to identity and style conditions. Extensive experiments show that MorphFace outperforms the best prior methods in face recognition efficacy.

CHOrD: Generation of Collision-Free, House-Scale, and Organized Digital Twins for 3D Indoor Scenes with Controllable Floor Plans and Optimal Layouts

Mar 15, 2025

Abstract:We introduce CHOrD, a novel framework for scalable synthesis of 3D indoor scenes, designed to create house-scale, collision-free, and hierarchically structured indoor digital twins. In contrast to existing methods that directly synthesize the scene layout as a scene graph or object list, CHOrD incorporates a 2D image-based intermediate layout representation, enabling effective prevention of collision artifacts by successfully capturing them as out-of-distribution (OOD) scenarios during generation. Furthermore, unlike existing methods, CHOrD is capable of generating scene layouts that adhere to complex floor plans with multi-modal controls, enabling the creation of coherent, house-wide layouts robust to both geometric and semantic variations in room structures. Additionally, we propose a novel dataset with expanded coverage of household items and room configurations, as well as significantly improved data quality. CHOrD demonstrates state-of-the-art performance on both the 3D-FRONT and our proposed datasets, delivering photorealistic, spatially coherent indoor scene synthesis adaptable to arbitrary floor plan variations.

X-IL: Exploring the Design Space of Imitation Learning Policies

Feb 19, 2025

Abstract:Designing modern imitation learning (IL) policies requires making numerous decisions, including the selection of feature encoding, architecture, policy representation, and more. As the field rapidly advances, the range of available options continues to grow, creating a vast and largely unexplored design space for IL policies. In this work, we present X-IL, an accessible open-source framework designed to systematically explore this design space. The framework's modular design enables seamless swapping of policy components, such as backbones (e.g., Transformer, Mamba, xLSTM) and policy optimization techniques (e.g., Score-matching, Flow-matching). This flexibility facilitates comprehensive experimentation and has led to the discovery of novel policy configurations that outperform existing methods on recent robot learning benchmarks. Our experiments demonstrate not only significant performance gains but also provide valuable insights into the strengths and weaknesses of various design choices. This study serves as both a practical reference for practitioners and a foundation for guiding future research in imitation learning.

Enhancing Fairness through Reweighting: A Path to Attain the Sufficiency Rule

Aug 26, 2024

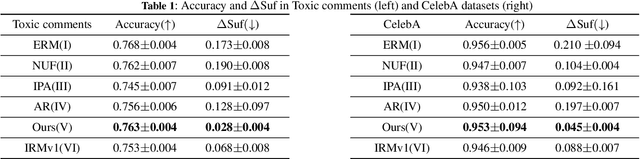

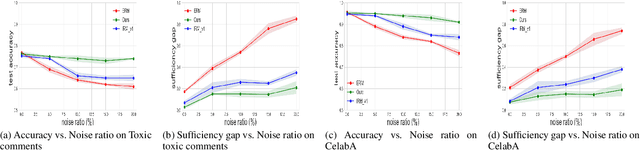

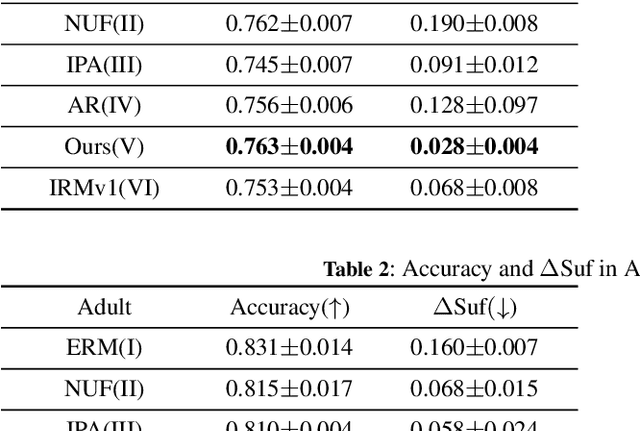

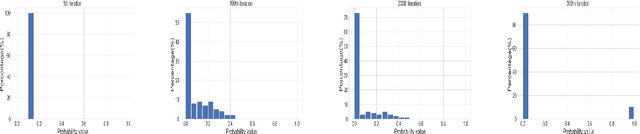

Abstract:We introduce an approach that improves fairness in empirical risk minimization (ERM) during model training through a refined reweighting scheme of the training data. This scheme aims to uphold the sufficiency rule in fairness by ensuring that optimal predictors maintain consistency across diverse sub-groups. We employ a bilevel formulation to address this challenge, wherein we explore sample reweighting strategies. Unlike conventional methods that hinge on model size, our formulation bases generalization complexity on the space of sample weights. We discretize the weights to improve training speed. Empirical validation of our method showcases its effectiveness and robustness, revealing a consistent improvement in the balance between prediction performance and fairness metrics across various experiments.

Sunnie: An Anthropomorphic LLM-Based Conversational Agent for Mental Well-Being Activity Recommendation

May 22, 2024

Abstract:A longstanding challenge in mental well-being support is the reluctance of people to adopt psychologically beneficial activities, often due to a lack of motivation, low perceived trustworthiness, and limited personalization of recommendations. Chatbots have shown promise in promoting positive mental health practices, yet their rigid interaction flows and less human-like conversational experiences present significant limitations. In this work, we explore whether the anthropomorphic design (both LLM's persona design and conversational experience design) can enhance users' perception of the system and their willingness to adopt mental well-being activity recommendations. To this end, we introduce Sunnie, an anthropomorphic LLM-based conversational agent designed to offer personalized guidance for mental well-being support through multi-turn conversation and activity recommendations based on positive psychological theory. An empirical user study comparing the user experience with Sunnie and with a traditional survey-based activity recommendation system suggests that the anthropomorphic characteristics of Sunnie significantly enhance users' perception of the system and the overall usability; nevertheless, users' willingness to adopt activity recommendations did not change significantly.

Discovering Galaxy Features via Dataset Distillation

Nov 29, 2023

Abstract:In many applications, Neural Nets (NNs) have classification performance on par with or even exceeding human capacity. Moreover, it is likely that NNs leverage underlying features that might differ from those humans perceive to classify. Can we "reverse-engineer" pertinent features to enhance our scientific understanding? Here, we apply this idea to the notoriously difficult task of galaxy classification: NNs have reached high performance for this task, but what does a neural net (NN) "see" when it classifies galaxies? Are there morphological features that the human eye might overlook that could help with the task and provide new insights? Can we visualize tracers of early evolution, or additionally incorporated spectral data? We present a novel way to summarize and visualize galaxy morphology through the lens of neural networks, leveraging Dataset Distillation, a recent deep-learning methodology with the primary objective to distill knowledge from a large dataset and condense it into a compact synthetic dataset, such that a model trained on this synthetic dataset achieves performance comparable to a model trained on the full dataset. We curate a class-balanced, medium-size high-confidence version of the Galaxy Zoo 2 dataset, and proceed with dataset distillation from our accurate NN-classifier to create synthesized prototypical images of galaxy morphological features, demonstrating its effectiveness. Of independent interest, we introduce a self-adaptive version of the state-of-the-art Matching Trajectory algorithm to automate the distillation process, and show enhanced performance on computer vision benchmarks.
